
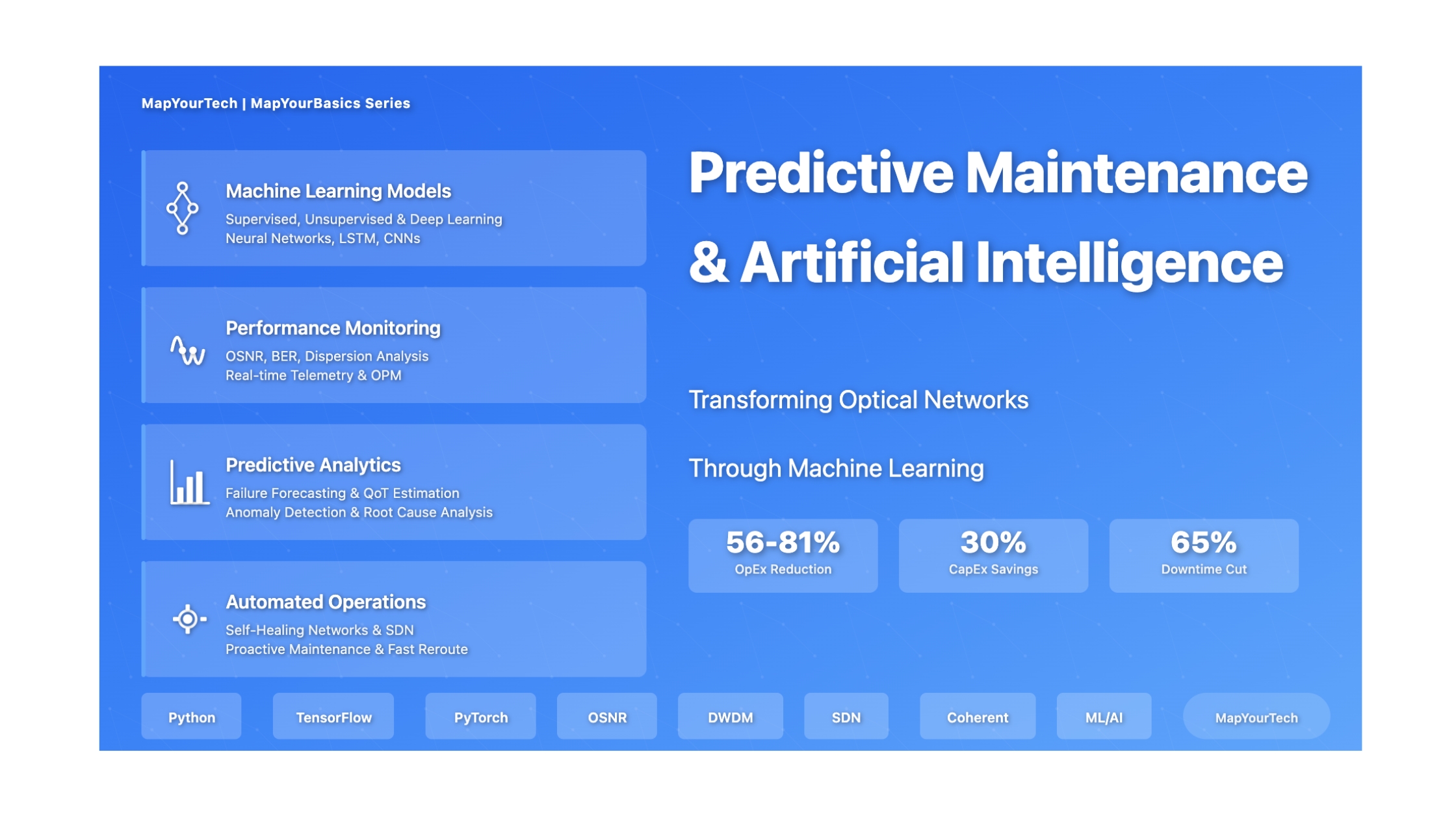

Predictive Maintenance & Failure Prevention with AI

Transforming Optical Network Operations Through Machine Learning Intelligence

Fundamentals & Core Concepts

The Evolution of Network Maintenance

Optical networks form the backbone of modern telecommunications infrastructure, carrying vast amounts of data across metro, long-haul, and submarine cable systems. Traditional maintenance approaches in these networks have relied heavily on reactive strategies, where issues are addressed only after failures occur, or time-based preventive maintenance schedules that may be inefficient. The increasing complexity of optical networks, combined with escalating bandwidth demands and the need for higher reliability, has exposed the limitations of conventional maintenance paradigms.

Predictive maintenance represents a fundamental shift from reactive repair to proactive intervention. By leveraging artificial intelligence and machine learning algorithms, network operators can analyze historical performance data, real-time monitoring telemetry, and environmental conditions to forecast when network components are likely to fail. This capability enables technicians to replace or repair equipment before failures impact service delivery, fundamentally transforming operational efficiency and network reliability.

Key Distinction: Traditional automation relies on predefined scripts and fixed rules, while AI-driven predictive maintenance leverages data patterns and learned models to make decisions. Machine learning models can adapt to changing network conditions, traffic patterns, and evolving network states by being retrained on new data, rather than requiring engineers to rewrite rules by hand for each new scenario.

The Autonomous Network Vision

The ultimate goal of AI-powered predictive maintenance is progression toward increasingly autonomous optical networks. An autonomous network can sense its environment through comprehensive telemetry and monitoring systems, reason about its current state and future trajectory, decide on optimal actions such as rerouting traffic or adjusting power levels, and act upon those decisions with minimal human intervention. This represents a transformative departure from reactive network management toward proactive, self-healing, and self-optimizing systems.

Modern optical networks generate vast amounts of high-dimensional data from diverse sources including performance counters, optical performance monitors, device logs, and configuration states. AI and machine learning algorithms excel at analyzing this complex data to identify subtle patterns, correlations, and anomalies that would be impractical for human operators or simple rule-based systems to detect reliably.

Critical Performance Indicators in Optical Networks

Optical Signal Quality Metrics

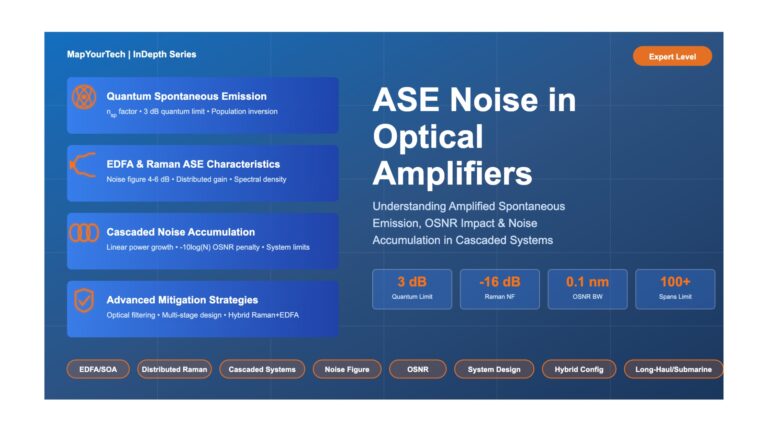

Optical Signal-to-Noise Ratio (OSNR) is the ratio of signal power to noise power measured within a reference optical bandwidth, conventionally 0.1 nm (about 12.5 GHz near 1550 nm). The OSNR a link requires depends on modulation format, symbol rate, and forward error correction, but values above roughly 20 dB are typically treated as acceptable for reliable communication. As optical signals traverse amplifier chains in long-haul networks, accumulated amplified spontaneous emission (ASE) noise degrades OSNR, making continuous monitoring essential for maintaining transmission quality.
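A widely used back-of-the-envelope estimate shows how ASE accumulates along an amplifier chain. Assume N identical spans, each amplifier exactly compensating its span loss, ASE-limited noise, no nonlinear effects, and a 0.1 nm reference bandwidth. Under those assumptions, OSNR ≈ 58 dB + P_ch − L_span − NF − 10·log10(N). The 58 dB term is just −10·log10(h·ν·B_ref) in dBm, and the sketch below computes it explicitly instead of hard-coding it. The function names are illustrative.

```python
import math

PLANCK_J_S = 6.62607015e-34       # Planck constant (J*s)
SPEED_OF_LIGHT_M_S = 299_792_458.0

def reference_noise_dbm(wavelength_nm: float = 1550.0, ref_bw_ghz: float = 12.5) -> float:
    """Power of h*nu*B_ref in dBm (roughly -58 dBm for 0.1 nm at 1550 nm)."""
    frequency_hz = SPEED_OF_LIGHT_M_S / (wavelength_nm * 1e-9)
    power_w = PLANCK_J_S * frequency_hz * ref_bw_ghz * 1e9
    return 10 * math.log10(power_w / 1e-3)

def chain_osnr_db(launch_dbm_per_ch: float, span_loss_db: float,
                  noise_figure_db: float, n_spans: int,
                  wavelength_nm: float = 1550.0) -> float:
    """ASE-limited OSNR after n identical, fully compensated spans.

    Nonlinear impairments are ignored, so this is an upper-bound style estimate.
    """
    return (launch_dbm_per_ch - reference_noise_dbm(wavelength_nm)
            - span_loss_db - noise_figure_db - 10 * math.log10(n_spans))

if __name__ == "__main__":
    for spans in (1, 10, 20, 40):
        print(spans, round(chain_osnr_db(0.0, 22.0, 5.0, spans), 1))
```

Each doubling of the span count costs about 3 dB of OSNR in this model. This is why long chains leave less margin and why trend monitoring matters there.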

Error Rate Assessment

Bit Error Rate (BER) is the most direct indicator of transmission quality. Operational networks typically target post-FEC BER values below 10⁻¹², while forward error correction allows reliable communication even when the pre-FEC BER is several orders of magnitude higher. Monitoring pre-FEC BER trends enables early detection of degradation patterns before service-affecting failures occur.

Dispersion Effects

Chromatic Dispersion (CD) and Polarization Mode Dispersion (PMD) cause pulse spreading that grows with transmission distance. Accumulated CD increases linearly with fiber length, while the mean PMD-induced differential group delay grows with the square root of length. These impairments become increasingly critical at higher data rates, with 400G and 800G systems exhibiting heightened sensitivity to dispersion-induced penalties. AI models can predict optimal compensation strategies based on real-time channel conditions.
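The different scaling of the two impairments can be sketched in a few lines. The coefficients are assumptions: 17 ps/(nm·km) is a typical CD value for standard single-mode fiber at 1550 nm, and 0.1 ps/√km is an illustrative PMD coefficient. Real values should come from fiber records or measurements.

```python
import math

def accumulated_cd_ps_per_nm(length_km: float, d_ps_nm_km: float = 17.0) -> float:
    """Chromatic dispersion accumulates linearly with length (assumed SSMF value)."""
    return d_ps_nm_km * length_km

def mean_dgd_ps(length_km: float, pmd_coeff_ps_sqrt_km: float = 0.1) -> float:
    """Mean differential group delay grows with the square root of length."""
    return pmd_coeff_ps_sqrt_km * math.sqrt(length_km)

def dgd_fraction_of_symbol(dgd_ps: float, symbol_rate_gbaud: float) -> float:
    """DGD expressed as a fraction of the symbol period (1000 / GBd gives ps)."""
    symbol_period_ps = 1000.0 / symbol_rate_gbaud
    return dgd_ps / symbol_period_ps

if __name__ == "__main__":
    for km in (100, 1000, 4000):
        dgd = mean_dgd_ps(km)
        print(km, accumulated_cd_ps_per_nm(km), round(dgd, 2),
              round(dgd_fraction_of_symbol(dgd, 64.0), 3))
```

Quadrupling the distance multiplies accumulated CD by four but mean DGD only by two. Raising the symbol rate shrinks the symbol period, so the same DGD becomes a larger fraction of it.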

Power Management

Optical power levels must be carefully controlled throughout the network. Launch power that is too high triggers nonlinear impairments, while insufficient power leads to inadequate OSNR at the receiver. Predictive maintenance systems continuously optimize power settings across amplifier chains to maintain optimal performance margins while avoiding equipment stress.

Industry Implementation Example

A Tier-1 telecommunications operator deployed AI-powered predictive maintenance across its long-haul optical network infrastructure. The system continuously monitors OSNR trends across all wavelengths and applies machine learning models to predict amplifier degradation patterns. By analyzing historical performance data and identifying subtle degradation signatures, the system forecasts amplifier failures with sufficient lead time to enable proactive component replacement. This approach reduced unplanned downtime by 65% while enabling more efficient maintenance scheduling and resource allocation.

AI-Driven Predictive Maintenance Architecture

Figure 1: End-to-end AI-driven predictive maintenance architecture showing data flow from collection through ML processing to automated actions with continuous feedback.

Mathematical Framework

OSNR-Based Quality Assessment

The relationship between OSNR and system performance forms the foundation for predictive quality assessment in optical networks. The Q-factor, which maps directly to BER, can be derived from OSNR measurements using established relationships. For a given receiver, the Q-factor in decibels depends on OSNR and on the ratio of the optical reference bandwidth to the receiver's electrical bandwidth.
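One commonly quoted simplification applies to ASE-limited intensity-modulated (on-off keyed) signals at high OSNR: Q_dB ≈ OSNR_dB + 10·log10(B_o/B_e), with Q_dB = 20·log10(Q). For Gaussian noise, BER = ½·erfc(Q/√2). The sketch below implements both under those assumptions. The default bandwidths (12.5 GHz optical reference, 7 GHz electrical) are illustrative, and coherent formats need different mappings.

```python
import math
from statistics import NormalDist

def q_linear_from_osnr(osnr_db: float, optical_bw_ghz: float = 12.5,
                       electrical_bw_ghz: float = 7.0) -> float:
    """High-OSNR approximation: Q_dB = OSNR_dB + 10*log10(Bo/Be)."""
    q_db = osnr_db + 10 * math.log10(optical_bw_ghz / electrical_bw_ghz)
    return 10 ** (q_db / 20)          # Q_dB is defined as 20*log10(Q)

def ber_from_q(q: float) -> float:
    """Gaussian-noise BER for a given linear Q-factor."""
    return 0.5 * math.erfc(q / math.sqrt(2))

def q_from_ber(ber: float) -> float:
    """Inverse mapping: since BER = 1 - Phi(Q), Q = -Phi^-1(BER)."""
    return -NormalDist().inv_cdf(ber)

if __name__ == "__main__":
    q = q_linear_from_osnr(15.0)
    print(round(q, 2), ber_from_q(q))
```

The inverse mapping is useful in monitoring. A pre-FEC BER reported by a transceiver can be converted back to a Q margin and trended in dB, which is often easier to read than raw error rates.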

Machine learning models leverage these fundamental relationships while learning additional complex patterns from operational data. The mathematical framework enables AI systems to predict Quality of Transmission (QoT) metrics for both existing lightpaths and candidate paths before establishment, supporting intelligent routing and wavelength assignment decisions.

Statistical Learning Foundations

Predictive maintenance relies heavily on statistical inference and probability theory. Models must estimate probability distributions over future network states, assess confidence intervals for predictions, and quantify uncertainty in forecasts. Key statistical concepts include regression analysis for predicting continuous metrics like OSNR or BER, classification for determining failure likelihood categories, and time-series analysis for understanding temporal patterns in performance degradation.

Mathematical Prerequisites: Effective implementation of AI-driven predictive maintenance requires understanding of linear algebra for data representation and manipulation, calculus for optimization algorithms, and probability theory for uncertainty quantification. These mathematical foundations enable practitioners to select appropriate algorithms, tune model parameters, and interpret prediction confidence levels.

Optimization Algorithms

Neural networks and deep learning models used in predictive maintenance are trained through optimization processes that minimize prediction errors. Gradient descent and its variants form the core of these training algorithms, iteratively adjusting model parameters to improve predictive accuracy. The mathematical framework of backpropagation enables efficient computation of gradients through multi-layer networks, making complex pattern recognition feasible for large-scale optical network datasets.
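The smallest useful illustration of gradient-based training is a single logistic "neuron". Its gradient is the chain-rule expression that backpropagation generalizes to many layers. The sketch below minimizes mean binary cross-entropy with batch gradient descent. The feature (an OSNR decline rate) and the failure labels are hypothetical, not data from the article.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_gd(xs, ys, lr=0.5, epochs=2000):
    """Batch gradient descent on mean binary cross-entropy for one feature."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            # For sigmoid + cross-entropy, dLoss/dz simplifies to (prediction - label).
            err = sigmoid(w * x + b) - y
            grad_w += err * x
            grad_b += err
        # Step against the averaged gradient.
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# Hypothetical data: OSNR decline rate (dB/day) vs. failure within 30 days (1 = failed).
decline_rate = [0.0, 0.1, 0.2, 0.3, 0.8, 0.9, 1.0, 1.1]
failed = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train_logistic_gd(decline_rate, failed)

if __name__ == "__main__":
    print(round(w, 2), round(b, 2), round(sigmoid(w * 0.5 + b), 3))
```

Deep networks replace the single weight and bias with many layers. The update loop stays the same, with framework autodiff computing the gradients.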

Types & Components

Machine Learning Paradigms for Optical Networks

AI-driven predictive maintenance leverages three primary machine learning paradigms, each suited to specific aspects of network monitoring and failure prevention. The selection among these approaches depends on the nature of available data, the specific networking problem, and operational requirements.

Supervised Learning Applications

Supervised learning algorithms train on labeled datasets where both input features and correct output labels are provided. This paradigm proves particularly effective for predictive maintenance tasks where historical failure data can be used to train models that forecast future equipment failures.

Regression Models

Regression techniques predict continuous numerical values such as future OSNR levels, expected BER under various conditions, or remaining useful life of optical components. Linear regression, support vector regression, and artificial neural networks can estimate performance metrics for candidate lightpaths before establishment, enabling proactive quality of transmission assessment. Advanced regression models incorporating temporal features predict traffic demands and bandwidth requirements, supporting capacity planning initiatives.

Classification Algorithms

Classification models assign network elements or conditions to predefined categories. Applications include determining whether potential lightpath configurations will meet QoT requirements, predicting whether components are likely to fail within specific timeframes, and identifying fault types based on monitored parameters. Support vector machines, random forests, decision trees, and neural networks have demonstrated effectiveness in failure classification tasks across diverse optical network deployments.
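Fault-type classification can be illustrated with k-nearest neighbors, a simple classifier swapped in here in place of the SVMs and random forests named above. Each observation is a hypothetical pair (change in received power, change in OSNR), both in dB, and the labels are illustrative. With real data, features on different scales should be normalized first.

```python
import math
from collections import Counter

def knn_classify(train, query, k=3):
    """train: list of (feature_tuple, label). Returns the majority label among k nearest."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical labeled history: (rx power delta dB, OSNR delta dB) -> fault type.
TRAINING = [
    ((0.0, 0.0), "normal"), ((0.1, -0.1), "normal"), ((-0.1, 0.1), "normal"),
    ((-3.0, -1.0), "fiber_loss"), ((-2.5, -0.8), "fiber_loss"), ((-3.2, -1.2), "fiber_loss"),
    ((0.0, -2.0), "amplifier_noise"), ((0.2, -2.5), "amplifier_noise"),
    ((-0.1, -1.8), "amplifier_noise"),
]

if __name__ == "__main__":
    print(knn_classify(TRAINING, (-2.8, -0.9)))
    print(knn_classify(TRAINING, (0.1, -2.2)))
```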

Deep Learning Architectures

Artificial Neural Networks (ANNs) and deep learning are most often applied in a supervised setting, where they can learn highly complex, nonlinear patterns directly from raw data; the same architectures are also used in unsupervised and reinforcement learning. Different architectural variations address specific data characteristics in optical networks.

Convolutional Neural Networks (CNNs) excel at processing spatial hierarchies in data, making them ideal for analyzing constellation diagrams, eye diagrams, and optical spectrum analyzer outputs. These networks can automatically extract relevant features from visual representations of signal quality, enabling sophisticated modulation format recognition and impairment identification.

Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) architectures handle sequential data effectively, making them particularly suitable for time-series forecasting in optical networks. These models can learn temporal dependencies in performance monitoring data, enabling accurate prediction of future traffic patterns, equipment degradation trajectories, and impending failure events.

Unsupervised Learning for Pattern Discovery

Unsupervised learning algorithms work with unlabeled data to discover inherent structures and patterns. These techniques prove invaluable when labeled failure data is scarce or when identifying previously unknown degradation patterns.

Clustering Applications: K-means, DBSCAN, and hierarchical clustering algorithms group similar network states or traffic profiles, enabling identification of typical operational modes and detection of anomalous behaviors. Clustering techniques can identify outliers that may indicate soft failures or gradual performance degradation before traditional threshold-based alarms trigger.
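A minimal k-means sketch shows the idea. Normal operating regimes become cluster centroids, and a new observation far from every centroid is a candidate anomaly. The points are hypothetical, already-normalized feature vectors, and the explicit initial centroids keep the example deterministic.

```python
import math

def kmeans(points, initial_centroids, iterations=20):
    """Lloyd's algorithm: assign points to nearest centroid, then recompute means."""
    centroids = [tuple(c) for c in initial_centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            idx = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[idx].append(p)
        # Empty clusters keep their previous centroid.
        centroids = [
            tuple(sum(dim) / len(members) for dim in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
    return centroids

def distance_to_nearest(point, centroids):
    """Large distances indicate behavior unlike any learned operating regime."""
    return min(math.dist(point, c) for c in centroids)

# Two hypothetical operating regimes (e.g. low-load and high-load states).
STATES = [(0.0, 0.1), (0.1, 0.0), (-0.1, 0.0), (5.0, 5.1), (5.1, 4.9), (4.9, 5.0)]
CENTROIDS = kmeans(STATES, initial_centroids=[(0.0, 0.0), (5.0, 5.0)])

if __name__ == "__main__":
    print(CENTROIDS)
    print(distance_to_nearest((2.5, 2.5), CENTROIDS))
```

The anomaly threshold on that distance is a tuning choice. It is often set from the distribution of distances observed on known-good data.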

Anomaly detection algorithms identify rare events or observations that differ significantly from normal operational patterns. These techniques detect soft failures characterized by subtle, gradual performance degradation that might precede hard failures, unusual traffic patterns potentially indicating security threats, and unexpected changes in network behavior warranting investigation. Isolation forests and one-class support vector machines are algorithms designed specifically for anomaly detection.
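Isolation forests and one-class SVMs usually come from libraries such as scikit-learn. A dependency-free stand-in that conveys the same idea is the modified z-score based on the median absolute deviation (MAD). The 0.6745 factor and the 3.5 cut-off are the conventional choices for this statistic. The log10 pre-FEC BER series is hypothetical.

```python
from statistics import median

def robust_z_scores(values):
    """Modified z-scores: 0.6745 * (x - median) / MAD, robust to the outliers themselves."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return [0.0 for _ in values]       # no spread: nothing can be scored as unusual
    return [0.6745 * (v - med) / mad for v in values]

def flag_anomalies(values, threshold=3.5):
    """Indices whose modified z-score magnitude exceeds the threshold."""
    return [i for i, z in enumerate(robust_z_scores(values)) if abs(z) > threshold]

# Hypothetical log10(pre-FEC BER) samples; the last one is a sudden jump.
LOG_BER = [-3.0, -3.1, -2.9, -3.0, -3.05, -2.95, -1.5]

if __name__ == "__main__":
    print(flag_anomalies(LOG_BER))
```

Unlike a mean and standard deviation, the median and MAD are barely moved by the anomaly they are meant to detect. This makes the score more stable on short windows.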

Reinforcement Learning for Dynamic Optimization

Reinforcement learning (RL) enables agents to learn optimal decision-making policies through trial-and-error interaction with network environments. The agent receives rewards or penalties for actions and learns strategies that maximize cumulative rewards over time. This paradigm addresses problems involving sequential decision-making in dynamic environments.

Applications in optical networks include dynamic resource allocation where models learn optimal policies for wavelength and spectrum assignment based on real-time network state and traffic demands. Reinforcement learning algorithms can discover sophisticated routing strategies that minimize latency, reduce congestion, or decrease blocking probability. Q-learning and deep reinforcement learning techniques have demonstrated effectiveness in network self-configuration and self-optimization scenarios, enabling automated parameter tuning without explicit programming of decision rules.
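Tabular Q-learning can be shown on a deliberately tiny, hypothetical environment. The states are five OSNR-margin buckets, with bucket 2 as the target band. The actions lower, hold, or raise launch power by one step, and the reward penalizes distance from the target. Real deployments would use far richer state and deep RL, but the update rule is the one Q-learning uses.

```python
import random

N_STATES = 5            # hypothetical OSNR-margin buckets; 2 is the target band
ACTIONS = (-1, 0, 1)    # decrease, hold, increase launch power by one step

def step(state, action_idx):
    """Deterministic toy dynamics: move one bucket, clipped to the valid range."""
    next_state = min(N_STATES - 1, max(0, state + ACTIONS[action_idx]))
    reward = -abs(next_state - 2)          # zero reward only inside the target band
    return next_state, reward

def train_q_table(episodes=500, steps=20, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state = rng.randrange(N_STATES)
        for _ in range(steps):
            if rng.random() < epsilon:     # explore
                a = rng.randrange(len(ACTIONS))
            else:                          # exploit current estimates
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            nxt, r = step(state, a)
            # Q-learning update: bootstrap from the best action in the next state.
            q[state][a] += alpha * (r + gamma * max(q[nxt]) - q[state][a])
            state = nxt
    return q

def greedy_policy(q):
    """Best action index per state (0 = decrease, 1 = hold, 2 = increase)."""
    return [max(range(len(ACTIONS)), key=lambda i: row[i]) for row in q]

if __name__ == "__main__":
    print(greedy_policy(train_q_table()))
```

The agent is never told to raise power below the target band and lower it above. It learns that policy purely from the reward signal, which is the property the paragraph above describes.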

Hybrid Approach Implementation

Advanced predictive maintenance systems often combine multiple machine learning paradigms. An enterprise optical network deployment utilized unsupervised clustering to identify distinct operational regimes and normal behavior patterns from unlabeled monitoring data. These patterns then informed supervised classification models that predict component failures by detecting deviations from established baselines. The hybrid approach improved prediction accuracy by 40% compared to single-paradigm implementations while reducing false positive alerts by 55%.

Machine Learning Algorithm Selection Matrix

Figure 2: Comprehensive ML algorithm classification showing paradigms, specific algorithms, and their primary applications in optical network predictive maintenance.

Effects & Impacts

Operational Excellence Through Proactive Maintenance

The transition from reactive to predictive maintenance fundamentally transforms operational efficiency in optical networks. Traditional reactive approaches result in extended outages as teams diagnose failures, source replacement components, and dispatch field technicians. Predictive maintenance enables scheduled interventions during planned maintenance windows, minimizing service disruption and enabling more efficient resource allocation.

Cost Reduction

Published studies report that AI-driven automation delivers substantial operational expenditure savings. Proactive maintenance reduces emergency repair costs by eliminating premium charges for expedited component delivery and after-hours technician deployment. Labor efficiency improves as maintenance activities concentrate on predicted failure points rather than routine inspection of healthy equipment. Industry analyses indicate OpEx reductions ranging from 56% to 81% in specific network segments through intelligent automation.

Capital Efficiency

Optimized resource utilization enabled by predictive maintenance contributes to capital expenditure avoidance. Accurate failure forecasting enables just-in-time component procurement rather than maintaining excessive spare inventory. Network capacity can be utilized more efficiently as confidence in reliability reduces the need for excessive redundancy margins. Studies quantify capital expenditure savings approaching 30% through comprehensive network automation initiatives.

Service Quality and Reliability Enhancement

Customer satisfaction directly correlates with network reliability and service availability. Predictive maintenance dramatically improves key performance indicators including mean time between failures (MTBF), mean time to repair (MTTR), and overall network availability percentages. Service level agreement compliance improves as proactive interventions prevent service-affecting outages.

The ability to predict and prevent failures before they impact services represents a fundamental shift in service quality management. Financial services clients requiring ultra-reliable connectivity for high-frequency trading benefit from sub-millisecond latency consistency enabled by proactive performance optimization. Content delivery networks achieve higher throughput consistency by avoiding congestion through traffic prediction and dynamic resource allocation.

Self-Healing Network Capabilities: Integration of predictive maintenance with software-defined networking enables real-time fault recovery without human intervention. When degradation signatures indicate impending failure, automated systems can reroute traffic over alternative paths within milliseconds, ensuring continuous service delivery while maintenance teams address the underlying issue. Submarine cable operators have demonstrated particular success with self-healing architectures, maintaining service continuity for critical financial transactions during fiber repairs.
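The core of automated rerouting is a path computation that excludes the link flagged as degrading. The sketch below runs Dijkstra's algorithm over a hypothetical four-node topology. A production SDN controller would add constraints such as QoT feasibility and wavelength availability that are omitted here.

```python
import heapq

def shortest_path(graph, src, dst, excluded_links=frozenset()):
    """Dijkstra over an undirected graph {node: {neighbor: cost}}.

    excluded_links: set of frozenset({a, b}) pairs to avoid (e.g. a degrading span).
    """
    dist = {src: 0.0}
    prev = {}
    heap = [(0.0, src)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node == dst:
            break
        for nbr, cost in graph[node].items():
            if frozenset((node, nbr)) in excluded_links:
                continue
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if dst not in visited:
        return None                        # no surviving path
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]

# Hypothetical ring-like topology with link costs (e.g. km or latency units).
TOPOLOGY = {
    "A": {"B": 1, "C": 2},
    "B": {"A": 1, "D": 1},
    "C": {"A": 2, "D": 2},
    "D": {"B": 1, "C": 2},
}

if __name__ == "__main__":
    print(shortest_path(TOPOLOGY, "A", "D"))
    print(shortest_path(TOPOLOGY, "A", "D", {frozenset(("B", "D"))}))
```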

Strategic Business Impact

Beyond immediate operational benefits, predictive maintenance enables strategic advantages for network operators. Faster service provisioning becomes possible as AI-powered quality of transmission prediction reduces the need for extensive testing before new lightpaths are established. The reduction in time-to-market for new services enhances competitive positioning in dynamic telecommunications markets.

Data-driven insights derived from machine learning analysis inform strategic network planning decisions. Traffic pattern predictions guide capacity expansion investments, ensuring infrastructure deployment aligns with actual demand growth. Component reliability data aggregated across the network fleet informs vendor selection and procurement strategies, driving continuous improvements in network infrastructure quality.

Challenges and Considerations

Despite substantial benefits, AI-driven predictive maintenance presents implementation challenges. Deep learning models often function as "black boxes," making it difficult to understand why specific predictions were made. This opacity can hinder troubleshooting when models produce unexpected results and raises concerns about deployment in mission-critical infrastructure where explainability is paramount.

Data quality and availability constitute critical success factors. Machine learning models require substantial quantities of high-quality training data, which may be difficult to obtain in operational networks. Historical failure data may be incomplete or inconsistently labeled, limiting supervised learning effectiveness. Ensuring data accessibility across multi-vendor environments introduces additional complexity as standardized data formats and APIs may not exist across legacy equipment.

Integration with existing operational systems and workflows requires careful change management. Network operations teams must develop new skills to work effectively with AI-powered tools, understanding both their capabilities and limitations. Building organizational trust in automated decision-making processes takes time, particularly when initial deployments encounter prediction errors or false alarms.

Techniques & Solutions

Data Collection and Feature Engineering

Effective predictive maintenance begins with comprehensive data collection infrastructure. Modern optical networks generate telemetry from diverse sources including optical performance monitors measuring OSNR, power levels, and dispersion parameters; network management systems tracking configuration states and alarm histories; device logs capturing operational events and error messages; and environmental sensors monitoring temperature, humidity, and other external factors affecting equipment reliability.

Feature engineering transforms raw monitoring data into meaningful inputs for machine learning models. This process includes calculating derived metrics such as rates of change in performance parameters, statistical aggregations over relevant time windows, and correlation features capturing relationships between different measurements. Effective feature selection identifies the most informative variables while reducing dimensionality to improve model training efficiency and generalization performance.

Model Development Workflow

Implementing AI-driven predictive maintenance follows a structured development lifecycle. Initial exploratory data analysis reveals data distributions, identifies correlations between variables, and uncovers potential quality issues requiring remediation. Data preprocessing steps handle missing values, normalize measurements to consistent scales, and remove outliers that could distort model training.
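The three preprocessing steps named above can be sketched with plain functions. Forward-filling gaps is only one option, and others include interpolation or dropping samples. Outliers are clipped to bounds (winsorizing) rather than deleted, so the time series keeps its alignment. The normalization accepts training-set statistics so that validation and test data are scaled without leaking their own statistics.

```python
from statistics import mean, pstdev

def forward_fill(values):
    """Replace None gaps with the last observed value (leading gaps stay None)."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled

def zscore_normalize(values, ref_mean=None, ref_std=None):
    """Scale to zero mean and unit variance; pass training-set stats to reuse them."""
    mu = mean(values) if ref_mean is None else ref_mean
    sigma = pstdev(values) if ref_std is None else ref_std
    if sigma == 0:
        raise ValueError("cannot normalize a constant series")
    return [(v - mu) / sigma for v in values]

def clip_outliers(values, low, high):
    """Clamp values to [low, high] instead of deleting samples."""
    return [min(max(v, low), high) for v in values]

if __name__ == "__main__":
    raw = [None, 21.0, None, 20.8, 35.0, 20.7]   # hypothetical OSNR with gaps and a spike
    cleaned = clip_outliers(forward_fill(raw)[1:], 15.0, 25.0)
    print(zscore_normalize(cleaned))
```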

Training and Validation

Historical data is partitioned into training, validation, and test sets to enable robust model development. Training data teaches the model to recognize patterns associated with equipment failures or performance degradation. Validation data guides hyperparameter tuning and architecture selection during development. Independent test data provides unbiased assessment of final model performance on previously unseen examples, estimating real-world prediction accuracy.
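For time-ordered telemetry, a common choice is to split chronologically rather than randomly. This way the validation and test periods lie strictly after the training period, and the model is never evaluated on data older than what it learned from. The fractions below are illustrative defaults.

```python
def chronological_split(samples, train_frac=0.7, val_frac=0.15):
    """Split time-ordered samples without shuffling: train, then validation, then test."""
    n = len(samples)
    train_end = int(n * train_frac)
    val_end = int(n * (train_frac + val_frac))
    return samples[:train_end], samples[train_end:val_end], samples[val_end:]

if __name__ == "__main__":
    hourly_records = list(range(100))      # stand-in for 100 time-ordered records
    train, val, test = chronological_split(hourly_records)
    print(len(train), len(val), len(test))
```

A random split of overlapping time windows would place near-duplicate neighbors in both training and test sets. That tends to overstate real-world accuracy.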

Performance Metrics

Evaluation metrics must align with operational objectives. For failure prediction classification, precision quantifies the proportion of positive predictions that are correct, while recall measures the proportion of actual failures successfully predicted. The F1-score, the harmonic mean of precision and recall, balances the two. For regression tasks predicting continuous values, root mean square error (RMSE) and mean absolute error (MAE) quantify prediction accuracy. Time-to-failure predictions require specialized metrics that account for censored data, that is, equipment that has not yet failed when the observation window ends.
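The classification and regression metrics named above reduce to a few lines each. The sketch treats 1 as "failure predicted" or "failure occurred" and returns 0.0 where a ratio is undefined. That convention is an assumption; some libraries warn instead.

```python
import math

def classification_metrics(y_true, y_pred):
    """Precision, recall and F1 for binary labels (1 = failure)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def regression_metrics(actual, predicted):
    """RMSE and MAE; RMSE penalizes large errors more heavily."""
    errors = [a - p for a, p in zip(actual, predicted)]
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    mae = sum(abs(e) for e in errors) / len(errors)
    return rmse, mae

if __name__ == "__main__":
    print(classification_metrics([1, 1, 1, 0, 0, 0], [1, 1, 0, 1, 0, 0]))
    print(regression_metrics([20.0, 19.0], [19.0, 19.0]))
```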

Deployment and Integration Strategies

Transitioning trained models from development to production requires careful architectural design. Real-time inference systems must process streaming telemetry data with minimal latency to enable timely interventions. Edge computing architectures can deploy models near data sources, reducing latency and bandwidth requirements compared to centralized cloud processing. Containerization using Docker and orchestration via Kubernetes facilitate scalable, resilient model deployment across distributed network infrastructure.

Integration with existing network management systems requires well-designed APIs and data exchange protocols. NETCONF, together with YANG data models, provides standardized interfaces for network configuration and monitoring in modern optical systems. RESTful APIs enable flexible integration between AI prediction systems and operational support systems managing work orders, inventory, and workforce scheduling. Message queues like Apache Kafka handle high-volume telemetry ingestion and distribution to multiple consuming applications.

Continuous Learning: Production ML systems benefit from continuous model retraining as new operational data accumulates. Online learning techniques enable incremental model updates without complete retraining, adapting to evolving network conditions and emerging failure patterns. However, careful monitoring is required to detect model drift, in which performance gradually degrades as the data distribution shifts away from the original training conditions.
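The article does not name a drift statistic. One common choice for input drift is the population stability index (PSI). PSI compares the binned distribution of a feature in the training period against a recent window. The bin edges are an assumption, and the often-quoted reading of PSI above about 0.25 as a significant shift is a rule of thumb rather than a standard.

```python
import bisect
import math

def bin_proportions(sample, edges):
    """Fraction of samples per bin; values outside the edges go to the end bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in sample:
        i = min(max(bisect.bisect_right(edges, v) - 1, 0), n_bins - 1)
        counts[i] += 1
    return [c / len(sample) for c in counts]

def population_stability_index(reference, current, edges, eps=1e-6):
    """PSI = sum((cur - ref) * ln(cur / ref)); eps avoids log(0) for empty bins."""
    ref_p = bin_proportions(reference, edges)
    cur_p = bin_proportions(current, edges)
    return sum((c - r) * math.log((c + eps) / (r + eps)) for r, c in zip(ref_p, cur_p))

if __name__ == "__main__":
    edges = [0, 1, 2, 3, 4]
    training_window = [0.5, 1.5, 2.5, 3.5] * 25
    recent_window = [2.5, 3.5] * 50         # hypothetical shift toward higher values
    print(population_stability_index(training_window, recent_window, edges))
```

Input drift does not always mean the model's accuracy has dropped. It is a cheap early signal that can trigger closer evaluation or retraining.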

Alarm Correlation and Root Cause Analysis

Modern optical networks can generate thousands of alarms when issues occur. AI-powered alarm correlation systems filter noise and identify root causes by analyzing relationships between simultaneous alarms across network elements. Machine learning models learn which alarm combinations indicate specific failure types, enabling automated diagnosis that directs technicians to actual problems rather than symptomatic effects.
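Before any learning, the simplest correlation rule is topology-based suppression. An alarm is treated as symptomatic if anything upstream of it is also alarming. The sketch below implements that rule over a hypothetical dependency map. It is not a learned model, but it illustrates the separation of root causes from downstream effects that the ML systems described above refine.

```python
def root_cause_alarms(active_alarms, upstream):
    """Keep only alarms with no alarming element anywhere upstream.

    active_alarms: set of element IDs currently raising alarms.
    upstream: dict element -> list of elements it depends on (hypothetical topology).
    """
    def has_alarming_ancestor(element, seen):
        for parent in upstream.get(element, []):
            if parent in seen:
                continue
            seen.add(parent)
            if parent in active_alarms or has_alarming_ancestor(parent, seen):
                return True
        return False

    return {a for a in active_alarms if not has_alarming_ancestor(a, set())}

# Hypothetical chain: fiber span -> amplifier -> ROADM -> transponders.
UPSTREAM = {
    "amp_1": ["fiber_span_1"],
    "roadm_a": ["amp_1"],
    "tp_1": ["roadm_a"],
    "tp_2": ["roadm_a"],
    "tp_3": ["roadm_b"],
}
ACTIVE = {"fiber_span_1", "amp_1", "tp_1", "tp_2", "tp_3"}

if __name__ == "__main__":
    print(root_cause_alarms(ACTIVE, UPSTREAM))
```

Here the fiber span alarm explains the amplifier and two transponder alarms. The independent transponder alarm on another ROADM survives as a separate issue.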

Sophisticated root cause analysis systems leverage graph neural networks and causal inference techniques to understand dependency structures in optical networks. These models can trace cascading failure effects through amplifier chains, identify single points of failure affecting multiple services, and distinguish between independent concurrent issues versus correlated problems sharing common root causes.

Practical Implementation: OSNR Degradation Prediction

A global service provider implemented LSTM-based time-series models to predict OSNR degradation trends across its 400G DWDM network. The system ingests hourly OSNR measurements along with environmental data and traffic load information. By learning normal degradation patterns associated with component aging versus anomalous degradation indicating impending failures, the model provides 72-hour advance warning of OSNR falling below operational thresholds. This lead time enables maintenance scheduling during low-traffic periods, reducing customer impact by 90% compared to reactive repairs.
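The forecasting model itself is out of scope for a short example. The step that turns a forecast into a maintenance plan can be sketched, though. Assume hourly OSNR and traffic forecasts are already available, for example from an LSTM. The code finds the first predicted threshold breach within a 72-hour horizon and picks the lowest-traffic hour before it. All names and data are hypothetical.

```python
def plan_maintenance(forecast_osnr, forecast_traffic, threshold_db, horizon_h=72):
    """Return (hours_to_breach, best_hour) or None if no breach within the horizon.

    best_hour is the lowest-traffic hour strictly before the predicted breach.
    """
    window = forecast_osnr[:horizon_h]
    breach = next((h for h, v in enumerate(window) if v < threshold_db), None)
    if breach is None:
        return None                        # no predicted breach inside the horizon
    if breach == 0:
        return 0, 0                        # already below threshold: act now
    best_hour = min(range(breach), key=lambda h: forecast_traffic[h])
    return breach, best_hour

if __name__ == "__main__":
    osnr = [20.0] * 50 + [18.5] * 30       # hypothetical forecast: drop at hour 50
    traffic = [100] * 80
    traffic[30] = 10                       # a quiet overnight hour
    print(plan_maintenance(osnr, traffic, threshold_db=19.0))
```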

OSNR Degradation Prediction Workflow

Figure 3: LSTM-based OSNR degradation prediction workflow showing data collection, preprocessing, model prediction, and automated maintenance scheduling with 72-hour advance warning.

Explainable AI Techniques

Addressing the black-box nature of complex models requires explainable AI techniques that provide insight into model decision-making. SHAP (SHapley Additive exPlanations) values quantify each input feature's contribution to individual predictions, enabling engineers to understand which monitoring parameters drove specific failure forecasts. Attention mechanisms in neural networks highlight which temporal patterns or spatial features models consider most relevant for predictions.
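SHAP values are Shapley values from cooperative game theory. The SHAP library approximates them efficiently, but for a handful of features they can be computed exactly by enumerating coalitions. The sketch below does that. It assumes that features outside a coalition are replaced by baseline values, which is one of several possible choices. The cost grows exponentially with the number of features, so this is for illustration only.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline values."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Weight = |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

if __name__ == "__main__":
    # Hypothetical risk score from (OSNR drop, BER increase, temperature rise).
    model = lambda z: 2 * z[0] - z[1] + 0.5 * z[2]
    print(shapley_values(model, [1.0, 2.0, 4.0], [0.0, 0.0, 0.0]))
```

The attributions always sum to the difference between the prediction and the baseline prediction. This is what lets an engineer read them as "how much each input moved this forecast".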

Model interpretability builds operator trust and facilitates troubleshooting when predictions prove inaccurate. Visualization dashboards presenting feature importance, decision trees approximating neural network behavior, and counterfactual explanations showing how input changes would alter predictions all contribute to transparency. Regulatory compliance in certain industries may mandate explainability for automated decision systems affecting service delivery.

Practical Applications

Amplifier Chain Optimization

Optical amplifiers represent critical components in long-haul networks, and their performance directly impacts overall system OSNR and transmission reach. Predictive maintenance systems monitor key amplifier parameters including gain flatness, noise figure evolution, and power consumption trends. Machine learning models trained on historical amplifier aging data can predict when erbium-doped fiber amplifiers (EDFAs) will experience gain degradation or excessive noise figure increase that compromises link performance.

Advanced implementations correlate amplifier performance with environmental factors including temperature variations, humidity levels, and input power fluctuations. Multi-variable regression models predict power excursions in amplifier chains, enabling proactive power level adjustments that maintain consistent OSNR while avoiding component stress. Deep learning architectures can learn complex nonlinear relationships between pump laser characteristics, optical gain profiles, and expected remaining useful life.

Coherent Transceiver Performance Management

Modern 400G and 800G coherent optical systems employ sophisticated digital signal processing to compensate for transmission impairments. Machine learning algorithms integrated within coherent transceivers optimize signal recovery by dynamically adapting to changing channel conditions. These systems adjust equalization parameters, modulation formats, and forward error correction strategies based on real-time measurements of chromatic dispersion, polarization mode dispersion, and fiber nonlinearities.

Adaptive Modulation

ML algorithms can dynamically select optimal modulation formats based on link characteristics and current channel quality. When OSNR margins are high, systems switch to higher-order modulation like 64-QAM to maximize spectral efficiency. As conditions degrade, automatic fallback to more robust formats like QPSK maintains connectivity while sacrificing capacity. This adaptive approach optimizes network capacity utilization while maintaining service reliability.
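The selection logic can be sketched as "pick the highest-order format whose required OSNR plus margin is met". The required-OSNR figures below are hypothetical placeholders, not vendor specifications. The hysteresis term is a design choice added here: it demands extra headroom before upgrading, so a link hovering near a boundary does not flap between formats.

```python
# Hypothetical required-OSNR values (dB), ordered highest spectral efficiency first.
REQUIRED_OSNR_DB = [("64-QAM", 26.0), ("16-QAM", 20.0), ("8-QAM", 17.0), ("QPSK", 13.0)]
ORDER = [name for name, _ in REQUIRED_OSNR_DB]

def select_format(osnr_db, margin_db=2.0, current=None, hysteresis_db=0.5):
    """Highest-order format whose requirement plus margin is met, or None."""
    for name, required in REQUIRED_OSNR_DB:
        is_upgrade = current is not None and ORDER.index(name) < ORDER.index(current)
        needed = required + margin_db + (hysteresis_db if is_upgrade else 0.0)
        if osnr_db >= needed:
            return name
    return None     # not even the most robust format fits with this margin

if __name__ == "__main__":
    print(select_format(28.5))
    print(select_format(28.2, current="16-QAM"))
```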

Power Optimization

Launch power optimization represents a complex tradeoff between achieving adequate receive power and avoiding nonlinear impairments. Reinforcement learning agents can learn optimal power control policies that maximize system margin while minimizing energy consumption. In long-haul networks spanning multiple amplifier spans, coordinated power management across the entire chain requires sophisticated optimization that ML techniques can provide.
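The tradeoff can be made concrete with a simplified Gaussian-noise (GN) model view. Per-channel SNR ≈ P / (P_ASE + η·P³), where η is a nonlinear interference coefficient. Setting the derivative to zero gives P_opt = (P_ASE / (2η))^(1/3), the point at which nonlinear noise equals half the ASE noise. This is an analytical model of the landscape an RL agent would have to learn, not an RL method. The values of P_ASE and η are hypothetical.

```python
import math

def snr_linear(p_mw, p_ase_mw, eta_per_mw2):
    """Simplified GN-model SNR: signal over ASE plus cubic nonlinear interference."""
    return p_mw / (p_ase_mw + eta_per_mw2 * p_mw ** 3)

def optimal_launch_mw(p_ase_mw, eta_per_mw2):
    """Closed-form optimum from d(SNR)/dP = 0, i.e. P^3 = P_ASE / (2*eta)."""
    return (p_ase_mw / (2 * eta_per_mw2)) ** (1 / 3)

def grid_search_launch_dbm(p_ase_mw, eta_per_mw2, dbm_grid):
    """Brute-force alternative: best launch power (dBm) over a candidate grid."""
    return max(dbm_grid, key=lambda dbm: snr_linear(10 ** (dbm / 10), p_ase_mw, eta_per_mw2))

if __name__ == "__main__":
    p_ase, eta = 0.002, 0.001               # hypothetical per-channel values
    grid = [x / 10 for x in range(-50, 51)] # -5.0 to +5.0 dBm in 0.1 dB steps
    print(10 * math.log10(optimal_launch_mw(p_ase, eta)), grid_search_launch_dbm(p_ase, eta, grid))
```

Below the optimum, ASE dominates and more power helps. Above it, the cubic nonlinear term grows faster than the signal. Coordinating this across many spans and channels is what makes learned controllers attractive.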

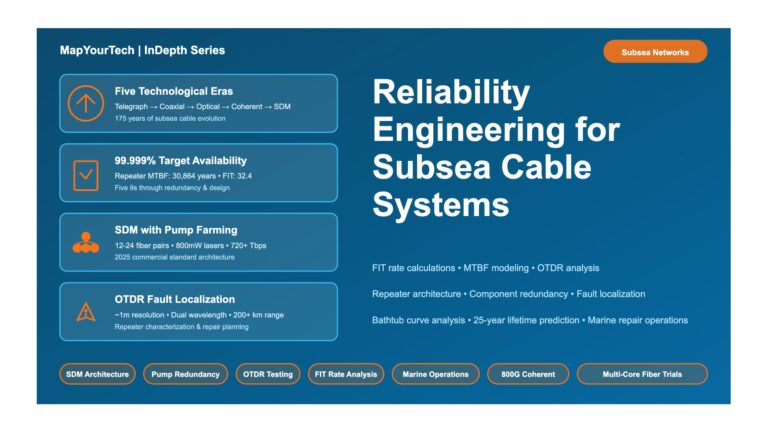

Submarine Cable System Reliability

Submarine optical cables present unique maintenance challenges due to their inaccessibility and the catastrophic impact of failures on international connectivity. Predictive maintenance assumes critical importance in these deployments where reactive repairs require ship mobilization costing millions of dollars and service outages lasting weeks.

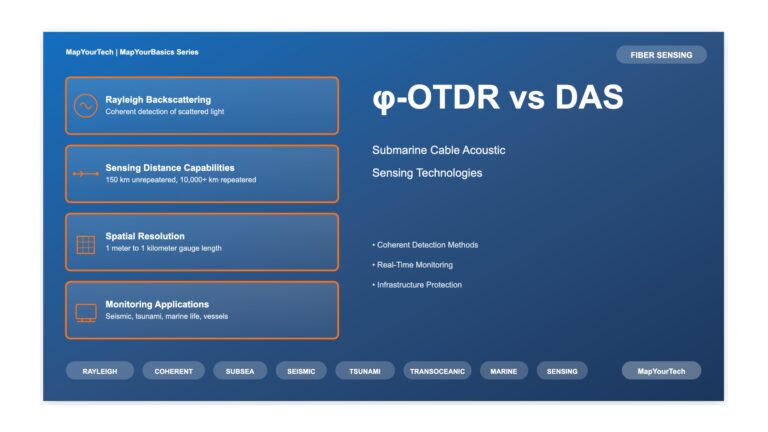

AI systems continuously monitor submarine systems for early warning signs of degradation. Subtle changes in OSNR profiles, asymmetric power evolution between fiber pairs, or anomalous temperature trends from submerged repeaters can indicate developing problems. Machine learning models trained on historical cable fault data recognize degradation signatures associated with water ingress, mechanical stress, or component aging, enabling proactive maintenance scheduling during planned cable ship operations.

Real-World Deployment: Metro Network Soft Failure Detection

A metropolitan optical network serving financial services clients deployed unsupervised learning models to detect soft failures characterized by gradual performance degradation. The system clusters normal operational states and flags deviations from established patterns. Over six months of operation, the system identified 23 soft failure events an average of 4.5 days before they would have triggered traditional threshold-based alarms. This early detection enabled preventive interventions that avoided service outages for latency-sensitive trading applications while reducing mean time to repair by 60%.

Data Center Interconnect Optimization

Data center interconnect (DCI) networks carrying cloud traffic between geographically distributed facilities benefit significantly from AI-driven optimization. Predictive traffic engineering anticipates capacity requirements based on application usage patterns, time of day, and seasonal trends. Machine learning models forecast bandwidth demands with sufficient lead time to enable proactive capacity augmentation before congestion impacts application performance.

Dynamic wavelength allocation in DCI networks leverages reinforcement learning to optimize spectrum utilization. As traffic patterns shift throughout the day, automated systems reallocate wavelengths to match demand geography, minimizing blocking probability while maintaining quality of service for latency-sensitive applications. Integration with software-defined networking controllers enables seamless coordination between optical and IP layers for comprehensive traffic optimization.
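Reinforcement learning approaches to wavelength allocation are usually compared against simple heuristics such as first-fit. The sketch below implements first-fit under the wavelength-continuity constraint, meaning the same wavelength must be free on every link and no conversion is available. It is the baseline, not the RL method. The link names are hypothetical.

```python
def first_fit_assign(path_links, occupied, n_wavelengths):
    """Assign the lowest-index wavelength free on every link of the path.

    occupied: dict link -> set of wavelength indices in use (updated in place).
    Returns the wavelength index, or None if the request is blocked.
    """
    for wl in range(n_wavelengths):
        if all(wl not in occupied.get(link, set()) for link in path_links):
            for link in path_links:
                occupied.setdefault(link, set()).add(wl)
            return wl
    return None

if __name__ == "__main__":
    occupied = {("A", "B"): {0, 1}, ("B", "C"): {0, 2}}
    path = [("A", "B"), ("B", "C")]
    print(first_fit_assign(path, occupied, n_wavelengths=4))
    print(first_fit_assign(path, occupied, n_wavelengths=4))
```

The second request on the same path is blocked even though each link individually still has free wavelengths. This fragmentation effect is what learned allocation policies try to reduce.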

Passive Optical Network Monitoring

Fiber-to-the-home (FTTH) deployments based on passive optical network (PON) technology present distinct monitoring challenges due to passive splitter infrastructure. AI-powered optical time domain reflectometry (OTDR) analysis automatically interprets complex reflection traces to identify fiber degradation, splice quality issues, or customer premises equipment problems. Convolutional neural networks trained on labeled OTDR traces can classify event types and localize faults with higher accuracy than traditional rule-based systems.
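Whatever classifies an OTDR event, localizing it relies on a simple relation. Distance = c·t / (2·n_g), where t is the round-trip time of the backscattered light and n_g is the fiber's group index. The group index of 1.468 used below is an assumed typical single-mode value; the correct figure comes from the fiber datasheet or OTDR setup.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

def event_distance_km(round_trip_time_us: float, group_index: float = 1.468) -> float:
    """Distance to an OTDR event; the factor 2 accounts for the round trip."""
    distance_m = SPEED_OF_LIGHT_M_S * round_trip_time_us * 1e-6 / (2 * group_index)
    return distance_m / 1000.0

if __name__ == "__main__":
    for t_us in (10.0, 100.0):
        print(t_us, round(event_distance_km(t_us), 3))
```

An error in the assumed group index scales every reported distance proportionally. For long feeder fibers that can shift a fault location by tens of meters, which matters when dispatching a repair crew.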

Predictive maintenance in PON networks focuses on preventing customer service interruptions. Machine learning models analyze optical power measurements from optical line terminals to detect systematic degradation affecting subscriber optical network terminals. Early identification of fiber bending, connector contamination, or splitter failures enables proactive truck rolls during normal business hours rather than emergency repairs affecting customer satisfaction.

Future Directions: Emerging applications include quantum communication network monitoring where AI systems detect subtle perturbations affecting quantum key distribution, integration with 5G and beyond networks requiring ultra-reliable low-latency communications, and autonomous optical networks capable of self-optimization without human intervention. As optical transmission rates increase toward 1.6 Tbps per wavelength and beyond, AI-driven management becomes not merely advantageous but essential for maintaining reliable operations.

For educational purposes in optical networking and DWDM systems

Note: This guide is based on industry standards, best practices, and real-world implementation experiences. Specific implementations may vary based on equipment vendors, network topology, and regulatory requirements. Always consult with qualified network engineers and follow vendor documentation for actual deployments.
