The 50 ms switching/restoration time target for telecom circuits has always been an exciting topic for engineers in every part of telecom services, including optical, voice, data, microwave, and radio. I have been curious about it since the start of my telecom career, and I believe engineers and telecom professionals still hear the term from time to time and wonder why it is 50 ms. So I researched the available literature and, drawing on my own experience, decided to put it into words for friends who are as curious as I was.

The 50 ms idea originated with Automatic Protection Switching (APS) subsystems in early digital transmission systems. It was not actually based on any particular service requirement. The value persists for reasons that are not purely technical, since technical considerations alone could have settled the question: it has roots in historical practices and past capabilities, and it has been a tool of certain marketing strategies.

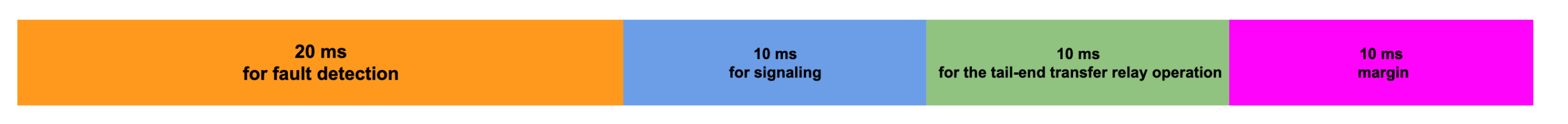

Initially, digital transmission systems based on 1:N APS typically required about 20 ms for fault detection, 10 ms for signaling, and 10 ms for the tail-end transfer relay operation, so the specification for APS switching times was reasonably set at 50 ms, allowing a 10 ms margin.
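The budget above is simple arithmetic, and a minimal sketch makes the margin explicit. The dictionary keys and the function name `aps_margin_ms` are illustrative names chosen here; only the millisecond figures come from the text.

```python
# Component delays of an early 1:N APS switch, in milliseconds
# (figures taken from the paragraph above; the key names are illustrative).
APS_DELAYS_MS = {
    "fault_detection": 20,
    "signaling": 10,
    "tail_end_transfer_relay": 10,
}
SPEC_MS = 50  # the APS switching-time specification


def aps_margin_ms(delays=APS_DELAYS_MS, spec_ms=SPEC_MS):
    """Return (total switching time, margin left under the specification)."""
    total = sum(delays.values())
    return total, spec_ms - total


total_ms, margin_ms = aps_margin_ms()
print(f"total={total_ms} ms, margin={margin_ms} ms")
```

The three stages add up to 40 ms, which is where the 10 ms margin under the 50 ms specification comes from.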

For context, early generations of DS1 channel banks (1970s era) also had a Carrier Group Alarm (CGA) threshold of about 230 ms. The CGA is a time threshold for the persistence of any alarm state on the transmission line side (such as loss of signal or loss of frame synchronization), after which all trunk channels would be busied out. But the requirement for 50 ms APS switching stayed in place, mainly because it was still technically quite feasible at no extra cost in the design of APS subsystems.

The apparent sanctity of 50 ms was further entrenched in the 1990s by vendors who promoted only ring-based transport solutions and found it advantageous to insist on 50 ms as the requirement, effectively precluding distributed mesh restoration alternatives from equal consideration at the start of the SONET era.

As a marketing strategy, the 50 ms issue served as the “mesh killer” of the 1990s, as more and more traditional telcos bought into it as the reference.

On the other hand, there was also real urgency in the early 1990s to deploy some kind of fast automated restoration method almost immediately. This led to the quick adoption of ring-based solutions, which had only incremental development requirements over 1+1 APS transmission systems. However, once rings were deployed, the effect was only to further reinforce the cultural assumption of 50 ms as the standard. Thus, as sometimes happens in engineering, what was initially a performance capability in one specific context (APS switching time) evolved into a perceived requirement in all other contexts.

But the “50 ms requirement” is undergoing serious challenges to its validity as a ubiquitous requirement, even being referred to as the “50 ms myth” by data-centric entrants to the field who see little actual need for such fast restoration from an IP services standpoint. Faster restoration is by itself always desirable as a goal, but restoration goals must be set carefully in light of the corresponding costs, which may be paid in the form of fewer available choices of network architecture. In practice, insistence on “50 ms” means 1+1 dedicated APS or UPSR rings are almost the only choices left for the operator to consider. But if something more like 200 ms is allowed, the entire scope of efficient shared-mesh architectures becomes available. So it is an issue of real importance whether there are any services that truly require 50 ms.

Sosnosky’s original study found no applications that require 50 ms restoration. However, the 50 ms requirement was still being debated in 2001 when Schallenburg, understanding the potential costs involved to his company, undertook a series of experimental trials with varying interruption times and measured various service degradations on voice circuits, SNA, ATM, X.25, SS7, DS1, 56 kb/s data, NTSC digital video, SONET OC-12 access services, and OC-48. He tested with controlled-duration outages and found that 200 ms outages would not jeopardize any of these services and that, except for SS7 signaling links, all other services would in fact withstand outages of two to five seconds.
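To make the trial results above easier to compare against a candidate restoration target, here is a small sketch. The table `CONFIRMED_SAFE_MS` and the function `not_confirmed_safe` are hypothetical names, and the numbers are a conservative reading of the text: 2000 ms is the lower end of "two to five seconds", and SS7 is given only the 200 ms figure that the text states. A service that appears in the output is not necessarily broken by that outage; the text simply does not confirm that it survives.

```python
# Services named in the trials described above.
SERVICES = [
    "voice circuits", "SNA", "ATM", "X.25", "SS7", "DS1",
    "56 kb/s data", "digital video", "SONET OC-12 access", "OC-48",
]

# Longest outage (ms) each service is reported to withstand. Assumption: the
# lower end of "two to five seconds" is used for every service except SS7,
# for which only the 200 ms figure is stated.
CONFIRMED_SAFE_MS = {name: 2000 for name in SERVICES}
CONFIRMED_SAFE_MS["SS7"] = 200


def not_confirmed_safe(outage_ms):
    """List services for which the outage exceeds the reported safe duration."""
    return [s for s in SERVICES if outage_ms > CONFIRMED_SAFE_MS[s]]


for outage in (50, 200, 2000):
    print(outage, "ms ->", not_confirmed_safe(outage) or "all services confirmed safe")
```

Under these assumptions, both a 50 ms and a 200 ms target leave every listed service in the confirmed-safe range, and only SS7 falls outside it at 2 seconds.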

Thus, the supposed requirement for 50 ms restoration seems to be more of a techno-cultural myth than a real requirement, and there are quite practical reasons to consider 2 seconds as an alternate goal for network restoration. A 2-second goal still avoids the regime of connection and session time-outs and IP/MPLS layer reactions, while giving a green light to the full consideration of far more efficient mesh-based survivable architectures.

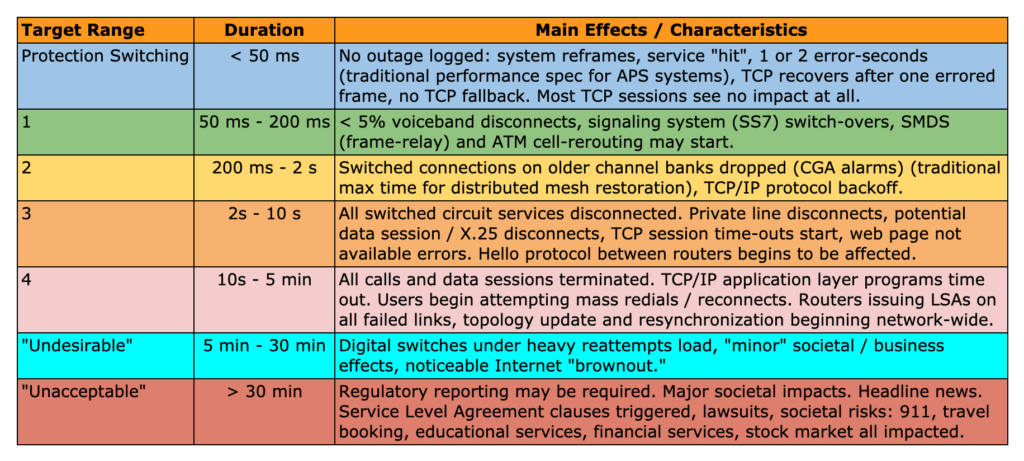

A study by Sosnosky summarizes these effects, based on a detailed technical analysis of various services and signal types. It classifies outages by their duration and shows how the main effects and characteristics change as the outage time increases.

Concluding Comment

With state-of-the-art technologies evolving over time across all areas of telecommunications, switching is now very fast, and hold-up timers (HUT), hold-down timers, and hold-off timers play a significant role: they can delay consequent actions and so avoid service unavailability. There will certainly be some packet loss, which may show up as errors on the links or as an occasional increase in latency, but the impact varies with the nature of the service (voice, data, live streaming, web browsing, buffered video, and so on). So we can say that today's networks are quite resilient to brief outages, although this depends on the network architecture and on how services are routed. Outages of 50 ms or even 200 ms would not jeopardize data, video, or voice services.
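As an illustration of how a hold-off style timer keeps a brief outage from triggering a consequent action, here is a minimal sketch. The class `HoldOffTimer`, its `update` method, and the 100 ms value are assumptions made for this example, and the rule used (the defect must be seen continuously for the whole hold-off period) is a simplification; real equipment and standards define the exact behavior differently.

```python
class HoldOffTimer:
    """Simplified hold-off logic: act only if a defect persists long enough."""

    def __init__(self, hold_off_ms):
        self.hold_off_ms = hold_off_ms
        self._defect_since = None  # time at which the current defect was first seen

    def update(self, now_ms, defect_present):
        """Feed one observation; return True if the consequent action should fire."""
        if not defect_present:
            self._defect_since = None  # defect cleared, so the timer is cancelled
            return False
        if self._defect_since is None:
            self._defect_since = now_ms  # defect just appeared, start timing
        return now_ms - self._defect_since >= self.hold_off_ms


timer = HoldOffTimer(hold_off_ms=100)  # 100 ms is an illustrative value

# A 50 ms glitch clears before the timer expires: no action is taken.
brief = [timer.update(0, True), timer.update(50, False)]

# A defect that is still present 100 ms later does trigger the action.
persistent = [timer.update(1000, True), timer.update(1100, True)]

print(brief, persistent)
```

The design choice here is to trade a small, bounded delay in reacting to real failures for immunity to short transients, which is the role the timers play in the paragraph above.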

I would love to see readers' comments and further discussion on this.

Reference:

Mesh-Based Survivable Networks: Options and Strategies for Optical, MPLS, SONET, and ATM Networking By Wayne D. Grover
