In the world of optical communication, it is crucial to have a clear understanding of Bit Error Rate (BER). This metric measures the probability of errors in digital data transmission, and it plays a significant role in the design and performance of optical links. However, there are ongoing debates about whether BER depends more on data rate or modulation. In this article, we will explore the impact of data rate and modulation on BER in optical links, and we will provide real-world examples to illustrate our points.

Table of Contents

- Introduction

- Understanding BER

- The Role of Data Rate

- The Role of Modulation

- BER vs. Data Rate

- BER vs. Modulation

- Real-World Examples

- Conclusion

- FAQs

Introduction

Optical links have become increasingly essential in modern communication systems, thanks to their high-speed transmission, long-distance coverage, and immunity to electromagnetic interference. However, the quality of optical links heavily depends on the BER, which measures the number of errors in the transmitted bits relative to the total number of bits. In other words, the BER reflects the accuracy and reliability of data transmission over optical links.

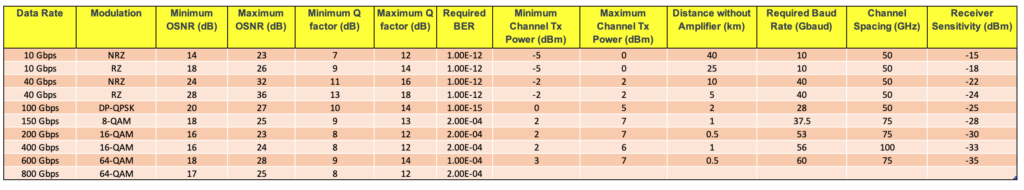

BER depends on various factors, such as the quality of the transmitter and receiver, the noise level, and the optical power. However, two primary factors that significantly affect BER are data rate and modulation. There have been ongoing debates about whether BER depends more on data rate or modulation, and in this article, we will examine both factors and their impact on BER.

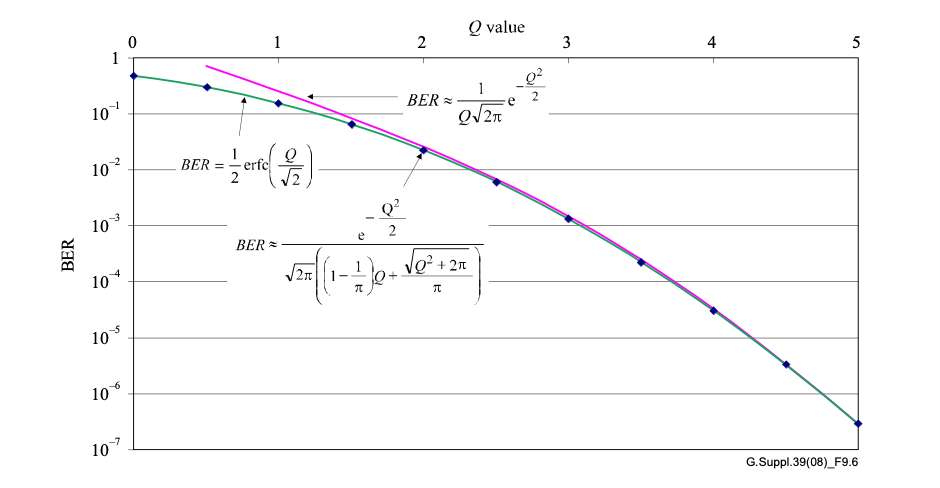

Understanding BER

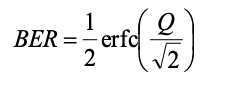

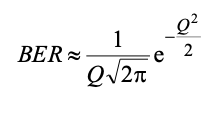

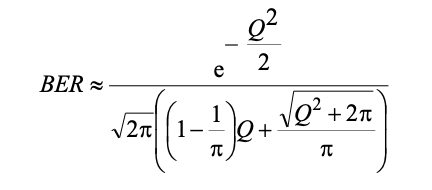

Before we delve into the impact of data rate and modulation, let’s first clarify what BER means and how it is calculated. BER is expressed as the ratio of the number of bits received in error to the total number of bits transmitted. For example, a BER of 10^-6 means that, on average, one out of every million transmitted bits is received in error.

The BER can be calculated using the formula: BER = (Number of bits received with errors) / (Total number of bits transmitted)
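As a minimal sketch of the formula above, the following Python snippet counts mismatched bits between a transmitted and a received sequence. The function name `bit_error_rate` and the sample sequences are illustrative assumptions, not part of any standard library.

```python
def bit_error_rate(sent, received):
    """Return (bits received with errors) / (total bits transmitted)."""
    if len(sent) != len(received):
        raise ValueError("sequences must have the same length")
    if not sent:
        raise ValueError("at least one bit is required")
    # Count the positions where the received bit differs from the sent bit.
    errors = sum(s != r for s, r in zip(sent, received))
    return errors / len(sent)


# Made-up sample data: 2 of the 8 bits are flipped in transit.
sent = [1, 0, 1, 1, 0, 0, 1, 0]
received = [1, 0, 0, 1, 0, 0, 1, 1]
print(bit_error_rate(sent, received))  # 2 errors / 8 bits = 0.25
```

In practice a measurement needs far more bits than this: a BER near 10^-9 cannot be estimated meaningfully from a sequence much shorter than a billion bits.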

The lower the BER, the higher the quality of data transmission, as fewer errors mean better accuracy and reliability. However, achieving a low BER is not an easy task, as various factors can affect it, as we will see in the following sections.

The Role of Data Rate

Data rate refers to the number of bits transmitted per second over an optical link. The higher the data rate, the faster the transmission speed, but also the higher the potential for errors. Sending more bits per second does not by itself make each bit more likely to fail. The problem is that each bit occupies a shorter time slot: at a fixed optical power it carries less energy, the receiver needs a wider bandwidth and therefore admits more noise, and distortions such as dispersion spread each pulse over a larger fraction of the neighboring bit slots.

As a result, higher data rates generally lead to a higher BER. However, this is not always the case, as other factors such as modulation can also affect the BER, as we will discuss in the following section.
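To make the trend concrete, here is a rough sketch under stated assumptions: the receiver is limited by thermal noise, the received optical power is fixed, and the receiver bandwidth scales with the bit rate. Under those assumptions the noise variance grows in proportion to the bit rate, so the Q-factor falls as the inverse square root of the rate, and for binary on-off signaling with Gaussian noise the BER is approximately 0.5 * erfc(Q / sqrt(2)). The reference value `Q_REF` and the chosen rates are hypothetical.

```python
import math


def ber_from_q(q):
    """Gaussian-noise approximation for binary signaling: BER = 0.5 * erfc(Q / sqrt(2))."""
    return 0.5 * math.erfc(q / math.sqrt(2))


def q_at_rate(q_ref, rate_ref_bps, rate_bps):
    """Scale Q assuming noise variance is proportional to bit rate (thermal-noise limit)."""
    return q_ref * math.sqrt(rate_ref_bps / rate_bps)


Q_REF = 7.0       # hypothetical Q-factor measured at the reference rate
RATE_REF = 1e9    # hypothetical reference rate: 1 Gbps

for rate in (1e9, 2.5e9, 10e9):
    q = q_at_rate(Q_REF, RATE_REF, rate)
    print(f"{rate / 1e9:g} Gbps: Q = {q:.2f}, BER ~ {ber_from_q(q):.1e}")
```

A real link can deviate from this scaling, for example when amplifier noise or dispersion dominates rather than thermal noise, so the numbers are only indicative of the trend.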

The Role of Modulation

Modulation refers to the technique of encoding data onto an optical carrier signal, which is then transmitted over an optical link. Higher-order modulation schemes carry multiple bits within a single symbol, which can increase the data rate at a given symbol rate and improve the spectral efficiency of optical links.
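The relationship between symbols and bits can be written down directly: a format with M distinct symbols carries log2(M) bits per symbol, so the bit rate is the symbol rate multiplied by log2(M). The short sketch below illustrates this; the 25 GBd symbol rate and the list of formats are assumptions chosen for illustration.

```python
def bits_per_symbol(m):
    """Bits carried by one symbol of an M-ary format (M must be a power of two)."""
    if m < 2 or m & (m - 1):
        raise ValueError("M must be a power of two and at least 2")
    return m.bit_length() - 1


def bit_rate(symbol_rate_baud, m):
    """Bit rate = symbol rate x bits per symbol."""
    return symbol_rate_baud * bits_per_symbol(m)


SYMBOL_RATE = 25e9  # hypothetical symbol rate: 25 GBd

for name, m in (("OOK", 2), ("QPSK", 4), ("16-QAM", 16), ("64-QAM", 64)):
    print(f"{name}: {bits_per_symbol(m)} bit(s)/symbol, "
          f"{bit_rate(SYMBOL_RATE, m) / 1e9:g} Gbps")
```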

However, different modulation schemes have different levels of sensitivity to noise and other interferences, which can affect the BER. For example, at the same signal power, binary phase modulation (PM) generally tolerates more noise than binary amplitude modulation (AM), while quadrature amplitude modulation (QAM) carries more bits per symbol but needs a higher signal-to-noise ratio as its order grows, because its constellation points sit closer together.

Therefore, the choice of modulation scheme can significantly impact the BER, as some schemes may perform better than others at a given data rate.

BER vs. Data Rate

As we have seen, data rate and modulation can both affect the BER of optical links. However, the question remains: which factor has a more significant impact on BER? The answer is not straightforward, as both factors interact in complex ways and depend on the specific design and configuration of the optical link.

Generally speaking, higher data rates tend to lead to higher BER when everything else is held constant, because each bit carries less energy and the receiver admits more noise. However, the relationship is not a simple proportionality, as other factors such as the quality of the transmitter and receiver, the signal-to-noise ratio, and the modulation scheme all influence the BER. In some cases a higher-rate link can still deliver a lower BER than a slower one, for instance when it uses a more robust modulation scheme or a more sensitive receiver.

Moreover, different types of data may have different BER requirements, depending on their importance and the desired level of accuracy. For example, video data may be more tolerant of errors than financial data, which requires high accuracy and reliability.

BER vs. Modulation

Modulation is another critical factor that affects the BER of optical links. As we mentioned earlier, different modulation schemes have different levels of sensitivity to noise and other interferences, which can impact the BER. For example, QAM can achieve higher data rates than binary AM or FM at the same symbol rate, but because its constellation points are packed more closely it is also more susceptible to noise and distortion.

Therefore, the choice of modulation scheme should take into account the desired data rate, the noise level, and the quality of the transmitter and receiver. In some cases, a higher data rate may not be achievable or necessary, and a more robust modulation scheme may be preferred to improve the BER.
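The trade-off can be sketched with the standard textbook BER expressions for an additive white Gaussian noise channel with coherent detection. These are idealized assumptions and not a model of any particular optical receiver; the square M-QAM expression is the usual Gray-coding approximation, and the 12 dB operating point is arbitrary.

```python
import math


def q_func(x):
    """Gaussian tail probability Q(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2))


def ber_ook(ebn0):
    """On-off keying, Eb taken as the average energy per bit."""
    return q_func(math.sqrt(ebn0))


def ber_bpsk(ebn0):
    """Binary phase-shift keying (QPSK has the same BER per bit)."""
    return q_func(math.sqrt(2 * ebn0))


def ber_qam(m, ebn0):
    """Approximate BER of Gray-coded square M-QAM (M = 4, 16, 64, ...)."""
    k = math.log2(m)  # bits per symbol
    return (4 / k) * (1 - 1 / math.sqrt(m)) * q_func(math.sqrt(3 * k / (m - 1) * ebn0))


EBN0_DB = 12.0                 # arbitrary operating point for illustration
ebn0 = 10 ** (EBN0_DB / 10)    # convert dB to a linear ratio

for name, ber in (("BPSK", ber_bpsk(ebn0)), ("OOK", ber_ook(ebn0)),
                  ("16-QAM", ber_qam(16, ebn0)), ("64-QAM", ber_qam(64, ebn0))):
    print(f"{name:>6} at Eb/N0 = {EBN0_DB:g} dB: BER ~ {ber:.1e}")
```

Under these assumptions the formats rank from most to least noise-tolerant as BPSK, OOK, 16-QAM, 64-QAM at equal energy per bit, which is the price paid for carrying more bits per symbol.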

Real-World Examples

To illustrate the impact of data rate and modulation on BER, let’s consider two real-world examples.

In the first example, a telecom company wants to transmit high-quality video data over a long-distance optical link. The desired data rate is 1 Gbps, and the BER requirement is 10^-9. The company can choose between two modulation schemes: QAM and amplitude-shift keying (ASK).

QAM can reach the desired data rate of 1 Gbps, but it is also more sensitive to noise and distortion, which can increase the BER. ASK, on the other hand, reaches only 500 Mbps in this scenario but is more robust against noise and can achieve a lower BER. Therefore, depending on the noise level and the quality of the transmitter and receiver, the telecom company may choose ASK over QAM to meet its BER requirement, accepting that it falls short of the desired data rate.

In the second example, a financial institution wants to transmit sensitive financial data over a short-distance optical link. The minimum data rate it needs is 10 Mbps, and the BER requirement is 10^-12. The institution can choose between two data rates, 10 Mbps and 100 Mbps, both using phase modulation (PM).

Although the higher data rate of 100 Mbps can achieve faster transmission, it may not be necessary for financial data, which requires high accuracy and reliability. Therefore, the institution may choose the lower data rate of 10 Mbps, which can achieve a lower BER and meet its accuracy requirements.
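The BER targets in the two examples above can be translated into more tangible numbers. The sketch below computes the average time between errors at a given bit rate, and the number of error-free bits that must be observed to claim a BER target with a given confidence. The second function uses the common approximation N = -ln(1 - confidence) / BER, which assumes independent bit errors and a test in which zero errors are seen; the function names are illustrative.

```python
import math


def mean_seconds_between_errors(bit_rate_bps, ber):
    """Average time between bit errors at a given bit rate and BER."""
    return 1.0 / (bit_rate_bps * ber)


def bits_for_confidence(ber_target, confidence=0.95):
    """Error-free bits needed to claim BER <= ber_target at the given confidence.

    Approximation assuming independent errors and zero observed errors.
    """
    return math.ceil(-math.log(1.0 - confidence) / ber_target)


# (bit rate in bit/s, BER requirement) taken from the two scenarios above.
scenarios = {"video link": (1e9, 1e-9), "financial link": (10e6, 1e-12)}

for name, (rate, ber) in scenarios.items():
    gap = mean_seconds_between_errors(rate, ber)
    n_bits = bits_for_confidence(ber)
    print(f"{name}: one error every {gap:.3g} s on average; "
          f"{n_bits:.2e} error-free bits ({n_bits / rate:.3g} s of traffic) "
          f"for 95% confidence")
```

The comparison shows why very low BER targets on slow links are costly to verify: at 10 Mbps, a single expected error at 10^-12 takes about 10^5 seconds of traffic to appear.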

Conclusion

In conclusion, BER is a crucial metric in optical communication, and its value heavily depends on various factors, including data rate and modulation. Higher data rates tend to lead to higher BER, but other factors such as modulation schemes, noise level, and the quality of the transmitter and receiver can also influence the BER. Therefore, the choice of data rate and modulation should take into account the specific design and requirements of the optical link, as well as the type and importance of the transmitted data.

FAQs

- What is BER in optical communication?

BER stands for Bit Error Rate, which measures the probability of errors in digital data transmission over optical links.

- What factors affect the BER in optical communication?

Various factors can affect the BER in optical communication, including data rate, modulation, the quality of the transmitter and receiver, and the signal-to-noise ratio. The type and importance of the transmitted data do not change the BER itself, but they determine how low the BER needs to be.

- Does a higher data rate always lead to a higher BER in optical communication?

Not necessarily. Although higher data rates generally lead to a higher BER, other factors such as modulation schemes, noise level, and the quality of the transmitter and receiver can also influence the BER.

- What is the role of modulation in optical communication?

Modulation allows data to be encoded onto an optical carrier signal, which is then transmitted over an optical link. Different modulation schemes have different levels of sensitivity to noise and other interferences, which can impact the BER.

- How do real-world examples illustrate the impact of data rate and modulation on BER?

Real-world examples can demonstrate the interaction and trade-offs between data rate and modulation in achieving the desired BER and accuracy requirements for different types of data and applications. By considering specific scenarios and constraints, we can make informed decisions about the optimal data rate and modulation scheme for a given optical link.