Exploring the C+L Bands in DWDM Networks

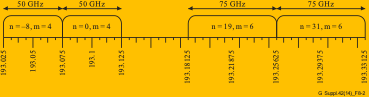

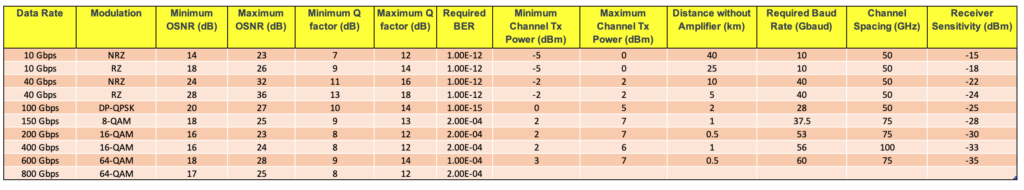

DWDM networks have traditionally operated in the C-band because silica fiber attenuation is lowest there and efficient Erbium-Doped Fiber Amplifiers (EDFAs) are available for it. Initially, the C-band supported a spectrum of 3.2 terahertz (THz), which has since been expanded to 4.8 THz to accommodate growing data traffic. The Japanese market adopted the L-band early on, and interest is now spreading globally as the L-band’s ability to double the spectrum capacity becomes crucial. Adding the L-band contributes another 4.8 THz, for a total of 9.6 THz when combined with the C-band.
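The spectrum figures above translate directly into channel counts. As a rough sketch, assuming a fixed channel grid (the 50 GHz and 75 GHz spacings below are illustrative assumptions, not values from this article, and `channel_count` is a hypothetical helper), the arithmetic looks like this:

```python
# Spectrum widths from the text, expressed in GHz to keep the arithmetic in integers.
C_BAND_GHZ = 4800  # 4.8 THz
L_BAND_GHZ = 4800  # 4.8 THz


def channel_count(spectrum_ghz: int, spacing_ghz: int) -> int:
    """Return how many fixed-grid channels of the given spacing fit in the spectrum."""
    if spacing_ghz <= 0:
        raise ValueError("spacing_ghz must be positive")
    return spectrum_ghz // spacing_ghz


total_ghz = C_BAND_GHZ + L_BAND_GHZ  # 9600 GHz = 9.6 THz

# Channel spacings are assumptions chosen for illustration only.
for spacing in (50, 75):
    c_only = channel_count(C_BAND_GHZ, spacing)
    c_plus_l = channel_count(total_ghz, spacing)
    print(f"{spacing} GHz grid: C only = {c_only} channels, C+L = {c_plus_l} channels")
```

This is an upper bound. A deployed system typically leaves a guard band between the C and L bands and may use a flexible grid, so real channel counts can be lower.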

What Does C+L Mean?

C+L band refers to two specific ranges of wavelengths used in optical fiber communications: the C-band and the L-band. The C-band ranges from approximately 1530 nm to 1565 nm, while the L-band covers from about 1565 nm to 1625 nm. These bands are crucial for transmitting signals over optical fiber, offering distinct characteristics in terms of attenuation, dispersion, and capacity.
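Because spectrum is usually quoted in THz while band edges are quoted in nm, it can help to convert between the two with f = c / λ. The following is a minimal sketch using the approximate band edges given above; the function and variable names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by SI definition

# Approximate band edges from the text, in nanometres (short edge, long edge).
BAND_EDGES_NM = {"C": (1530.0, 1565.0), "L": (1565.0, 1625.0)}


def wavelength_nm_to_thz(wavelength_nm: float) -> float:
    """Convert a vacuum wavelength in nanometres to an optical frequency in THz."""
    if wavelength_nm <= 0:
        raise ValueError("wavelength_nm must be positive")
    # c [m/s] / (lambda [nm] * 1e-9) gives Hz; dividing by 1e12 gives THz.
    return SPEED_OF_LIGHT_M_PER_S / (wavelength_nm * 1e3)


def band_width_thz(short_nm: float, long_nm: float) -> float:
    """Frequency width of a band; the shorter wavelength is the higher frequency."""
    return wavelength_nm_to_thz(short_nm) - wavelength_nm_to_thz(long_nm)


for name, (short_nm, long_nm) in BAND_EDGES_NM.items():
    low = wavelength_nm_to_thz(long_nm)
    high = wavelength_nm_to_thz(short_nm)
    print(f"{name}-band: {low:.2f} to {high:.2f} THz "
          f"(width {band_width_thz(short_nm, long_nm):.2f} THz)")
```

The nominal band edges do not necessarily match the amplified window a given line system uses, so the widths computed here need not equal the 4.8 THz per band quoted earlier.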

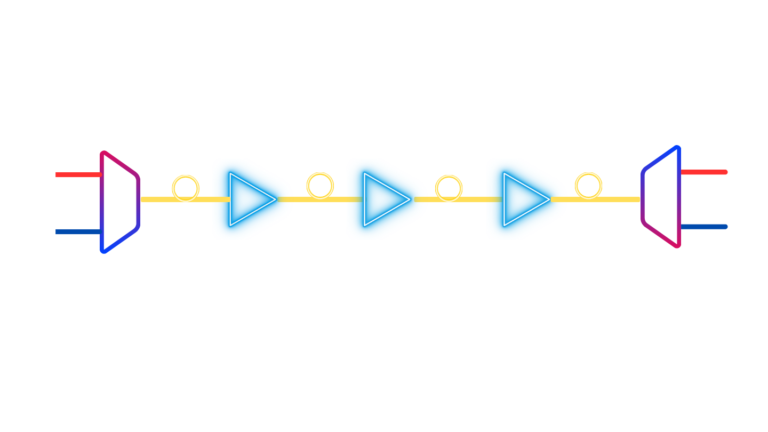

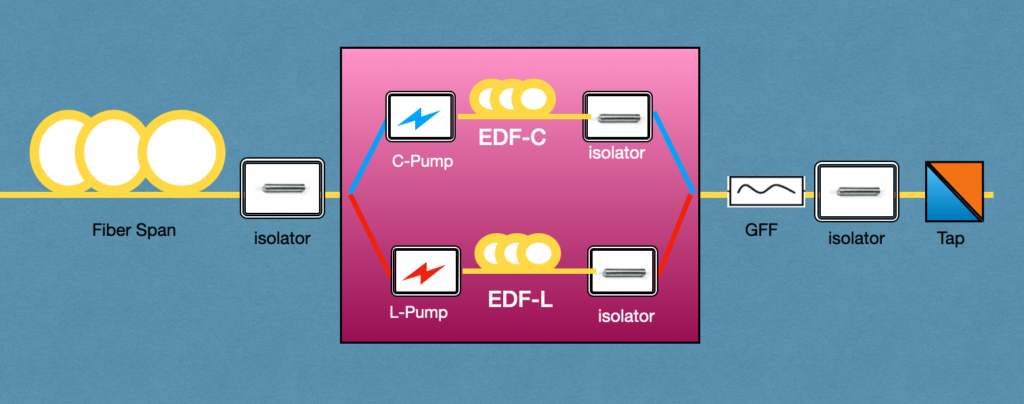

C+L Architecture

The Advantages of C+L

The adoption of C+L bands in fiber optic networks comes with several advantages, crucial for meeting the growing demands for data transmission and communication services:

- Increased Capacity: One of the most significant advantages of utilizing both C and L bands is the dramatic increase in network capacity. By essentially doubling the available spectrum for data transmission, service providers can accommodate more data traffic, which is essential in an era where data consumption is soaring due to streaming services, IoT devices, and cloud computing.

- Improved Efficiency: The use of C+L bands makes optical networks more efficient. By leveraging wider bandwidths, operators can optimize their existing infrastructure, reducing the need for additional physical fibers. This efficiency not only cuts costs but also accelerates the deployment of new services.

- Enhanced Flexibility: With more spectrum comes greater flexibility in managing and allocating resources. Network operators can dynamically adjust bandwidth allocations to meet changing demand patterns, improving overall service quality and user experience.

- Reduced Attenuation and Dispersion: The C and L bands differ in their optical properties. By managing signals in each band according to those properties, operators can limit the effects of signal attenuation and chromatic dispersion, extending transmission distance before signal regeneration is needed.

Challenges in C+L Band Implementation

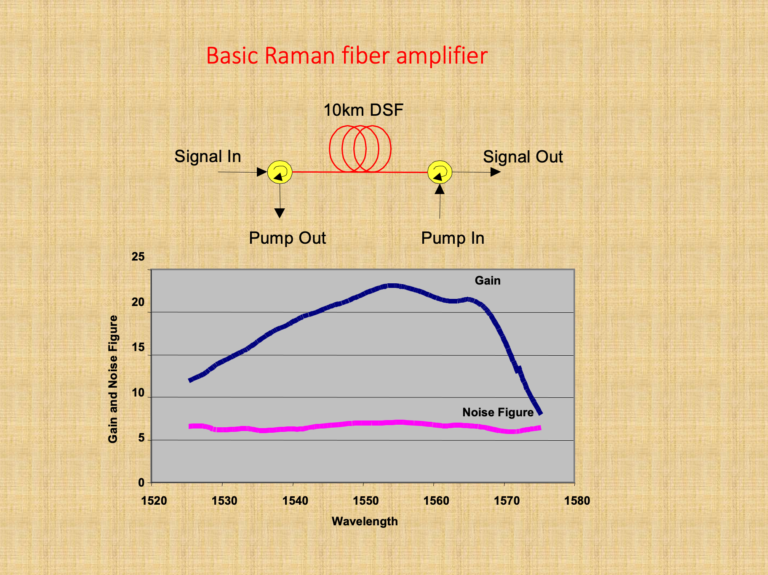

- Stimulated Raman Scattering (SRS): A significant challenge in C+L band usage is SRS, which transfers optical power from shorter-wavelength channels to longer-wavelength ones, tilting the power distribution from the C-band toward the L-band. This effect can create operational issues, such as longer recovery times from network failures, slow and complex provisioning due to the need to manage the power tilt between the bands, and restrictions on network topologies.

- Cost: The financial aspect is another hurdle. Doubling the components, such as amplifiers and wavelength-selective switches (WSS), can be costly. Network upgrades from C-band to C+L can often mean a complete overhaul of the existing line system, a deterrent for many operators if the L-band isn’t immediately needed.

- C+L Recovery Speed: Network recovery from failures can be sluggish, with times hovering around the 10-minute mark.

- C+L Provisioning Speed and Complexity: The provisioning process becomes more complicated, demanding careful management of the number of channels across bands.
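To get a feel for the size of the SRS power tilt mentioned in the list above, a commonly used first-order approximation treats the Raman gain as linear in frequency, so the tilt in dB grows in proportion to total launch power, effective fiber length, and occupied bandwidth. The sketch below applies that approximation. The Raman gain slope, fiber loss, span length, and launch powers are illustrative assumptions for standard single-mode fiber, not values from this article, and the function names are hypothetical.

```python
import math

DB_PER_NEPER = 10 / math.log(10)  # power ratio conversion, about 4.343


def effective_length_km(span_km: float, loss_db_per_km: float) -> float:
    """Effective nonlinear length of a span: (1 - exp(-alpha * L)) / alpha."""
    alpha_per_km = loss_db_per_km / DB_PER_NEPER
    return (1 - math.exp(-alpha_per_km * span_km)) / alpha_per_km


def srs_tilt_db(
    total_power_w: float,
    bandwidth_thz: float,
    span_km: float,
    loss_db_per_km: float = 0.2,               # assumed fiber loss
    raman_slope_per_w_km_thz: float = 0.028,   # assumed Raman gain slope
) -> float:
    """First-order estimate of the edge-to-edge SRS power tilt over one span, in dB."""
    l_eff = effective_length_km(span_km, loss_db_per_km)
    return DB_PER_NEPER * total_power_w * raman_slope_per_w_km_thz * l_eff * bandwidth_thz


# Assumed scenario: an 80 km span with 0.1 W of total launch power per band.
c_only = srs_tilt_db(total_power_w=0.1, bandwidth_thz=4.8, span_km=80)
c_plus_l = srs_tilt_db(total_power_w=0.2, bandwidth_thz=9.6, span_km=80)
print(f"C only: {c_only:.2f} dB tilt, C+L: {c_plus_l:.2f} dB tilt")
```

Under these assumptions, adding the L-band doubles both the total power and the bandwidth, so the estimated tilt grows by roughly a factor of four. That scaling is one way to see why power management across the two bands needs so much care during provisioning and recovery.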

The Future of C+L

The future of C+L in optical communications is bright, with several trends and developments on the horizon:

- Integration with Emerging Technologies: As 5G and beyond continue to roll out, the integration of C+L band capabilities with these new technologies will be crucial. The increased bandwidth and efficiency will support the ultra-high-speed, low-latency requirements of future mobile networks and applications.

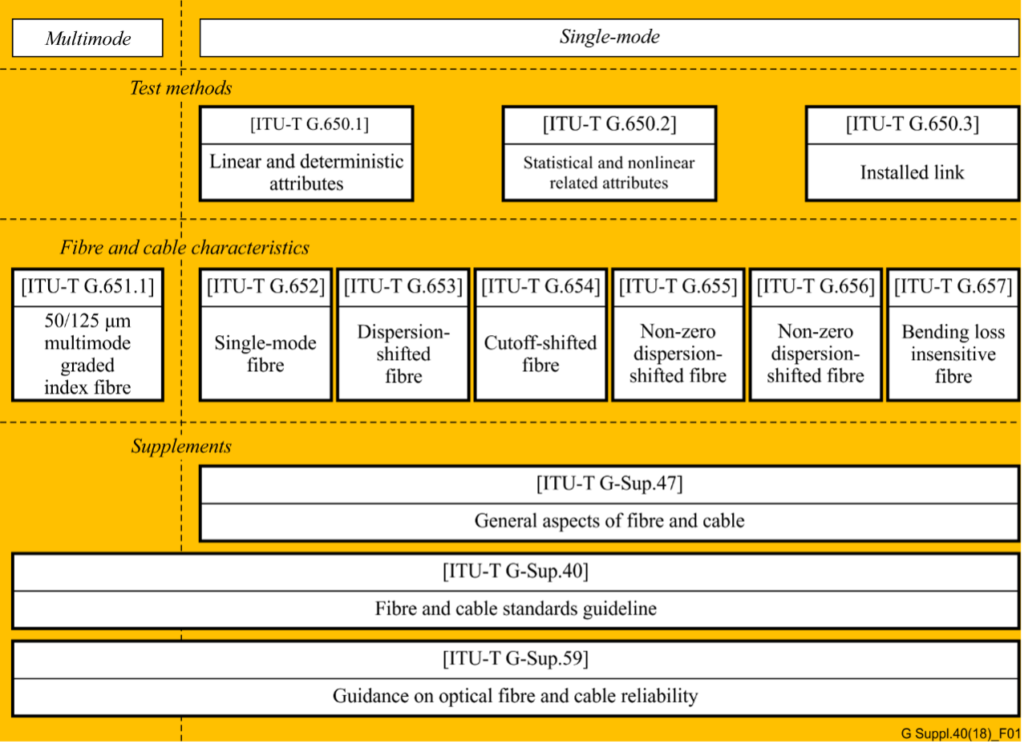

- Innovations in Fiber Optic Technology: Ongoing research in fiber optics, including new types of fibers and advanced modulation techniques, promises to further unlock the potential of the C+L bands. These innovations could lead to even greater capacities and more efficient use of the optical spectrum.

- Sustainability Impacts: With an emphasis on sustainability, the efficiency improvements associated with C+L band usage could contribute to reducing the energy consumption of data centers and network infrastructure, aligning with global efforts to minimize environmental impacts.

- Expansion Beyond Telecommunications: While currently most relevant to telecommunications, the benefits of C+L band technology could extend to other areas, including remote sensing, medical imaging, and space communications, where the demand for high-capacity, reliable transmission is growing.

In conclusion, the adoption and development of C+L band technology represent a significant step forward in the evolution of optical communications. By offering increased capacity, efficiency, and flexibility, C+L bands are well-positioned to meet the current and future demands of our data-driven world. As we look to the future, the continued innovation and integration of C+L technology into broader telecommunications and technology ecosystems will be vital in shaping the next generation of global communication networks.