Why Engineers Choose MapYourTech

Professionally written content from industry engineers. Learn 3× faster with searchable articles you can reference anytime.

Read at Your Own Pace

Scan headings, jump to what matters, and absorb content 3× faster than with videos. Skip what you already know and dive deep where you need to.

Find Answers Instantly

Search 666+ articles the way you search Google. Find formulas, commands, and troubleshooting steps in 30 seconds, not after 30 minutes of scrubbing through videos.

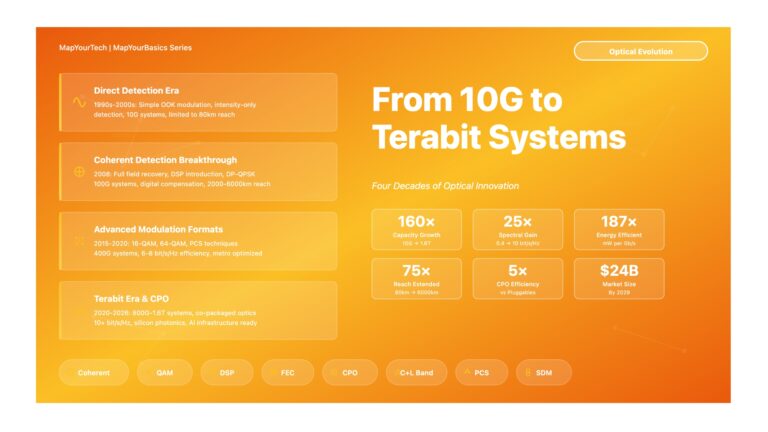

Updated Weekly Since 2011

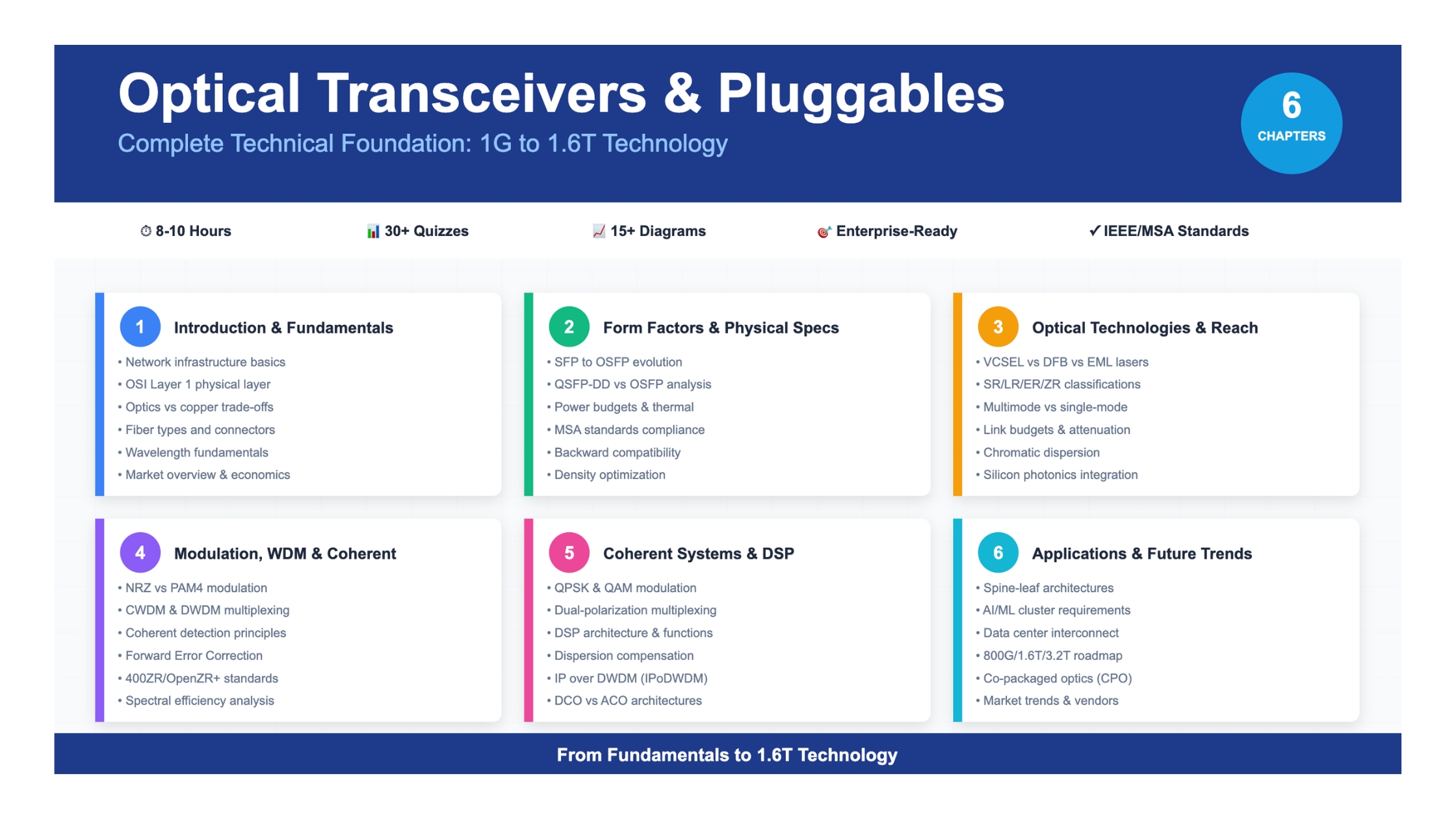

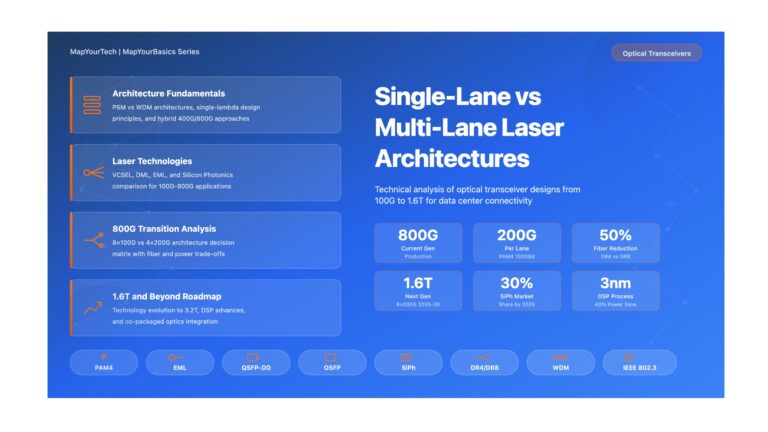

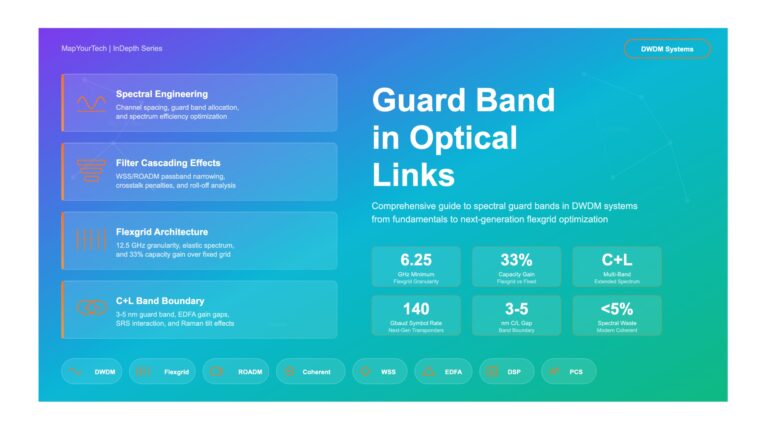

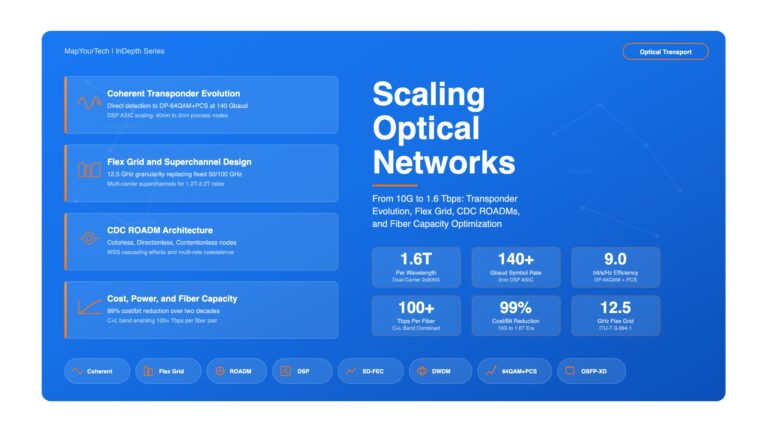

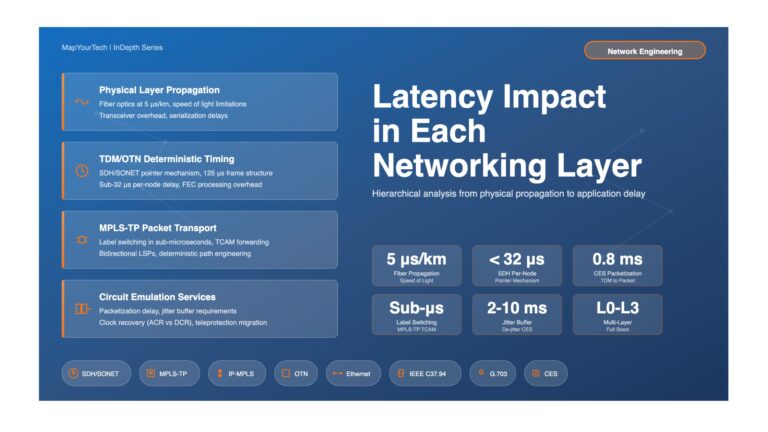

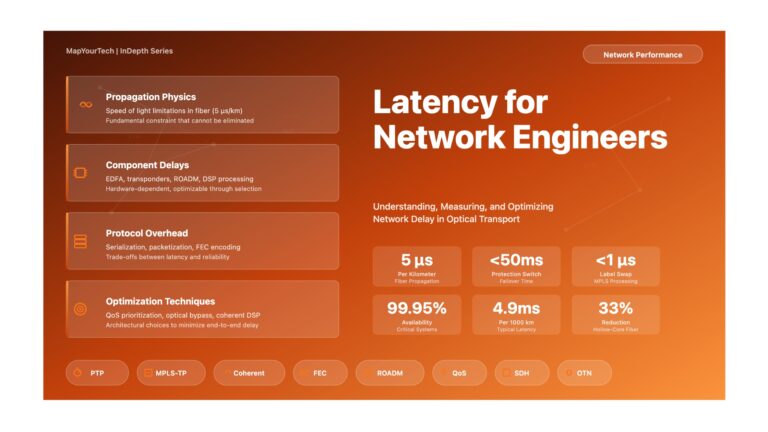

Learn 400G, 800G, and 1.6T technologies as they are deployed. Coverage includes ITU-T updates and vendor capabilities, and the content reflects 2026 standards.

Interactive Tools & Simulators

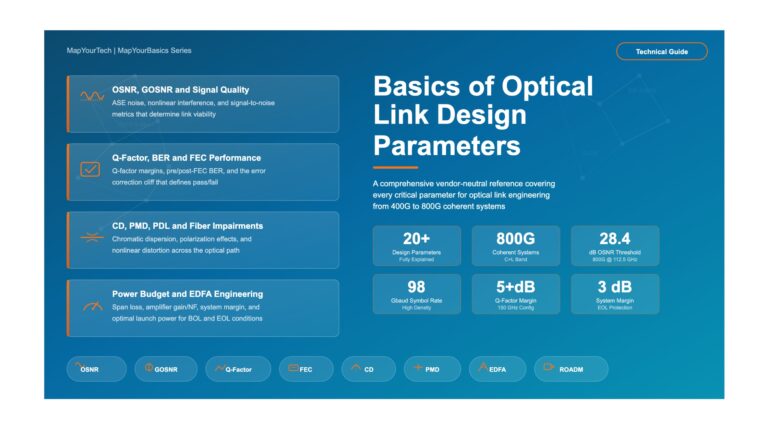

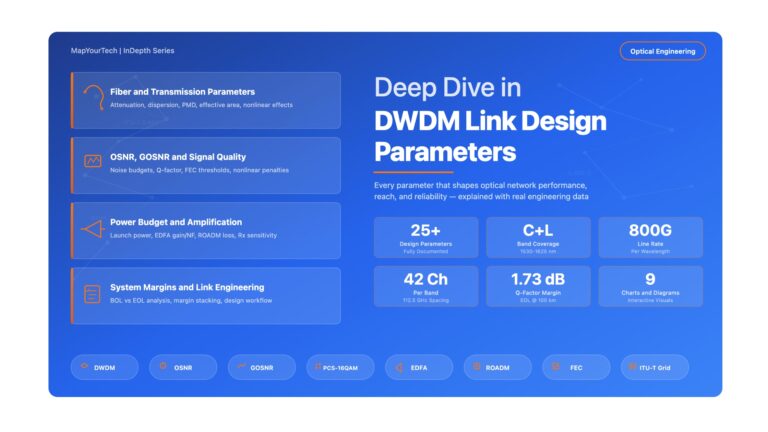

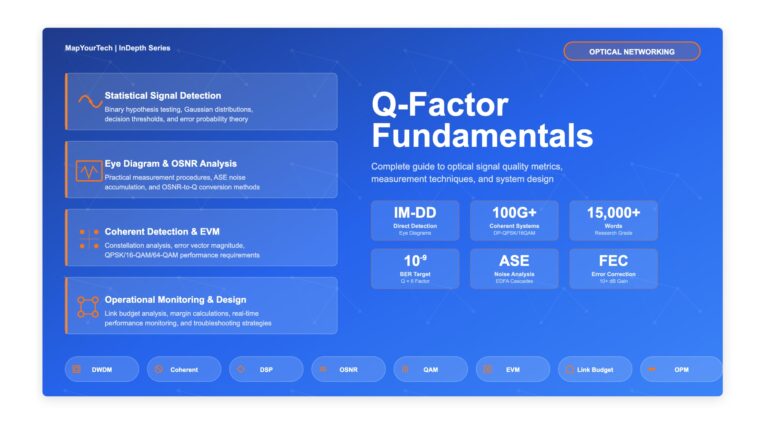

OSNR calculators, link budget planners, and dispersion tools. Enter your parameters and get instant results you can apply to real network planning.

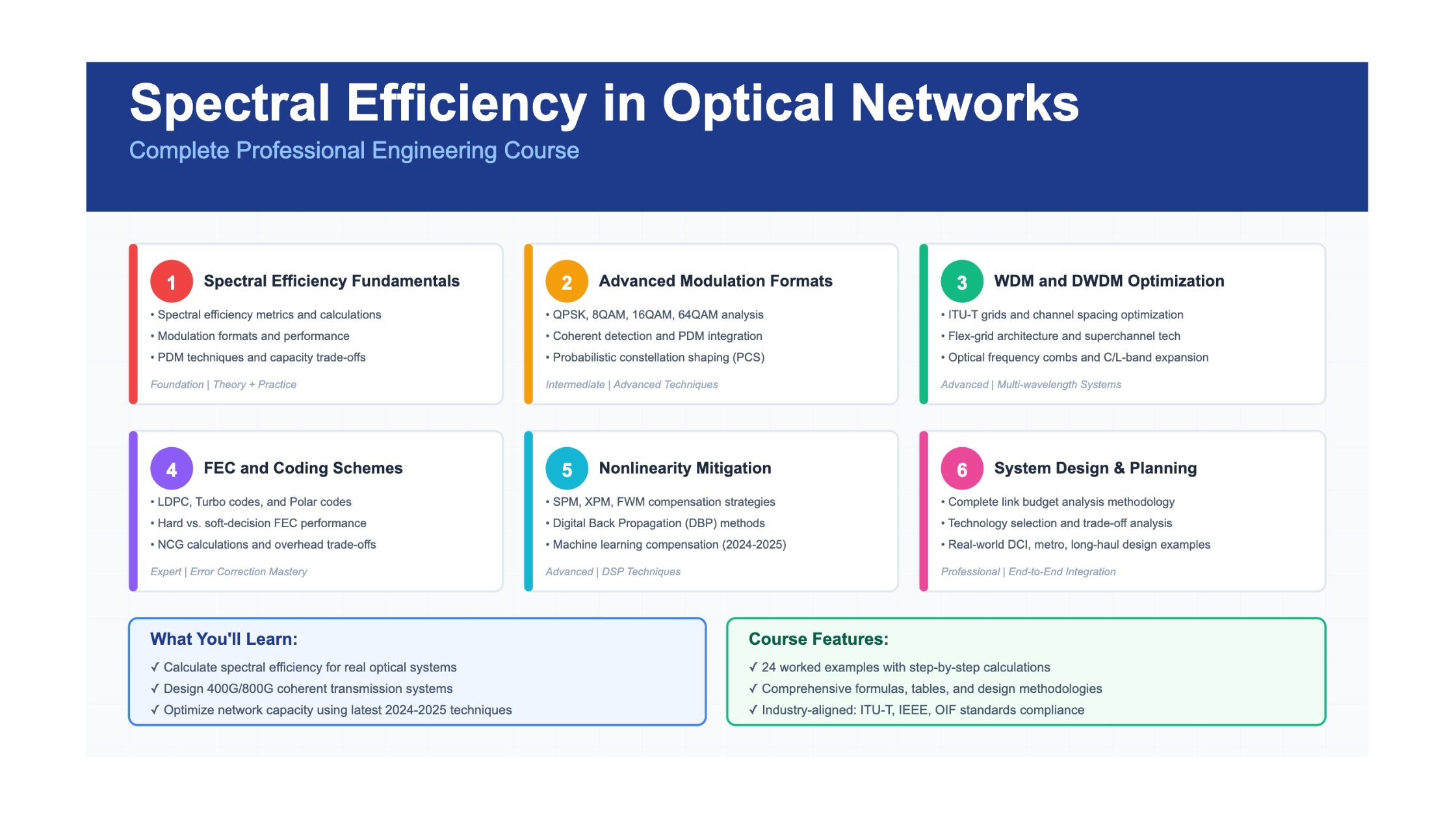

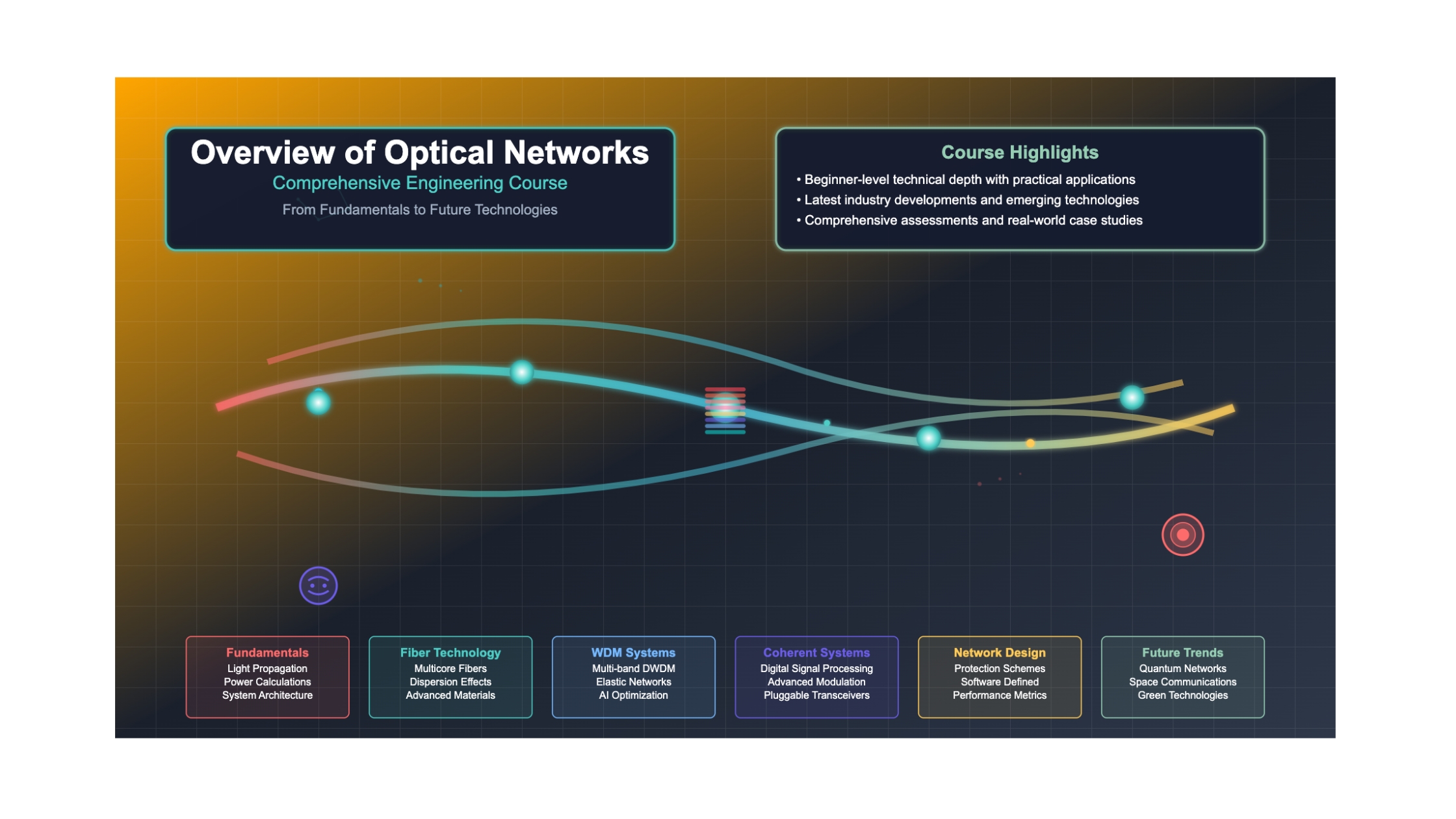

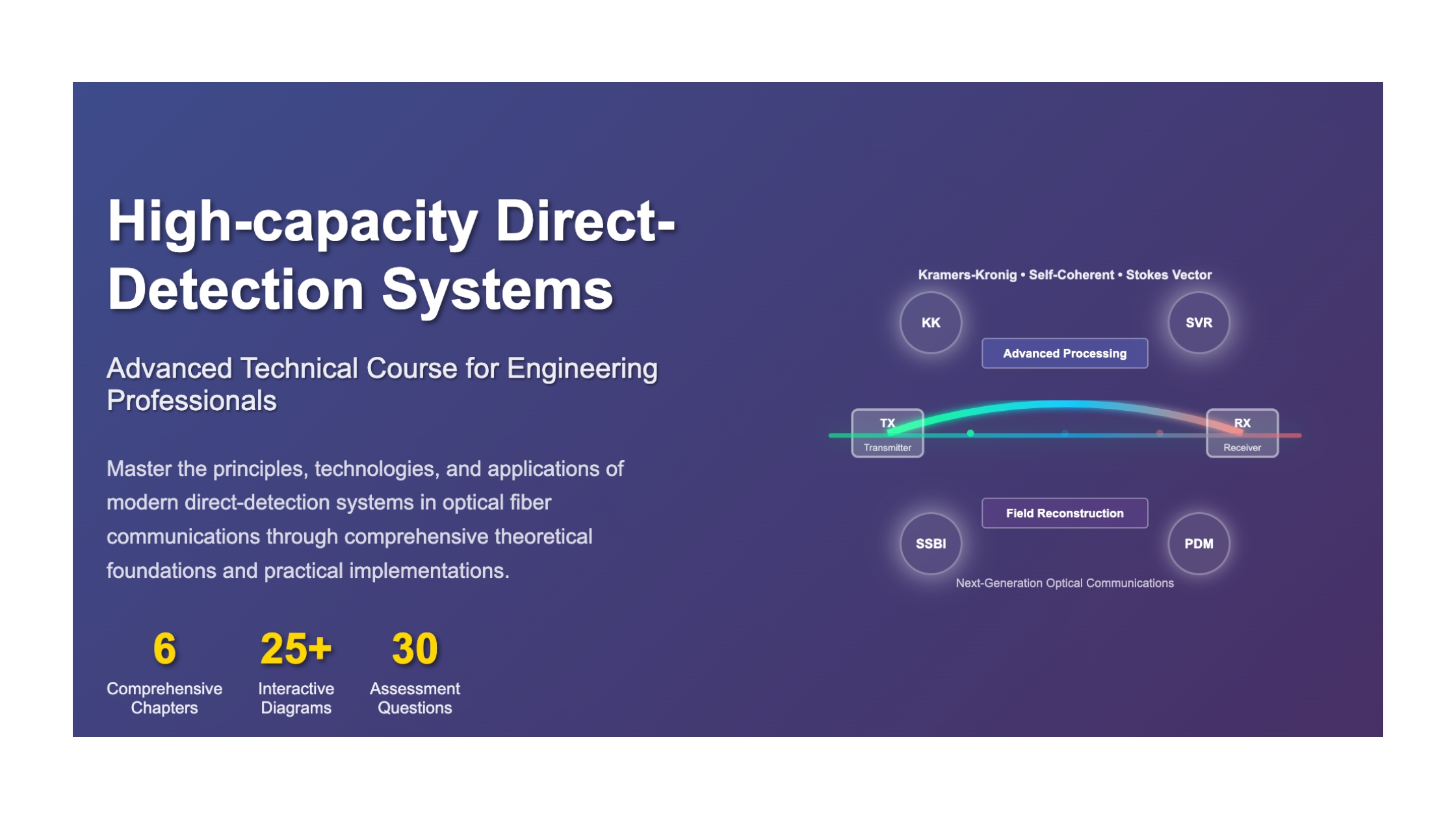

Books Written from Experience

Comprehensive books on optical communications, Python automation, and interview preparation, written by engineers who have deployed networks at scale.

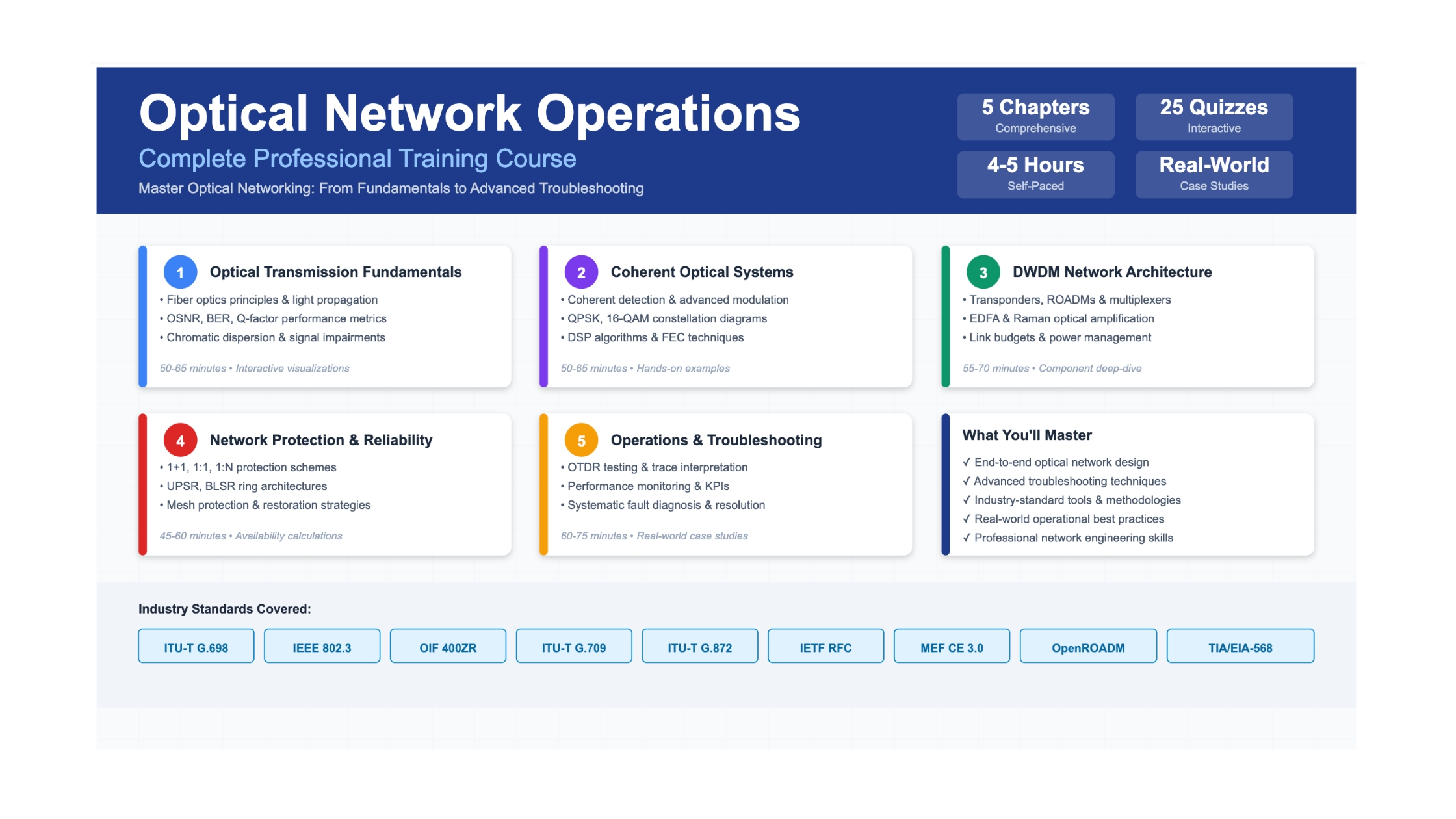

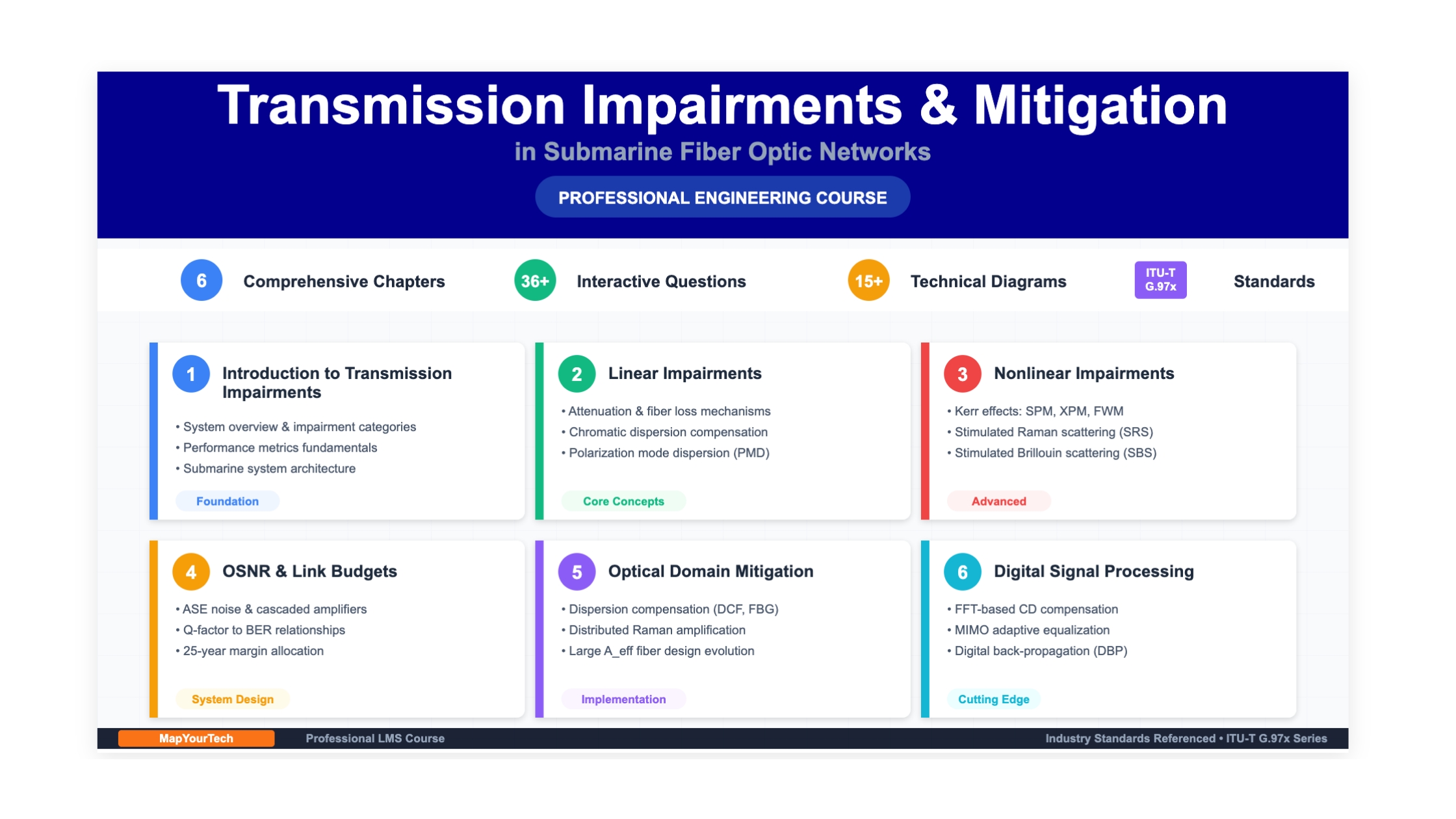

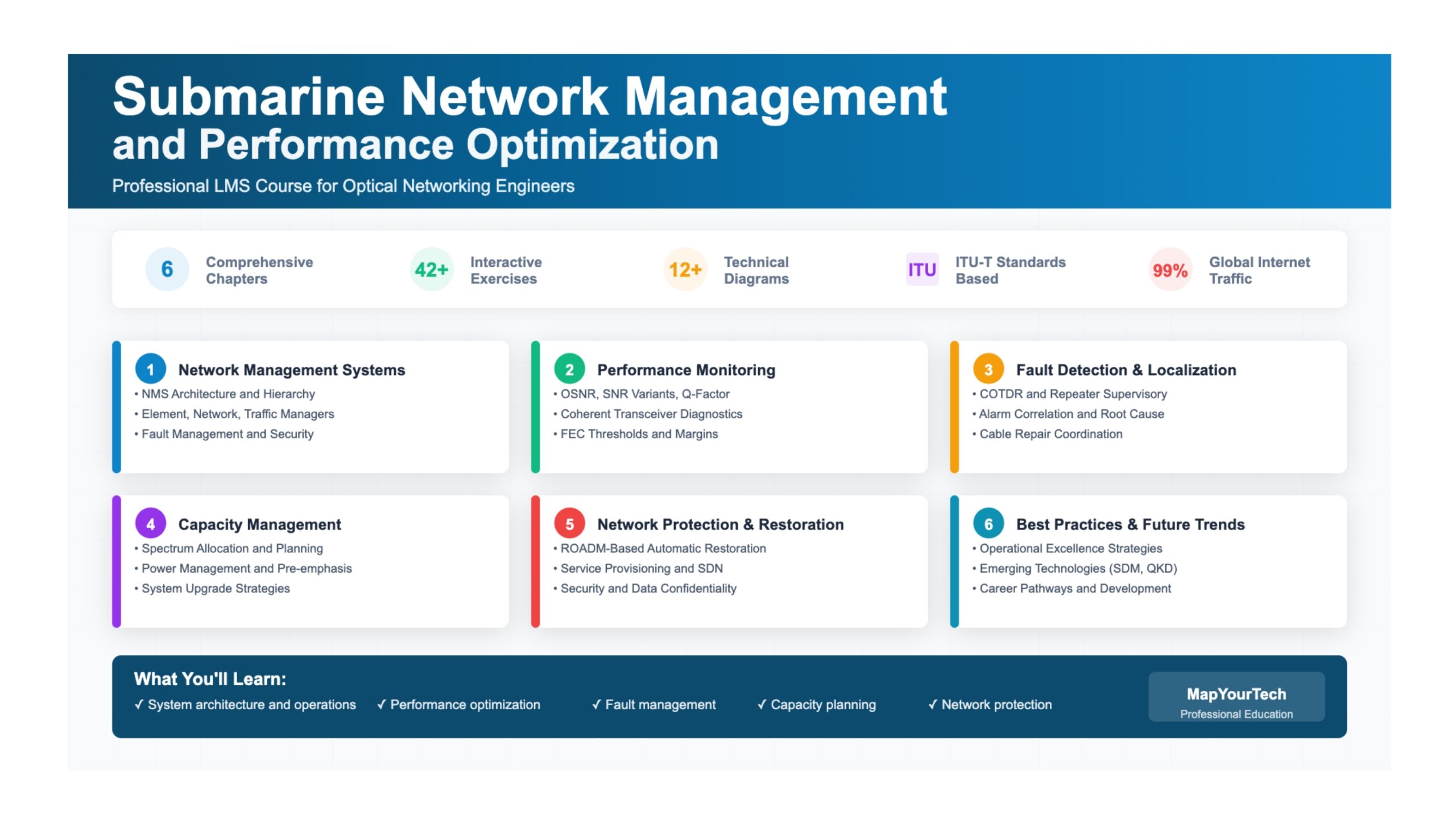

Earn Professional Certificates

Choose from 50+ courses and earn a certificate for each one you complete. Share your certificates on LinkedIn, add them to your resume, and showcase your optical networking expertise.