
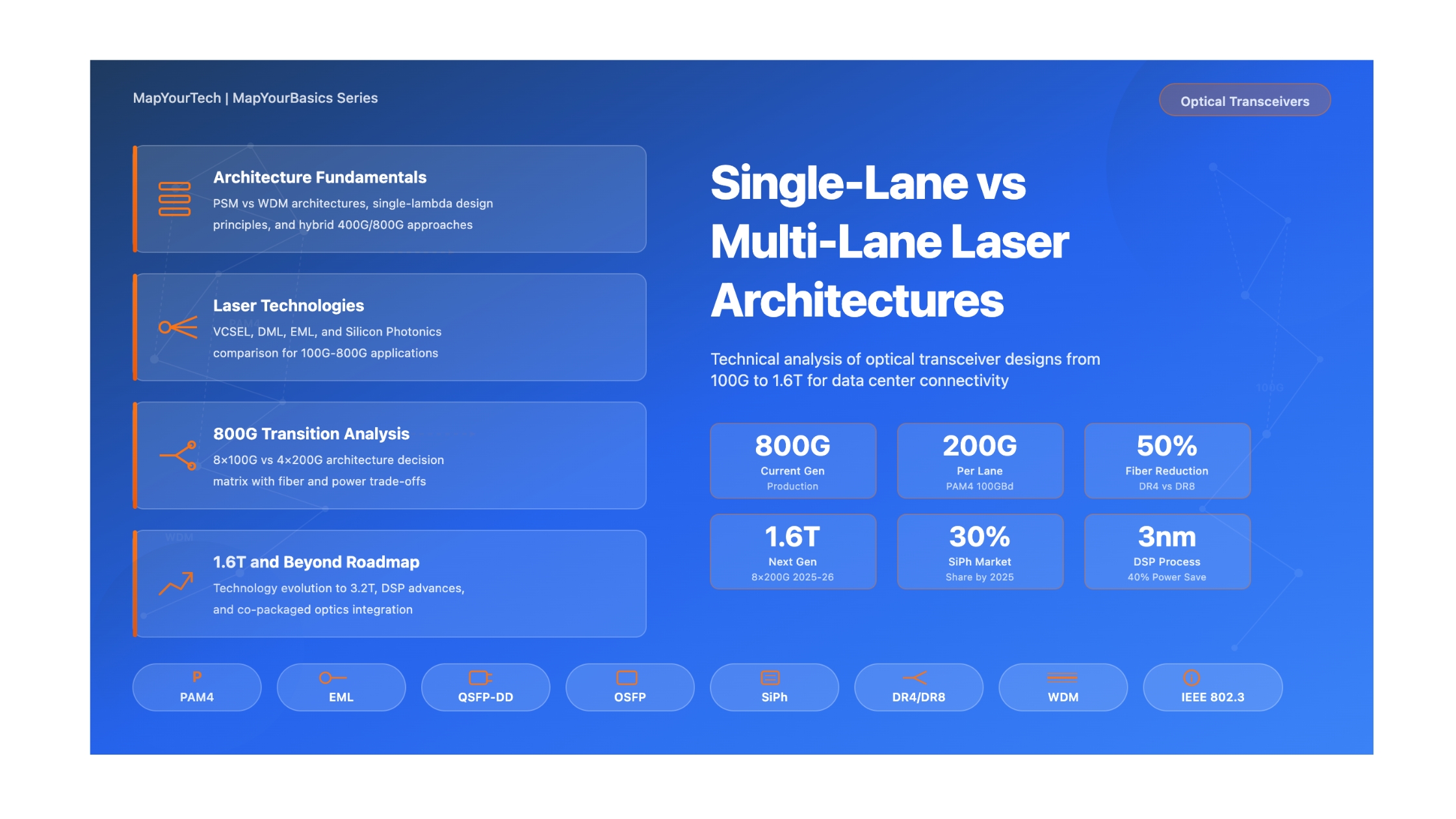

Single-Lane vs Multi-Lane Laser Architectures in Optical Transceivers

A comprehensive technical analysis of parallel optics, wavelength multiplexing, and single-lambda technologies driving 100G to 1.6T data center connectivity

1. Introduction

The optical transceiver industry stands at an inflection point. As data centers scale to meet the demands of artificial intelligence workloads, cloud computing, and hyperscale infrastructure, engineers face a fundamental architectural decision: how should aggregate bandwidth be divided across optical lanes? This choice between single-lane (single-lambda) and multi-lane approaches affects everything from module cost and power consumption to fiber infrastructure requirements and future upgrade paths.

The question appears deceptively simple. Should a 400G transceiver use four lasers transmitting 100G each, or pursue alternative configurations? Should 800G be achieved through eight 100G lanes, four 200G lanes, or something entirely different like coherent detection? The answer depends on a complex interplay of laser technology maturity, Digital Signal Processor (DSP) capabilities, target reach requirements, thermal constraints, and total cost of ownership. Understanding these trade-offs is essential for network architects designing the next generation of data center interconnects.

This article provides a detailed technical examination of single-lane and multi-lane laser architectures in modern optical transceivers. We explore the underlying physics that govern each approach, analyze the component technologies that enable them, compare their performance characteristics across different deployment scenarios, and chart the industry trajectory from current 400G/800G solutions toward emerging 1.6T and 3.2T standards. The goal is to equip optical networking professionals with the knowledge needed to make informed architectural decisions as data rates continue their relentless climb.

Scope of This Analysis

This article focuses on pluggable transceiver modules in the QSFP-DD, OSFP, and emerging OSFP-XD form factors. We examine direct-detect (intensity modulation) systems covering short-reach (SR), medium-reach (DR/FR), and long-reach (LR) applications, with particular attention to the 100m to 10km range where architectural choices have the greatest impact. Coherent transceivers for data center interconnect (ZR/ZR+) are discussed where they represent alternative approaches to achieving similar bandwidth goals.

2. Historical Evolution of Multi-Lane Optics

Understanding today's architectural landscape requires context on how the industry arrived at its current state. The evolution from early single-lane systems through parallel optics to modern hybrid approaches reflects the interplay between laser technology advancement and the insatiable demand for bandwidth.

2.1 The Birth of Parallel Optics (2010-2015)

When the IEEE ratified the 802.3ba standard in June 2010, it marked a watershed moment for 100 Gigabit Ethernet. The standard included 100GBASE-LR4, which distributed 100G across four 25G wavelength channels using LAN Wavelength Division Multiplexing (LAN-WDM) around 1310nm. This multi-lane approach was not merely a design preference but a necessity dictated by the technology limitations of that era.

In 2010, switch and router ASICs operated with 10G SerDes interfaces, and no commercially viable single-laser technology could modulate at 100G rates. The solution was elegant in its simplicity: combine four 25G optical channels, each using mature Non-Return-to-Zero (NRZ) modulation with established laser and photodetector technology. The CFP (C Form-Factor Pluggable) modules that implemented LR4 accepted a 10x10G electrical interface (CAUI) from the host and used an internal gearbox to map it onto the four 25G optical lanes. They were large by today's standards, but they delivered 100G connectivity using proven components.

The parallel approach extended to multimode fiber with 100GBASE-SR10 (ten 10G lanes) in the same standard, and later with 100GBASE-SR4 (IEEE 802.3bm, 2015), which used four 25G VCSEL (Vertical-Cavity Surface-Emitting Laser) lanes over parallel multimode fibers with MPO connectors. This architecture established the pattern that would dominate the next decade: achieve higher aggregate rates by adding parallel lanes rather than increasing per-lane speed.

2.2 The QSFP28 Era and Cost Optimization (2015-2020)

As 100G technology matured, the industry sought smaller, lower-cost modules for data center deployment. The QSFP28 form factor, roughly the size of a highlighter marker, represented a dramatic size reduction from CFP. This miniaturization was enabled by the move to a 4x25G electrical interface (CAUI-4), which matched the four optical lanes and removed the need for a gearbox, by improved CMOS fabrication technology that allowed more functionality in smaller silicon area, and by the introduction of KR4 Forward Error Correction (FEC) that relaxed optical performance requirements.

The Multi-Source Agreement (MSA) organizations responded to data center demand with cost-optimized variants. The 100G CWDM4 MSA reduced costs compared to IEEE LR4 by using wider wavelength spacing (20nm versus 4.5nm in LR4), enabling less expensive optical multiplexers and demultiplexers. The PSM4 MSA used parallel single-mode fibers with four 25G lanes at a single wavelength, trading fiber count for component simplicity.

Key milestones in this evolution:

- IEEE 802.3ba (2010) - 100G Ethernet Standard: 100GBASE-LR4 (4x25G LAN-WDM) and 100GBASE-SR10 (10x10G parallel MMF) established multi-lane architecture as the industry norm for high-speed optical transmission. 100GBASE-SR4 (4x25G parallel MMF) followed in IEEE 802.3bm.

- QSFP28 Form Factor Adoption: Smaller modules with a 4x25G electrical interface enable higher port density. CWDM4 and PSM4 MSAs provide cost-optimized alternatives to IEEE specifications.

- IEEE 802.3cd (2018) - Single-Lambda 100G: PAM4 modulation enables 100G on a single wavelength. 100GBASE-DR, together with the companion 100G-FR and 100G-LR specifications (later standardized as 100GBASE-FR1 and 100GBASE-LR1), marks the transition toward single-lane architecture for 100G.

- 400G Production Ramp: QSFP-DD and OSFP form factors with an 8x50G electrical interface. 400GBASE-DR4 uses 4x100G single-lambda lanes over parallel SMF.

- 800G Deployment Begins: 8x100G and 4x200G architectures compete. AI data center demand accelerates adoption. Silicon photonics emerges as a mainstream technology option.

- 1.6T Qualification and Early Deployment: Hyperscalers qualify 1.6T DR8 modules. 200G/lane EMLs enter production. OSFP-XD form factor standardized for next-generation density.

2.3 The PAM4 Revolution (2018-Present)

The introduction of PAM4 (4-level Pulse Amplitude Modulation) fundamentally changed the architectural calculus. PAM4 encodes two bits per symbol rather than the single bit of NRZ, effectively doubling spectral efficiency. A 25 GBaud PAM4 signal carries 50 Gbps; a 50 GBaud PAM4 signal carries 100 Gbps.
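The nominal 25/50 GBaud figures hide some coding overhead. The minimal Python sketch below derives the actual symbol rate by applying 64b/66b line coding, 256b/257b transcoding, and RS(544,514) KP4 FEC to the MAC rate, then dividing by log2 of the number of amplitude levels. It assumes the standard 100G/200G Ethernet PCS and FEC chain and ignores any additional inner FEC that some 200G-per-lane optical interfaces may add; the function names are illustrative only.

```python
from fractions import Fraction
import math

# Overheads in the 100G/200G Ethernet PAM4 chain (assumed for this illustration):
PCS_64B66B = Fraction(66, 64)            # 64b/66b line coding
TRANSCODE_256B257B = Fraction(257, 264)  # four 66-bit blocks -> one 257-bit block
KP4_FEC = Fraction(544, 514)             # RS(544,514) "KP4" FEC


def line_rate_gbps(mac_rate_gbps: int) -> Fraction:
    """Serial bit rate after line coding, transcoding and KP4 FEC."""
    return mac_rate_gbps * PCS_64B66B * TRANSCODE_256B257B * KP4_FEC


def symbol_rate_gbaud(bit_rate_gbps: Fraction, levels: int) -> Fraction:
    """Symbol rate = bit rate / bits per symbol, where bits per symbol = log2(levels)."""
    bits_per_symbol = int(math.log2(levels))
    return bit_rate_gbps / bits_per_symbol


for mac in (100, 200):
    rate = line_rate_gbps(mac)
    pam4 = symbol_rate_gbaud(rate, 4)
    nrz = symbol_rate_gbaud(rate, 2)
    print(f"{mac}G: {float(rate)} Gb/s line rate, "
          f"PAM4 {float(pam4)} GBd, NRZ {float(nrz)} GBd")
```

For 100G this gives a 106.25 Gb/s line rate, or 53.125 GBaud in PAM4, which is the 100GBASE-DR signaling rate. A single 200G lane lands at 106.25 GBaud before any extra optical FEC, consistent with the "approximately 100 GBaud" figure used for 200G lanes.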

This technology enabled single-lambda 100G for the first time. IEEE 802.3cd, ratified in 2018, specified 100GBASE-DR (500m reach) using a single PAM4 wavelength at 1310nm running at roughly 53 GBaud. The 100G Lambda MSA defined companion 2km (100G-FR) and 10km (100G-LR) variants, later standardized by IEEE 802.3cu as 100GBASE-FR1 and 100GBASE-LR1. The implications were profound: what previously required four lasers, four receivers, and wavelength multiplexing could now be achieved with a single laser-receiver pair.

The trade-off was signal integrity. At the same peak-to-peak amplitude, each of PAM4's three "eye" openings is only one-third the height of NRZ's single eye (a penalty of about 9.5 dB), making PAM4 signals more susceptible to noise and dispersion. This necessitated stronger Forward Error Correction (KP4 FEC instead of KR4) and imposed reach limitations compared to NRZ systems operating at the same baud rate. However, the cost and complexity advantages of single-lambda designs proved compelling for many data center applications.
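The 9.5 dB PAM4 penalty and the roughly 17 dB NRZ requirement at BER = 10^-12 can be reproduced with the textbook Gaussian-noise model. This Python sketch assumes equal Gaussian noise on every level, Gray coding for PAM4, and SNR expressed as 20·log10 of the Q-factor; real links add ISI, laser RIN, and other impairments that it ignores.

```python
import math


def q_function(x: float) -> float:
    """Gaussian tail probability Q(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2))


def ber_nrz(q: float) -> float:
    """NRZ bit error rate for Q-factor q (Gaussian noise, equal on both levels)."""
    return q_function(q)


def ber_pam4(q_eye: float) -> float:
    """Approximate Gray-coded PAM4 BER for a per-eye Q-factor q_eye.

    Symbol error rate is 2 * (1 - 1/4) * Q(q_eye) = 1.5 * Q(q_eye); with Gray
    coding a symbol error costs about one of the two bits, so BER ~ SER / 2.
    """
    return 0.75 * q_function(q_eye)


def q_for_ber(target_ber: float, ber_fn) -> float:
    """Find the Q-factor meeting target_ber by bisection (BER falls as Q rises)."""
    lo, hi = 0.0, 20.0
    for _ in range(100):
        mid = (lo + hi) / 2
        if ber_fn(mid) > target_ber:
            lo = mid
        else:
            hi = mid
    return hi


# At equal peak-to-peak swing, each PAM4 eye is one third of the NRZ eye.
eye_penalty_db = 20 * math.log10(3)
q_nrz = q_for_ber(1e-12, ber_nrz)
q_pam4 = q_for_ber(1e-12, ber_pam4)
snr_nrz_db = 20 * math.log10(q_nrz)
snr_pam4_db = 20 * math.log10(3 * q_pam4)  # on the same amplitude scale as NRZ
print(f"eye penalty {eye_penalty_db:.2f} dB, "
      f"NRZ {snr_nrz_db:.1f} dB, PAM4 {snr_pam4_db:.1f} dB")
```

It reports a 9.54 dB eye penalty and required SNRs close to the roughly 17 dB (NRZ) and 26.5 dB (PAM4) usually quoted for BER = 10^-12.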

Figure 2: NRZ vs PAM4 Modulation - Eye Diagrams and Signal Characteristics

Engineering Insight

The transition from multi-lane NRZ to single-lane PAM4 represents a fundamental trade-off between optical and electronic complexity. Multi-lane NRZ systems require multiple lasers, receivers, and often wavelength multiplexers, but use simpler signal processing. Single-lane PAM4 systems reduce optical components but require sophisticated DSP for signal equalization, timing recovery, and forward error correction. The optimal choice depends on relative technology maturity, cost structures, and specific application requirements.

3. Architectural Fundamentals

To make informed decisions about single-lane versus multi-lane architectures, engineers must understand the component technologies, signal processing requirements, and system-level implications of each approach. This section examines the building blocks of modern optical transceivers and how they combine in different architectural configurations.

3.1 Multi-Lane Architecture: Parallel Paths to Bandwidth

Multi-lane architectures achieve aggregate bandwidth by operating multiple optical channels in parallel. These channels may use parallel fibers (parallel single-mode or multimode), wavelength division multiplexing over a single fiber pair, or combinations of both approaches. The fundamental advantage is that each lane operates at a lower data rate than the aggregate, relaxing requirements on individual components.

Parallel Single-Mode (PSM) Architecture

- Configuration: 4 or 8 parallel fibers per direction

- Wavelength: All lanes at 1310nm

- Connector: MPO-12 or MPO-16

- Example Standards: 400GBASE-DR4, 800GBASE-DR8

- Typical Reach: 500m (DR), 2km (FR)

Wavelength Division Multiplexing (WDM)

- Configuration: 4 or 8 wavelengths on duplex fiber

- Wavelength: LAN-WDM or CWDM around 1310nm

- Connector: Duplex LC

- Example Standards: 100GBASE-LR4, 400GBASE-LR8

- Typical Reach: 10km (LR)

Parallel Single-Mode (PSM) Systems

PSM architectures use multiple fibers to carry multiple optical lanes, all operating at the same wavelength. The 400GBASE-DR4 standard exemplifies this approach: four lanes of 100G PAM4 at 1310nm transmit over four single-mode fibers to the far end, where four separate receivers detect the signals. An MPO-12 connector houses the fiber array (4 transmit, 4 receive, 4 unused).

The primary advantage of PSM is component simplicity. All lasers operate at the same wavelength, eliminating the need for wavelength multiplexers and demultiplexers. Manufacturing tolerances are relaxed because wavelength accuracy is not critical for channel isolation. The laser array can use a single laser source split to multiple modulators (as in some silicon photonics implementations) or discrete lasers, depending on cost and performance targets.

The trade-off is fiber consumption. A 400G-DR4 link requires 8 fibers (4 per direction). An 800G-DR8 link requires 16 fibers. In data centers with structured cabling, this fiber count may be acceptable, especially for short reaches where fiber cost is a small fraction of total link cost. However, for longer runs or environments with limited fiber plant, the fiber requirement becomes a significant consideration.
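Fiber consumption follows directly from lane count and whether lanes share a fiber. A minimal Python sketch, assuming PSM uses one fiber per lane in each direction and WDM multiplexes all lanes onto one duplex pair; the `OpticalLink` class is a hypothetical helper, not a standard data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OpticalLink:
    """Hypothetical model of a module's optical lanes (not from any standard)."""
    name: str
    lanes: int       # optical lanes per direction
    parallel: bool   # True: one fiber per lane (PSM); False: all lanes WDM-muxed

    def fibers(self) -> int:
        """Fibers per link, counting both transmit and receive directions."""
        per_direction = self.lanes if self.parallel else 1
        return 2 * per_direction


def connector_utilization(fibers_used: int, connector_positions: int) -> float:
    """Fraction of an MPO ferrule's positions that actually carry light."""
    return fibers_used / connector_positions


links = [
    OpticalLink("400G-DR4", lanes=4, parallel=True),
    OpticalLink("800G-DR8", lanes=8, parallel=True),
    OpticalLink("800G-DR4", lanes=4, parallel=True),
    OpticalLink("400G-FR4", lanes=4, parallel=False),
]
for link in links:
    print(f"{link.name}: {link.fibers()} fibers")

# DR4 on MPO-12 lights 8 of 12 positions; DR8 on MPO-16 lights all 16.
print(f"DR4 on MPO-12: {connector_utilization(8, 12):.0%}")
print(f"DR8 on MPO-16: {connector_utilization(16, 16):.0%}")
```

The model makes the trade explicit: going from 4 to 8 parallel lanes doubles fiber count, while the WDM variant stays at two fibers regardless of lane count.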

Figure 1: Parallel Single-Mode (PSM) vs Wavelength Division Multiplexing (WDM) Architectures

Wavelength Division Multiplexing (WDM) Systems

WDM architectures multiplex multiple wavelengths onto a single fiber pair, reducing fiber consumption at the cost of optical complexity. The 100GBASE-LR4 standard uses four wavelengths around 1310nm, spaced approximately 4.5nm apart according to the LAN-WDM grid. Each wavelength carries 25G NRZ, and optical multiplexers combine them for transmission over duplex fiber.
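The LAN-WDM grid is defined in frequency (800 GHz spacing), so its wavelength spacing is only approximately uniform. This Python sketch converts the four 100GBASE-LR4 center frequencies to wavelengths and compares their spacing with the 20nm CWDM4 grid; the helper names are illustrative.

```python
C_M_PER_S = 299_792_458  # speed of light in vacuum, m/s

# 100GBASE-LR4 LAN-WDM center frequencies (THz) on an 800 GHz grid.
LAN_WDM_THZ = (231.4, 230.6, 229.8, 229.0)
# CWDM4 center wavelengths (nm) on a 20 nm grid.
CWDM4_NM = (1271.0, 1291.0, 1311.0, 1331.0)


def thz_to_nm(freq_thz: float) -> float:
    """Vacuum wavelength (nm) for an optical frequency given in THz."""
    return C_M_PER_S / (freq_thz * 1e12) * 1e9


def spacings(values):
    """Differences between neighbouring channels."""
    return [b - a for a, b in zip(values, values[1:])]


lan_wdm_nm = [thz_to_nm(f) for f in LAN_WDM_THZ]
print("LAN-WDM centers (nm):", [round(x, 2) for x in lan_wdm_nm])
print("LAN-WDM spacing (nm):", [round(s, 2) for s in spacings(lan_wdm_nm)])
print("CWDM4 spacing (nm):", spacings(CWDM4_NM))
```

The LAN-WDM centers come out at about 1295.56, 1300.05, 1304.58, and 1309.14 nm, with spacings between 4.49 and 4.56 nm, compared with a flat 20 nm for CWDM4.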

WDM systems require precise wavelength control to ensure proper channel separation. Each laser must operate within its designated wavelength band, and the multiplexer/demultiplexer filters must align with these wavelengths. This imposes tighter manufacturing tolerances and, for closely spaced grids such as LAN-WDM, typically requires temperature stabilization of the laser sources (via thermoelectric coolers), adding cost and power consumption. The wider 20nm CWDM grid tolerates more wavelength drift, which allows CWDM4 modules to use uncooled lasers.
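A rough worked example shows why tight grids need a thermoelectric cooler (TEC) while a 20nm CWDM grid can run uncooled. The Python sketch below assumes a typical uncooled DFB tuning coefficient of about 0.1 nm/°C, a 0-70 °C operating range, and approximate channel half-widths of ±1 nm for LAN-WDM and ±6.5 nm for CWDM. These are illustrative assumptions, not values quoted from a specification, and the sketch ignores the laser's initial wavelength tolerance and the fact that the chip runs hotter than the module case.

```python
TEMP_COEFF_NM_PER_C = 0.1  # assumed typical uncooled DFB drift, not a spec value
SPAN_C = 70.0              # assumed 0-70 C operating range


def half_excursion_nm(temp_span_c: float, coeff: float = TEMP_COEFF_NM_PER_C) -> float:
    """Half of the total wavelength swing, for a laser centered at mid-range."""
    return temp_span_c * coeff / 2


def fits_uncooled(half_window_nm: float, temp_span_c: float = SPAN_C) -> bool:
    """True if the uncooled swing stays within +/- half_window_nm of the channel center."""
    return half_excursion_nm(temp_span_c) <= half_window_nm


# Approximate channel half-widths (assumptions for illustration).
for grid, half_window in (("LAN-WDM", 1.0), ("CWDM", 6.5)):
    verdict = "uncooled OK" if fits_uncooled(half_window) else "needs TEC"
    print(f"{grid}: +/-{half_excursion_nm(SPAN_C):.1f} nm swing vs "
          f"+/-{half_window} nm window -> {verdict}")
```

Under these assumptions a ±3.5 nm excursion overruns a LAN-WDM channel but fits comfortably inside a CWDM channel.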

3.2 Single-Lane Architecture: Maximum Bandwidth Per Wavelength

Single-lane (single-lambda) architectures concentrate the full aggregate bandwidth onto a single wavelength. This approach became viable with the maturation of PAM4 technology and high-speed Digital Signal Processors capable of managing the resulting signal integrity challenges.

100G Single-Lambda (100G-LR1/FR1/DR1)

- Configuration: Single wavelength, duplex fiber

- Modulation: 50 GBaud PAM4 = 100 Gbps

- Connector: Duplex LC

- DSP Requirement: Integrated gearbox + FEC

- Typical Reach: 500m/2km/10km

200G Single-Lambda (Emerging)

- Configuration: Single wavelength, duplex fiber

- Modulation: 100+ GBaud PAM4 = 200 Gbps

- Connector: Duplex LC

- DSP Requirement: Advanced 3nm DSP

- Status: Early production for 800G FR4

The Single-Lambda Value Proposition

Single-lambda designs offer compelling advantages in component count and manufacturing complexity. A 100G single-lambda module contains one laser, one driver, one photodetector, one transimpedance amplifier (TIA), and one DSP channel for the optical path (plus electrical interface circuitry). Compare this to a 100G LR4 module requiring four lasers, four drivers, four photodetectors, four TIAs, a wavelength multiplexer, and a wavelength demultiplexer.

The reduction in optical components translates to smaller module footprints, lower assembly cost, and potentially higher manufacturing yield. It also eliminates wavelength alignment requirements, simplifying production testing. These advantages scale with increasing data rates: a 400G-DR4 module requires four complete optical channels, while a hypothetical 400G single-lambda would require only one (though 400G single-lambda direct-detect is not yet practical).

PAM4 Signal-to-Noise Ratio Penalty

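PAM4 requires approximately 9.5 dB higher SNR than NRZ for equivalent BER at the same peak-to-peak amplitude:

$$\mathrm{SNR}_{\mathrm{PAM4}} \approx \mathrm{SNR}_{\mathrm{NRZ}} + 20\log_{10} 3 \approx \mathrm{SNR}_{\mathrm{NRZ}} + 9.5\ \mathrm{dB}$$

For a target BER of $10^{-12}$ (typical for links operating without FEC):

$$\mathrm{SNR}_{\mathrm{NRZ}} \approx 17\ \mathrm{dB}, \qquad \mathrm{SNR}_{\mathrm{PAM4}} \approx 26.5\ \mathrm{dB}$$

KP4 FEC allows operation at a pre-FEC BER of up to $2.4 \times 10^{-4}$.

3.3 Hybrid Architectures: The Current Industry Approach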

Modern high-speed transceivers rarely use pure single-lane or pure multi-lane architectures. Instead, they combine single-lane PAM4 technology with parallelism to achieve aggregate rates. The result is a hybrid architecture where each lane operates at the highest practical single-lane rate, and multiple such lanes combine for the total bandwidth.

Consider 400GBASE-DR4: it uses four lanes of 100G PAM4 (each at 50 GBaud), transmitted over four parallel single-mode fibers. Each lane is a "single-lambda" 100G channel, but the aggregate 400G requires four such lanes. Similarly, 800GBASE-DR8 uses eight 100G PAM4 lanes, while emerging 800G-DR4 implementations use four 200G PAM4 lanes (each at approximately 100 GBaud).

4. Key Laser Technologies

The laser source is often the most critical and costly component in an optical transceiver. Different laser technologies suit different applications based on their speed capability, output power, spectral characteristics, and cost.

4.1 VCSEL (Vertical-Cavity Surface-Emitting Laser)

VCSELs emit light perpendicular to the semiconductor surface, enabling wafer-scale testing and low-cost manufacturing. They dominate short-reach multimode applications (SR standards) where their 850nm wavelength matches the optimal transmission window of OM4/OM5 multimode fiber. Modern 100G VCSELs support PAM4 modulation at 50+ GBaud for 100G-per-lane multimode transmission.

The primary limitation of VCSELs is reach. As single-lane data rates increase, VCSEL bandwidth limitations and multimode fiber dispersion combine to reduce achievable distance. The 400GBASE-SR8 standard specifies 100m reach on OM4 fiber with eight 50G PAM4 VCSEL lanes. Moving to 100G per lane reduces reach further, and 200G VCSELs remain in development with reach likely limited to tens of meters.

4.2 DML (Directly Modulated Laser)

DMLs modulate the laser output by varying the injection current, creating a simple, low-cost design. The modulation process inherently causes wavelength chirp (frequency variation during modulation), which interacts with fiber chromatic dispersion to limit reach. DMLs at 1310nm suit short-to-medium reach single-mode applications where dispersion is minimized.

DML technology has advanced to support 100G PAM4 for 800G-DR8 and similar applications. However, the chirp penalty makes DMLs unsuitable for longer reaches or higher baud rates where dispersion accumulation becomes significant.
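Chromatic dispersion in standard single-mode fiber passes through zero near 1310nm and grows toward the edges of the O-band, which is where chirp and high baud rates start to hurt. This Python sketch uses the ITU-T G.652-style approximation D(λ) = (S0/4)(λ − λ0⁴/λ³), with an assumed zero-dispersion wavelength λ0 between 1300 and 1324 nm and an assumed slope S0 = 0.092 ps/(nm²·km), to bracket the accumulated dispersion for each CWDM channel over a 2 km link.

```python
SLOPE_S0 = 0.092                     # ps/(nm^2 km), assumed maximum zero-dispersion slope
LAMBDA0_RANGE_NM = (1300.0, 1324.0)  # assumed range of the zero-dispersion wavelength
CWDM_NM = (1271.0, 1291.0, 1311.0, 1331.0)


def dispersion_ps_per_nm_km(wl_nm: float, lambda0_nm: float, s0: float = SLOPE_S0) -> float:
    """Approximate SMF chromatic dispersion: D = (S0/4) * (wl - lambda0**4 / wl**3)."""
    return s0 / 4 * (wl_nm - lambda0_nm ** 4 / wl_nm ** 3)


def accumulated_range(wl_nm: float, length_km: float) -> tuple[float, float]:
    """Min/max accumulated dispersion (ps/nm) over the assumed lambda0 range."""
    values = [dispersion_ps_per_nm_km(wl_nm, l0) * length_km for l0 in LAMBDA0_RANGE_NM]
    return min(values), max(values)


for wl in CWDM_NM:
    lo, hi = accumulated_range(wl, 2.0)
    print(f"{wl:.0f} nm over 2 km: {lo:+.1f} to {hi:+.1f} ps/nm")
```

Under these assumptions the outer channels accumulate several ps/nm even over 2 km (roughly −10 ps/nm worst case at 1271nm), while the 1311nm channel stays within about ±2.5 ps/nm.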

4.3 EML (Electro-Absorption Modulated Laser)

EMLs integrate a laser source with an electro-absorption modulator (EAM) on a single chip. The laser operates continuously at constant current, while the EAM modulates the light by varying optical absorption. This separation greatly reduces the chirp associated with direct modulation, enabling longer reaches at high data rates.

EMLs are the workhorse of single-mode, medium-to-long reach transceivers. 100G EMLs (50 GBaud PAM4) enable 100G single-lambda for DR/FR/LR applications. 200G EMLs (approximately 100 GBaud PAM4) are now entering production for 800G-FR4 and 800G-LR4 applications. Coherent Corporation's 212 Gbps LWDM EMLs, for example, achieve TDECQ below 2.0 dB, meeting the stringent requirements of 800G LR4 transmission.

4.4 Silicon Photonics (SiPh)

Silicon photonics represents an alternative architectural approach that has gained significant traction in recent years. Rather than using discrete Indium Phosphide (InP) lasers with III-V modulators, SiPh integrates optical functions onto silicon wafers using CMOS-compatible fabrication processes.

In a SiPh transceiver, a separate continuous-wave (CW) laser provides the light source, which couples into a silicon chip containing Mach-Zehnder modulators, waveguides, and other optical components. The CW laser can be shared across multiple modulator channels, potentially reducing component count. Current 800G SiPh solutions use dual CW lasers to drive eight modulator channels, with single-laser solutions expected to reach mass production by late 2025.
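Sharing one CW laser across several modulators trades laser count for optical power per channel. A minimal Python sketch, assuming an ideal 1:N split plus a hypothetical 1 dB lumped excess loss and a hypothetical 20 dBm (100 mW) laser: moving from two lasers each feeding four channels to one laser feeding eight costs about 3 dB per channel, which is why single-laser designs call for high-power sources.

```python
import math


def per_channel_power_dbm(laser_dbm: float, channels: int,
                          excess_loss_db: float = 1.0) -> float:
    """Power reaching each modulator when one CW laser is split N ways.

    An ideal 1:N splitter costs 10*log10(N) dB; excess_loss_db is a
    hypothetical lumped allowance for coupling and splitter imperfections.
    """
    return laser_dbm - 10 * math.log10(channels) - excess_loss_db


LASER_DBM = 20.0  # hypothetical 100 mW CW source
for n in (4, 8):
    print(f"1 laser -> {n} channels: "
          f"{per_channel_power_dbm(LASER_DBM, n):.1f} dBm per channel")
```

Under these assumptions each channel receives about 13.0 dBm with a 1:4 split and about 10.0 dBm with a 1:8 split.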

| Technology | Wavelength | Max Single-Lane Rate | Reach | Relative Cost | Best Application |
|---|---|---|---|---|---|
| VCSEL | 850nm | 100G (emerging) | ~100m (OM4) | Low | SR, intra-rack |
| DML | 1310nm | 100G | ~500m | Medium | DR, short SMF |
| EML | 1310nm | 200G (production) | 2-10km | High | FR/LR, long SMF |
| SiPh + CW | 1310nm | 100-200G | ~500m | Medium-High | DR, scalable |
| Coherent | C/L-band | 800G+ | 40-120km+ | Very High | ZR, DCI |

5. Performance Comparison

With the architectural foundations established, we can now directly compare single-lane and multi-lane approaches across the key performance dimensions that matter to network operators.

5.1 Power Consumption Analysis

Module power consumption determines the thermal design requirements for switches and limits port density. As data center operators push toward higher faceplate bandwidth, power efficiency (measured in milliwatts per gigabit, mW/Gb) becomes a critical differentiator.

Power Efficiency Comparison

| Module | Architecture | Typical Power | Efficiency |
|---|---|---|---|
| 400G-DR4 | 4x100G PSM | 10-12W | 25-30 mW/Gb |
| 400G-FR4 | 4x100G CWDM | 12-14W | 30-35 mW/Gb |
| 800G-DR8 | 8x100G PSM | 14-18W | 17.5-22.5 mW/Gb |
| 800G-DR4 | 4x200G PSM | 12-15W (projected) | 15-18.75 mW/Gb |
| 800G LPO | Linear (no DSP) | 6-8W | 7.5-10 mW/Gb |

The data reveals several trends. First, moving 800G from 8x100G to 4x200G reduces module power by roughly 15% (about 14-17% across the quoted ranges), demonstrating the efficiency gains from higher per-lane rates. Second, linear pluggable optics (LPO) achieve dramatically better efficiency by eliminating DSP power, representing a potential paradigm shift for power-constrained deployments.
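The efficiency figures are simply module power divided by aggregate rate. This short Python sketch reproduces them and computes the DR8-to-DR4 power reduction from the midpoints of the typical power ranges given in this section; using midpoints is an assumption made for illustration.

```python
def efficiency_mw_per_gb(power_w: float, rate_gbps: float) -> float:
    """Module power efficiency in milliwatts per Gb/s."""
    return power_w * 1000 / rate_gbps


def midpoint(low: float, high: float) -> float:
    """Center of a quoted range, used here as a single representative value."""
    return (low + high) / 2


dr8_w = midpoint(14, 18)  # 800G-DR8 (8x100G), typical range 14-18 W
dr4_w = midpoint(12, 15)  # 800G-DR4 (4x200G), projected range 12-15 W
reduction = 1 - dr4_w / dr8_w
print(f"800G-DR8: {efficiency_mw_per_gb(dr8_w, 800):.1f} mW/Gb")
print(f"800G-DR4: {efficiency_mw_per_gb(dr4_w, 800):.1f} mW/Gb")
print(f"Power reduction: {reduction:.1%}")
```

With midpoints of 16 W and 13.5 W, efficiency moves from 20.0 to about 16.9 mW/Gb, a reduction of about 15.6%.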

5.2 Reach and Signal Integrity

Achievable transmission distance depends on the balance between transmit power, receiver sensitivity, and accumulated impairments (loss, dispersion, noise). Single-lane architectures face greater signal integrity challenges because higher baud rates are more susceptible to chromatic dispersion and require better signal-to-noise ratios for PAM4 detection.
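Reach can be sanity-checked with a simple optical power budget. In this Python sketch every number is a hypothetical placeholder rather than a value from any standard: 0 dBm transmit power, −6 dBm receiver sensitivity, 0.4 dB/km fiber attenuation at 1310nm, two connectors at 0.5 dB each, and 1.5 dB of lumped dispersion and noise penalties. A positive margin means the link closes.

```python
def link_margin_db(tx_dbm: float, rx_sensitivity_dbm: float, length_km: float,
                   atten_db_per_km: float = 0.4, connectors: int = 2,
                   connector_loss_db: float = 0.5, penalties_db: float = 1.5) -> float:
    """Optical power margin left after fiber, connector and impairment losses.

    All default values are hypothetical placeholders, not specification values.
    """
    channel_loss_db = length_km * atten_db_per_km + connectors * connector_loss_db
    return tx_dbm - rx_sensitivity_dbm - channel_loss_db - penalties_db


for km in (0.5, 2.0, 10.0):
    margin = link_margin_db(tx_dbm=0.0, rx_sensitivity_dbm=-6.0, length_km=km)
    status = "closes" if margin >= 0 else "fails"
    print(f"{km:>4} km: margin {margin:+.1f} dB ({status})")
```

With these placeholder values the link closes at 500m and 2km but not at 10km, which is why longer-reach variants need higher launch power, better receiver sensitivity, or lower penalties.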

| Standard | Architecture | Per-Lane Rate | Reach | Fiber Type | Connector |
|---|---|---|---|---|---|
| 100G-SR4 | 4-lane parallel | 25G NRZ | 100m | OM4 MMF | MPO-12 |
| 100G-DR1 | Single-lane | 100G PAM4 | 500m | SMF | Duplex LC |
| 100G-LR4 | 4-lane WDM | 25G NRZ | 10km | SMF | Duplex LC |
| 100G-LR1 | Single-lane | 100G PAM4 | 10km | SMF | Duplex LC |
| 400G-DR4 | 4-lane parallel | 100G PAM4 | 500m | SMF | MPO-12 |
| 800G-DR8 | 8-lane parallel | 100G PAM4 | 500m | SMF | MPO-16 |
| 800G-DR4 | 4-lane parallel | 200G PAM4 | 500m | SMF | MPO-12 |
| 800G-FR4 | 4-lane WDM | 200G PAM4 | 2km | SMF | Duplex LC |

6. The 800G Transition: 8x100G vs 4x200G

The 800G generation represents a critical inflection point where the industry must choose between scaling existing 100G-per-lane technology (8x100G) or advancing to 200G-per-lane (4x200G). This decision has far-reaching implications for fiber infrastructure, thermal management, and the roadmap to 1.6T and beyond.

6.1 8x100G Architecture (800G-DR8/SR8)

The 8x100G approach extends proven 100G single-lambda technology to achieve 800G aggregate. Eight 100G PAM4 lanes, each operating at approximately 53 GBaud, transmit over eight parallel fibers (for DR8) or eight wavelengths (for LR8). This architecture leverages mature 100G EML and DSP technology with well-understood reliability characteristics.

The primary drawback of 8x100G is fiber consumption. An 800G-DR8 link requires 16 fibers (8 Tx, 8 Rx), doubling the fiber count compared to 400G-DR4. Thermal management for 8x100G modules is challenging but achievable within OSFP and QSFP-DD form factors with typical power consumption ranging from 14-18W.

6.2 4x200G Architecture (800G-DR4/FR4)

The 4x200G approach doubles per-lane data rate to reduce lane count. Four 200G PAM4 lanes at just over 100 GBaud achieve 800G over four parallel fibers per direction (DR4), four CWDM wavelengths (FR4), or four LAN-WDM wavelengths (LR4). This architecture maintains fiber parity with 400G-DR4 while doubling capacity.

Enabling 4x200G requires advances across the optical and electronic stack. 200G EMLs must operate at 100+ GBaud with adequate bandwidth and extinction ratio. DSPs must process 200G PAM4 signals with sufficient equalization capability. Both technologies entered production in late 2024 and early 2025, although volumes are still ramping.

8x100G (DR8) Advantages

- Mature 100G component technology

- Proven reliability track record

- Available in production today

- Supports 8x100G breakout to servers

- Lower per-lane signal integrity challenge

8x100G (DR8) Challenges

- 16 fibers required per link

- Higher component count (8 lasers)

- Near thermal limit for air cooling

- No path to 800G on duplex fiber

- Complex MPO-16 cabling infrastructure

4x200G (DR4/FR4) Advantages

- Maintains 8-fiber infrastructure from 400G

- Enables 800G on duplex fiber (FR4/LR4)

- Fewer optical components (4 lasers)

- Better thermal efficiency potential

- Direct path to 1.6T via 8x200G

4x200G (DR4/FR4) Challenges

- 200G EMLs still ramping production

- Higher dispersion penalty at 100+ GBaud

- Does not support breakout to 100G devices

- Reliability data still accumulating

- Higher initial module cost

6.3 Silicon Photonics for 800G

Silicon photonics has emerged as a significant technology option for 800G, offering an alternative to discrete EML-based designs. Current SiPh 800G modules typically use dual CW (continuous-wave) lasers coupled to a silicon chip containing eight Mach-Zehnder modulators. This architecture delivers 8x100G equivalent performance with potential manufacturing advantages.

Development of single-laser SiPh solutions is advancing rapidly. By driving eight modulators from one high-power CW laser (with optical amplification if needed), component count and cost decrease further. Industry experts project single-laser SiPh 800G-DR8 reaching mass production in 2025, with penetration potentially reaching 20-30% of the 800G market for short-reach applications.

Figure 3: 800G Transceiver Architectures - 8×100G vs 4×200G Comparison

7. Future Trajectory: 1.6T and Beyond

Figure 4: Optical Transceiver Technology Evolution Roadmap (2020-2028)

Understanding single-lane versus multi-lane trade-offs is essential for planning next-generation networks. The 1.6T and 3.2T generations will extend current architectural patterns while introducing new technologies.

7.1 The Path to 1.6T

1.6T transceivers achieve twice the bandwidth of 800G primarily through the 8x200G architecture, extending the 200G-per-lane technology maturing for 800G-DR4/FR4 to a doubled lane count. An 8-lane electrical interface connects to eight optical lanes, each carrying 200G PAM4 at approximately 100 GBaud.

The OSFP-XD (eXtra Density) form factor is emerging as the preferred module interface for 1.6T. OSFP-XD uses a vertical card-edge connector that reduces electrical trace length compared to traditional OSFP, improving signal integrity for 224G SerDes lanes. Timeline projections show 1.6T qualification with hyperscalers occurring throughout 2025, with volume production ramping in 2026.

7.2 DSP Evolution

DSP technology advancement is critical for enabling higher per-lane rates. The transition from 5nm to 3nm process nodes for 1.6T DSPs delivers approximately 30-40% power reduction for equivalent functionality, enabling 1.6T modules to operate within practical thermal envelopes.

Broadcom's Sian3 (BCM83628) 3nm DSP, entering production for 1.6T transceivers, supports 8x200G optical lanes with advanced equalization algorithms. Power efficiency improvements allow 1.6T modules to target sub-20W operation, comparable to current 800G modules.

7.3 Roadmap Summary

| Generation | Aggregate Rate | Per-Lane Rate | Lanes | Form Factor | Volume Production |
|---|---|---|---|---|---|
| 400G (current) | 400 Gbps | 100G PAM4 | 4 | QSFP-DD, OSFP | 2020-present |
| 800G Gen1 | 800 Gbps | 100G PAM4 | 8 | QSFP-DD, OSFP | 2023-present |
| 800G Gen2 | 800 Gbps | 200G PAM4 | 4 | QSFP-DD, OSFP | 2024-2025 |
| 1.6T | 1.6 Tbps | 200G PAM4 | 8 | OSFP-XD, QSFP-DD | 2025-2026 |
| 3.2T | 3.2 Tbps | 200-400G | 8-16 | OSFP-XD, CPO | 2027-2028 |

8. Practical Recommendations

Translating technical understanding into deployment decisions requires balancing multiple factors specific to each organization's circumstances.

8.1 Infrastructure Planning

Fiber infrastructure decisions made today will constrain options for the next 15-20 years. Architects should plan for at least two technology generations beyond current deployment. Single-mode fiber should be the default choice for new installations, even for short reaches where multimode remains viable. Single-mode supports all future speed grades and WDM expansion.

Structured cabling with MPO-based trunk cables provides flexibility for both parallel and WDM architectures. Base-8 fiber infrastructure (MPO-8 or MPO-12 using 8 fibers) aligns with 400G-DR4 and future 800G-DR4 requirements.

8.2 Selection Guidelines

| Application | Reach | Recommended 400G | Recommended 800G | Key Considerations |
|---|---|---|---|---|
| Intra-rack | <30m | SR4/SR8 or DR4 | DR8 or SR8 | MMF vs SMF infrastructure |
| Leaf-Spine | 100-500m | DR4 | DR8 now, DR4 later | Fiber count, breakout need |
| Campus | 500m-2km | FR4 | 2FR4 or FR4 | Fiber efficiency priority |
| DCI (short) | 2-10km | LR4 | 2LR4 or LR4 | Dispersion management |
| DCI (metro) | 40-80km | ZR | ZR/ZR+ | DWDM integration |

Breakout Applications

Parallel architectures enable breakout topologies where a single high-speed port connects to multiple lower-speed devices. A 400G-DR4 port can break out to four 100G-DR servers using passive MPO-to-LC fiber assemblies. Similarly, 800G-DR8 can break out to eight 100G connections. This flexibility allows gradual migration where spine switches operate at 800G while leaf switches use 100G server connections. Single-lambda modules do not support breakout without active demultiplexing equipment.

9. Conclusion

The choice between single-lane and multi-lane laser architectures in optical transceivers reflects fundamental engineering trade-offs that evolve with technology advancement. Multi-lane architectures dominated early high-speed optical networking because component technology could not support the required per-lane data rates. As laser, modulator, and DSP capabilities have advanced, single-lane (single-lambda) approaches have become viable and increasingly attractive for their reduced complexity and component count.

Today's 400G and 800G transceivers employ hybrid architectures that combine single-lane PAM4 technology with parallelism. Each lane carries 100G or 200G, and multiple lanes aggregate to the total module capacity. The industry trajectory clearly favors increasing per-lane rates: the transition from 8x100G to 4x200G for 800G, and the path to 8x200G for 1.6T, reflects continuous advancement in single-lane capability.

For network architects, the practical implications are significant. Fiber infrastructure planning must accommodate both parallel and WDM architectures, with single-mode fiber providing the most flexible foundation. Module selection should consider not only current requirements but also upgrade paths to higher speeds. Thermal and power planning must account for increasing module power consumption, particularly as lane rates increase.

Looking forward, the boundaries of pluggable module architecture may eventually give way to co-packaged optics for the most demanding applications. However, the fundamental trade-off between optical parallelism and per-lane data rate will persist regardless of packaging approach. Understanding these trade-offs, and how they shift with technology advancement, enables informed decisions that optimize network performance, cost, and flexibility for years to come.

Key Takeaways

Single-lane architectures reduce optical complexity but require advanced DSP for signal processing. Multi-lane architectures leverage proven technology but consume more fiber and components. Modern transceivers use hybrid approaches combining single-lane PAM4 with parallelism. The industry trajectory favors higher per-lane rates (100G to 200G to potentially 400G), reducing lane count for a given aggregate. Infrastructure planning should prioritize single-mode fiber with flexible structured cabling to support multiple architectural options across technology generations.
