
ML-Based Routing and Wavelength Assignment (RWA)

Fundamentals and Core Concepts

Understanding RWA in Optical Networks

Routing and Wavelength Assignment represents one of the most critical optimization challenges in Wavelength Division Multiplexing networks. At its core, RWA addresses the fundamental question of how to establish optical connections, known as lightpaths, between source and destination nodes while efficiently utilizing the limited wavelength resources available across the network infrastructure.

In Dense WDM systems, multiple optical signals can propagate simultaneously through a single fiber by using different wavelengths or channels. The network must determine both the physical route through the topology and assign appropriate wavelengths to each connection request. This dual optimization problem becomes increasingly complex as networks scale in size and traffic demands grow dynamically.

The Wavelength Continuity Constraint

A fundamental principle governing traditional RWA is the wavelength continuity constraint, which mandates that an optical signal must maintain the same wavelength across all links in its path from source to destination. This constraint significantly impacts network efficiency and resource utilization. Writing $\lambda_p(e)$ for the wavelength that lightpath $p$ uses on link $e$, and $\mathcal{P}_p$ for the set of links on its route, the constraint can be expressed as:

$$\lambda_p(e) = \lambda_p(e') \quad \forall\, e, e' \in \mathcal{P}_p$$

This constraint means that if a lightpath uses wavelength λ5 on the first link of its route, it must continue using λ5 on all subsequent links until reaching the destination. Without wavelength converters, this requirement creates blocking situations where available wavelengths exist on individual links but no single wavelength is free across the entire path.
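The continuity check itself is a set intersection. The sketch below assumes a hypothetical representation in which each link on the path is described by the set of wavelength indices currently free on it; `common_free_wavelengths` and `first_fit` are illustrative names, not part of any library.

```python
def common_free_wavelengths(free_per_link):
    """Return the wavelengths free on every link of a path (continuity constraint)."""
    if not free_per_link:
        return set()
    common = set(free_per_link[0])
    for free in free_per_link[1:]:
        common &= set(free)  # a wavelength must be free on this link too
    return common


def first_fit(free_per_link):
    """Lowest-indexed wavelength that satisfies continuity, or None if blocked."""
    common = common_free_wavelengths(free_per_link)
    return min(common) if common else None


# Each link has free wavelengths, but no single wavelength is free end to end.
path = [{0, 5}, {1, 5}, {0, 1}]
print(common_free_wavelengths(path))  # set()
print(first_fit(path))                # None -> the request is blocked
```

The example reproduces the blocking situation described above: every link still has spare capacity, yet the intersection is empty, so a lightpath without wavelength conversion cannot be set up.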

Network Capacity and Blocking Probability

The efficiency of RWA algorithms directly impacts two critical network performance metrics. First, the link capacity constraint ensures that the number of wavelengths assigned on any fiber link cannot exceed the available wavelength channels. With $\delta_{p,e} = 1$ if lightpath $p$ traverses link $e$ and $\delta_{p,e} = 0$ otherwise:

$$\sum_{p} \delta_{p,e} \le W \quad \text{for every fiber link } e$$

Where W represents the number of wavelengths per fiber. Second, blocking probability measures the likelihood that a connection request cannot be established due to resource unavailability. Traditional heuristic approaches often struggle to minimize blocking while maintaining high network utilization, especially under dynamic traffic conditions.
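For intuition about blocking probability, the classical Erlang B formula gives the blocking on a single link with W wavelengths offered Poisson traffic of A Erlangs. It is used here only as a simplified reference model: it treats one link in isolation and ignores the wavelength continuity constraint, so it does not capture the extra blocking that continuity causes on multi-hop paths. The function name is illustrative.

```python
def erlang_b(offered_load, channels):
    """Erlang B blocking probability via the standard recursion
    B(A, 0) = 1,  B(A, n) = A*B(A, n-1) / (n + A*B(A, n-1))."""
    if offered_load < 0 or channels < 0:
        raise ValueError("load and channel count must be non-negative")
    b = 1.0
    for n in range(1, channels + 1):
        b = offered_load * b / (n + offered_load * b)
    return b


# Hypothetical link: W = 8 wavelengths offered 5 Erlangs of traffic.
print(f"{erlang_b(5.0, 8):.4f}")  # ~0.0700, i.e. about 7% of requests blocked
```

The recursion avoids the large factorials of the closed-form expression, so it stays numerically stable for realistic channel counts such as W = 80 or more.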

Key Insight: The RWA problem is proven to be NP-complete in its general form, meaning that finding optimal solutions becomes computationally intractable as network size increases. This complexity motivates the application of machine learning techniques that can learn near-optimal policies through experience rather than exhaustive search.

Quality of Transmission Considerations

Modern RWA must account for physical layer impairments that affect signal quality. Amplified spontaneous emission noise (which lowers the Optical Signal-to-Noise Ratio, OSNR), chromatic dispersion, polarization mode dispersion, and nonlinear effects accumulate along the transmission path. The relationship between OSNR and system performance can be understood through the Q-factor metric, which for an ASE-limited receiver is commonly written as:

$$Q = \frac{2\,\mathrm{OSNR}\,\sqrt{B_0/B_c}}{1 + \sqrt{1 + 4\,\mathrm{OSNR}}}$$

Where B0 represents the optical bandwidth, Bc is the electrical bandwidth, and OSNR is in linear units. A Q-factor above 6 typically corresponds to acceptable performance, with bit error rates below 10⁻⁹. For reliable optical communication, OSNR values typically need to exceed 20 dB, with higher-order modulation formats requiring OSNR values of 25 dB or greater.
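The Q-factor relation can be evaluated directly. This sketch assumes the common ASE-limited form Q = 2·OSNR·√(B0/Bc) / (1 + √(1 + 4·OSNR)), with OSNR in linear units measured in the optical bandwidth B0, and the Gaussian-noise approximation BER = ½·erfc(Q/√2); the function names are illustrative.

```python
import math


def q_from_osnr(osnr_db, b0_hz, bc_hz):
    """Q-factor from OSNR (given in dB, converted to linear) using the
    ASE-limited relation Q = 2*OSNR*sqrt(B0/Bc) / (1 + sqrt(1 + 4*OSNR))."""
    osnr = 10 ** (osnr_db / 10)
    return 2 * osnr * math.sqrt(b0_hz / bc_hz) / (1 + math.sqrt(1 + 4 * osnr))


def ber_from_q(q):
    """Gaussian-noise approximation of the bit error rate: 0.5 * erfc(Q / sqrt(2))."""
    return 0.5 * math.erfc(q / math.sqrt(2))


print(f"{ber_from_q(6.0):.2e}")  # about 1e-9, matching the Q > 6 rule of thumb
```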

Mathematical Framework

Problem Formulation

The RWA problem can be formally expressed as an optimization framework with multiple objectives and constraints. Given a network topology represented as a graph G = (V, E), where V denotes the set of nodes and E represents the fiber links, the goal is to minimize blocking probability while maximizing network throughput and ensuring quality of service requirements.

The optimization objective seeks to maximize the number of successfully established connections while considering path length, wavelength availability, and signal quality constraints. For a set of connection requests, the formulation must satisfy wavelength continuity, link capacity limits, and physical layer feasibility conditions.

Markov Decision Process Framework

Machine learning approaches, particularly reinforcement learning algorithms, model the RWA problem as a Markov Decision Process. In this framework, the state space encompasses the current network configuration including wavelength availability on each link, active connections, and quality metrics. The action space consists of routing decisions and wavelength assignments for incoming requests.

The reward function incentivizes successful connection establishment while penalizing blocking events and suboptimal resource utilization. The transition dynamics capture how network state evolves based on connection arrivals, departures, and management actions. This probabilistic framework enables learning optimal policies through interaction with the network environment.
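As a rough illustration of how the MDP state can be handed to a learning agent, the sketch below flattens the per-link wavelength occupancy into a binary vector and appends one-hot encodings of the pending request's source and destination. The layout, the function names, and the ±1 reward are assumptions for illustration; published agents use a variety of encodings.

```python
def encode_state(occupancy, src, dst, num_nodes):
    """Flatten an RWA state into a feature vector for an RL agent.

    occupancy: list of per-link lists; occupancy[e][w] is True when
    wavelength w is in use on link e. src/dst identify the pending request.
    """
    features = [1.0 if used else 0.0 for link in occupancy for used in link]
    src_onehot = [1.0 if n == src else 0.0 for n in range(num_nodes)]
    dst_onehot = [1.0 if n == dst else 0.0 for n in range(num_nodes)]
    return features + src_onehot + dst_onehot


def reward(established):
    """Hypothetical reward: +1 for an established lightpath, -1 for a block."""
    return 1.0 if established else -1.0


occ = [[True, False, False], [False, False, True]]  # 2 links, W = 3
vec = encode_state(occ, src=0, dst=2, num_nodes=3)
print(len(vec))  # 12: 2*3 occupancy bits + 3 source + 3 destination entries
```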

OSNR Accumulation and Link Budget

Physical layer modeling requires accurate prediction of signal quality along candidate paths. The OSNR at the receiver depends on amplifier characteristics, span losses, and the number of cascaded amplifiers. For a system with N amplifiers, each with noise figure NF and per-channel output power Pout, preceded by span loss Lspan, the OSNR in a 0.1 nm reference bandwidth near 1550 nm can be calculated as:

$$\mathrm{OSNR}\,[\mathrm{dB}] = 58 + P_{\mathrm{out}}\,[\mathrm{dBm}] - L_{\mathrm{span}}\,[\mathrm{dB}] - NF\,[\mathrm{dB}] - 10\log_{10} N$$

This equation shows that OSNR in dB falls logarithmically with the number of amplified spans: because accumulated ASE noise power grows linearly with N, each doubling of the span count costs about 3 dB. This highlights the importance of careful amplifier placement and power budget optimization. Machine learning models can learn to predict OSNR based on path characteristics, enabling proactive quality-aware routing decisions.
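The link-budget formula OSNR = 58 + Pout − Lspan − NF − 10·log10(N) (dB, 0.1 nm reference bandwidth near 1550 nm, identical spans assumed) translates directly into code. The parameter values in the usage loop are hypothetical.

```python
import math


def osnr_db(pout_dbm, span_loss_db, nf_db, n_spans):
    """Link-budget OSNR (dB, 0.1 nm reference bandwidth near 1550 nm) for
    n_spans identical amplified spans:
    OSNR = 58 + Pout - Lspan - NF - 10*log10(N)."""
    if n_spans < 1:
        raise ValueError("need at least one amplified span")
    return 58.0 + pout_dbm - span_loss_db - nf_db - 10 * math.log10(n_spans)


# Hypothetical link: 0 dBm per channel, 20 dB span loss, 5 dB noise figure.
for n in (1, 10, 20):
    print(n, round(osnr_db(0.0, 20.0, 5.0, n), 2))
```

Comparing the 10-span and 20-span results shows the roughly 3 dB penalty per doubling of the span count; an ML regressor trained on field data would typically be benchmarked against exactly this kind of analytical baseline.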

Nonlinear Impairment Modeling

High-power transmission introduces nonlinear effects including four-wave mixing, cross-phase modulation, and self-phase modulation. These impairments depend on channel power, fiber properties, and the nonlinear coefficient. The maximum allowable per-channel launch power to restrict nonlinear penalties can be expressed through accumulated nonlinear phase shift calculations across amplifier spans.
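One common way to make this concrete, shown here as a sketch for self-phase modulation only, is to bound the accumulated nonlinear phase φ_NL = γ · P · L_eff · N, where γ is the fiber nonlinear coefficient, P the per-channel launch power, N the number of spans, and L_eff = (1 - e^(-αL))/α the effective length of a span of length L with attenuation α. The phase limit `phi_max_rad` is a design parameter you must choose; the fiber values in the usage line (0.2 dB/km, γ = 1.3 /W/km) are typical standard single-mode fiber figures used only as an example, and the function names are illustrative.

```python
import math


def effective_length_km(alpha_db_per_km, span_km):
    """L_eff = (1 - exp(-alpha*L)) / alpha, with alpha converted from dB/km to 1/km."""
    alpha = alpha_db_per_km * math.log(10) / 10
    return (1 - math.exp(-alpha * span_km)) / alpha


def max_launch_power_mw(phi_max_rad, gamma_per_w_km, alpha_db_per_km, span_km, n_spans):
    """Largest per-channel power (mW) keeping the accumulated SPM phase
    phi_NL = gamma * P * L_eff * N at or below phi_max_rad."""
    l_eff = effective_length_km(alpha_db_per_km, span_km)
    p_max_w = phi_max_rad / (gamma_per_w_km * l_eff * n_spans)
    return p_max_w * 1e3


# Hypothetical: 10 spans of 80 km, phase budget of 0.1 rad.
p_mw = max_launch_power_mw(0.1, 1.3, 0.2, 80.0, 10)
print(round(10 * math.log10(p_mw), 1), "dBm")  # about -4.4 dBm
```

Cross-phase modulation and four-wave mixing depend on neighboring channels as well, which is one reason the text turns to learned models for the full multi-channel picture.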

Neural network architectures excel at learning complex nonlinear relationships between launch power, fiber parameters, modulation format, and resulting signal quality. This capability enables ML-based RWA systems to make informed decisions that balance OSNR requirements against nonlinear penalty constraints.

Types and Components

Static vs Dynamic RWA

RWA algorithms are classified based on the traffic pattern they address. Static RWA, also known as offline RWA, handles a predefined set of connection requests where all demands are known in advance. This scenario allows for global optimization techniques that can explore the entire solution space to find near-optimal configurations.

Dynamic RWA operates in real-time, processing connection requests as they arrive without knowledge of future demands. This online scenario requires algorithms that make immediate decisions with incomplete information. Machine learning techniques, particularly reinforcement learning, demonstrate significant advantages in dynamic environments by learning from historical patterns to make intelligent real-time decisions.
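To make the online setting concrete, here is a small event-driven simulation sketch. It assumes a hypothetical line topology (so each request has exactly one route), Poisson arrivals, exponentially distributed holding times with mean 1, and uniformly random source-destination pairs. Each request is handled the moment it arrives with First-Fit, which is exactly the decision point where a learned policy could be substituted.

```python
import heapq
import random


def simulate_line(num_nodes, num_wavelengths, arrival_rate, num_requests, seed=1):
    """Event-driven dynamic RWA on a line topology with First-Fit assignment.

    Arrivals form a Poisson process with rate `arrival_rate`; holding times are
    exponential with mean 1, so the offered load is `arrival_rate` Erlangs.
    Returns the fraction of requests that were blocked.
    """
    if num_nodes < 2:
        raise ValueError("need at least two nodes")
    rng = random.Random(seed)
    # in_use[e][w] is True while a lightpath occupies wavelength w on link e
    in_use = [[False] * num_wavelengths for _ in range(num_nodes - 1)]
    departures = []  # min-heap of (departure_time, links, wavelength)
    now, blocked = 0.0, 0
    for _ in range(num_requests):
        now += rng.expovariate(arrival_rate)
        # release every lightpath whose holding time ended before this arrival
        while departures and departures[0][0] <= now:
            _, links, w = heapq.heappop(departures)
            for e in links:
                in_use[e][w] = False
        src, dst = sorted(rng.sample(range(num_nodes), 2))
        links = tuple(range(src, dst))  # link e joins node e and node e + 1
        free = [w for w in range(num_wavelengths)
                if not any(in_use[e][w] for e in links)]
        if not free:
            blocked += 1  # no wavelength is free on every link of the route
            continue
        w = free[0]  # First-Fit: lowest-indexed continuous wavelength
        for e in links:
            in_use[e][w] = True
        heapq.heappush(departures, (now + rng.expovariate(1.0), links, w))
    return blocked / num_requests


for load in (2.0, 4.0, 8.0):
    print(load, simulate_line(6, 4, load, 20000))
```

Sweeping the offered load this way traces a blocking-versus-load curve, the usual yardstick for comparing a learned policy against the First-Fit baseline.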

Traditional Approaches

Fixed heuristics such as First-Fit or Random wavelength assignment, combined with shortest-path routing. These adapt poorly to changing network conditions and traffic patterns.
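A minimal sketch of this baseline, assuming an undirected topology stored as an adjacency dictionary and a mapping from each link to its set of busy wavelengths (both hypothetical representations): breadth-first search finds a minimum-hop route, then First-Fit or Random assignment picks a wavelength that is free on every link of that route.

```python
import random
from collections import deque


def shortest_path(adj, src, dst):
    """Minimum-hop path by breadth-first search; returns a node list or None."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for nbr in adj[node]:
            if nbr not in prev:
                prev[nbr] = node
                queue.append(nbr)
    if dst not in prev:
        return None
    path, node = [], dst
    while node is not None:  # walk predecessors back to the source
        path.append(node)
        node = prev[node]
    return path[::-1]


def assign(path, used, num_wavelengths, policy="first_fit", rng=None):
    """Pick a wavelength free on every link of `path`, or None if blocked.

    used maps an undirected link frozenset({u, v}) to its set of busy wavelengths.
    """
    links = [frozenset(pair) for pair in zip(path, path[1:])]
    free = [w for w in range(num_wavelengths)
            if all(w not in used.get(link, set()) for link in links)]
    if not free:
        return None
    if policy == "first_fit":
        return free[0]
    if policy == "random":
        return (rng or random.Random()).choice(free)
    raise ValueError(f"unknown policy: {policy}")


adj = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}  # hypothetical 4-node ring
used = {frozenset({0, 1}): {0}, frozenset({1, 2}): {1}}
route = shortest_path(adj, 0, 2)
print(route, assign(route, used, 4))  # [0, 1, 2] 2
```

Because routing and wavelength choice are fixed rules, nothing here adapts to load history; that gap is what the learning-based approaches below target.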

Supervised Learning Methods

Classification models predict connection feasibility, while regression models estimate quality metrics like OSNR and BER for candidate paths before establishment.

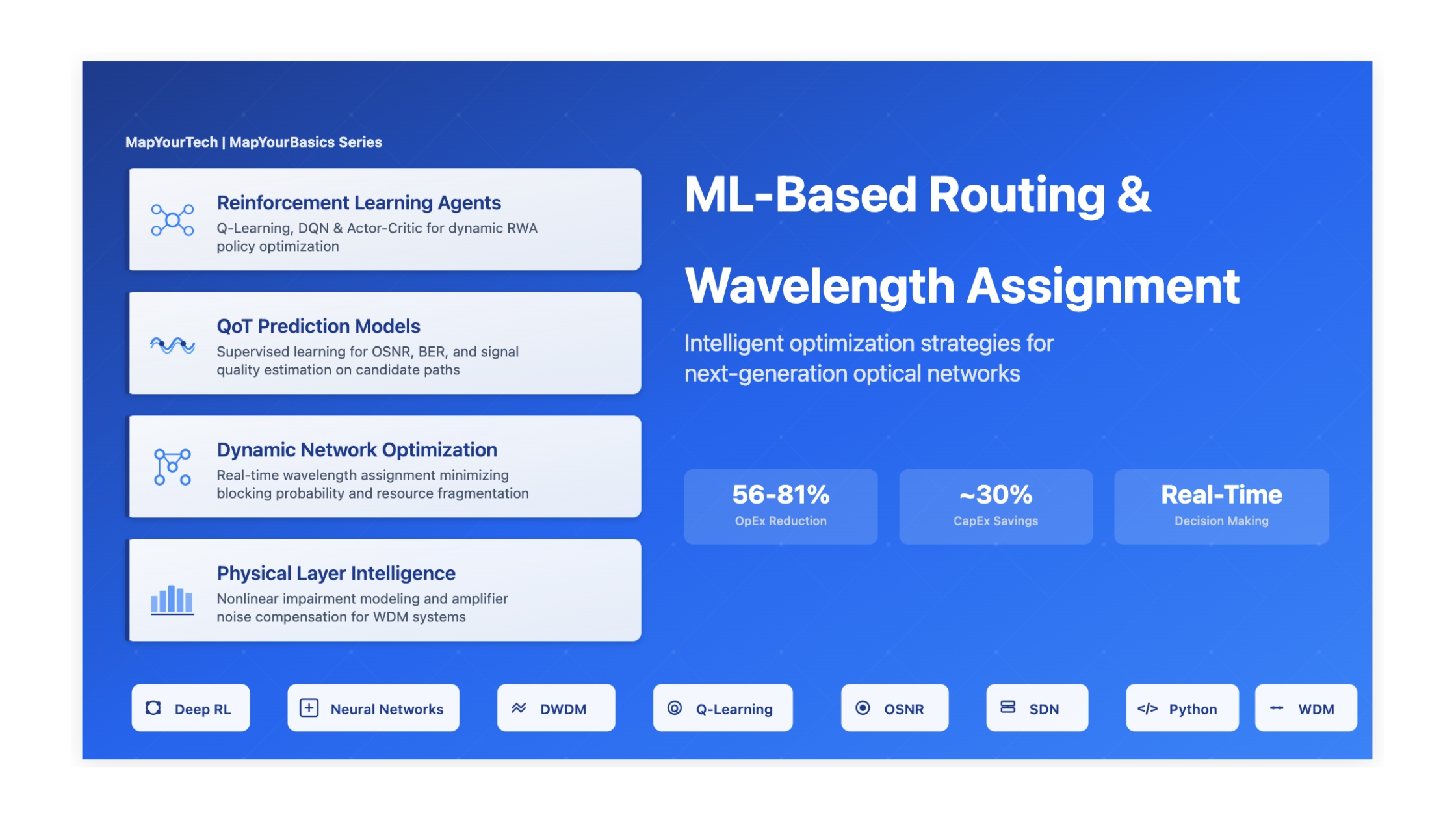

Reinforcement Learning Agents

Q-Learning and Deep Q-Networks learn optimal routing and assignment policies through trial-and-error interaction with the network environment.

ML Algorithm Categories for RWA

Machine learning approaches to RWA span multiple paradigms, each suited to different aspects of the problem. Supervised learning algorithms train on historical connection data to predict quality of transmission metrics. These models learn mappings between path characteristics and resulting OSNR, BER, or Q-factor values, enabling proactive quality assessment.

Reinforcement learning algorithms excel at dynamic resource allocation and routing optimization. Q-Learning has been extensively applied to WDM networks for learning optimal wavelength selection policies. Deep reinforcement learning methods, including Deep Q-Networks and Actor-Critic architectures, handle complex state spaces and can discover sophisticated strategies that outperform traditional heuristics.
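The tabular Q-learning sketch below is deliberately tiny so the update rule stays visible. It assumes a hypothetical three-node line (links 0-1 and 1-2), two wavelengths, and a fixed sequence of three requests in which placing the second request on a different wavelength from the first strands capacity and blocks the third. The state is the request index plus the occupancy table, the action is the wavelength, and the reward is +1 for an established lightpath and -1 for a block. A Deep Q-Network would replace the lookup table `q` with a neural network.

```python
import random
from collections import defaultdict

W = 2                                # wavelengths per link
NUM_LINKS = 2                        # line topology 0 - 1 - 2
REQUESTS = [(0, 1), (1, 2), (0, 2)]  # hypothetical fixed arrival order


def empty():
    return tuple(tuple(False for _ in range(W)) for _ in range(NUM_LINKS))


def step(occ, req_idx, w):
    """Try to set up request req_idx on wavelength w; return (new_occ, reward)."""
    src, dst = REQUESTS[req_idx]
    links = range(src, dst)          # link e joins node e and node e + 1
    if any(occ[e][w] for e in links):
        return occ, -1.0             # continuity violated: request blocked
    new = [list(row) for row in occ]
    for e in links:
        new[e][w] = True
    return tuple(tuple(row) for row in new), 1.0


def train(episodes=3000, alpha=0.1, gamma=1.0, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)           # q[(state, action)], state = (req_idx, occ)
    for _ in range(episodes):
        occ = empty()
        for i in range(len(REQUESTS)):
            state = (i, occ)
            if rng.random() < epsilon:  # epsilon-greedy exploration
                a = rng.randrange(W)
            else:
                a = max(range(W), key=lambda w: q[(state, w)])
            occ, r = step(occ, i, a)
            # Bellman target; the episode ends after the last request
            target = r
            if i + 1 < len(REQUESTS):
                target += gamma * max(q[((i + 1, occ), w)] for w in range(W))
            q[(state, a)] += alpha * (target - q[(state, a)])
    return q


def greedy_accepted(q):
    """Run the learned greedy policy once and count accepted requests."""
    occ, accepted = empty(), 0
    for i in range(len(REQUESTS)):
        a = max(range(W), key=lambda w: q[((i, occ), w)])
        occ, r = step(occ, i, a)
        accepted += r > 0
    return accepted


print(greedy_accepted(train()))  # 3: the agent learns to align wavelengths
```

First-Fit happens to solve this toy case too; the point is that the agent finds the wavelength-aligning choice purely from rewards, without being told the rule.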

Unsupervised learning techniques contribute through anomaly detection for network monitoring and clustering for traffic pattern analysis. These methods identify unusual network behaviors and segment traffic into classes that can be handled with specialized routing strategies.

Neural Network Architectures

Artificial Neural Networks and deep learning models demonstrate particular effectiveness for RWA applications. Multi-Layer Perceptrons serve as general-purpose function approximators for QoT prediction tasks. Convolutional Neural Networks can process spatial network topology information, while Recurrent Neural Networks and LSTM units capture temporal traffic patterns for improved demand forecasting.

These architectures can learn highly complex, nonlinear relationships directly from raw network data, including physical layer impairments, traffic statistics, and topology information. The ability to handle high-dimensional input spaces makes them suitable for comprehensive RWA solutions that integrate multiple decision factors.

Effects and Impacts

Wavelength Blocking and Fragmentation

Inefficient RWA decisions lead to wavelength blocking, where connection requests fail despite available capacity existing in the network. This occurs when no single wavelength remains free across an entire path, even though different wavelengths are available on individual links. The blocking probability directly impacts network revenue and service quality, making it a critical performance metric.

Wavelength fragmentation compounds this problem over time as connections arrive and depart. The random pattern of wavelength usage creates situations where capacity becomes stranded and unusable. Machine learning approaches can learn to recognize fragmentation patterns and make assignment decisions that maintain better wavelength continuity, reducing future blocking events.

Quality of Transmission Degradation

Physical layer impairments accumulate along transmission paths, degrading signal quality and potentially causing connection failures. Amplified Spontaneous Emission noise from Erbium-Doped Fiber Amplifiers accumulates with each amplification stage, reducing OSNR. The cascading of amplifiers creates an additive noise effect that limits the maximum transparent reach of optical signals.

Chromatic dispersion causes different wavelength components to travel at different velocities, spreading optical pulses and causing intersymbol interference. Polarization Mode Dispersion introduces additional signal spreading due to birefringence in the fiber. These effects compound with fiber nonlinearities at high power levels, creating complex interactions that traditional analytical models struggle to predict accurately.

Critical Impact: Studies have quantified OpEx savings of 56-81% and CapEx avoidance of approximately 30% through intelligent automation of network operations. ML-based RWA contributes to these improvements by optimizing resource utilization and enabling proactive maintenance strategies.

Network Scalability Challenges

As networks grow in size and complexity, traditional RWA approaches face exponential increases in computational requirements. The solution space expands rapidly with the number of nodes, links, and wavelengths, making exhaustive search methods impractical. Mesh topologies with multiple possible paths between node pairs further complicate the optimization landscape.

Machine learning models can scale more effectively by learning compressed representations of network state and policies that generalize across different topologies. Once trained, a model's inference cost is set by its architecture and input size rather than by a combinatorial search, so each decision takes a short, predictable time, enabling real-time decision making even in large-scale deployments.

Dynamic Traffic Variations

Modern optical networks experience highly variable traffic patterns driven by data center workloads, video streaming, and cloud services. Traffic demands fluctuate on multiple timescales, from sub-second bursts to diurnal patterns and longer-term growth trends. Traditional static RWA solutions optimized for average conditions perform poorly during peak periods or unexpected traffic surges.

ML-based approaches can learn temporal traffic patterns and adapt routing decisions accordingly. Time-series forecasting models predict future demands, enabling proactive resource allocation. Reinforcement learning agents discover dynamic policies that respond effectively to varying network conditions without requiring manual reconfiguration.
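Simple exponential smoothing is shown below as a deliberately minimal stand-in for the time-series forecasting models mentioned above; richer models such as LSTMs, or Holt-Winters when diurnal seasonality matters, are more typical in practice. The demand series, smoothing factor, and function name are hypothetical.

```python
def exp_smoothing_forecast(series, alpha=0.3):
    """One-step-ahead forecast with simple exponential smoothing:
    level_t = alpha * x_t + (1 - alpha) * level_{t-1}."""
    if not series:
        raise ValueError("need at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    for x in series[1:]:
        level = alpha * x + (1 - alpha) * level
    return level


# Hypothetical hourly lightpath demand between one node pair.
demand = [10, 12, 11, 15, 18, 17]
print(exp_smoothing_forecast(demand, alpha=0.5))  # 16.25
```

A forecast like this could feed proactive allocation, for example by reserving enough wavelengths for the predicted demand before it arrives.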

Techniques and Solutions

QoT-Aware Routing with Supervised Learning

Quality of Transmission prediction forms a cornerstone of intelligent RWA systems. Supervised learning models train on historical data correlating path characteristics with measured quality metrics. Input features include path length, number of hops, fiber types, amplifier configurations, and channel spacing. Target outputs may be continuous values like OSNR or BER, or binary classifications indicating path feasibility.

Support Vector Machines, Random Forests, and Artificial Neural Networks have all demonstrated effectiveness for QoT classification and regression tasks. These models achieve prediction accuracies sufficient to enable proactive path selection based on anticipated signal quality. By avoiding paths likely to fail quality thresholds, blocking probability decreases while maintaining high network utilization.
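A k-nearest-neighbor vote is used below only because it fits in a few lines; it stands in for the SVM, Random Forest, or neural-network classifiers named above. The labeled history, the feature scaling, and the function name are all hypothetical.

```python
import math


def knn_predict(train, query, k=3, scales=(1000.0, 10.0)):
    """k-nearest-neighbor QoT feasibility vote.

    train: list of ((length_km, hops), feasible) pairs. Features are divided
    by `scales` so kilometres and hop counts contribute comparably.
    """
    def dist(a, b):
        return math.sqrt(sum(((x - y) / s) ** 2 for x, y, s in zip(a, b, scales)))

    nearest = sorted(train, key=lambda item: dist(item[0], query))[:k]
    votes = sum(1 for _, feasible in nearest if feasible)
    return votes * 2 > k  # majority vote


# Hypothetical labeled lightpaths: (length in km, hop count) -> met QoT threshold?
history = [
    ((300, 2), True), ((650, 4), True), ((900, 5), True), ((1200, 6), True),
    ((2100, 9), False), ((2600, 11), False), ((3000, 12), False), ((3400, 14), False),
]
print(knn_predict(history, (800, 4)))    # True
print(knn_predict(history, (2800, 12)))  # False
```

In a QoT-aware router, candidate paths predicted infeasible would simply be dropped before wavelength assignment.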

Deep Reinforcement Learning for Dynamic RWA

Deep Q-Networks represent a powerful approach for learning optimal RWA policies in dynamic environments. The agent observes network state including available wavelengths, active connections, and incoming requests. At each decision point, the DQN selects routing and wavelength assignment actions to maximize long-term cumulative reward, typically defined as successful connections minus blocking events.

The deep neural network approximates the Q-function, mapping state-action pairs to expected future rewards. Through repeated interaction with the network environment, the agent discovers sophisticated strategies that balance immediate blocking minimization with long-term resource efficiency. Experience replay and target networks stabilize the learning process, enabling convergence to near-optimal policies.
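The two stabilizers mentioned above can be sketched independently of any deep-learning framework. The buffer stores transitions and samples them uniformly at random, which helps break the correlation between consecutive RWA decisions; `maybe_sync_target` copies the online parameters into the target copy every `period` steps. Parameters are represented as a plain dictionary purely for illustration; in a real DQN they would be network weights, and all names here are hypothetical.

```python
import copy
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions drop out first
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Uniform random minibatch without replacement."""
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def maybe_sync_target(step, online_params, target_params, period):
    """Every `period` steps, return a copy of the online parameters to serve as
    the frozen target network for bootstrap targets; otherwise keep the old one."""
    if step % period == 0:
        return copy.deepcopy(online_params)
    return target_params


buf = ReplayBuffer(capacity=3)
for i in range(5):
    buf.push(i, i, 0.0, i + 1, False)
print(len(buf), buf.buffer[0][0])  # 3 2: the two oldest transitions were evicted
```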

Actor-Critic methods provide an alternative reinforcement learning architecture that handles discrete route and wavelength choices and also extends naturally to continuous action spaces. The actor network learns a policy for selecting routes and wavelengths, while the critic network estimates the value of states or state-action pairs to guide policy improvement. This separation of policy and value estimation often improves training stability and sample efficiency.

Hybrid Analytical-ML Approaches

Combining traditional analytical models with machine learning creates robust solutions that leverage complementary strengths. The Gaussian Noise model provides physics-based OSNR estimation using known fiber parameters. Machine learning components can learn correction factors to improve model accuracy based on empirical measurements, or identify scenarios where analytical predictions become unreliable.
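The correction-factor idea can be sketched as fitting the residual between measured and analytically predicted OSNR. Here the analytical values are simply given as inputs (they could come from a Gaussian Noise model implementation, which is not shown), and the residual is assumed, for illustration only, to vary linearly with path length. The function names and data are hypothetical.

```python
def fit_linear_correction(lengths_km, analytical_db, measured_db):
    """Least-squares fit of residual = measured - analytical as a + b*length.

    Returns (a, b); a corrected prediction is analytical + a + b*length.
    """
    n = len(lengths_km)
    if n < 2:
        raise ValueError("need at least two measurements")
    residuals = [m - p for m, p in zip(measured_db, analytical_db)]
    mean_x = sum(lengths_km) / n
    mean_r = sum(residuals) / n
    sxx = sum((x - mean_x) ** 2 for x in lengths_km)
    if sxx == 0:
        raise ValueError("lengths must not all be equal")
    sxr = sum((x - mean_x) * (r - mean_r) for x, r in zip(lengths_km, residuals))
    b = sxr / sxx
    a = mean_r - b * mean_x
    return a, b


def corrected_osnr(analytical_db, length_km, correction):
    a, b = correction
    return analytical_db + a + b * length_km


lengths = [200, 600, 1000, 1400]
model = [25.0, 21.0, 19.0, 17.5]  # hypothetical analytical OSNR (dB)
# hypothetical field data: 0.5 dB below the model plus 0.001 dB/km extra penalty
field = [m - 0.5 - 0.001 * x for m, x in zip(model, lengths)]
print(fit_linear_correction(lengths, model, field))  # approximately (-0.5, -0.001)
```

Keeping the physics model as the base prediction and learning only the residual is what preserves the interpretability the text describes: the correction terms themselves can be inspected.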

This hybrid approach maintains interpretability and physical consistency while achieving the adaptive performance of data-driven methods. Network operators gain confidence in automated decisions when underlying physics remains visible and explainable, addressing trust concerns that impede ML adoption in mission-critical infrastructure.

Transfer Learning and Few-Shot Adaptation

Training effective ML models typically requires substantial amounts of labeled data, which may be costly or impractical to collect in optical networks. Transfer learning addresses this challenge by pre-training models on simulated data or related network domains, then fine-tuning with limited real-world measurements. This approach accelerates deployment and reduces data collection burdens.

Few-shot learning techniques enable models to adapt to new network topologies or equipment with minimal additional training examples. Meta-learning algorithms discover learning strategies that generalize across diverse network configurations, facilitating rapid adaptation when deploying to new sites or upgrading infrastructure.

Multi-Objective Optimization

Real-world RWA scenarios involve multiple competing objectives beyond simple blocking minimization. Energy efficiency considerations favor shorter paths and reduced amplification. Load balancing distributes traffic evenly to prevent congestion hotspots. Protection requirements may mandate diverse routing for critical services. Fair resource allocation ensures equitable service across all users.

Multi-objective reinforcement learning frameworks incorporate weighted reward functions that balance these various objectives. The weights can be adjusted based on operational priorities or learned automatically through meta-optimization. Pareto-optimal solutions provide network operators with explicit tradeoff curves between competing goals, enabling informed policy decisions.
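A sketch of both ideas, assuming hypothetical weights and candidate results: `weighted_reward` scalarizes success, path length (as an energy proxy), and the busiest link's utilization (as a load-balancing proxy), while `pareto_front` keeps only the outcomes that no other outcome matches or beats on every objective.

```python
def weighted_reward(established, path_km, link_load, weights=(1.0, 0.0005, 0.5)):
    """Scalarized multi-objective reward with hypothetical weights:
    reward success, penalize path length and the busiest link's utilization."""
    w_ok, w_len, w_load = weights
    if not established:
        return -w_ok
    return w_ok - w_len * path_km - w_load * max(link_load)


def pareto_front(points):
    """Keep the points not dominated by any other point (all objectives minimized)."""
    def dominates(a, b):
        return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (blocking probability, energy in kW) for five weight settings.
candidates = [(0.010, 9.0), (0.012, 7.5), (0.015, 7.0), (0.014, 8.0), (0.020, 7.2)]
print(pareto_front(candidates))  # [(0.01, 9.0), (0.012, 7.5), (0.015, 7.0)]
```

The surviving points form the tradeoff curve an operator would choose from; the dominated settings can be discarded without weighing priorities at all.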

Practical Applications

Data Center Interconnection

High-capacity DCI networks require efficient wavelength utilization to support massive east-west traffic flows. ML-based RWA optimizes path selection considering latency requirements and load distribution across multiple parallel links. Dynamic bandwidth allocation responds to varying workload patterns between data center facilities.

Metro Networks

Metropolitan area networks face diverse service requirements ranging from low-latency financial trading to high-bandwidth video distribution. Intelligent RWA systems learn service-specific routing policies that prioritize latency for sensitive applications while maximizing capacity utilization for bulk data transfers.

Long-Haul Transport

Ultra-long-haul optical systems spanning thousands of kilometers demand careful QoT management. ML models predict OSNR accumulation along candidate paths, enabling proactive selection of routes that maintain acceptable signal quality. Amplifier gain optimization and modulation format selection adapt dynamically to path characteristics.

Elastic Optical Networks

Flexible grid systems allow variable bandwidth allocation using frequency slots rather than fixed wavelengths. ML-based Routing and Spectrum Assignment (RSA) extends RWA principles to spectrum allocation, where each demand needs a block of adjacent slots (contiguity) that is also free on every link of its path (continuity). Deep learning models learn efficient slot allocation policies that minimize fragmentation while meeting heterogeneous bandwidth demands.
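The combined contiguity and continuity check behind RSA can be sketched as a First-Fit scan over slots. The sketch assumes each link's spectrum is a list of occupied/free flags per frequency slot (for instance 12.5 GHz slots on the ITU-T flexible grid); the occupancy tables and function names are hypothetical.

```python
def first_fit_spectrum(path_slots, demand_slots):
    """First-Fit spectrum assignment on a flex-grid path.

    path_slots: one list per link; path_slots[e][s] is True if slot s is occupied.
    Returns the lowest start index of `demand_slots` adjacent slots that are free
    on every link (contiguity + continuity), or None if the request is blocked.
    """
    if demand_slots < 1:
        raise ValueError("demand must use at least one slot")
    num_slots = len(path_slots[0])
    # a slot is usable only if it is free on every link of the path
    free = [all(not link[s] for link in path_slots) for s in range(num_slots)]
    run = 0
    for s, ok in enumerate(free):
        run = run + 1 if ok else 0  # length of the current run of usable slots
        if run == demand_slots:
            return s - demand_slots + 1
    return None


def occupied(num_slots, busy):
    """Build a per-link occupancy list from a set of busy slot indices."""
    return [s in busy for s in range(num_slots)]


path = [occupied(8, {0, 1, 5}), occupied(8, {3})]
print(first_fit_spectrum(path, 2))  # 6
print(first_fit_spectrum(path, 3))  # None: free slots exist but are fragmented
```

The second call illustrates spectral fragmentation: four slots are free end to end, but no three of them are adjacent.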

Software-Defined Optical Networks

SDN architectures with centralized controllers provide ideal platforms for ML-based RWA deployment. Controllers collect comprehensive network telemetry enabling rich training datasets. Learned policies execute rapidly to provision connections in response to orchestrator requests, supporting network slicing and dynamic service instantiation.

5G Transport Networks

Mobile network evolution drives stringent latency and reliability requirements for fronthaul and backhaul connectivity. Intelligent RWA ensures deterministic performance for critical services while efficiently multiplexing elastic traffic. Predictive models anticipate capacity demands based on subscriber mobility patterns and application usage.

Integration with Network Management Systems

Practical deployment requires seamless integration between ML-based RWA engines and existing network management infrastructure. Standard interfaces like NETCONF and YANG data models enable interoperability with multi-vendor equipment. RESTful APIs expose ML model predictions and decisions to orchestration platforms and operational support systems.

Continuous learning pipelines collect performance telemetry to retrain models and adapt to evolving network conditions. Anomaly detection algorithms identify scenarios where model predictions diverge from observations, triggering model updates or human review. This closed-loop automation enables networks to self-optimize while maintaining operational oversight.

Performance Metrics and Validation

Evaluating ML-based RWA systems requires comprehensive metrics beyond simple blocking probability. Connection setup time measures responsiveness to service requests. Wavelength utilization efficiency indicates how effectively available resources are consumed. Path length distribution reveals whether routing decisions minimize resource usage or unnecessarily consume capacity on longer paths.

Quality metrics including average OSNR margin and worst-case BER across all connections assess the system's ability to maintain acceptable signal quality. Fairness measures ensure equitable service delivery across different connection types and priorities. Simulation studies using realistic traffic models validate performance before production deployment, while A/B testing in operational networks quantifies real-world improvements.
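The section does not name a specific fairness measure; Jain's fairness index is one widely used choice and is applied below to per-class acceptance ratios. The class names, request log, and function names are hypothetical.

```python
def jain_fairness(values):
    """Jain's index (sum x)^2 / (n * sum x^2): 1.0 when all classes are served
    equally, 1/n when a single class receives everything."""
    n = len(values)
    total_sq = sum(v * v for v in values)
    if n == 0 or total_sq == 0:
        raise ValueError("need at least one non-zero value")
    return sum(values) ** 2 / (n * total_sq)


def blocking_by_class(requests):
    """Per-class blocking ratio from (class_name, blocked) records."""
    totals, blocks = {}, {}
    for cls, blocked in requests:
        totals[cls] = totals.get(cls, 0) + 1
        blocks[cls] = blocks.get(cls, 0) + int(blocked)
    return {cls: blocks[cls] / totals[cls] for cls in totals}


log = [("gold", False), ("gold", False), ("silver", True), ("silver", False)]
rates = blocking_by_class(log)                     # {'gold': 0.0, 'silver': 0.5}
acceptance = [1 - b for b in rates.values()]
print(round(jain_fairness(acceptance), 3))         # 0.9
```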

Deployment Considerations: Successful ML-based RWA implementation requires attention to model explainability and operational trust. Visualization tools that display learned routing policies and decision rationales help network operators understand and validate automated actions. Fallback mechanisms ensure graceful degradation to traditional heuristics if ML components fail or produce questionable outputs.

Future Directions and Emerging Trends

Advanced research explores probabilistic models that quantify prediction uncertainty, enabling risk-aware routing decisions. Graph neural networks leverage network topology structure directly, learning representations that transfer across different network configurations. Multi-agent reinforcement learning coordinates decisions across distributed network elements, discovering emergent cooperation strategies.

Federated learning approaches enable model training across multiple network domains without sharing sensitive operational data. This privacy-preserving technique facilitates collaborative learning among network operators while maintaining competitive confidentiality. As optical networks continue to scale in capacity and complexity, ML-based RWA will become increasingly essential for achieving optimal performance and operational efficiency.

For educational purposes in optical networking and DWDM systems

Note: This guide is based on industry standards, best practices, and real-world implementation experiences. Specific implementations may vary based on equipment vendors, network topology, and regulatory requirements. Always consult with qualified network engineers and follow vendor documentation for actual deployments.
