Standards

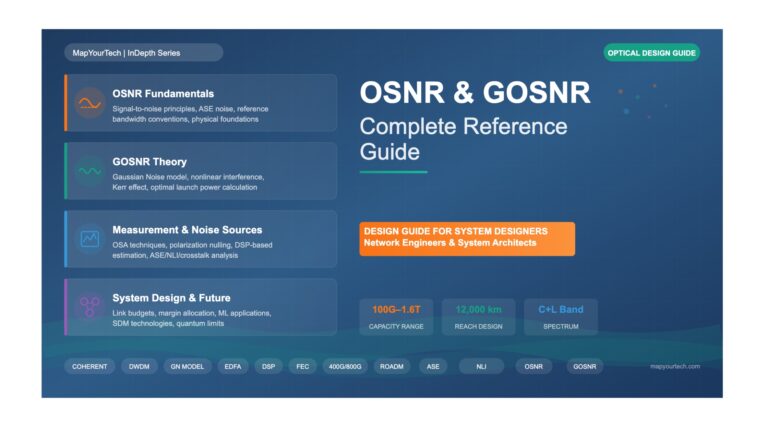

OSNR and GOSNR Complete Reference Guide – Fundamentals

OSNR and GOSNR: Fundamentals of Optical Signal-to-Noise Ratio. 1.1 Introduction to OSNR...

Free

December 12, 2025

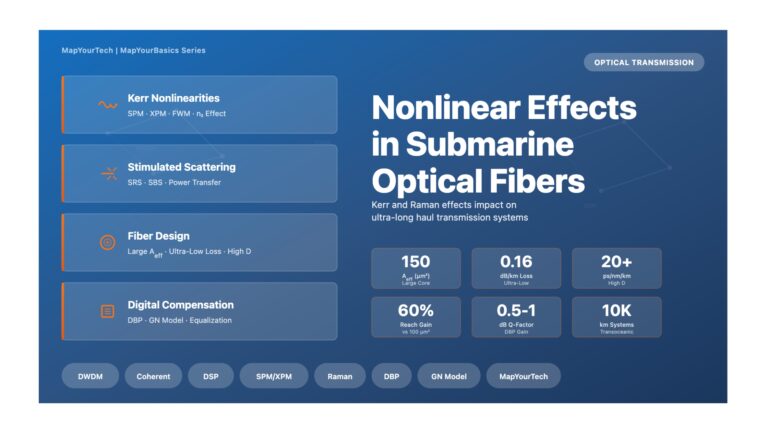

Nonlinear Effects in Submarine Optical Fibers

Optical Transmission: Understanding Kerr and Raman nonlinearities and...

Free

December 7, 2025

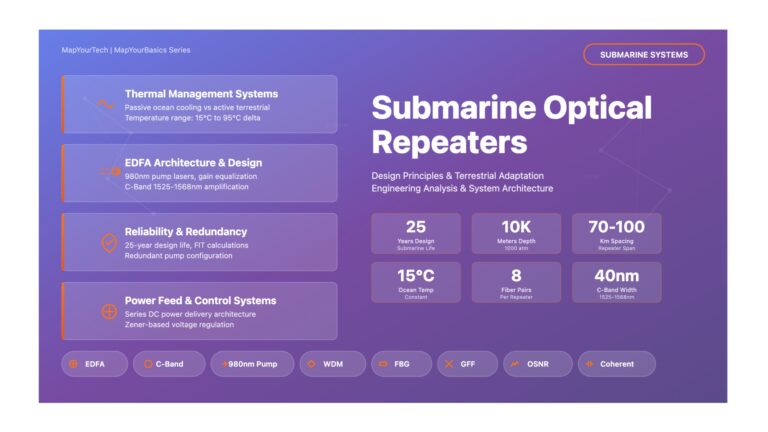

Submarine Optical Repeaters: Design Principles and Terrestrial Adaptation Challenges

Challenges and...

Free

December 7, 2025

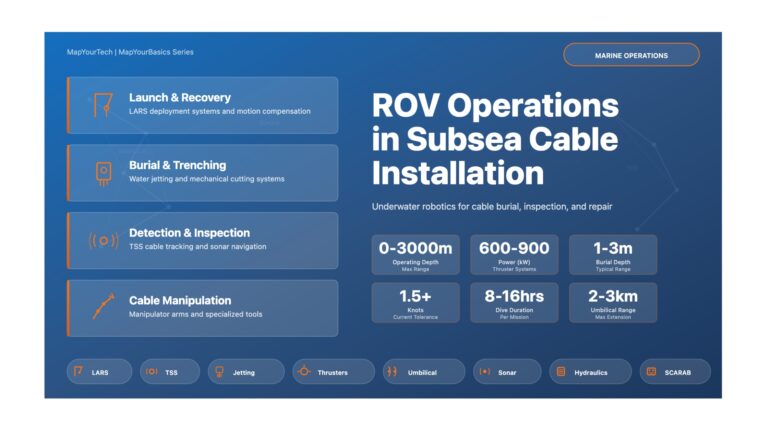

ROV Operations in Subsea Cable Installation

Marine Operations: Underwater Robotics for Cable Burial, Inspection,...

Free

December 7, 2025

Chromatic Dispersion Management in Subsea Systems

Subsea Transmission Technology: From Optical Compensation to Digital...

Free

December 7, 2025

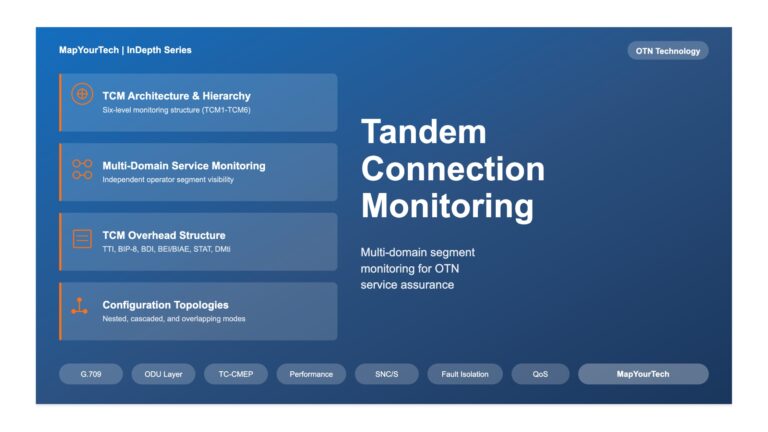

Tandem Connection Monitoring in OTN Networks | MapYourTech

Tandem Connection Monitoring in Optical Transport Networks: Comprehensive guide to multi-domain service...

Free

December 3, 2025

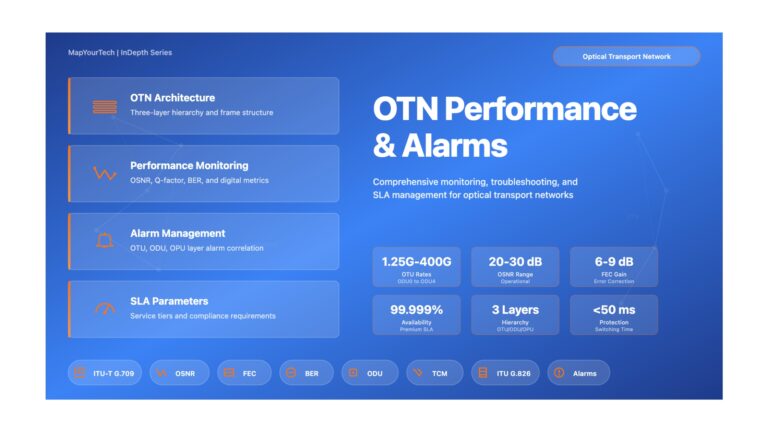

OTN Performance and Alarms – Foundations & Architecture (Enhanced)

A comprehensive technical guide for optical network...

Free

December 2, 2025

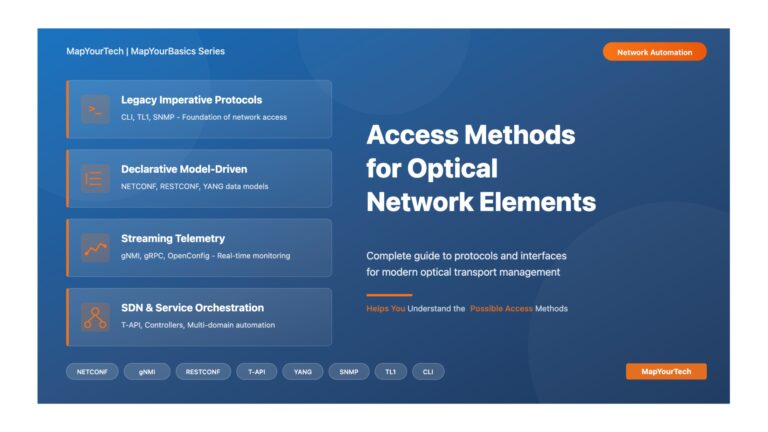

Access Methods for Optical Network Elements

Optical Network Automation: A Comprehensive Guide...

Free

December 1, 2025

NBI and SBI Protocols at Different Layers – Part 1: Foundation & Core Concepts

Free

December 1, 2025

Tags

automation

ber

Chromatic Dispersion

coherent optical transmission

Data transmission

DWDM

edfa

EDFAs

Erbium-Doped Fiber Amplifiers

fec

Fiber optics

Fiber optic technology

Forward Error Correction

Latency

modulation

network automation

network management

Network performance

noise figure

optical

optical amplifiers

optical automation

Optical communication

Optical fiber

Optical network

optical network automation

optical networking

Optical networks

Optical performance

Optical signal-to-noise ratio

Optical transport network

OSNR

OTN

Q-factor

Raman Amplifier

SDH

Signal amplification

Signal integrity

Signal quality

submarine

submarine communication

submarine optical networking

Telecommunications