Technical

800G ZR/ZR+ Coherent Optics: Comprehensive Technical Guide

Comprehensive Technical Guide to Next-Generation High-Speed Optical Networking. Introduction...

Free

November 13, 2025

400G ZR/ZR+ Coherent Optical Technology – Comprehensive Guide

Comprehensive Guide to Next-Generation Pluggable...

Free

November 13, 2025

Inter-DC vs Intra-DC for Optical Professionals: Complete Guide

A comprehensive guide to understanding data...

Free

November 13, 2025

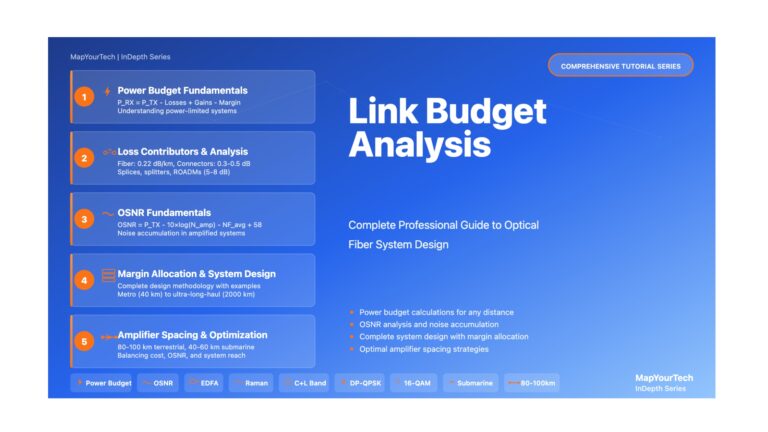

Part 1: Introduction to Link Budget Analysis – MapYourTech Tutorial Series

Master the fundamentals of...

Free

November 13, 2025

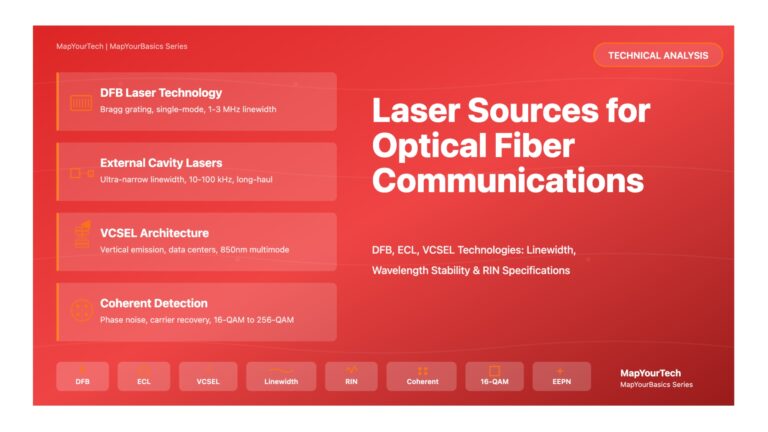

Laser Sources for Optical Fiber Communications – Comprehensive Technical Analysis

A Comprehensive Research-Grade Analysis...

Free

November 12, 2025

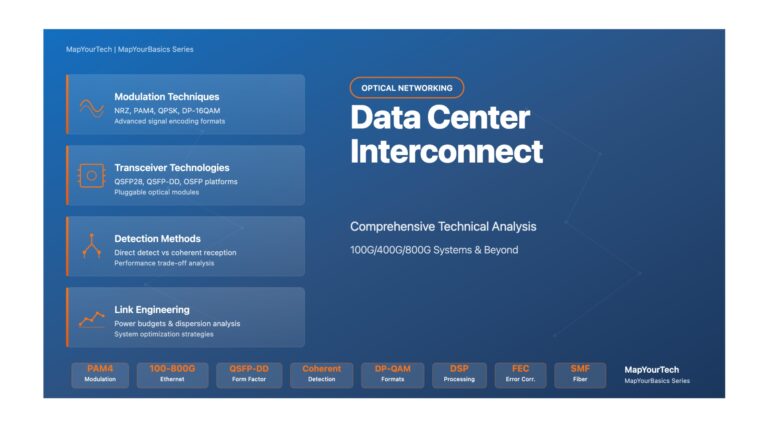

Data Center Interconnect: Comprehensive Technical Analysis

A Comprehensive Technical Analysis of 100G/400G/800G Systems, PAM4 Modulation, and...

Free

November 12, 2025

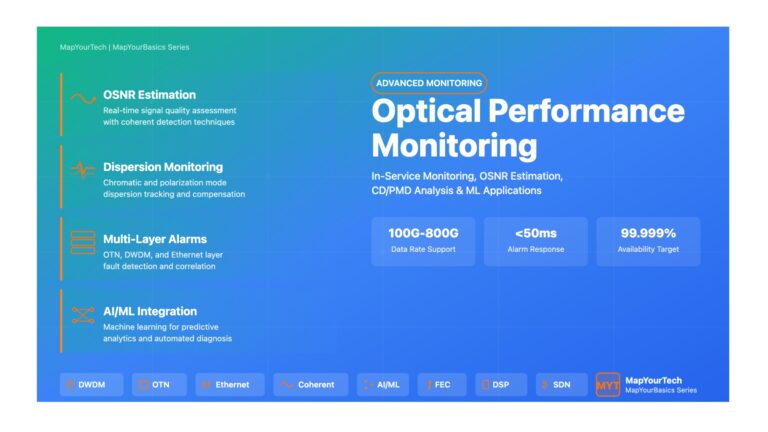

Optical Performance Monitoring: Advanced In-Service Techniques and Machine Learning Applications

Free

November 12, 2025

EDFA (Erbium Doped Fiber Amplifier): Everything You Need to Know

A comprehensive guide to the...

Free

November 9, 2025

Advanced Deep Dive: Raman Amplifier – Everything About It

Comprehensive Expert-Level Analysis of Stimulated Raman...

Free

November 9, 2025

EDFA (Erbium Doped Fiber Amplifier): Complete Technical Guide – Part 1

A Comprehensive Technical Guide...

Free

November 9, 2025

Tags

automation

ber

Chromatic Dispersion

coherent optical transmission

Data transmission

DWDM

edfa

EDFAs

Erbium-Doped Fiber Amplifiers

fec

Fiber optics

Fiber optic technology

Forward Error Correction

Latency

modulation

network automation

network management

Network performance

noise figure

optical

optical amplifiers

optical automation

Optical communication

Optical fiber

Optical network

optical network automation

optical networking

Optical networks

Optical performance

Optical signal-to-noise ratio

Optical transport network

OSNR

OTN

Q-factor

Raman Amplifier

SDH

Signal amplification

Signal integrity

Signal quality

submarine

submarine communication

submarine optical networking

Telecommunications