Posts tagged “ber”

Showing 1 - 10 of 10 results

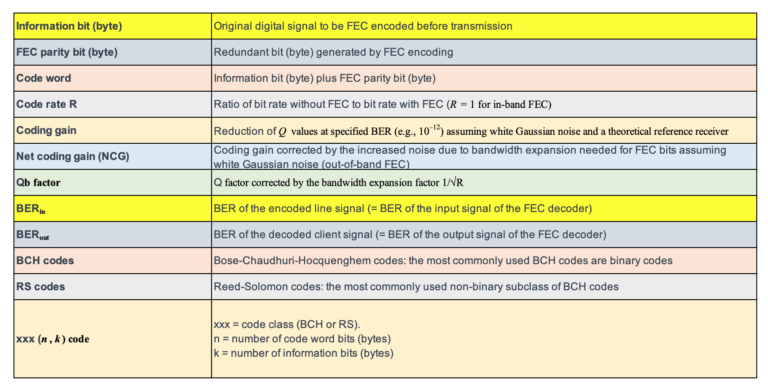

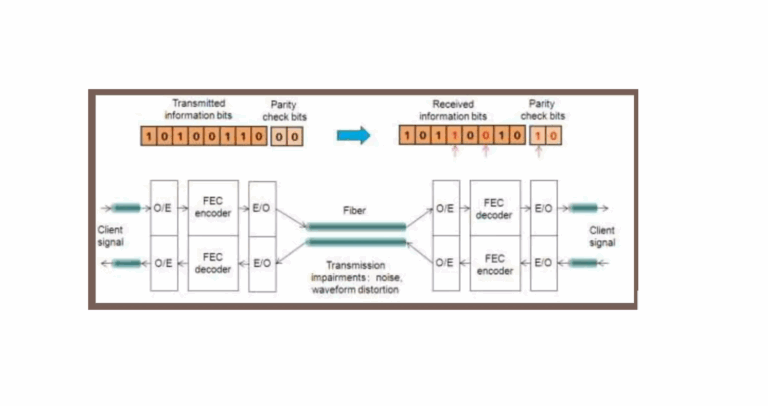

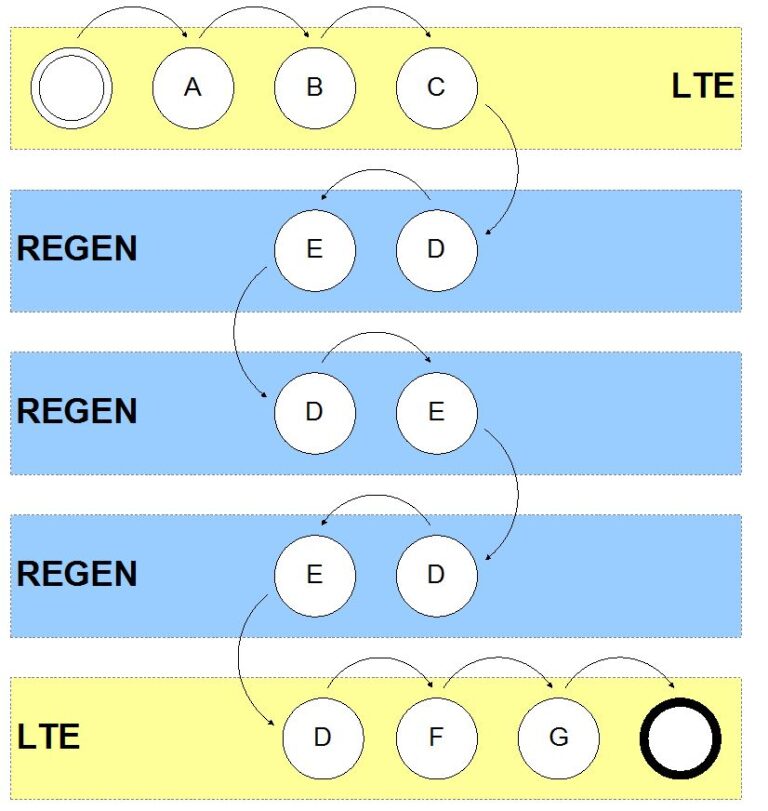

In the pursuit of ever-greater data transmission capabilities, forward error correction (FEC) has emerged as a pivotal technology, not just...

Free · March 26, 2025

Forward Error Correction (FEC) has become an indispensable tool in modern optical communication, enhancing signal integrity and extending transmission distances....

Free · March 26, 2025

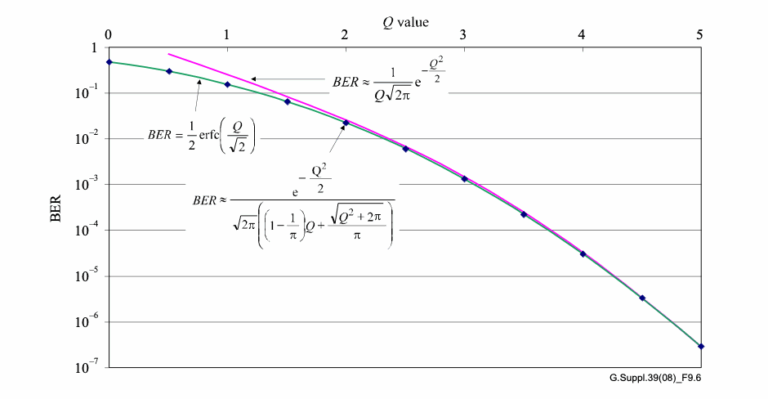

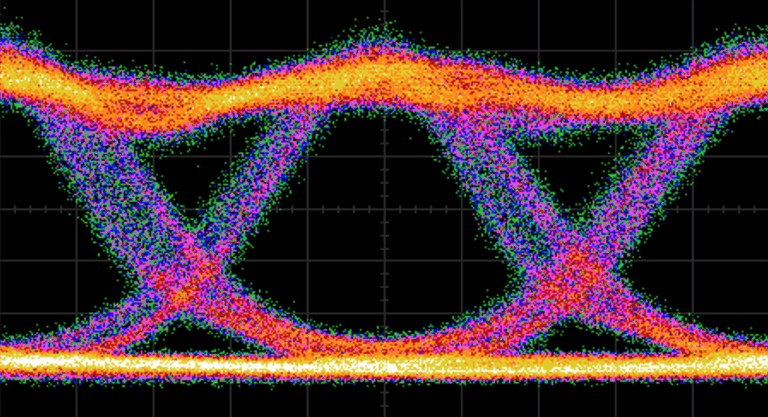

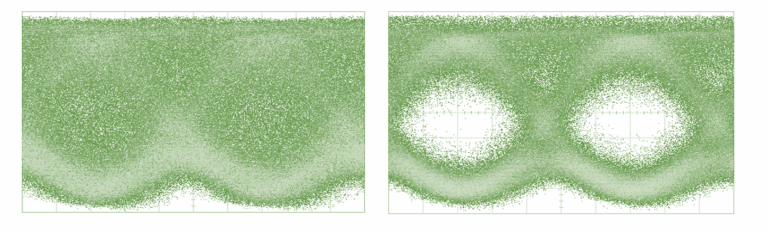

Signal integrity is the cornerstone of effective fiber-optic communication, and two metrics are paramount in assessing it: Bit Error Ratio...

Free · March 26, 2025

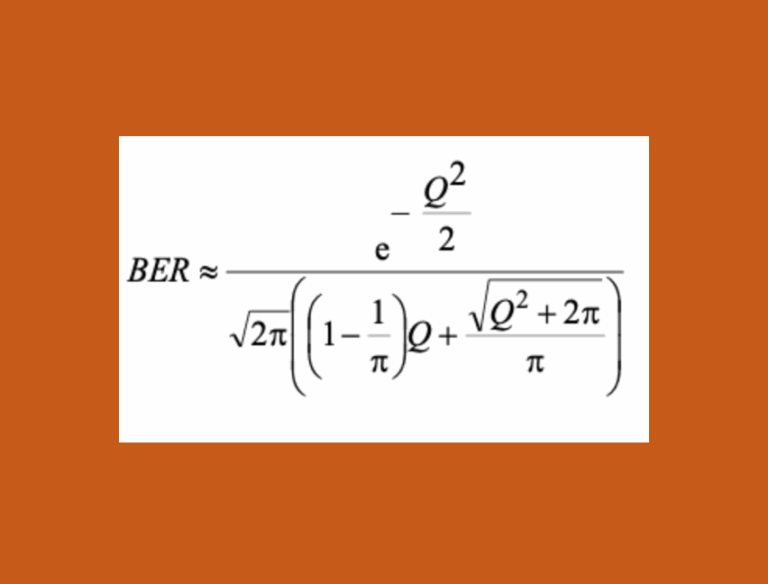

In the world of optical communication, it is crucial to have a clear understanding of Bit Error Rate (BER). This...

Free · March 26, 2025

FEC codes in optical communications are based on a class of codes known as Reed-Solomon. A Reed-Solomon code is specified as RS(n, k), which means...

Free · March 26, 2025
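The Reed-Solomon excerpt above stops at the RS(n, k) notation. As a minimal sketch of what the two parameters conventionally imply, the snippet below derives the parity-symbol count, the symbol-error correction capability t = (n − k)/2, the code rate and the overhead. It assumes 8-bit symbols (so n ≤ 255), and the class name `RSParams` is a hypothetical helper chosen for illustration. RS(255, 239) is used as the example because it is the standard FEC defined for OTN in ITU-T G.709.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RSParams:
    """Derived properties of a Reed-Solomon RS(n, k) code (hypothetical helper)."""
    n: int      # total symbols per codeword
    k: int      # data (message) symbols per codeword
    m: int = 8  # bits per symbol; assumed byte-oriented, so n <= 2**m - 1 = 255

    def __post_init__(self):
        if not 0 < self.k < self.n <= 2 ** self.m - 1:
            raise ValueError("require 0 < k < n <= 2**m - 1")

    @property
    def parity_symbols(self) -> int:
        # Redundant symbols appended to each codeword.
        return self.n - self.k

    @property
    def correctable_symbols(self) -> int:
        # 2t parity symbols let the decoder correct up to t symbol errors.
        return (self.n - self.k) // 2

    @property
    def code_rate(self) -> float:
        # Fraction of each codeword that carries payload.
        return self.k / self.n

    @property
    def overhead(self) -> float:
        # Redundancy relative to the payload: (n - k) / k.
        return (self.n - self.k) / self.k


rs = RSParams(255, 239)
print(rs.parity_symbols, rs.correctable_symbols)  # 16 8
print(f"{rs.code_rate:.4f} {rs.overhead:.2%}")     # 0.9373 6.69%
```

Because the correction capability is counted in symbols rather than bits, a single corrupted 8-bit symbol costs the same whether one or all eight of its bits are wrong, which is one reason Reed-Solomon codes tolerate burst errors well.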

“In analog world the standard test message is the sine wave, followed by the two-tone signal for more rigorous tests. ...

Free · March 26, 2025

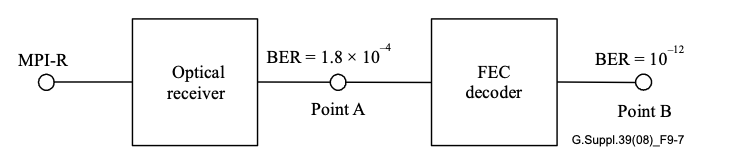

The Bit Error Ratio (BER) is often specified as a performance parameter of a transmission system, which needs to be...

Free · March 26, 2025

Bit error rate (BER) is a key parameter used to assess systems that transmit digital data from one...

Free · March 26, 2025

The first thing to note is that for each frame there are two sets of 20 parity bits. One set...

Free · March 26, 2025

The Bit Error Rate (BER) of a digital optical receiver indicates the probability of an incorrect bit identification. In other...

Free · March 26, 2025
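The excerpt above describes BER as the probability of an incorrect bit identification. A minimal sketch of the two usual ways to get at that number: counting errored bits against a known transmitted sequence, and estimating BER from the Q-factor with the textbook relation BER = ½·erfc(Q/√2), which assumes Gaussian noise on both signal levels and an optimally placed decision threshold. The function names and the independent bit-flip "channel" are hypothetical illustrations, not a model of any particular receiver.

```python
import math
import random


def measured_ber(sent: list[int], received: list[int]) -> float:
    """Fraction of bit positions where the received bit differs from the sent bit."""
    if len(sent) != len(received) or not sent:
        raise ValueError("sequences must be non-empty and of equal length")
    errors = sum(s != r for s, r in zip(sent, received))
    return errors / len(sent)


def ber_from_q(q: float) -> float:
    """BER = 0.5 * erfc(Q / sqrt(2)); assumes Gaussian noise and an optimal threshold."""
    return 0.5 * math.erfc(q / math.sqrt(2))


# Hypothetical toy channel: flip each bit independently with probability p.
rng = random.Random(42)
p = 1e-3
sent = [rng.getrandbits(1) for _ in range(200_000)]
received = [b ^ (rng.random() < p) for b in sent]

print(f"measured BER ~ {measured_ber(sent, received):.1e}")  # close to 1e-3
print(f"Q = 6 -> BER = {ber_from_q(6):.2e}")                 # 9.87e-10
```

The counting approach only resolves error ratios down to roughly the reciprocal of the number of bits observed, which is why very low BER targets are often checked indirectly through Q-factor rather than by waiting for errors to accumulate.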

Tags

automation

ber

Chromatic Dispersion

coherent optical transmission

Data transmission

DWDM

edfa

EDFAs

Erbium-Doped Fiber Amplifiers

fec

Fiber optics

Fiber optic technology

Forward Error Correction

Latency

modulation

network automation

network management

Network performance

noise figure

optical

optical amplifiers

optical automation

Optical communication

Optical fiber

Optical network

optical network automation

optical networking

Optical networks

Optical performance

Optical signal-to-noise ratio

Optical transport network

OSNR

OTN

Q-factor

Raman Amplifier

SDH

Signal integrity

Signal quality

submarine

submarine cable systems

submarine communication

submarine optical networking

Telecommunications