Optical fiber technology has transformed telecommunications, changing the way we communicate and share information. Fiber optic cables form the backbone of most high-speed internet connections, telephone networks, and cable television systems.

What is Fiber Optic Technology?

Fiber optic technology uses thin, transparent fibers of glass or plastic to carry information as pulses of light. In telecommunications, these fibers transmit data, video, and voice signals at high speeds over long distances.

What are Fiber Optic Cables Made Of?

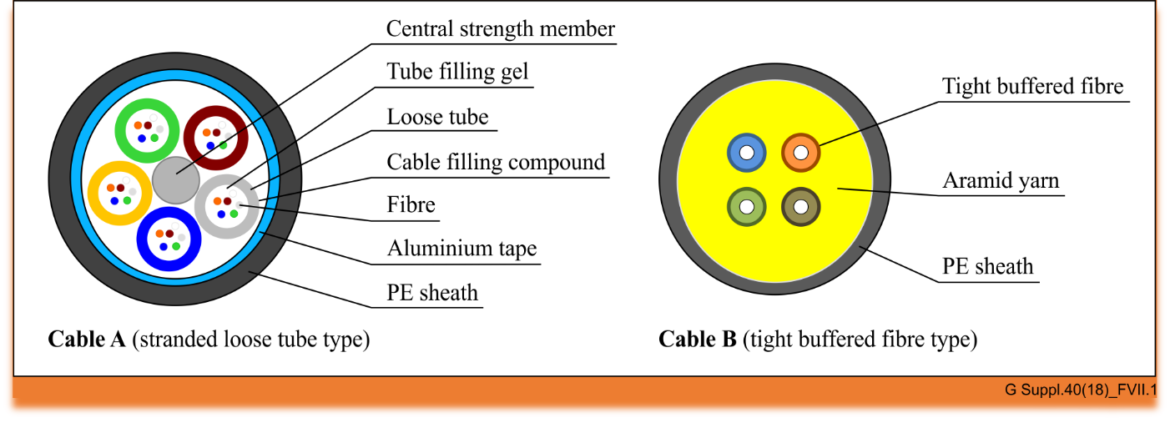

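Fiber optic cables are made of thin strands of glass or plastic called fibers. Each fiber has a central core surrounded by a cladding layer with a slightly lower refractive index, which keeps the light confined to the core. The fibers are then covered by protective coatings and an outer jacket, which make them resistant to moisture, heat, and other environmental factors and protect them from physical damage.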

How Does Fiber Optic Technology Work?

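Fiber optic technology works by sending pulses of light through the fibers in a cable. Because the cladding has a lower refractive index than the core, light that strikes the core-cladding boundary at a shallow enough angle is reflected back into the core (total internal reflection), so it stays inside the fiber even around gentle bends. The light travels at roughly two-thirds of its speed in a vacuum, and because the pulses can be switched billions of times per second, large amounts of data can be transmitted quickly and efficiently.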
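To make the total internal reflection condition concrete, the sketch below computes the critical angle at the boundary between a core and a lower-index cladding, the fiber's numerical aperture (which sets how steep an angle light can enter at and still be guided), and the one-way travel time of light. The refractive indices 1.468 (core) and 1.462 (cladding) are assumed illustrative values rather than figures from any particular fiber, and the core index is used as a stand-in for the group index.

```python
import math

C_VACUUM_M_PER_S = 299_792_458  # speed of light in vacuum

def critical_angle_deg(n_core: float, n_clad: float) -> float:
    """Angle of incidence (from the normal) above which light is totally internally reflected."""
    if n_clad >= n_core:
        raise ValueError("core index must exceed cladding index for light to be guided")
    return math.degrees(math.asin(n_clad / n_core))

def numerical_aperture(n_core: float, n_clad: float) -> float:
    """Sine of the maximum acceptance half-angle in air: NA = sqrt(n1^2 - n2^2)."""
    return math.sqrt(n_core**2 - n_clad**2)

def propagation_delay_us(length_km: float, n_core: float) -> float:
    """One-way delay in microseconds, using the core index as an approximate group index."""
    speed_m_per_s = C_VACUUM_M_PER_S / n_core
    return length_km * 1000 / speed_m_per_s * 1e6

n1, n2 = 1.468, 1.462  # assumed example indices
print(f"critical angle: {critical_angle_deg(n1, n2):.1f} deg")
print(f"numerical aperture: {numerical_aperture(n1, n2):.3f}")
print(f"delay over 100 km: {propagation_delay_us(100, n1):.0f} us")
```

With these assumed indices, light must meet the boundary at more than about 85 degrees from the normal to be guided, and it takes roughly 4.9 µs per kilometer, or about 490 µs over 100 km.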

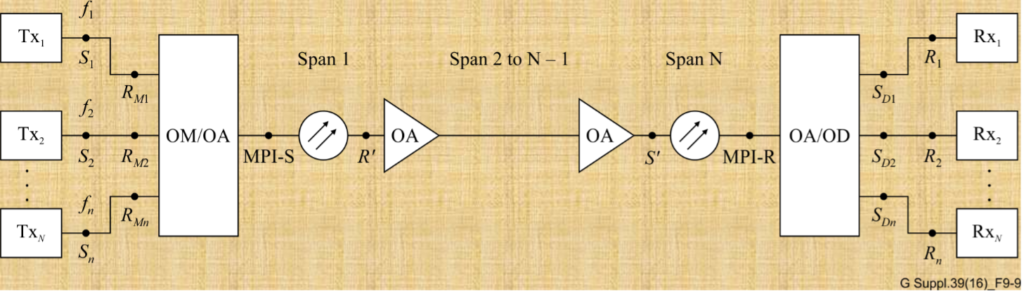

What is an Optical Network?

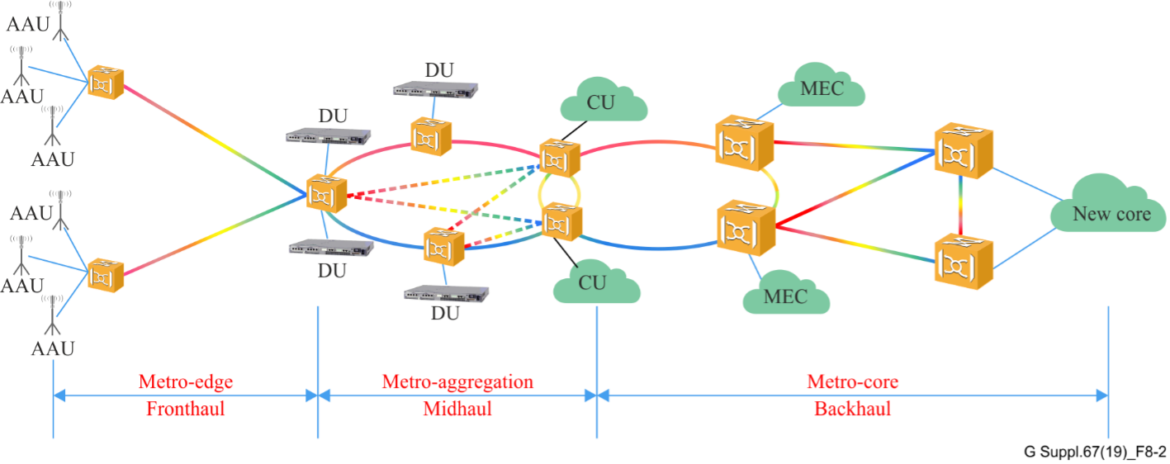

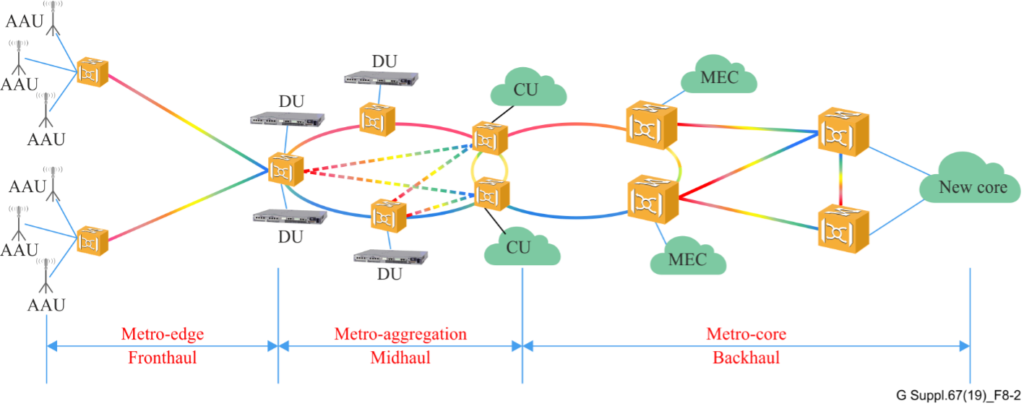

An optical network is a communication network that uses optical fibers as the primary transmission medium. Optical networks are used for high-speed internet connections, telephone networks, and cable television systems.

What are the Benefits of Fiber Optic Technology?

Fiber optic technology offers several benefits over traditional copper wire technology, including:

- Faster data transfer speeds

- Greater bandwidth capacity

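- Lower signal loss per kilometer, so signals can travel farther before they need to be amplified or regenerated

- Immunity to electromagnetic interference, because glass fiber does not conduct electricity

- Greater reliability, since glass fiber does not corrode the way copper does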

- Longer lifespan

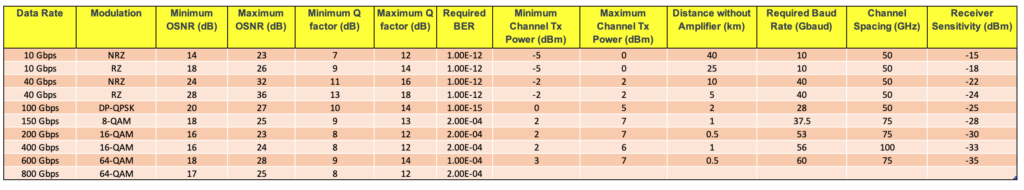

How Fast is Fiber Optic Internet?

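Residential fiber plans commonly offer symmetrical download and upload speeds of 1 gigabit per second (Gbps), and some providers offer multi-gigabit tiers such as 2, 5, or 10 Gbps. This is faster than typical copper-based connections, especially for uploads. The fiber itself can carry far more than any residential plan; the practical limit is set by the equipment at each end and by the provider's network.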
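Advertised speeds are given in bits per second, while file sizes are usually given in bytes, so a quick calculation helps put the numbers in context. The sketch below estimates an ideal transfer time; the `efficiency` parameter is an assumed factor for protocol overhead and defaults to 1.0 (no overhead).

```python
def transfer_time_s(size_gigabytes: float, rate_gbps: float, efficiency: float = 1.0) -> float:
    """Seconds to move size_gigabytes (10^9 bytes) over a link of rate_gbps (10^9 bits/s).

    efficiency is an assumed fraction of the line rate left after protocol overhead.
    """
    if rate_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("rate must be positive and efficiency must be in (0, 1]")
    bits = size_gigabytes * 8e9  # 8 bits per byte
    return bits / (rate_gbps * 1e9 * efficiency)

for rate in (0.1, 1.0, 10.0):  # 100 Mbps, 1 Gbps, 10 Gbps
    print(f"{rate:>5} Gbps: 50 GB in {transfer_time_s(50, rate):.0f} s")
```

At 1 Gbps, a 50 GB file takes 400 seconds (under 7 minutes) in this ideal case, compared with 4,000 seconds at 100 Mbps; real transfers are slower because of protocol overhead and server limits.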

How is Fiber Optic Internet Installed?

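Fiber optic internet is installed by running fiber optic cables from the provider's central office or a local distribution hub to the homes or businesses that need internet access. The cables are either buried in trenches or conduit or strung overhead on utility poles, and a final "drop" cable brings the fiber into the building, where it ends at an optical network terminal (ONT) that connects to the customer's router.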

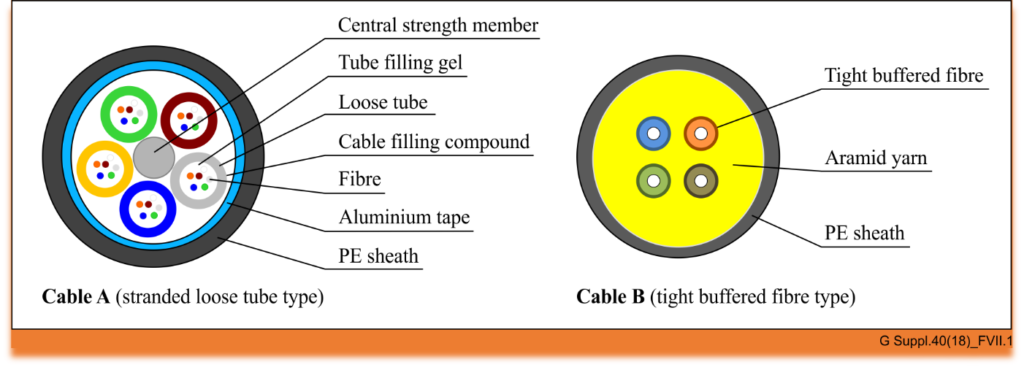

What are the Different Types of Fiber Optic Cables?

There are two main types of fiber optic cables:

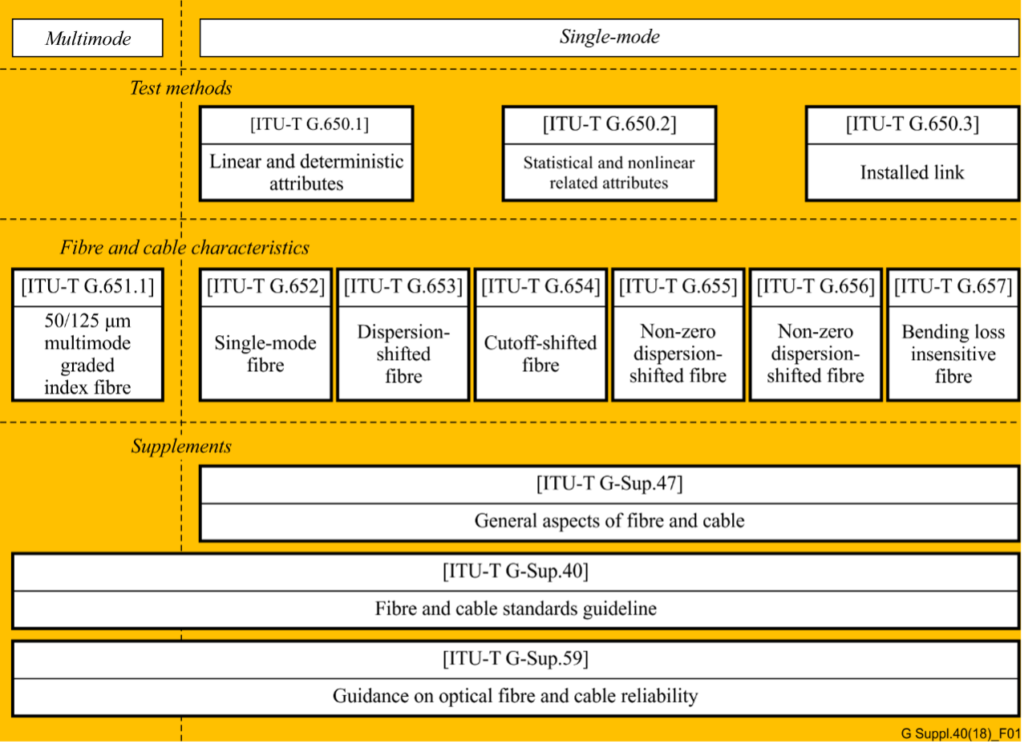

Single-Mode Fiber

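Single-mode fiber has a small core, typically about 8 to 10 micrometers (µm) in diameter, that allows only one path (mode) of light to propagate. Because there is no spreading between different paths, and because it is usually operated at 1310 nm or 1550 nm where the glass loses less light, it can carry signals over much longer distances with less attenuation.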

Multi-Mode Fiber

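Multi-mode fiber has a larger core, typically 50 µm or 62.5 µm in diameter, so light travels along many paths (modes) at once. The larger core makes it easier to couple light into the fiber, so it can use less expensive light sources such as LEDs and VCSELs (vertical-cavity surface-emitting lasers), but the different path lengths spread the signal out, which limits it to shorter distances.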

What is the Difference Between Single-Mode and Multi-Mode Fiber?

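The main difference between single-mode and multi-mode fiber is the core diameter, and with it the number of light paths the fiber supports. Single-mode fiber's small core carries a single mode, which avoids the spreading that occurs when light travels along paths of different lengths (modal dispersion), making it the choice for long-distance and high-bandwidth links. Multi-mode fiber's larger core carries many modes; it is cheaper to deploy with short-reach optics but is limited by modal dispersion to shorter links, such as those inside buildings and data centers.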
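Whether a fiber behaves as single-mode or multi-mode can be estimated from its normalized frequency, V = (2πa/λ)·NA, where a is the core radius, λ the wavelength, and NA the numerical aperture. A step-index fiber guides only one mode when V < 2.405. The sketch below applies this rule; the core radii, NA values, and wavelengths are assumed illustrative numbers, and the mode-count formula V²/2 is a rough large-V approximation for step-index fiber (graded-index multi-mode fiber, the common type, supports roughly half as many modes).

```python
import math

SINGLE_MODE_CUTOFF_V = 2.405  # first zero of the Bessel function J0 (step-index fibers)

def v_number(core_radius_um: float, wavelength_um: float, na: float) -> float:
    """Normalized frequency V = (2*pi*a / lambda) * NA."""
    return 2 * math.pi * core_radius_um / wavelength_um * na

def is_single_mode(v: float) -> bool:
    """True when only the fundamental mode is guided (step-index approximation)."""
    return v < SINGLE_MODE_CUTOFF_V

def approx_mode_count(v: float) -> int:
    """1 for single-mode; otherwise the large-V step-index estimate M ~ V^2 / 2."""
    return 1 if is_single_mode(v) else round(v * v / 2)

# Assumed example fibers: (core radius in um, NA, operating wavelength in um)
examples = {
    "small core": (4.1, 0.12, 1.55),
    "large core": (25.0, 0.20, 0.85),
}

for name, (radius_um, na, wavelength_um) in examples.items():
    v = v_number(radius_um, wavelength_um, na)
    print(f"{name}: V = {v:.2f}, single-mode = {is_single_mode(v)}, "
          f"approx. modes = {approx_mode_count(v)}")
```

For these values the small-core fiber has V of about 2.0 and is single-mode, while the large-core fiber has V of about 37 and supports hundreds of modes.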

What is the Maximum Distance for Fiber Optic Cables?

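The maximum distance depends on the type of fiber, the transmitter and receiver, and the data rate. Common Ethernet standards illustrate the range: over single-mode fiber, 10GBASE-LR is specified for links up to 10 kilometers and 10GBASE-ER for up to 40 kilometers, while over multi-mode fiber 10GBASE-SR reaches about 300 meters on OM3 fiber and 400 meters on OM4. Lower data rates reach farther on multi-mode fiber; 100BASE-FX, for example, supports about 2 kilometers. With optical amplifiers, single-mode links can span thousands of kilometers.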
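For a given pair of optics, a loss-limited reach can be estimated with a simple power budget: the transmitter power minus the receiver sensitivity, less connector and splice losses and a safety margin, divided by the fiber's loss per kilometer. The sketch below uses hypothetical transceiver figures; real values come from the module and fiber datasheets, and dispersion can limit a link before loss does.

```python
def max_reach_km(
    tx_power_dbm: float,
    rx_sensitivity_dbm: float,
    fiber_loss_db_per_km: float,
    connector_loss_db: float = 0.0,
    splice_loss_db: float = 0.0,
    margin_db: float = 3.0,
) -> float:
    """Loss-limited link length from a simple optical power budget."""
    if fiber_loss_db_per_km <= 0:
        raise ValueError("fiber loss must be positive")
    budget_db = tx_power_dbm - rx_sensitivity_dbm  # total loss the link can tolerate
    fixed_losses_db = connector_loss_db + splice_loss_db + margin_db
    available_db = budget_db - fixed_losses_db  # what is left for the fiber itself
    return max(0.0, available_db / fiber_loss_db_per_km)

# Hypothetical link: -3 dBm transmitter, -20 dBm receiver sensitivity,
# 0.35 dB/km fiber, two connectors at 0.5 dB, four splices at 0.1 dB, 3 dB margin.
reach = max_reach_km(-3.0, -20.0, 0.35, connector_loss_db=1.0, splice_loss_db=0.4)
print(f"loss-limited reach: {reach:.1f} km")
```

With these assumed numbers the budget is 17 dB, 4.4 dB goes to connectors, splices, and margin, and the remaining 12.6 dB allows about 36 km of fiber.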

What is Fiber Optic Attenuation?

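Fiber optic attenuation is the loss of optical power as light travels through a fiber optic cable. It is caused by absorption in the glass, scattering from tiny variations in the material (Rayleigh scattering), and losses at bends, splices, and connectors. Attenuation is expressed in decibels per kilometer (dB/km); standard single-mode fiber typically loses about 0.3 to 0.4 dB/km at 1310 nm and about 0.2 dB/km at 1550 nm.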
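Because attenuation is quoted in decibels, losses along a link simply add. The sketch below shows the standard conversions between dBm (power relative to 1 mW) and milliwatts and applies a per-kilometer loss to a launch power; the 0.2 dB/km figure in the example is an assumed typical value for single-mode fiber at 1550 nm.

```python
import math

def dbm_to_mw(power_dbm: float) -> float:
    """Convert power in dBm to milliwatts: P_mW = 10^(P_dBm / 10)."""
    return 10 ** (power_dbm / 10)

def mw_to_dbm(power_mw: float) -> float:
    """Convert power in milliwatts to dBm: P_dBm = 10 * log10(P_mW)."""
    return 10 * math.log10(power_mw)

def loss_db(power_in_mw: float, power_out_mw: float) -> float:
    """Attenuation between two points: 10 * log10(P_in / P_out)."""
    return 10 * math.log10(power_in_mw / power_out_mw)

def power_after_fiber_dbm(launch_dbm: float, alpha_db_per_km: float, length_km: float) -> float:
    """Output power after length_km of fiber with attenuation alpha (dB/km)."""
    return launch_dbm - alpha_db_per_km * length_km

out_dbm = power_after_fiber_dbm(0.0, 0.2, 50.0)  # 1 mW launched into 50 km (assumed values)
print(f"{out_dbm:.1f} dBm = {dbm_to_mw(out_dbm):.3f} mW")
```

Launching 1 mW (0 dBm) into 50 km at 0.2 dB/km leaves -10 dBm, or 0.1 mW: 10 dB of loss means only a tenth of the power arrives.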

What is Fiber Optic Dispersion?

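Fiber optic dispersion is the spreading of light pulses in time as they travel through a fiber optic cable. If pulses spread too much, neighboring pulses overlap and the receiver can no longer tell them apart, which limits the data rate and distance. The main types are modal dispersion, where light following different paths in multi-mode fiber arrives at different times; chromatic dispersion, where the different wavelengths within a signal travel at slightly different speeds; and polarization mode dispersion, where the two polarizations of light travel at slightly different speeds. Dispersion accumulates with the length of the fiber.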
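Chromatic dispersion in single-mode fiber is often estimated as Δτ = |D| · L · Δλ, where D is the fiber's dispersion coefficient in ps/(nm·km), L the length in km, and Δλ the spectral width of the source in nm. The sketch below computes this broadening and compares it with the bit period; D = 17 ps/(nm·km) is a typical value for standard single-mode fiber near 1550 nm, while the 80 km length and 0.1 nm source width are assumptions chosen for illustration.

```python
def chromatic_broadening_ps(d_ps_per_nm_km: float, length_km: float, spectral_width_nm: float) -> float:
    """Pulse spreading from chromatic dispersion: |D| * L * delta_lambda, in picoseconds."""
    return abs(d_ps_per_nm_km) * length_km * spectral_width_nm

def bit_period_ps(rate_gbps: float) -> float:
    """Duration of one bit in picoseconds at the given line rate."""
    return 1000.0 / rate_gbps

spread = chromatic_broadening_ps(17.0, 80.0, 0.1)  # assumed link and source
period = bit_period_ps(10.0)  # 10 Gbps
print(f"broadening {spread:.0f} ps vs bit period {period:.0f} ps "
      f"(ratio {spread / period:.2f})")
```

Here the pulses would spread by more than one bit period, so neighboring bits would overlap; such a link would typically call for a narrower source, dispersion compensation, or a shorter span.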

What is Fiber Optic Splicing?

Fiber optic splicing is the process of permanently joining two optical fibers end to end. Splicing is necessary when extending the length of a fiber optic cable, repairing a damaged cable, or attaching a pre-terminated pigtail to a cable.

What is the Difference Between Fusion Splicing and Mechanical Splicing?

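Fusion splicing joins the two fibers by melting their ends together, usually with an electric arc, after a fusion splicer aligns them precisely. Mechanical splicing aligns the two fiber ends inside a small mechanical splice device, which holds them together and usually contains index-matching gel to reduce reflections. Fusion splices typically have lower loss and reflection, while mechanical splices are quicker to make and require less expensive equipment.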

What is Fiber Optic Termination?

Fiber optic termination is the process of preparing the end of a fiber optic cable so it can connect to equipment. Termination usually means attaching a connector to the end of the cable so that it can be plugged into a device, patch panel, or adapter.

What is an Optical Coupler?

An optical coupler is a device that splits or combines light signals in a fiber optic network. Couplers are used to distribute signals from a single source to multiple destinations or to combine signals from multiple sources into a single fiber.

What is an Optical Splitter?

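An optical splitter is a type of optical coupler that divides the light from one input fiber among multiple output fibers. Splitters are used to distribute signals from a single source to multiple destinations, for example in passive optical networks (PONs), where one fiber from the provider serves many homes. Because the power is shared among the outputs, each output receives only a fraction of the input power.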
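An ideal splitter divides power equally, so each output is 10·log10(N) dB below the input for a 1:N split (about 3 dB per doubling). The sketch below computes this; the optional `excess_loss_db` term stands in for the extra loss of a real device, which varies by manufacturer and is an assumption here.

```python
import math

def splitter_loss_db(n_outputs: int, excess_loss_db: float = 0.0) -> float:
    """Per-output loss of a 1:N splitter: ideal 10*log10(N) plus any excess loss."""
    if n_outputs < 1:
        raise ValueError("a splitter needs at least one output")
    return 10 * math.log10(n_outputs) + excess_loss_db

for n in (2, 8, 32):
    print(f"1:{n} split: {splitter_loss_db(n):.1f} dB ideal loss per output")

# Cascading a 1:4 and a 1:8 splitter gives 32 outputs; the losses add in dB.
cascade = splitter_loss_db(4) + splitter_loss_db(8)
print(f"1:4 then 1:8: {cascade:.1f} dB")
```

The cascade gives the same ideal loss as a single 1:32 splitter, about 15 dB, which is why the split ratio is a large term in a PON power budget.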

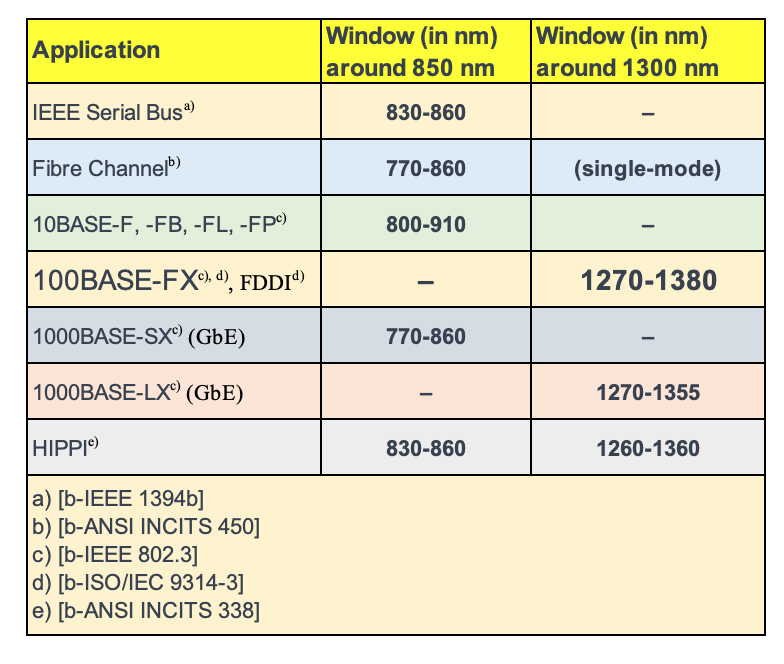

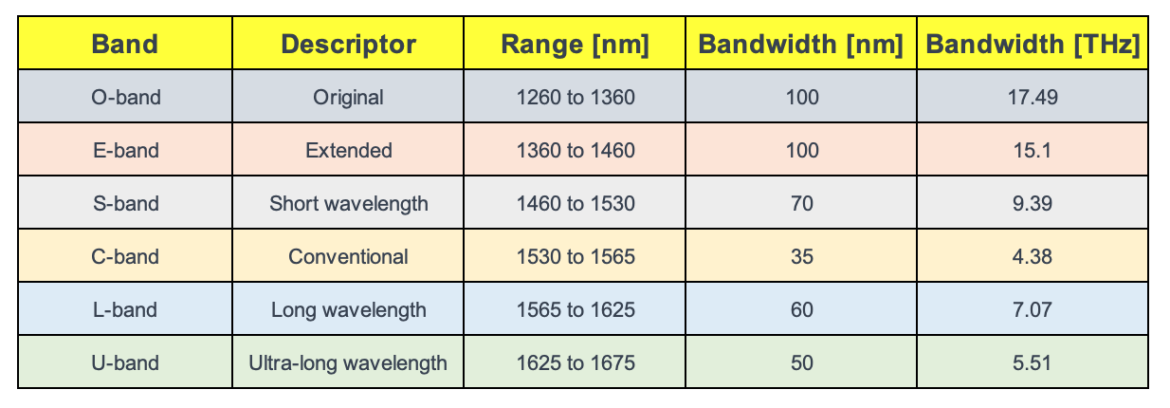

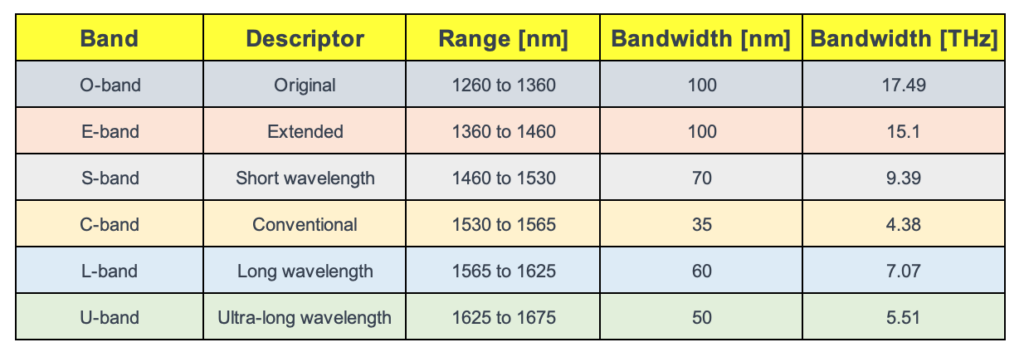

What is Wavelength-Division Multiplexing?

Wavelength-division multiplexing (WDM) is a technology that allows multiple signals to be transmitted over a single fiber by carrying each one on a different wavelength (color) of light. A multiplexer combines the signals into a single fiber, and a demultiplexer at the far end separates them again.

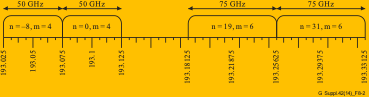

What is Dense Wavelength-Division Multiplexing?

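Dense wavelength-division multiplexing (DWDM) is a form of WDM that uses very closely spaced wavelengths, typically 100 GHz (about 0.8 nm) or 50 GHz (about 0.4 nm) apart on the ITU-T G.694.1 frequency grid in the 1550 nm region. Because so many channels fit into one fiber, DWDM is used to increase the capacity of long-haul and metro fiber optic networks.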
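The ITU-T G.694.1 grid is defined in frequency, anchored at 193.1 THz, with channels spaced at multiples of a fixed step. The sketch below generates a few 100 GHz channels around the anchor and converts them to wavelengths with λ = c/f; the helper names and the choice of channels are illustrative.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum
ANCHOR_THZ = 193.1  # ITU-T G.694.1 grid anchor frequency

def dwdm_channel_thz(n: int, spacing_ghz: float = 100.0) -> float:
    """Center frequency of grid channel n (n may be negative)."""
    return ANCHOR_THZ + n * spacing_ghz / 1000.0

def thz_to_nm(freq_thz: float) -> float:
    """Vacuum wavelength in nanometers for a frequency in terahertz."""
    return C_M_PER_S / (freq_thz * 1e12) * 1e9

for n in range(-2, 3):
    f = dwdm_channel_thz(n)
    print(f"n={n:+d}: {f:.1f} THz -> {thz_to_nm(f):.2f} nm")
```

The anchor corresponds to about 1552.52 nm, and adjacent 100 GHz channels are about 0.8 nm apart in this region; halving the spacing to 50 GHz doubles the number of channels in the same band.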

What is Coarse Wavelength-Division Multiplexing?

Coarse wavelength-division multiplexing (CWDM) is a form of WDM that uses much wider channel spacing than DWDM: 20 nm, with up to 18 channels between 1271 nm and 1611 nm as defined in ITU-T G.694.2. The wide spacing allows cheaper lasers without temperature control, so CWDM is used for shorter-distance applications with lower capacity requirements.
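The CWDM grid in ITU-T G.694.2 is defined in wavelength rather than frequency. The sketch below lists its 18 nominal wavelengths and converts the 20 nm spacing into an equivalent frequency spacing near 1551 nm (Δf ≈ c·Δλ/λ²), which shows how much wider it is than a 100 GHz DWDM step. The function names are illustrative.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum

def cwdm_grid_nm() -> list[int]:
    """Nominal CWDM center wavelengths: 1271 nm to 1611 nm in 20 nm steps."""
    return [1271 + 20 * k for k in range(18)]

def spacing_nm_to_ghz(spacing_nm: float, center_nm: float) -> float:
    """Approximate frequency spacing for a small wavelength spacing: c * d_lambda / lambda^2."""
    return C_M_PER_S * (spacing_nm * 1e-9) / (center_nm * 1e-9) ** 2 / 1e9

grid = cwdm_grid_nm()
print(f"{len(grid)} channels: {grid[0]} nm ... {grid[-1]} nm")
ghz = spacing_nm_to_ghz(20.0, 1551.0)
print(f"20 nm at 1551 nm is about {ghz:.0f} GHz, or {ghz / 100:.0f}x a 100 GHz DWDM step")
```

One 20 nm CWDM step near 1551 nm spans about 2.5 THz, roughly 25 times the 100 GHz DWDM spacing, which reflects the trade-off between CWDM's low-cost lasers and DWDM's channel density.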

What is Bidirectional Wavelength-Division Multiplexing?

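Bidirectional wavelength-division multiplexing (often called BiDi) is a technology that allows signals to be transmitted in both directions over a single fiber by using a different wavelength for each direction, such as 1310 nm one way and 1550 nm the other. It halves the number of fibers needed for a link, which increases the effective capacity of existing fiber.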

What is Fiber Optic Testing?

Fiber optic testing is the process of testing the performance of fiber optic cables and components. Testing is done to ensure that the cables and components meet industry standards and to troubleshoot problems in the network.

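What is an Optical Time-Domain Reflectometer?

An optical time-domain reflectometer (OTDR) is a device used to test fiber optic cables by sending short pulses of light into one end and measuring the light that returns, both reflections from connectors and breaks and light scattered back along the fiber. By timing how long the returned light takes to arrive, the OTDR calculates where along the fiber each event occurs. OTDRs are used to locate breaks, bends, splices, and other faults in fiber optic cables.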
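An OTDR converts the round-trip time of returned light into distance as d = c·t / (2n), where n is the fiber's group index (entered on the instrument from the fiber datasheet) and the factor of 2 accounts for the trip out and back. The sketch below applies this formula; the group index of 1.468 is an assumed typical value for single-mode fiber.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum

def event_distance_m(round_trip_s: float, group_index: float = 1.468) -> float:
    """Distance to a reflective or scattering event from its round-trip delay."""
    if round_trip_s < 0 or group_index <= 1.0:
        raise ValueError("delay must be non-negative and group index greater than 1")
    return C_M_PER_S * round_trip_s / (2 * group_index)

# A reflection (for example, from a break) observed 100 microseconds after the pulse was sent:
print(f"event at {event_distance_m(100e-6) / 1000:.2f} km")
```

An error in the group index setting scales every distance on the trace by the same proportion, so using the correct value from the fiber datasheet matters when locating a fault.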

What is an Optical Spectrum Analyzer?

An optical spectrum analyzer (OSA) is a device used to measure optical power as a function of wavelength. OSAs are used to analyze the output of fiber optic transmitters, check the channels in WDM systems, and measure the characteristics of fiber optic components.

What is an Optical Power Meter?

An optical power meter is a device used to measure the power of a light signal in a fiber optic cable, usually in dBm or milliwatts. Power meters are used to measure the output of fiber optic transmitters and, together with a calibrated light source, to measure the loss of fiber optic cables and components.

What is a Fiber Optic Connector?

A fiber optic connector is a device attached to the end of a fiber optic cable that aligns the fiber precisely with another fiber or with a device port. Connectors are designed to be easily plugged and unplugged, allowing for easy installation and maintenance; common types include LC, SC, ST, and MPO.

What is a Fiber Optic Adapter?

A fiber optic adapter is a device used to connect two fiber optic connectors together. Adapters are used to extend the length of a fiber optic cable or to connect different types of fiber optic connectors.

What is a Fiber Optic Patch Cord?

A fiber optic patch cord is a cable with connectors on both ends used to connect devices or equipment in a fiber optic network. Patch cords are available in different lengths and connector types to meet different network requirements.

What is a Fiber Optic Pigtail?

A fiber optic pigtail is a short length of fiber optic cable with a factory-installed connector on one end and bare fiber on the other. The bare end is usually fusion spliced to a fiber in a cable, giving that fiber a precisely polished connector without having to install one in the field.

What is a Fiber Optic Attenuator?

A fiber optic attenuator is a device used to reduce the power of a light signal in a fiber optic network by a known amount. Attenuators are used to prevent signal overload at a receiver, for example on short links with powerful transmitters, or to match the power levels of different components in the network.
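Choosing an attenuator amounts to finding a value that brings the received power inside the receiver's operating range: below its overload (maximum input) level but above its sensitivity. The sketch below computes that range; the power levels in the example are hypothetical, and real limits come from the transceiver datasheet.

```python
def attenuation_range_db(rx_power_dbm: float, overload_dbm: float, sensitivity_dbm: float) -> tuple[float, float]:
    """Return (minimum, maximum) attenuation in dB that keeps the receiver in range."""
    if sensitivity_dbm >= overload_dbm:
        raise ValueError("sensitivity must be below the overload level")
    minimum = max(0.0, rx_power_dbm - overload_dbm)  # enough to drop below overload
    maximum = rx_power_dbm - sensitivity_dbm  # more than this falls below sensitivity
    if maximum < minimum:
        raise ValueError("received power is already below the receiver's sensitivity")
    return minimum, maximum

# Hypothetical short link: -1 dBm arriving at a receiver rated -7 dBm overload, -20 dBm sensitivity
low, high = attenuation_range_db(-1.0, -7.0, -20.0)
print(f"use between {low:.0f} dB and {high:.0f} dB of attenuation")
```

Picking a value well inside this range, rather than at either edge, leaves margin on both sides.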

What is a Fiber Optic Isolator?

A fiber optic isolator is a device that lets light pass in only one direction, preventing light from reflecting back into the source. Isolators are used to protect lasers and optical amplifiers, whose output can become unstable or noisy when reflected light returns into them.

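What is a Fiber Optic Circulator?

A fiber optic circulator is a device with three or more ports that routes light in one direction around its ports: light entering port 1 exits port 2, and light entering port 2 exits port 3. Circulators are used to separate signals traveling in opposite directions on the same fiber, for example in bidirectional links.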

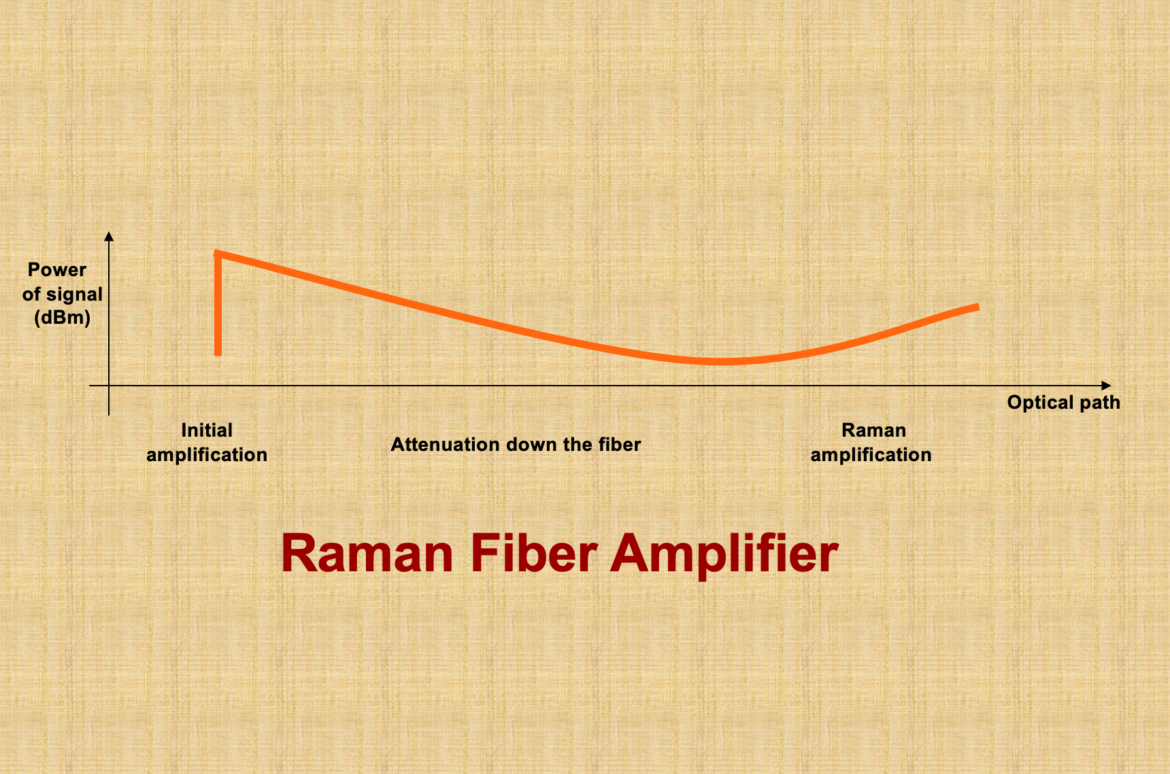

What is a Fiber Optic Amplifier?

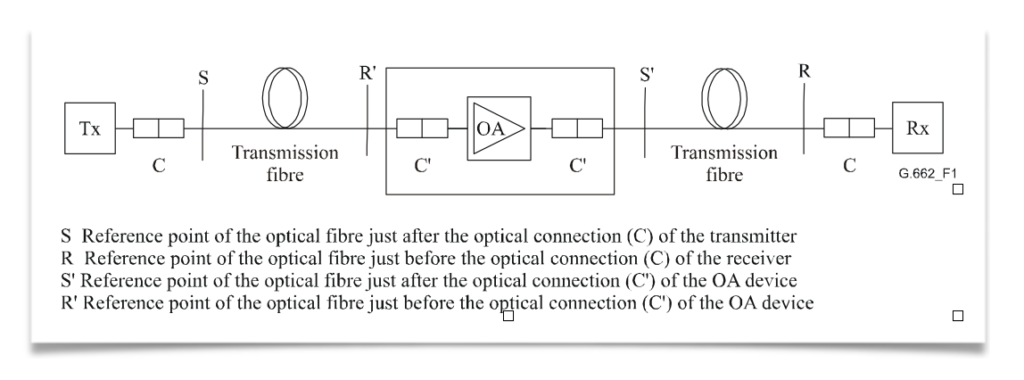

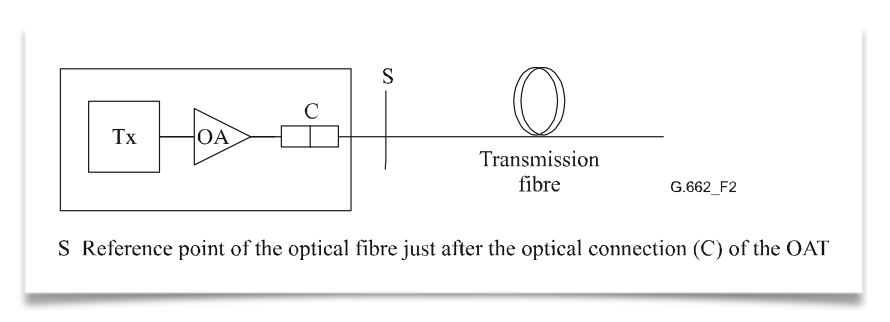

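A fiber optic amplifier is a device used to boost the power of a light signal in a fiber optic network without converting it to an electrical signal. The most common type is the erbium-doped fiber amplifier (EDFA), which amplifies signals in the 1550 nm region. Amplifiers are used to extend the distance that a signal can travel without the need for regeneration, and a single amplifier can boost all the channels of a WDM system at once.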

What is a Fiber Optic Modulator?

A fiber optic modulator is a device used to vary the amplitude or phase of a light signal so that it carries information. Modulators are used in applications such as high-speed fiber optic communication, where they encode data onto the light from a laser, and fiber optic sensing.
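A common example of an amplitude modulator is the Mach-Zehnder modulator, which splits the light into two arms, shifts the phase of one arm relative to the other with an applied voltage, and recombines them. For an ideal device the transmitted intensity follows cos²(Δφ/2) with Δφ = πV/Vπ, where Vπ is the voltage that produces a half-wave (π) phase shift. The sketch below models only this ideal transfer curve; the Vπ of 3 V is a hypothetical value, and real devices add insertion loss and finite extinction.

```python
import math

def mzm_transmission(voltage_v: float, v_pi_v: float, bias_phase_rad: float = 0.0) -> float:
    """Fraction of input power transmitted by an ideal Mach-Zehnder modulator."""
    phase_difference = math.pi * voltage_v / v_pi_v + bias_phase_rad
    return math.cos(phase_difference / 2) ** 2

V_PI = 3.0  # hypothetical half-wave voltage in volts
for v in (0.0, 1.5, 3.0):
    print(f"{v:.1f} V -> {mzm_transmission(v, V_PI):.2f} of input power")
```

Switching the drive between 0 V and Vπ turns the light fully on and off, which is one way to produce simple on-off keying; operating around the midpoint (Vπ/2) gives the most linear response for analog signals.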

What is a Fiber Optic Switch?

A fiber optic switch is a device used to redirect light signals from one fiber to another without converting them to electrical signals. Switches are used to reroute traffic around failures and to reconfigure connections between devices in a network.

What is a Fiber Optic Demultiplexer?

A fiber optic demultiplexer is a device used to separate multiple signals of different wavelengths that are combined in a single fiber. Demultiplexers are used in wavelength-division multiplexing applications.

What is a Fiber Optic Multiplexer?

A fiber optic multiplexer is a device used to combine multiple signals of different wavelengths into a single fiber. Multiplexers are used in wavelength-division multiplexing applications.

What is a Fiber Optic Transceiver?

A fiber optic transceiver is a device that combines a transmitter and a receiver into a single module. Transceivers, often in pluggable form factors such as SFP and QSFP, are used to send and receive data over a fiber optic network.

What is a Fiber Optic Media Converter?

A fiber optic media converter is a device that converts signals between fiber and another medium, most commonly twisted-pair copper Ethernet. Media converters are used to connect fiber optic links to equipment or networks that only have copper ports.

What is a Fiber Optic Splice Closure?

A fiber optic splice closure is a device used to protect fiber optic splices from environmental factors such as moisture and dust. Splice closures are used in outdoor fiber optic applications.

What is a Fiber Optic Distribution Box?

A fiber optic distribution box is an enclosure where a feeder cable is split out to multiple drop cables serving individual devices, rooms, or subscribers. Distribution boxes often hold splices, splitters, and connectors, and are used to organize and route signals to multiple endpoints in a fiber optic network.

What is a Fiber Optic Patch Panel?

A fiber optic patch panel is a device used to connect multiple fiber optic cables to a network. Patch panels are used to organize and manage fiber optic connections in a network.

What is a Fiber Optic Cable Tray?

A fiber optic cable tray is a support structure used to hold and protect fiber optic cables in buildings and data centers. Cable trays are used to organize and route fiber optic cables along walls, ceilings, and equipment racks.

What is a Fiber Optic Duct?

A fiber optic duct is a pipe or channel, usually installed underground, used to protect fiber optic cables from environmental factors such as moisture and dust. Ducts are used in outdoor fiber optic applications.

What is a Fiber Optic Raceway?

A fiber optic raceway is an enclosed channel used to route and protect fiber optic cables, typically inside buildings. Raceways are used to organize and manage fiber optic cabling in a network.

What is a Fiber Optic Conduit?

A fiber optic conduit is a protective tube used to house fiber optic cables. Conduits are used in outdoor and underground fiber optic applications to protect cables from environmental factors and physical damage.