Posts tagged “Optical network”

Optical network

Showing 1 - 10 of 20 results

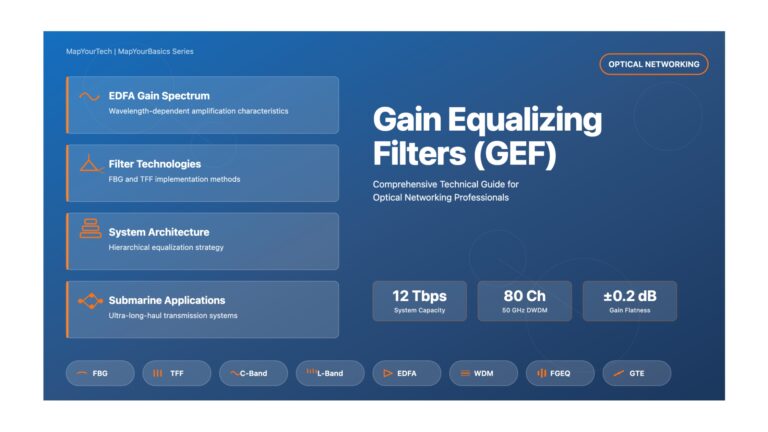

Gain Equalizing Filters (GEF) – Comprehensive Visual Guide | MapYourTech

Overview of Gain Equalizing Filters (GEF): Comprehensive Technical Guide for...

Free · November 30, 2025

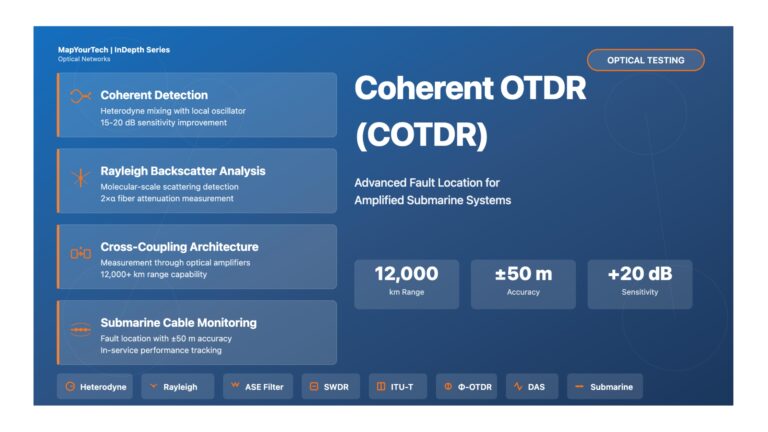

Coherent Optical Time Domain Reflectometry (COTDR) – Comprehensive Visual Guide

Coherent Optical Time Domain Reflectometry (COTDR): Technical Guide for Optical...

Free · November 29, 2025

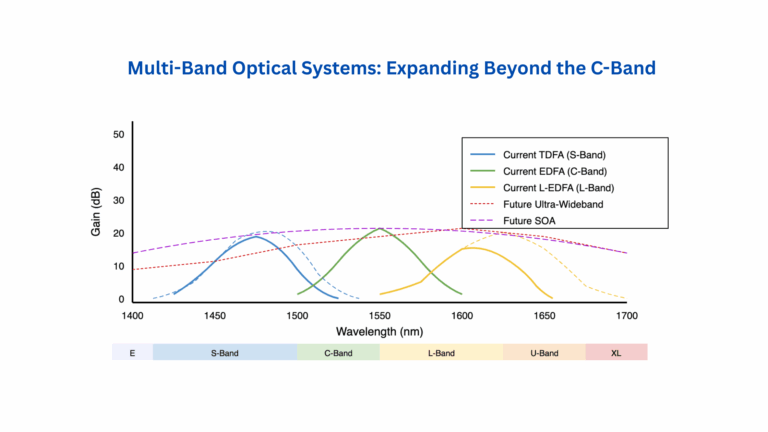

Methods to Pump More Bits in Less Spectrum in Optical Fiber

Free · November 19, 2025

Membership Required: You must be a member to access this content.

Free · May 8, 2025

Syslog is one of the most widely used protocols for logging system events, providing network and optical device administrators with...

Free · March 26, 2025

A Digital Twin Network (DTN) is a virtual representation of a physical network, providing real-time analysis, diagnosis, and control of...

Free · March 26, 2025

Here we discuss the advantages of OTN (Optical Transport Network) over SDH/SONET. The OTN architecture concept was developed...

Free · March 26, 2025

The maintenance signals defined in [ITU-T G.709] provide network connection status information in the form of payload missing indication (PMI), backward...

Free · March 26, 2025

The Optical Time Domain Reflectometer (OTDR) is useful for testing the integrity of fiber optic cables. An optical time-domain reflectometer (OTDR)...

Free · March 26, 2025

Understanding Q-Factor in Optical Communications

Signal Quality Metrics and BER Relationship. What is Q-Factor? Q...

Free · March 26, 2025


Tags

automation

ber

Chromatic Dispersion

coherent optical transmission

Data transmission

DWDM

edfa

EDFAs

Erbium-Doped Fiber Amplifiers

fec

Fiber optics

Fiber optic technology

Forward Error Correction

Latency

modulation

network automation

network management

Network performance

noise figure

optical

optical amplifiers

optical automation

Optical communication

Optical fiber

Optical network

optical network automation

optical networking

Optical networks

Optical performance

Optical signal-to-noise ratio

Optical transport network

OSNR

OTN

Q-factor

Raman Amplifier

SDH

Signal integrity

Signal quality


submarine

submarine cable systems

submarine communication

submarine optical networking

Telecommunications
