Posts tagged “Optical networks”

Optical networks

Showing 1 - 7 of 7 results

Understanding OpenROADM: Architecture and Implementation

OpenROADM (Open Reconfigurable Optical Add/Drop Multiplexer) represents a...

Free, November 15, 2025

RESTCONF (RESTful Configuration Protocol) is a network management protocol designed to provide a simplified, REST-based interface for managing network devices...

Free, March 26, 2025

Simple Network Management Protocol (SNMP) is one of the most widely used protocols for managing and monitoring network devices in...

Free, March 26, 2025

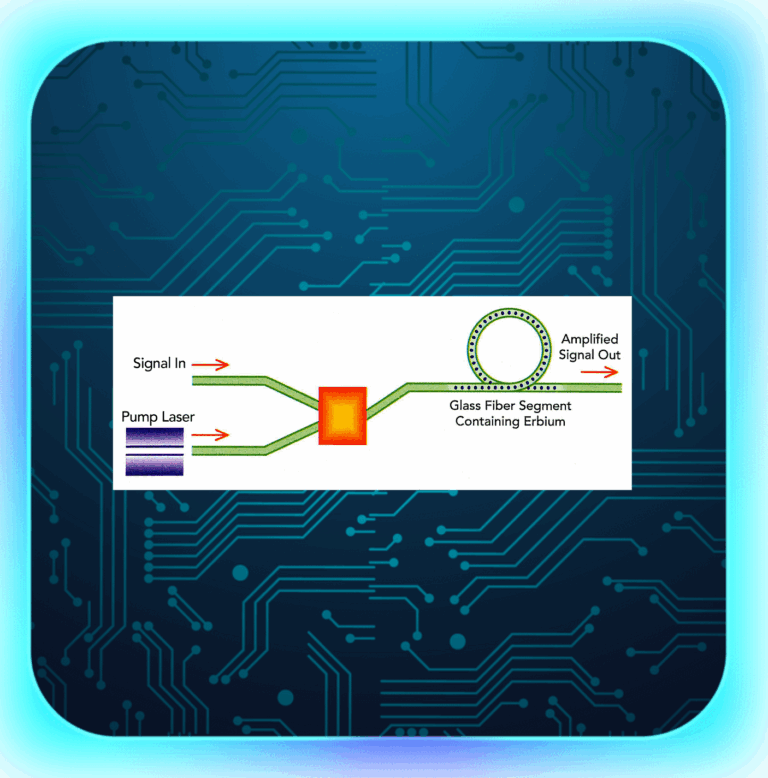

Optical amplifiers (OAs) are key components of modern communication networks. They help send data under the sea, over land and even...

Free, March 26, 2025

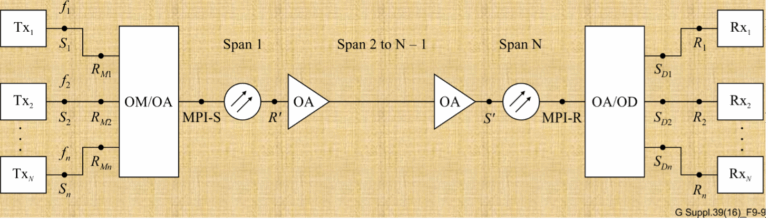

Optical networks are the backbone of the internet, carrying vast amounts of data over great distances at the speed of...

Free, March 26, 2025

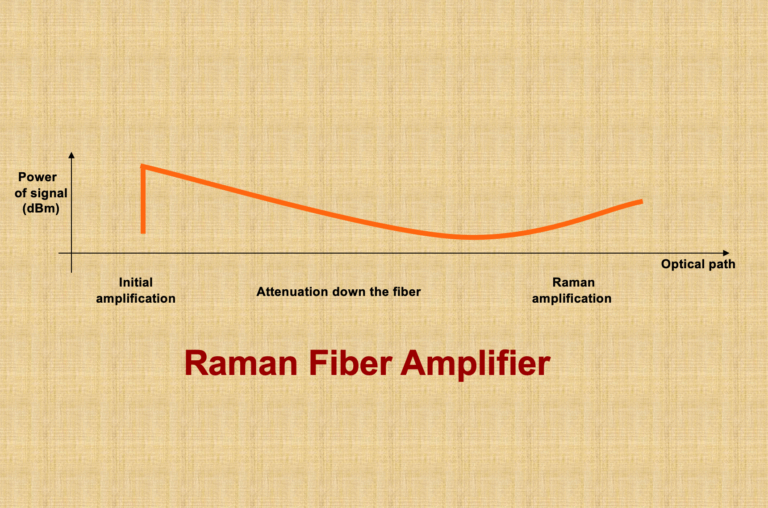

A Raman amplifier is a type of optical amplifier that utilizes stimulated Raman scattering (SRS) to amplify optical signals...

Free, March 26, 2025

A reboot is the process of restarting a device, which can help to resolve many issues that...

Free, March 26, 2025
