Posts tagged “Slider”


Simple Network Management Protocol (SNMP) is one of the most widely used protocols for managing and monitoring network devices in...

Free · March 26, 2025

The world of optical communication is undergoing a transformation with the introduction of Hollow Core Fiber (HCF) technology. This revolutionary...

Free · March 26, 2025

A Digital Twin Network (DTN) is a virtual representation of a physical network, providing real-time analysis, diagnosis, and control of...

Free · March 26, 2025

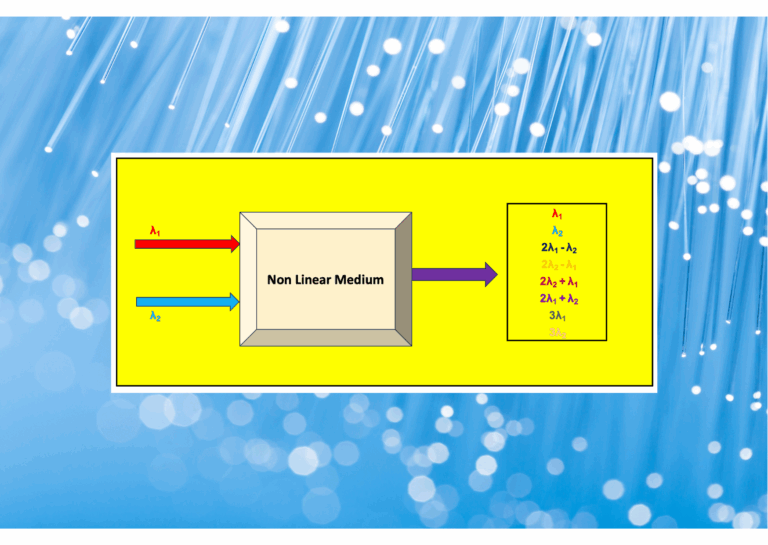

In optical fiber communications, a common assumption is that increasing the signal power will enhance performance. However, this isn't always...

Free · March 26, 2025

Mastering Job Interviews: A Strategic Guide to Showcasing Your Best Self and Landing Your...

Free · March 26, 2025

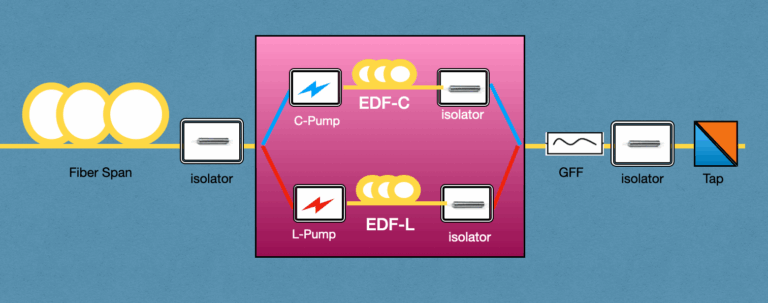

Exploring the C+L Bands in DWDM Networks: DWDM networks have traditionally operated within the C-band spectrum due to its lower...

Free · March 26, 2025

In the world of fiber-optic communication, the integrity of the transmitted signal is critical. As optical engineers, our primary...

Free · March 26, 2025

In this comprehensive exploration of 400G ZR and ZR+ optical communication standards, we delve into the advanced world of Probabilistic...

Free · March 26, 2025

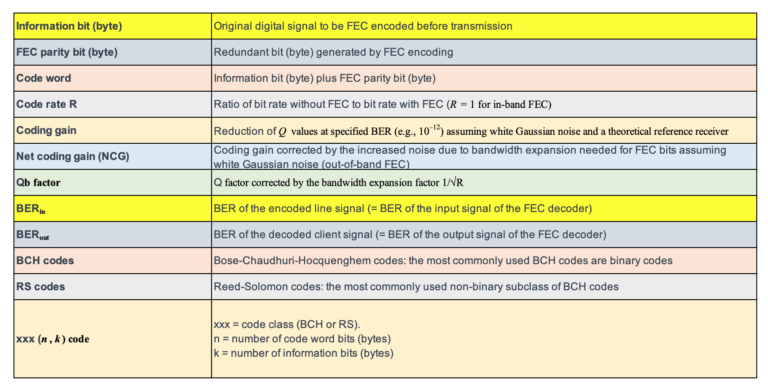

In the pursuit of ever-greater data transmission capabilities, forward error correction (FEC) has emerged as a pivotal technology, not just...

Free · March 26, 2025

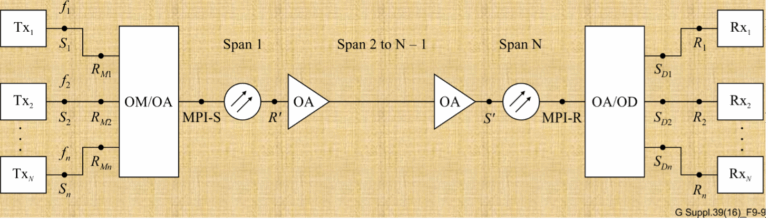

Optical networks are the backbone of the internet, carrying vast amounts of data over great distances at the speed of...

Free · March 26, 2025


Tags

automation

ber

Chromatic Dispersion

coherent optical transmission

Data transmission

DWDM

edfa

EDFAs

Erbium-Doped Fiber Amplifiers

fec

Fiber optics

Fiber optic technology

Forward Error Correction

Latency

modulation

network automation

network management

Network performance

noise figure

optical

optical amplifiers

optical automation

Optical communication

Optical fiber

Optical network

optical network automation

optical networking

Optical networks

Optical performance

Optical signal-to-noise ratio

Optical transport network

OSNR

OTN

Q-factor

Raman Amplifier

SDH

Signal integrity

Signal quality

Slider

submarine

submarine cable systems

submarine communication

submarine optical networking

Telecommunications

Ticker