Standards

Multi-Layer Encryption in Optical Fiber Networks: Comprehensive Visual Guide
Pros, Cons, and Strategic Implementation...
Free · January 29, 2026

Complete Optical Reach Classifications: SR, DR, FR, LR, ER, ZR – Technical Reference Guide
Comprehensive Technical...
Free · January 28, 2026

Common Optical Wavelengths: 850nm, 1310nm, 1550nm Use Cases and Technical Analysis
Understanding wavelength windows,...
Free · January 28, 2026

Digital Diagnostics Monitoring (DDM): Real-Time Transceiver Health Monitoring
Comprehensive Guide to DDM/DOM...
Free · January 28, 2026

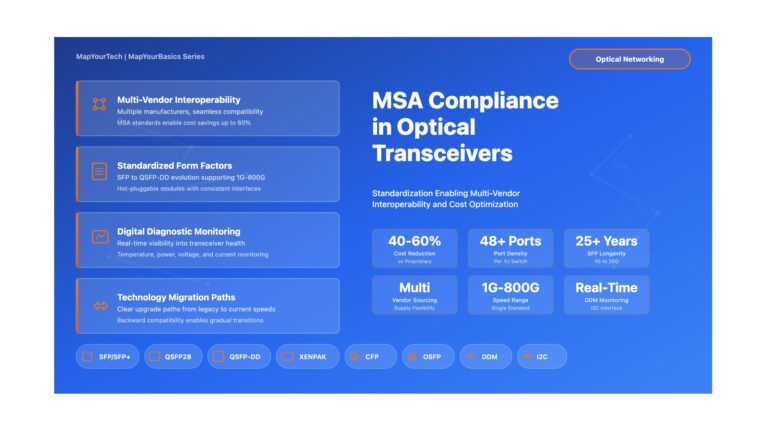

MSA Compliance in Optical Transceivers: Why Standards Are Essential
A Comprehensive...
Free · January 28, 2026

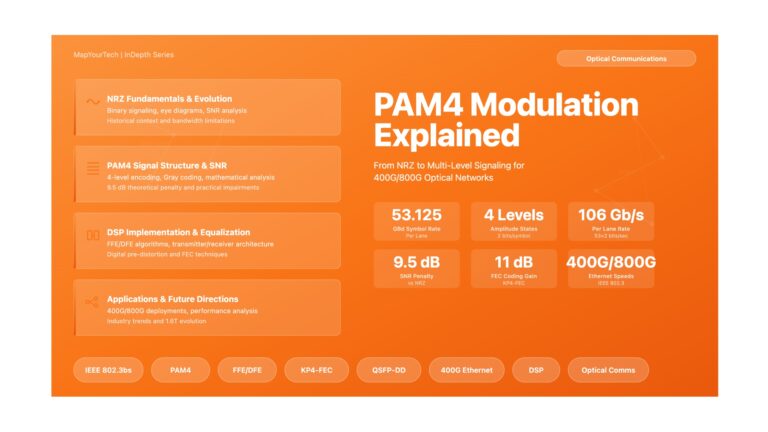

PAM4 Modulation Explained: From NRZ to Multi-Level Signaling
A comprehensive technical exploration...
Free · January 28, 2026

BiDi Transceivers: Single Fiber, Dual Wavelength Communication
Comprehensive Guide to Bidirectional Optical Transmission...
Free · January 28, 2026

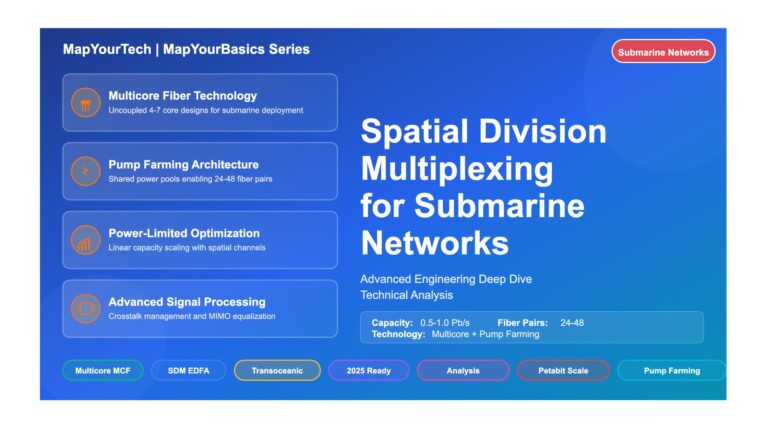

Spatial Division Multiplexing (SDM) for Submarine Networks: Advanced Deep Dive
An Advanced Engineering...
Free · January 27, 2026

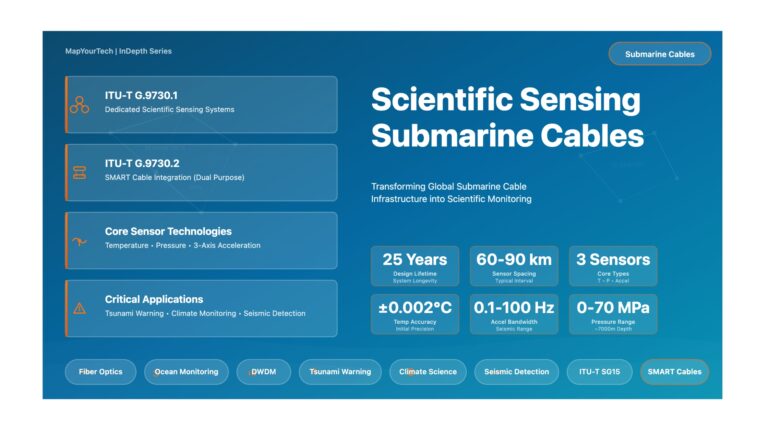

ITU-T G.9730.1 & G.9730.2: Scientific Sensing Submarine Cables – Complete Technical Guide
Free · January 27, 2026

Tags

automation

BER

Chromatic Dispersion

coherent optical transmission

Data transmission

DWDM

EDFA

EDFAs

Erbium-Doped Fiber Amplifiers

FEC

Fiber optics

Fiber optic technology

Forward Error Correction

Latency

modulation

network automation

network management

Network performance

noise figure

optical

optical amplifiers

optical automation

Optical communication

Optical fiber

Optical network

optical network automation

optical networking

Optical networks

Optical performance

Optical signal-to-noise ratio

Optical transport network

OSNR

OTN

Q-factor

Raman Amplifier

SDH

Signal integrity

Signal quality

submarine

submarine cable systems

submarine communication

submarine optical networking

Telecommunications