Forward Error Correction (FEC) has become an indispensable tool in modern optical communication, enhancing signal integrity and extending transmission distances. ITU-T Recommendations such as G.693, G.959.1, and G.698.1 define application codes for optical interfaces that incorporate FEC as specified in ITU-T G.709. In this post, we discuss the significance of Bit Error Ratio (BER) in FEC-enabled applications and how it influences optical transmitter and receiver performance.

The Basics of FEC in Optical Communications

FEC is a method of error control for data transmission, where the sender adds redundant data to its messages. This allows the receiver to detect and correct errors without the need for retransmission. In the context of optical networks, FEC is particularly valuable because it can significantly lower the BER after decoding, thus ensuring the accuracy and reliability of data across vast distances.
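To make the idea of "redundant data" concrete, here is a minimal sketch using a Hamming(7,4) code. This is a toy code chosen for illustration only; it is not the FEC defined in G.709, and the function names `encode` and `decode` are hypothetical. It shows the core principle: three parity bits are added to four data bits, and the receiver uses them to locate and flip a single corrupted bit without asking for retransmission.

```python
def encode(data):
    """Encode 4 data bits into a 7-bit Hamming codeword (3 parity bits added)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over codeword positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def decode(received):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(received)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # The syndrome is the 1-based position of the flipped bit (0 means no error).
    syndrome = s1 + 2 * s2 + 4 * s4
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


if __name__ == "__main__":
    message = [1, 0, 1, 1]
    sent = encode(message)
    corrupted = sent.copy()
    corrupted[4] ^= 1  # simulate one bit error on the line
    print("sent:     ", sent)
    print("received: ", corrupted)
    print("recovered:", decode(corrupted), "matches:", decode(corrupted) == message)
```

Real optical FEC works on the same principle but with far stronger codes and much longer blocks, which is what allows a comparatively poor BER at the receiver to be turned into a very low BER after decoding.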

BER Requirements in FEC-Enabled Applications

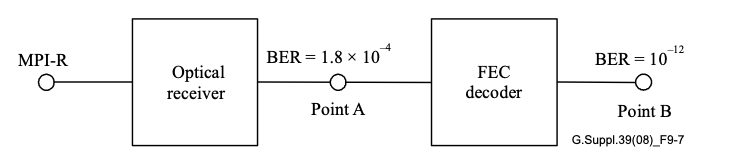

For certain optical transport unit rates (OTUk), the system BER requirement applies only after FEC correction. In these cases, the optical parameters are specified to achieve a BER no worse than 10⁻¹² at the FEC decoder’s output. This benchmark ensures that the data, once processed by the FEC decoder, maintains an extremely high level of accuracy, which is crucial for high-performance networks.

Practical Implications for Network Hardware

When it comes to testing and verifying the performance of optical hardware components intended for FEC-enabled applications, achieving a BER of 10⁻¹² at the decoder’s output is often sufficient. Attempting to test components at 10⁻¹² at the receiver output, prior to FEC decoding, can lead to unnecessarily stringent criteria that may not reflect the operational requirements of the application.

Adopting Appropriate BER Values for Testing

The selection of an appropriate BER for testing components depends on the specific application. Theoretical calculations suggest a BER of 1.8×10⁻⁴ at the receiver output (Point A) to achieve a BER of 10⁻¹² at the FEC decoder output (Point B). However, due to variations in error statistics, the average BER at Point A may need to be lower than the theoretical value to ensure the desired BER at Point B. In practice, a BER range of 10⁻⁵ to 10⁻⁶ is considered suitable for most applications.
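The theoretical relationship between Point A and Point B can be reproduced with a short calculation. The sketch below assumes the RS(255,239) code used by the G.709 FEC (8-bit symbols, up to 8 correctable symbols per codeword), independent random bit errors, and a decoder that passes uncorrectable codewords through unchanged. The function name `post_fec_ber` is hypothetical, and the formula is the standard textbook approximation, not a model of any particular decoder implementation.

```python
import math

N, K = 255, 239        # RS(255,239): codeword and payload length in symbols
T = (N - K) // 2       # correctable symbol errors per codeword (8)
BITS_PER_SYMBOL = 8


def post_fec_ber(pre_fec_ber):
    """Estimate the BER after RS(255,239) decoding, assuming random errors."""
    # Probability that a symbol contains at least one bit error.
    p_sym = -math.expm1(BITS_PER_SYMBOL * math.log1p(-pre_fec_ber))
    # Symbol error probability after decoding: codewords with more than T
    # symbol errors are left uncorrected, so their i errors out of N remain.
    p_sym_out = 0.0
    for i in range(T + 1, N + 1):
        p_sym_out += (i / N) * math.comb(N, i) * p_sym**i * (1 - p_sym) ** (N - i)
    # Convert the output symbol error probability back to a bit error ratio.
    return -math.expm1(math.log1p(-p_sym_out) / BITS_PER_SYMBOL)


if __name__ == "__main__":
    for ber_in in (1e-3, 1.8e-4, 1e-4, 1e-5):
        print(f"BER at Point A = {ber_in:.1e} -> BER at Point B ~ {post_fec_ber(ber_in):.1e}")
```

Under these assumptions, an input BER of 1.8×10⁻⁴ lands close to 10⁻¹² at the decoder output, and the output improves very steeply as the input BER drops. Real links do not always produce independent errors, which is one reason the average BER at Point A may need to be better than this idealized figure.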

Conservative Estimation for Receiver Sensitivity

By using a BER of 10⁻⁶ for component verification, the measurements of receiver sensitivity and optical path penalty at Point A will be conservative estimates of the values after FEC correction. This approach provides a practical and cost-effective method for ensuring component performance aligns with the rigorous demands of FEC-enabled systems.
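One way to see why this is conservative is to compare the signal quality each BER target demands. The sketch below assumes a simple Gaussian noise model, where BER = 0.5·erfc(Q/√2); this model and the helper name `q_factor` are illustrative assumptions, not something the Recommendations prescribe.

```python
import math
from statistics import NormalDist


def q_factor(ber):
    """Return Q such that BER = 0.5 * erfc(Q / sqrt(2)) (Gaussian noise model)."""
    # 0.5 * erfc(Q / sqrt(2)) is the standard normal tail probability beyond Q,
    # so Q is the negated inverse CDF evaluated at the BER.
    return -NormalDist().inv_cdf(ber)


if __name__ == "__main__":
    targets = [
        ("theoretical pre-FEC threshold", 1.8e-4),
        ("component verification", 1e-6),
        ("testing without FEC credit", 1e-12),
    ]
    for label, ber in targets:
        q = q_factor(ber)
        print(f"{label:30s} BER={ber:.1e}  Q={q:.2f}  ({20 * math.log10(q):.1f} dB)")
```

In this model, a BER of 10⁻⁶ requires a Q of about 4.75, which is higher than what the theoretical pre-FEC threshold needs but well below the Q of roughly 7 needed for 10⁻¹². A receiver that passes at 10⁻⁶ therefore has margin against the FEC threshold, without being held to the far stricter uncoded requirement.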

Conclusion

FEC is a powerful mechanism that significantly improves the error tolerance of optical communication systems. By understanding and implementing appropriate BER testing methodologies, network operators can ensure their components are up to the task, ultimately leading to more reliable and efficient networks.

As the demands for data grow, the reliance on sophisticated FEC techniques will only increase, cementing BER as a fundamental metric in the design and evaluation of optical communication systems.