In the world of fiber-optic communication, the integrity of the transmitted signal is critical. As optical engineers, our primary objective is to mitigate the attenuation of signals across long distances, ensuring that data arrives at its destination with minimal loss and distortion. In this article we will delve into the challenges of linear and nonlinear degradations in fiber-optic systems, with a focus on transoceanic-length systems, and offer strategies for optimising system performance.

The Role of Optical Amplifiers

Erbium-doped fiber amplifiers (EDFAs) are the cornerstone of long-distance fiber-optic transmission, providing gain within the low-loss window around 1550 nm. Typically spaced 50 to 100 km apart, these amplifiers compensate for the fiber’s inherent attenuation. However, each EDFA also adds amplified spontaneous emission (ASE) noise, so the optical signal-to-noise ratio (OSNR) degrades progressively along the transmission line. This degradation necessitates a careful balance between signal amplification and noise management to maintain transmission quality.
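To make the spacing trade-off concrete, the sketch below computes the amplifier count and per-span loss for a hypothetical 6000 km link. It assumes one EDFA per span and a uniform attenuation of 0.2 dB/km, a typical illustrative value for SMF near 1550 nm; the function name `span_budget` is ours, chosen only for illustration.

```python
import math

def span_budget(link_length_km: float, span_length_km: float,
                attenuation_db_per_km: float = 0.2) -> tuple[int, float]:
    """Return (number of EDFAs, loss per span in dB) for equal-length spans.

    Assumes one EDFA at the end of every span and a uniform attenuation
    coefficient (0.2 dB/km is an illustrative value, not a measured one).
    """
    n_amplifiers = math.ceil(link_length_km / span_length_km)
    span_loss_db = attenuation_db_per_km * span_length_km
    return n_amplifiers, span_loss_db

# Hypothetical 6000 km transoceanic link evaluated at three span lengths.
for span_km in (50, 75, 100):
    n, loss = span_budget(6000, span_km)
    print(f"{span_km:>3} km spans: {n:>3} EDFAs, {loss:.1f} dB span loss")
```

Shorter spans mean less loss per span but more amplifiers, each of which adds noise; the OSNR relationship in the next section captures how these two effects trade off.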

OSNR: The Critical Metric

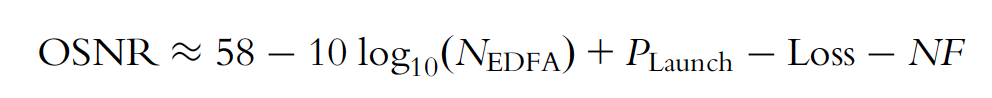

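The received OSNR, a key metric for assessing channel performance, depends on several factors, including the channel’s fiber launch power, the span loss, and the noise figure (NF) of the EDFAs. For a chain of identical spans, each followed by an EDFA that exactly compensates the span loss, the relationship (in dB, referred to a 0.1 nm noise bandwidth near 1550 nm, which sets the 58 dB constant) is:

$$\mathrm{OSNR} = 58 + P_{\mathrm{in}} - \mathrm{Loss} - \mathrm{NF} - 10\log_{10} N$$

Where:

- $N$ is the number of EDFAs the signal has passed through.

- $P_{\mathrm{in}}$ is the power of the signal when it’s first launched into the fiber, in dBm.

- Loss is the loss of a single span between amplifiers, in dB.

- NF is the noise figure of the EDFA, also in dB.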
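The sketch below checks this relationship numerically. It derives the 58 dB constant of the standard rule of thumb from physical constants (the photon energy at 1550 nm multiplied by the roughly 12.5 GHz width of a 0.1 nm reference bandwidth, expressed in dBm) and then evaluates the OSNR for illustrative, hypothetical link parameters. It assumes identical spans, each loss-compensated by one EDFA.

```python
import math

PLANCK_J_S = 6.62607015e-34          # Planck constant, J*s (exact SI value)
SPEED_OF_LIGHT_M_S = 299_792_458.0   # speed of light in vacuum, m/s

def osnr_constant_db(wavelength_m: float = 1550e-9,
                     ref_bandwidth_m: float = 0.1e-9) -> float:
    """Return -10*log10(h*nu*delta_nu) with the power in mW (about 58 dB at 1550 nm)."""
    nu_hz = SPEED_OF_LIGHT_M_S / wavelength_m                              # optical carrier frequency
    delta_nu_hz = SPEED_OF_LIGHT_M_S * ref_bandwidth_m / wavelength_m**2   # 0.1 nm -> ~12.5 GHz
    noise_floor_mw = PLANCK_J_S * nu_hz * delta_nu_hz * 1e3                # W -> mW
    return -10 * math.log10(noise_floor_mw)

def received_osnr_db(p_in_dbm: float, span_loss_db: float,
                     nf_db: float, n_amplifiers: int) -> float:
    """OSNR after n identical spans, each loss-compensated by one EDFA."""
    return (osnr_constant_db() + p_in_dbm - span_loss_db - nf_db
            - 10 * math.log10(n_amplifiers))

# Hypothetical example link: 80 spans of 15 dB loss, NF = 5 dB, 1 dBm launch power.
print(f"constant: {osnr_constant_db():.2f} dB")
print(f"OSNR:     {received_osnr_db(1.0, 15.0, 5.0, 80):.1f} dB")
```

Two rules of thumb fall out of the formula: doubling the number of amplifiers costs about 3 dB of OSNR, while every extra dB of launch power buys back 1 dB.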

Increasing the launch power improves the OSNR dB for dB; however, this is constrained by the onset of fiber nonlinearity, particularly the Kerr effect, which limits the maximum useful launch power.

The Kerr Effect and Its Implications

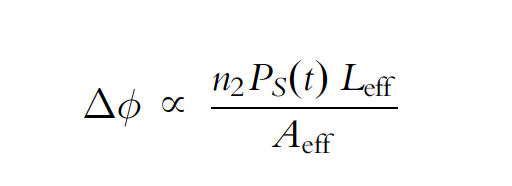

The Kerr effect stems from the intensity dependence of the optical fiber’s refractive index: the signal power modulates the refractive index, which in turn changes the optical phase. Although the Kerr coefficient ($n_2$) is exceedingly small, the combination of long transmission distances, high total power from the EDFAs, and the small effective area of standard single-mode fiber (SMF) makes this nonlinearity a dominant source of signal degradation over transoceanic distances.

The phase change induced by this effect depends on a few key factors:

- The fiber’s nonlinear (Kerr) coefficient $n_2$.

- The signal power $P(t)$, which varies over time.

- The transmission distance $L$.

- The fiber’s effective area $A_{\mathrm{eff}}$.
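For a single channel, where the effect is known as self-phase modulation (SPM), these factors combine in the standard textbook expression below. It holds under simplifying assumptions (a single span, dispersion neglected), with $\lambda$ the wavelength, $\alpha$ the attenuation coefficient in linear units (1/km), $L$ the span length, and $L_{\mathrm{eff}}$ the effective length that replaces $L$ because the power decays along the span:

$$
\phi_{\mathrm{NL}}(t) = \gamma\, P(t)\, L_{\mathrm{eff}}, \qquad
\gamma = \frac{2\pi n_2}{\lambda A_{\mathrm{eff}}}, \qquad
L_{\mathrm{eff}} = \frac{1 - e^{-\alpha L}}{\alpha}
$$

Because each EDFA restores the power, the phase builds up span after span; for $N$ identical spans and neglecting dispersion, the peak phase is roughly $N$ times the single-span value.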

This phase modulation complicates the accurate recovery of the transmitted optical field, thus limiting the achievable performance of undersea fiber-optic transmission systems.
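To see how an exceedingly small $n_2$ still matters over transoceanic distances, the sketch below evaluates the peak SPM phase $\gamma P L_{\mathrm{eff}}$ summed over identical spans. The fiber values ($n_2 = 2.6\times10^{-20}$ m²/W, $A_{\mathrm{eff}} = 80$ µm², 0.2 dB/km, 80 km spans) are typical illustrative numbers for standard SMF, not parameters of any specific system, and dispersion is ignored, so the result is an order-of-magnitude estimate only.

```python
import math

def effective_length_km(alpha_db_per_km: float, span_km: float) -> float:
    """L_eff = (1 - exp(-alpha*L)) / alpha, with alpha converted from dB/km to 1/km."""
    alpha_per_km = alpha_db_per_km / (10 * math.log10(math.e))
    return (1 - math.exp(-alpha_per_km * span_km)) / alpha_per_km

def gamma_per_w_km(n2_m2_per_w: float, a_eff_um2: float, wavelength_nm: float) -> float:
    """gamma = 2*pi*n2 / (lambda * A_eff), converted from 1/(W m) to 1/(W km)."""
    gamma_per_w_m = 2 * math.pi * n2_m2_per_w / (wavelength_nm * 1e-9 * a_eff_um2 * 1e-12)
    return gamma_per_w_m * 1e3

def spm_phase_rad(power_mw: float, n_spans: int, span_km: float = 80.0,
                  alpha_db_per_km: float = 0.2, n2: float = 2.6e-20,
                  a_eff_um2: float = 80.0, wavelength_nm: float = 1550.0) -> float:
    """Peak SPM phase summed over identical, loss-compensated spans (dispersion ignored)."""
    gamma = gamma_per_w_km(n2, a_eff_um2, wavelength_nm)
    l_eff = effective_length_km(alpha_db_per_km, span_km)
    return gamma * (power_mw * 1e-3) * l_eff * n_spans

for n_spans in (1, 10, 75):
    print(f"{n_spans:>2} spans ({n_spans * 80} km): "
          f"{spm_phase_rad(1.0, n_spans):.3f} rad at 1 mW")
```

A single span contributes only a few hundredths of a radian at 1 mW, but the phase grows linearly with both distance and power, so it reaches the order of a radian or more over a transoceanic link.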

The Kerr effect is a bit like trying to talk to someone at a party where the music volume keeps changing. Sometimes your message gets through loud and clear, and other times it’s garbled by the fluctuations. In fiber optics, managing these fluctuations is crucial for maintaining signal integrity over long distances.

Striking the Right Balance

Understanding and mitigating both linear and nonlinear degradations is critical for optimising the performance of undersea fiber-optic transmission systems. Engineers must navigate the delicate balance between maximising OSNR for better signal quality and minimising the impact of nonlinear distortions. The trick, then, is to find the sweet spot: a launch power high enough to deliver good OSNR, but not so high that nonlinear degradation outweighs the gain, since beyond that optimum any further increase in power reduces overall performance. Strategies such as carefully managing launch power, employing advanced modulation formats, and leveraging digital signal processing techniques are vital for overcoming these challenges.
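The sweet spot can be illustrated with a deliberately simplified model, in the spirit of the Gaussian-noise (GN) model, in which nonlinear interference power grows as the cube of the launch power. Treating that interference as extra noise gives an effective SNR of $P/(P_{\mathrm{ASE}} + \eta P^3)$; setting its derivative to zero yields an optimum where the nonlinear noise equals half the ASE power. The values of `P_ASE_MW` and the coefficient `ETA` below are hypothetical, chosen only so that the optimum lands at 0 dBm; they do not describe any particular system.

```python
import math

def effective_snr(p_mw: float, p_ase_mw: float, eta_per_mw2: float) -> float:
    """Linear SNR with ASE noise plus nonlinear interference modelled as eta * P^3."""
    return p_mw / (p_ase_mw + eta_per_mw2 * p_mw**3)

def optimum_launch_power_mw(p_ase_mw: float, eta_per_mw2: float) -> float:
    """dSNR/dP = 0  =>  eta * P^3 = P_ASE / 2."""
    return (p_ase_mw / (2 * eta_per_mw2)) ** (1 / 3)

def to_db(x: float) -> float:
    return 10 * math.log10(x)

# Hypothetical link parameters chosen only for illustration.
P_ASE_MW = 0.01   # accumulated ASE power in the reference bandwidth, mW
ETA = 0.005       # nonlinear-interference coefficient, 1/mW^2

for p_dbm in range(-6, 7, 2):
    p_mw = 10 ** (p_dbm / 10)
    ase_only = to_db(p_mw / P_ASE_MW)
    total = to_db(effective_snr(p_mw, P_ASE_MW, ETA))
    print(f"{p_dbm:+d} dBm: ASE-only {ase_only:5.1f} dB, with NLI {total:5.1f} dB")

p_opt = optimum_launch_power_mw(P_ASE_MW, ETA)
print(f"optimum ~ {to_db(p_opt):.1f} dBm, "
      f"peak SNR ~ {to_db(effective_snr(p_opt, P_ASE_MW, ETA)):.1f} dB")
```

Below the optimum, the effective SNR tracks the ASE-only curve dB for dB; above it, the cubic term takes over and the SNR falls, which is why more launch power is not always better.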