>> The Optical Time Domain Reflectometer (OTDR)

An OTDR is connected to one end of any fiber optic system up to 250km in length. Within a few seconds, we are able to measure the overall loss, or the loss of any part of a system, the overall length of the fiber and the distance between any points of interest. The OTDR is an amazing test instrument for fiber optic systems.

1. A Use for Rayleigh Scatter

As light travels along the fiber, a small proportion of it is lost by Rayleigh scattering. As the light is scattered in all directions, some of it just happens to return along the fiber towards the light source. This returned light is called backscatter as shown below.

The backscatter power is a fixed proportion of the incoming power and as the losses take their toll on the incoming power, the returned power also diminishes as shown in the following figure.

The OTDR can continuously measure the returned power level and hence deduce the losses encountered on the fiber. Any additional losses such as connectors and fusion splices have the effect of suddenly reducing the transmitted power on the fiber and hence causing a corresponding change in backscatter power. The position and degree of the losses can be ascertained.

2. Measuring Distances

The OTDR uses a system rather like a radar set. It sends out a pulse of light and ‘listens’ for echoes from the fiber.

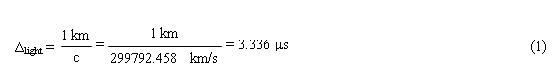

If it knows the speed of light and can measure the time taken for the light to travel along the fiber, it is an easy job to calculate the length of the fiber.

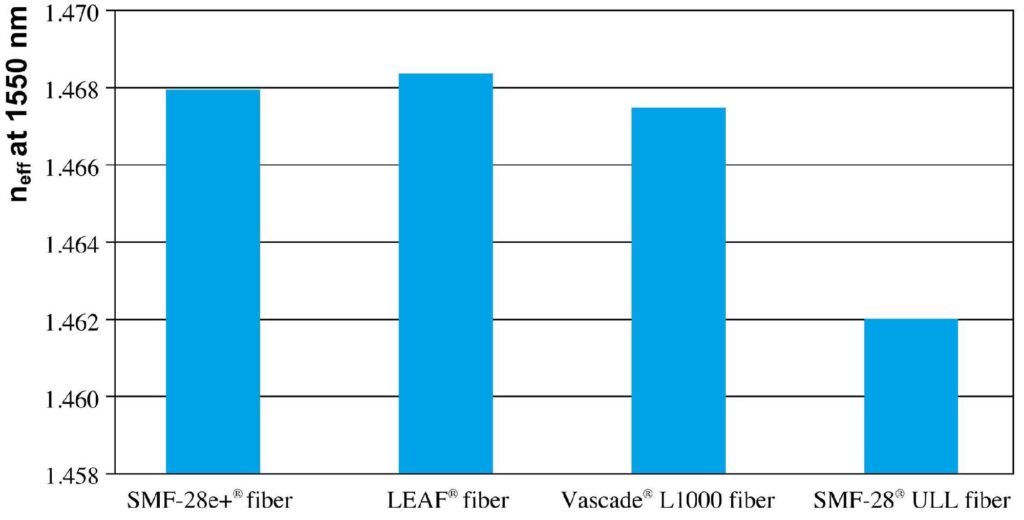

3. To Find the Speed of the Light

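Assuming the refractive index of the core is 1.5, the infrared light travels at a speed of

$$v = \frac{c}{n} = \frac{3 \times 10^{8}}{1.5} = 2 \times 10^{8}\ \text{m/s}$$

This means that it will take

$$t = \frac{1}{2 \times 10^{8}} = 5 \times 10^{-9}\ \text{s} = 5\ \text{ns}$$

to travel one meter.

This is a useful figure to remember, 5 nanoseconds per meter (5 ns/m).

If the OTDR measures a time delay of 1.4 µs, then the distance travelled by the light is

$$d = \frac{1.4 \times 10^{-6}}{5 \times 10^{-9}} = 280\ \text{m}$$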

The 280 meters is the total distance traveled by the light and is the ‘there and back’ distance. The length of the fiber is therefore only 140m. This adjustment is performed automatically by the OTDR – it just displays the final result of 140m.
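The timing arithmetic above can be sketched in a few lines of Python. This is only an illustration, assuming a core refractive index of 1.5 and the rounded vacuum speed of light of 3 × 10⁸ m/s; the function names are hypothetical and do not come from any OTDR software.

```python
C_VACUUM_M_PER_S = 3.0e8  # rounded speed of light in a vacuum (assumption)


def speed_in_fiber(refractive_index: float = 1.5) -> float:
    """Speed of light in the fiber core, in meters per second."""
    return C_VACUUM_M_PER_S / refractive_index


def fiber_length_m(round_trip_delay_s: float, refractive_index: float = 1.5) -> float:
    """Fiber length deduced from the measured 'there and back' delay."""
    total_distance_m = speed_in_fiber(refractive_index) * round_trip_delay_s
    return total_distance_m / 2.0  # halve it: the light travels out and back


delay_s = 1.4e-6  # the 1.4 microsecond delay used in the text
print(f"time per meter: {1e9 / speed_in_fiber():.1f} ns")
print(f"fiber length:   {fiber_length_m(delay_s):.1f} m")
```

With the 1.4 µs delay this reproduces the 5 ns per meter figure and the 140m fiber length. A different refractive index can be passed in to see how strongly the result depends on it.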

4. Inside the OTDR

A. Timer

The timer produces a voltage pulse which is used to start the timing process in the display at the same moment as the laser is activated.

B. Pulsed Laser

The laser is switched on for a brief moment, with the ‘on’ time being between 1ns and 10 µs. We will look at the significance of the choice of ‘on’ time or pulsewidth a little bit later. The wavelength of the laser can be switched to suit the system to be investigated.

C. Directive Coupler

The directive coupler allows the laser light to pass straight through into the fiber under test. The backscatter from the whole length of the fiber approaches the directive coupler from the opposite direction. In this case the mirror surface reflects the light into the avalanche photodiode (APD). The light has now been converted into an electrical signal.

D. Amplifying and Averaging

The electrical signal from the APD is very weak and requires amplification before it can be displayed. The averaging feature is quite interesting and we will look at it separately towards the end of this tutorial.

E. Display

The amplified signals are passed on to the display. The display is either a CRT like an oscilloscope, or an LCD as in laptop computers. It shows the returned signals on a simple XY plot with the range across the bottom and the power level in dB up the side.

The following figure shows a typical display. The current parameter settings are shown over the grid. They can be changed to suit the measurements being undertaken. The range scale displayed shows a 50km length of fiber. In this case it is from 0 to 50km but it could be any other 50km slice, for example, from 20km to 70km. It can also be expanded to give a detailed view of a shorter length of fiber such as 0-5m, or 25-30m.

The range can be read from the horizontal scale but for more precision, a variable range marker is used. This is a movable line which can be switched on and positioned anywhere on the trace. Its range is shown on the screen together with the power level of the received signal at that point. To find the length of the fiber, the marker is simply positioned at the end of the fiber and the distance is read off the screen. It is usual to provide up to five markers so that several points can be measured simultaneously.

F. Data Handling

An internal memory or floppy disk can store the data for later analysis. The output is also available via RS232 link for downloading to a computer. In addition, many OTDRs have an onboard printer to provide hard copies of the information on the screen. This provides useful ‘before and after’ images for fault repair as well as a record of the initial installation.

5. A Simple Measurement

If we were to connect a length of fiber, say 300m, to the OTDR the result would look as shown in the following figure.

Whenever the light passes through a cleaved end of a piece of fiber, a Fresnel reflection occurs. This is seen at the far end of the fiber and also at the launch connector. Indeed, it is quite usual to obtain a Fresnel reflection from the end of the fiber without actually cleaving it. Just breaking the fiber is usually enough.

The Fresnel at the launch connector occurs at the front panel of the OTDR and, since the laser power is high at this point, the reflection is also high. The result of this is a relatively high pulse of energy passing through the receiver amplifier. The amplifier output voltage swings above and below the real level, in an effect called ringing. This is a normal amplifier response to a sudden change of input level. The receiver takes a few nanoseconds to recover from this sudden change of signal level.

6. Dead Zones

The Fresnel reflection and the subsequent amplifier recovery time result in a short period during which the amplifier cannot respond to any further input signals. This period of time is called a dead zone. It occurs to some extent whenever a sudden change of signal amplitude occurs. The one at the start of the fiber where the signal is being launched is called the launch dead zone and others are called event dead zones or just dead zones.

7. Overcoming the Launch Dead Zone

As the launch dead zone occupies a distance of up to 20 meters or so, this means that, given the job of checking a 300m fiber, we may only be able to check 280m of it. The customer would not be delighted.

To overcome this problem, we add our own patch cord at the beginning of the system. If we make this patch cord about 100m in length, we can guarantee that all launch dead zone problems have finished before the customer’s fiber is reached.

The patch cord is joined to the main system by a connector which will show up on the OTDR readout as a small Fresnel reflection and a power loss. The power loss is indicated by the sudden drop in the power level on the OTDR trace.

8. Length and Attenuation

The end of the fiber appears to be at 400m on the horizontal scale but we must deduct 100m to account for our patch cord. This gives an actual length of 300m for the fiber being tested.

Immediately after the patch cord Fresnel reflection the power level shown on the vertical scale is about –10.8dB and at the end of the 300m run, the power has fallen to about –11.3 dB. A reduction in power level of 0.5 dB in 300 meters indicates a fiber attenuation of:

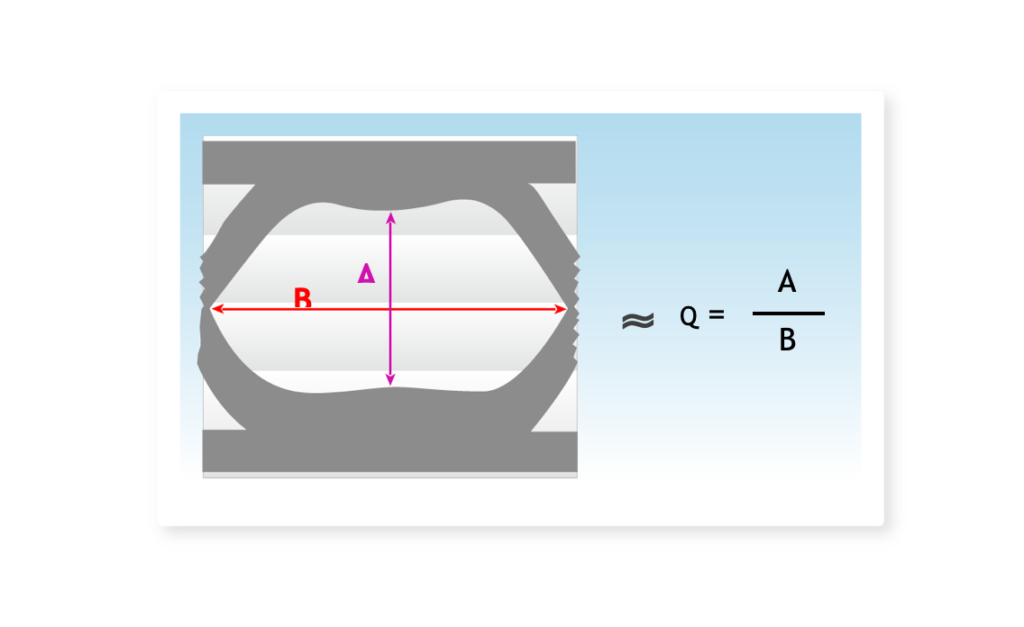

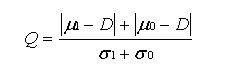

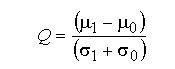

Most OTDRs provide a loss measuring system using two markers. The two markers are switched on and positioned on a length of fiber which does not include any other events, such as connectors, as shown in the following figure.

The OTDR then reads the difference in power level at the two positions and the distance between them, performs the above calculation for us and displays the loss per kilometer for the fiber. This provides a more accurate result than trying to read off the decibel and range values from the scales on the display and having to do our own calculations.
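The two-marker calculation can be sketched as follows. This is a minimal illustration, not the routine any particular OTDR uses; the function name and the `(range_m, level_db)` marker representation are assumptions made for the example. The marker values are the readings quoted earlier: about –10.8dB just after the patch cord at 100m and about –11.3dB at the fiber end at 400m.

```python
def loss_per_km(marker_a, marker_b):
    """Fiber loss in dB/km between two markers.

    Each marker is a (range_m, level_db) pair read from the trace.
    """
    range_a_m, level_a_db = marker_a
    range_b_m, level_b_db = marker_b
    distance_m = range_b_m - range_a_m
    if distance_m <= 0:
        raise ValueError("marker B must be further along the fiber than marker A")
    loss_db = level_a_db - level_b_db  # drop in power between the markers
    return loss_db / (distance_m / 1000.0)


near_marker = (100.0, -10.8)  # just after the patch cord connector
far_marker = (400.0, -11.3)   # at the end of the fiber under test
print(f"{loss_per_km(near_marker, far_marker):.2f} dB/km")  # prints 1.67 dB/km
```

The 300m between the markers is the trace length with the 100m patch cord already deducted, so the result applies to the fiber under test only.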

9. An OTDR Display of a Typical System

The OTDR can ‘see’ Fresnel reflections and losses. With this information, we can deduce the appearance of various events on an OTDR trace as seen below.

A. Connectors

A pair of connectors will give rise to a power loss and also a Fresnel reflection due to the polished end of the fiber.

B. Fusion Splice

Fusion splices do not cause any Fresnel reflections as the cleaved ends of the fiber are now fused into a single piece of fiber. They do, however, show a loss of power. A good quality fusion splice will actually be difficult to spot owing to the low losses. Any sign of a Fresnel reflection is a sure sign of a very poor fusion splice.

C. Mechanical Splice

Mechanical splices appear similar to a poor quality fusion splice. The fibers do have cleaved ends of course but the Fresnel reflection is avoided by the use of index matching gel within the splice. The losses to be expected are similar to the least acceptable fusion splices.

D. Bend Loss

This is simply a loss of power in the area of the bend. If the loss is very localized, the result is indistinguishable from a fusion or mechanical splice.
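To see how these events shape a trace, the backscatter level can be modeled as a steady slope from the fiber attenuation with a step down at each event. The sketch below is a deliberately simplified model: it ignores Fresnel spikes, noise and dead zones, and the link layout and loss values are hypothetical figures chosen for illustration.

```python
def trace_level_db(distance_m, attenuation_db_per_km, events, launch_level_db=0.0):
    """Backscatter level (dB) shown on the trace at a given range.

    events is a list of (position_m, loss_db) pairs for connectors,
    splices or bends. Fresnel spikes, noise and dead zones are ignored.
    """
    level_db = launch_level_db - attenuation_db_per_km * distance_m / 1000.0
    for position_m, loss_db in events:
        if position_m <= distance_m:
            level_db -= loss_db  # every event already passed adds its step loss
    return level_db


# Hypothetical link: connector pair at 100 m (0.5 dB), fusion splice at 250 m (0.1 dB)
events = [(100.0, 0.5), (250.0, 0.1)]
for distance_m in (0, 99, 101, 249, 251, 400):
    level = trace_level_db(distance_m, 1.0, events)
    print(f"{distance_m:4d} m  {level:6.2f} dB")
```

In this model a connector, a splice and a localized bend all look the same: a sudden step. On a real trace only the presence or absence of a Fresnel reflection helps to tell them apart.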

10. Ghost Echoes (False Reflection)

In the following figure, some of the launched energy is reflected back from the connectors at the end of the patch cord at a range of 100m. This light returns and strikes the polished face of the launch fiber on the OTDR front panel. Some of this energy is again reflected to be re-launched along the fiber and will cause another indication from the end of the patch cord, giving a false, or ghost, Fresnel reflection at a range of 200m and a false ‘end’ to the fiber at 500m.

As there is a polished end at both ends of the patch cord, it is theoretically possible for the light to bounce to and fro along this length of fiber giving rise to a whole series of ghost reflections. In the figure a second reflection is shown at a range of 300m.

It is very rare for any further reflections to be seen. The maximum amplitude of the Fresnel reflection is 4% of the incoming signal, and is usually much less. Looking at the calculations, even assuming the worst reflection, the returned energy is 4% or 0.04 of the launched energy. The re-launched energy, as a result of another reflection, is 4% of the 4%, or 0.04² = 0.0016 of the input energy. This shows that we need a lot of input energy to cause a ghost reflection.

A second ghost would require another two reflections giving rise to a signal of only 0.00000256 of the launched energy. Subsequent reflections die out very quickly as we could imagine.
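The way ghost energy collapses with each extra pair of reflections can be checked numerically. This sketch follows the counting used above (two reflections for the first ghost, two more for each later ghost) and assumes the worst-case 4% Fresnel reflection every time; fiber attenuation is ignored and the function name is hypothetical.

```python
import math


def ghost_fraction(ghost_number: int, reflectance: float = 0.04) -> float:
    """Fraction of the launched energy left for the n-th ghost.

    Assumes two reflections per ghost, each at the given reflectance,
    and ignores attenuation along the fiber.
    """
    if ghost_number < 1:
        raise ValueError("ghost_number starts at 1")
    return reflectance ** (2 * ghost_number)


for n in (1, 2, 3):
    fraction = ghost_fraction(n)
    print(f"ghost {n}: {fraction:.3g} of launched energy "
          f"({10 * math.log10(fraction):.1f} dB)")
```

The first two results match the 0.0016 and 0.00000256 figures in the text, which correspond to roughly –28 dB and –56 dB relative to the launched energy.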

Ghost reflections can be recognized by their even spacing. If we have a reflection at 387m and another at 774m then we have either a strange coincidence or a ghost. Ghost reflections have a Fresnel reflection but do not show any loss. The loss signal is actually of too small an energy level to be seen on the display. If a reflection shows up after the end of the fiber, it has got to be a ghost.
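These two rules of thumb, even spacing and reflections beyond the fiber end, can be turned into a simple screening routine. The sketch below is a hypothetical helper, not a feature of any OTDR; the 1m tolerance is an arbitrary assumption, and anything it flags is only a candidate, since a real event can sit at a whole multiple of another by coincidence.

```python
def ghost_candidates(reflection_ranges_m, fiber_end_m=None, tolerance_m=1.0):
    """Return the reflections that look like ghosts.

    A reflection is flagged if it lies beyond the known fiber end, or if
    its range is a whole multiple (2x, 3x, ...) of a nearer reflection.
    """
    ranges = sorted(r for r in reflection_ranges_m if r > 0)
    flagged = []
    for index, candidate in enumerate(ranges):
        beyond_end = fiber_end_m is not None and candidate > fiber_end_m + tolerance_m
        evenly_spaced = any(
            round(candidate / nearer) >= 2
            and abs(candidate - round(candidate / nearer) * nearer) <= tolerance_m
            for nearer in ranges[:index]
        )
        if beyond_end or evenly_spaced:
            flagged.append(candidate)
    return flagged


# 387 m and 774 m are the example from the text; 950 m and the 900 m end are made up
print(ghost_candidates([387.0, 774.0, 950.0], fiber_end_m=900.0))  # [774.0, 950.0]
```

A flagged reflection should still be checked on the trace: a ghost shows a Fresnel spike with no accompanying loss.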

11. Effects of Changing the Pulsewidth

The maximum range that can be measured is determined by the energy contained within the pulse of laser light. The light has to be able to travel the full length of the fiber, be reflected, and return to the OTDR and still be of larger amplitude than the background noise. Now, the energy contained in the pulse is proportional to the length of the pulse so to obtain the greatest range the longest possible pulsewidth should be used as illustrated in the following figure.

This cannot be the whole story, as OTDRs offer a wide range of pulsewidths.

We have seen that light covers a distance of 1 meter every 5 nanoseconds so a pulsewidth of 100ns would extend for a distance of 20 meters along the fiber (see the following figure). When the light reaches an event, such as a connector, there is a reflection and a sudden fall in power level. The reflection occurs over the whole of the 20m of the outgoing pulse. Returning to the OTDR is therefore a 20m reflection. Each event on the fiber system will also cause a 20m pulse to be reflected back towards the OTDR.

Now imagine two such events separated by a distance of 10m or less as in the following figure. The two reflections will overlap and join up on the return path to the OTDR. The OTDR will simply receive a single burst of light and will be quite unable to detect that two different events have occurred. The losses will add, so two fusion splices for example, each with a loss of 0.2dB will be shown as a single splice with a loss of 0.4dB.

The minimum distance separating two events that can be displayed separately is called the range discrimination of the OTDR.

The shortest pulsewidth on an OTDR may well be in the order of 10ns so at a rate of 5 ns/m this will provide a pulse length in the fiber of 2m. The range discrimination is half this figure so that two events separated by a distance greater than 1m can be displayed as separate events. At the other end of the scale, a maximum pulsewidth of 10 µs would result in a range discrimination of 1 km.
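The relationship between pulsewidth, pulse length in the fiber and range discrimination is simple enough to tabulate. This sketch assumes the 5 ns per meter figure derived earlier (refractive index 1.5); the function names are illustrative only.

```python
NS_PER_METER = 5.0  # travel time in the fiber, from the text (refractive index 1.5)


def pulse_length_m(pulsewidth_ns: float) -> float:
    """Length of fiber occupied by the pulse."""
    return pulsewidth_ns / NS_PER_METER


def range_discrimination_m(pulsewidth_ns: float) -> float:
    """Minimum separation at which two events can be displayed separately."""
    return pulse_length_m(pulsewidth_ns) / 2.0


for pulsewidth_ns in (10, 100, 1_000, 10_000):
    print(f"{pulsewidth_ns:>6} ns pulse: {pulse_length_m(pulsewidth_ns):>6.0f} m long, "
          f"range discrimination {range_discrimination_m(pulsewidth_ns):>6.0f} m")
```

The 10ns and 10,000ns rows reproduce the 1m and 1 km figures quoted above, and the 100ns row gives the 20m pulse and 10m separation used in the two-event example.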

Another effect of changing the pulsewidth is on dead zones. Increasing the energy in the pulse will cause a larger Fresnel reflection. This, in turn, means that the amplifier will take longer to recover and hence the event dead zones will become larger as shown in the next figure.

12. Which Pulsewidth to Choose?

Most OTDRs give a choice of at least five different pulse lengths from which to select.

Low pulse widths mean good separation of events but the pulse has a low energy content so the maximum range is very poor. A pulse width of 10ns may well provide a maximum range of only a kilometer with a range discrimination of 1 meter.

The wider the pulse, the longer the range but the worse the range discrimination. A 1 µs pulse width will have a range of 40 km but cannot separate events closer together than 100 m.

As a general guide, use the shortest pulse that will provide the required range.
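This rule can be expressed as a small lookup. The sketch below is hypothetical: the table holds only the two pulsewidth and range pairs quoted above (10ns for about 1 km, 1 µs for about 40 km), whereas a real instrument would supply its own, longer table.

```python
# (pulsewidth in ns, approximate maximum range in km). These two rows are the
# figures quoted in the text, not the specification of a real instrument.
EXAMPLE_PULSE_TABLE = [(10, 1.0), (1_000, 40.0)]


def shortest_usable_pulse_ns(required_range_km, pulse_table=EXAMPLE_PULSE_TABLE):
    """Pick the shortest pulsewidth whose maximum range covers the fiber."""
    for pulsewidth_ns, max_range_km in sorted(pulse_table):
        if max_range_km >= required_range_km:
            return pulsewidth_ns
    raise ValueError("no pulsewidth in the table reaches the required range")


print(shortest_usable_pulse_ns(0.3))   # 10
print(shortest_usable_pulse_ns(12.0))  # 1000
```

Sorting by pulsewidth and returning the first match is what keeps the range discrimination as fine as the required range allows.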

13. Averaging

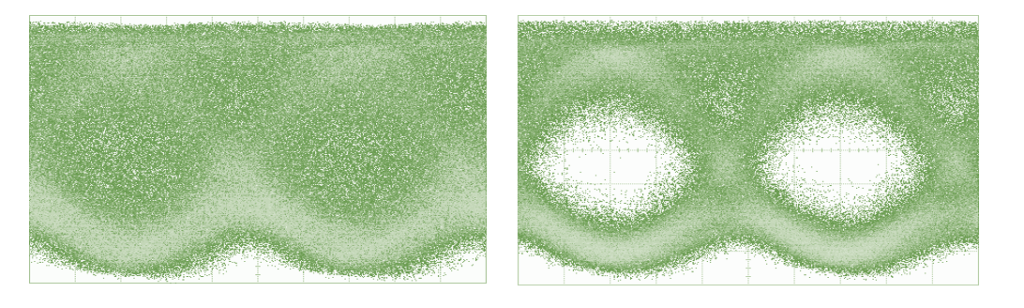

The instantaneous value of the backscatter returning from the fiber is very weak and contains a high noise level which tends to mask the return signal.

As the noise is random, its amplitude should average out to zero over a period of time. This is the idea behind the averaging circuit.

The incoming signals are stored and averaged before being displayed. The larger the number of signals averaged, the cleaner will be the final result but the slower will be the response to any changes that occur during the test. The mathematical process used to perform the effect is called least squares averaging or LSA.
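The benefit of averaging can be demonstrated with a small simulation. Note the assumptions: this sketch uses a plain point-by-point arithmetic mean rather than the averaging method named above, the noise is modeled as zero-mean Gaussian added directly to the level, and the trace itself is made up.

```python
import random
import statistics


def noisy_sweep(true_levels, noise_std, rng):
    """One simulated sweep: the true trace plus random noise at each point."""
    return [level + rng.gauss(0.0, noise_std) for level in true_levels]


def average_sweeps(sweeps):
    """Point-by-point arithmetic mean of equal-length sweeps."""
    return [statistics.fmean(samples) for samples in zip(*sweeps)]


def rms_error(measured, true_levels):
    """Root-mean-square difference between a measured and the true trace."""
    squared = [(m - t) ** 2 for m, t in zip(measured, true_levels)]
    return (sum(squared) / len(squared)) ** 0.5


rng = random.Random(0)  # fixed seed so the run is repeatable
true_levels = [-10.0 - 0.01 * i for i in range(200)]  # made-up sloping trace
sweeps = [noisy_sweep(true_levels, 1.0, rng) for _ in range(256)]

single = rms_error(sweeps[0], true_levels)
averaged = rms_error(average_sweeps(sweeps), true_levels)
print(f"single sweep RMS error:      {single:.3f} dB")
print(f"256-sweep average RMS error: {averaged:.3f} dB")
```

For independent random noise the error of the mean falls roughly with the square root of the number of sweeps, so 256 sweeps should cut the noise by a factor of about 16. The price, as noted above, is a slower response to changes on the fiber.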

The following figure shows the enormous benefit of employing averaging to reduce the noise effect.

Occasionally it is useful to switch the averaging off to see a real-time signal from the fiber while making adjustments to connectors etc. This is an easy way to optimize connectors, mechanical splices, bends etc. Simply adjust the item and watch the OTDR screen.

14. OTDR Dynamic Range

When a close range reflection, such as the launch Fresnel occurs, the reflected energy must not be too high otherwise it could damage the OTDR receiving circuit. The power levels decrease as the light travels along the fiber and eventually the reflections are similar in level to that of the noise and can no longer be used.

The difference between the highest safe value of the input power and the minimum detectable power is called the dynamic range of the OTDR and, along with the pulse width and the fiber losses, determines the useful range of the equipment.

If an OTDR was quoted as having a dynamic range of 36 dB, it could measure an 18km run of fiber with a loss of 2 dB/km, or alternatively a 72 km length of fiber having a loss of 0.5 dB/km, or any other combination that multiplies out to 36 dB.
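The trade-off between dynamic range and fiber loss is a single division, sketched below. The function name is hypothetical, and the model counts fiber attenuation only; any connector or splice losses on a real link would use up part of the same budget.

```python
def max_fiber_length_km(dynamic_range_db: float, fiber_loss_db_per_km: float) -> float:
    """Longest run whose total fiber loss fits within the dynamic range."""
    if fiber_loss_db_per_km <= 0:
        raise ValueError("fiber loss must be positive")
    return dynamic_range_db / fiber_loss_db_per_km


print(max_fiber_length_km(36.0, 2.0))  # 18.0
print(max_fiber_length_km(36.0, 0.5))  # 72.0
```

Both results match the 18km and 72 km examples given for a 36 dB instrument.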