Standards


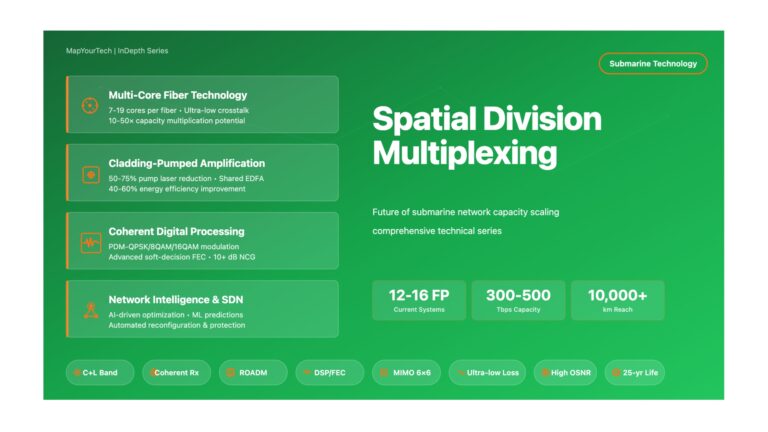

Spatial Division Multiplexing: Future of Submarine Network Capacity

Exploring the Next Generation...

Free | November 30, 2025

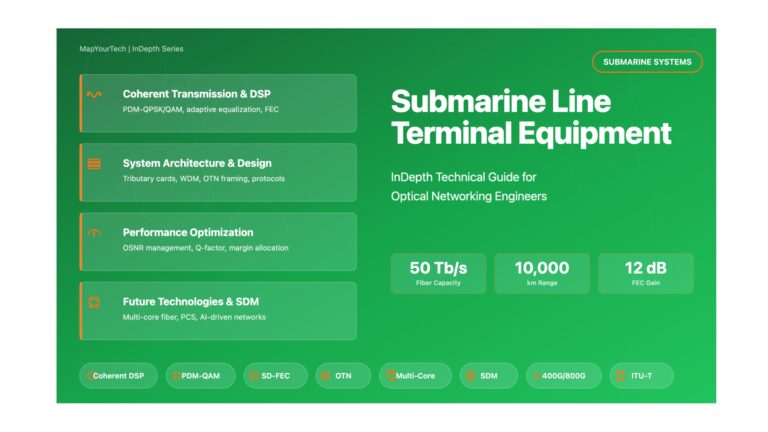

Submarine Line Terminal Equipment (SLTE)

Submarine Line Terminal Equipment (SLTE): In-Depth Foundation, Evolution & Core Concepts Submarine Optical Networks Introduction Submarine...

Free | November 30, 2025

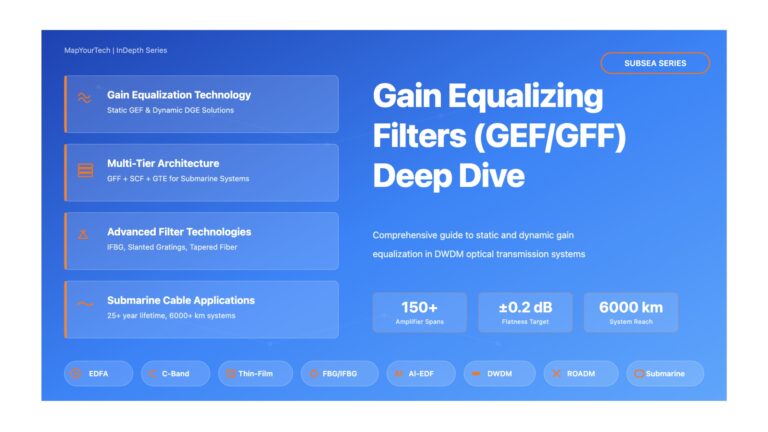

Gain Equalizing Filters (GEF) – Comprehensive Visual Guide | MapYourTech

Gain Equalizing Filters (GEF) Deep Dive Practical Information Based on...

Free | November 30, 2025

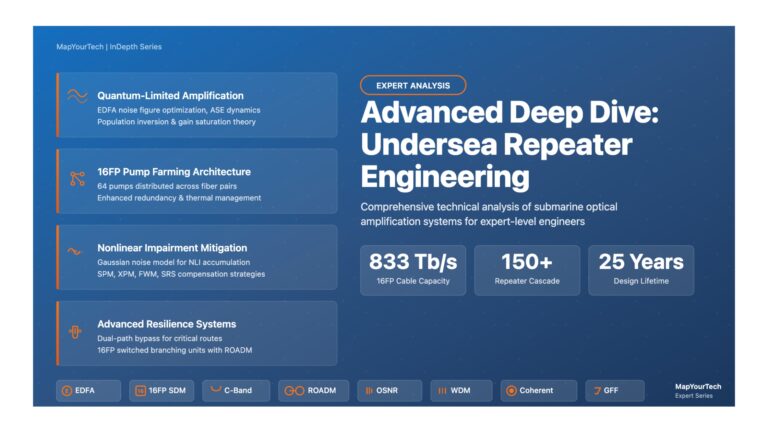

Advanced Deep Dive: Undersea Repeater Engineering

Comprehensive Technical Analysis of Submarine Optical Amplification Systems...

Free | November 29, 2025

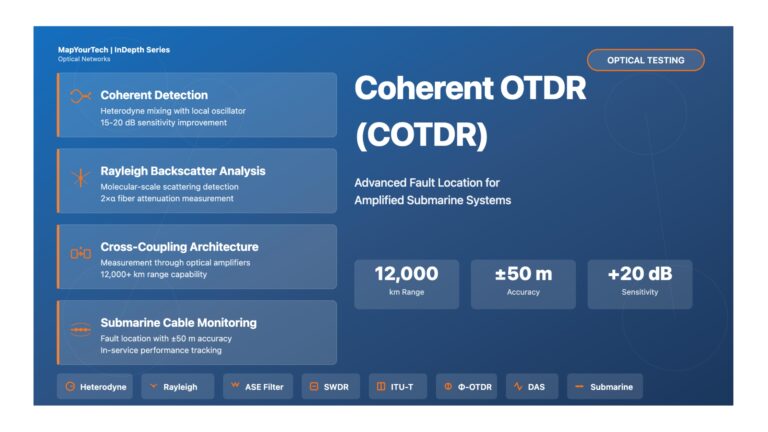

Coherent Optical Time Domain Reflectometry (COTDR) – Comprehensive Visual Guide

Coherent Optical Time Domain Reflectometry (COTDR) Technical Guide for Optical...

Free | November 29, 2025

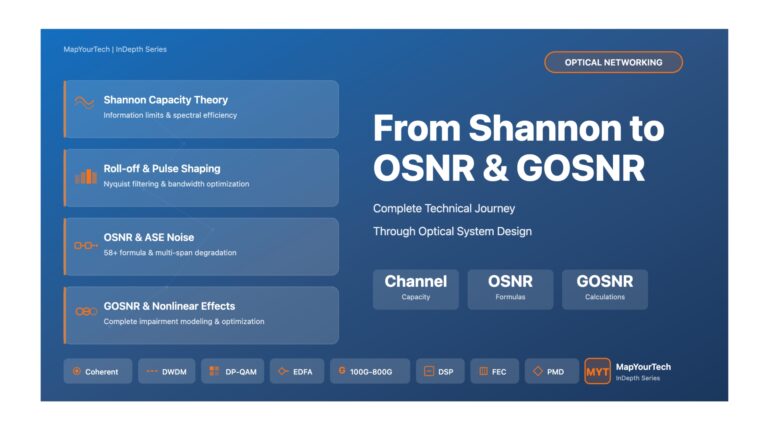

Complete Guide: Shannon Capacity to OSNR and GOSNR – Part 1

From Shannon Capacity to OSNR and GOSNR: A Complete...

Free | November 20, 2025

Pluggable vs Embedded Optics: Which is Right for Your Network?

Pluggable vs Embedded Optics: Which is Right for...

Free | November 20, 2025

Multi-Vendor ROADM Interoperability – Part 1: Introduction & Architecture

Multi-Vendor ROADM Interoperability in Optical Transport Networks Introduction The optical networking...

Free | November 16, 2025

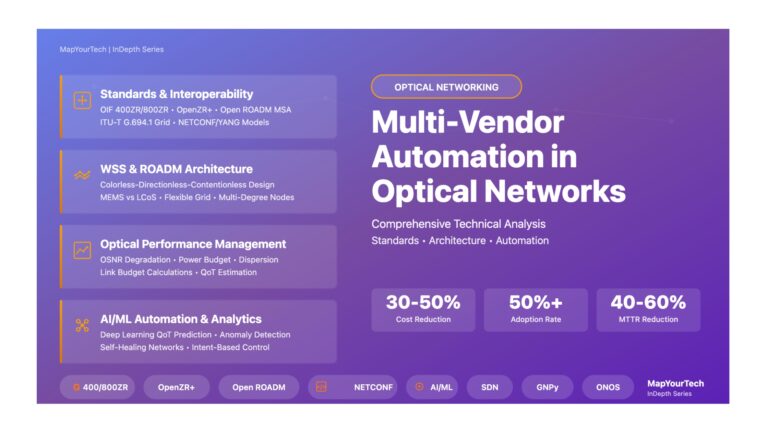

Multi-Vendor Integration in Optical Networks: A Comprehensive Technical Analysis

Multi-Vendor Automation in Optical Networks: A Comprehensive Technical Analysis Introduction The...

Free | November 15, 2025

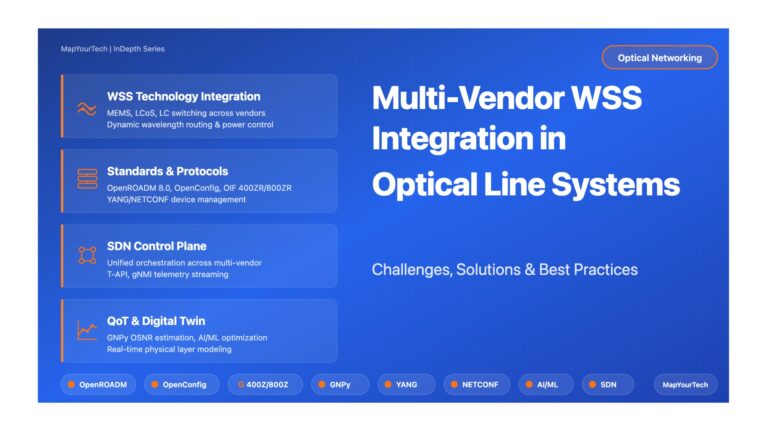

Multi-Vendor WSS Integration in Optical Line Systems: Comprehensive Technical Guide

Multi-Vendor WSS Integration in Optical Line Systems Comprehensive Technical Guide:...

Free | November 15, 2025
