The advent of 5G technology is set to revolutionize the way we connect, and at its core lies a sophisticated transport network architecture. This architecture is designed to support the varied requirements of 5G’s advanced services and applications.

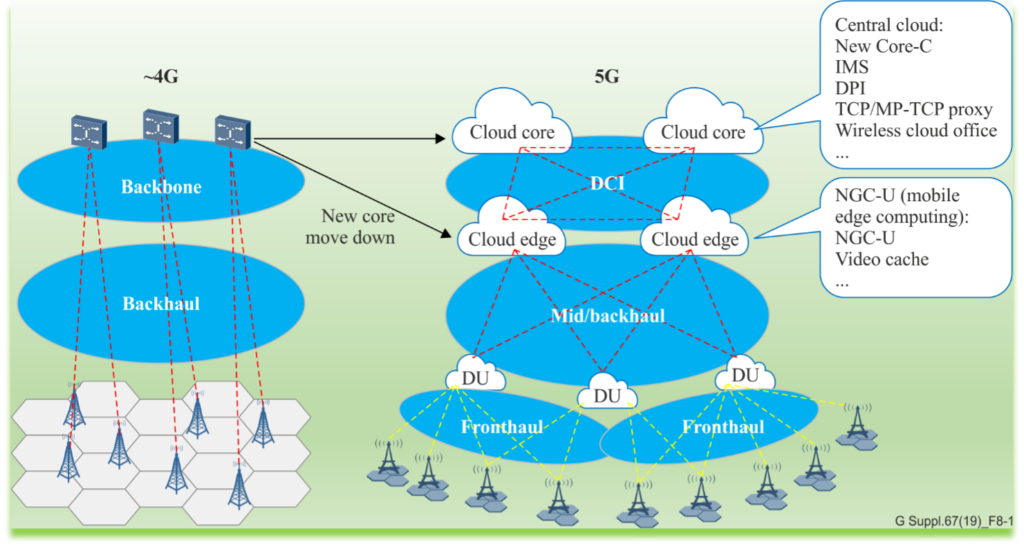

As we migrate from legacy 4G to the more versatile 5G, the transport network must evolve to accommodate new deployment strategies. These are shaped by the functional split options specified by 3GPP and by the shift of the Next Generation Core (NGC) network towards cloud-edge deployment.

The Four Pillars of the 5G Transport Network

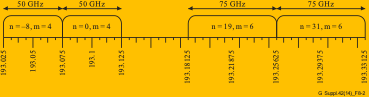

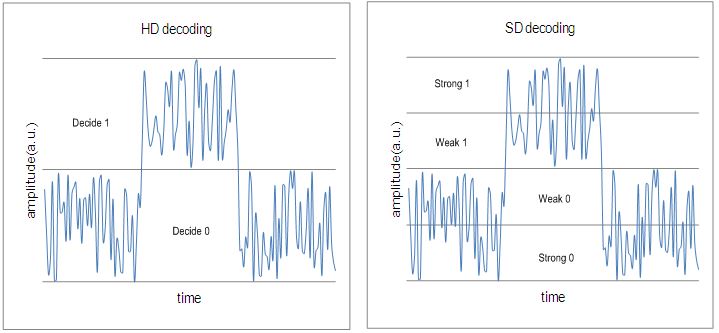

1. Fronthaul: This segment of the network carries the connection between the high PHY and low PHY layers. It requires high bandwidth: about 25 Gbit/s for a single UNI interface, rising to 75 or 150 Gbit/s for an NNI interface in pure 5G networks. In hybrid 4G and 5G networks, the bandwidth requirement increases further. The fronthaul’s stringent latency requirement (<100 microseconds) necessitates point-to-point (P2P) deployment to ensure rapid and efficient data transfer.

2. Midhaul: Positioned at the split between the Packet Data Convergence Protocol (PDCP) and Radio Link Control (RLC) layers, the midhaul section plays a pivotal role in data aggregation. Its bandwidth demands are slightly lower than those of the fronthaul, with UNI interfaces handling 10 or 25 Gbit/s and NNI interfaces scaling according to the DU’s aggregation capabilities. The midhaul network typically adopts a tree or ring topology to efficiently connect multiple Distributed Units (DUs) to a Centralized Unit (CU).

3. Backhaul: Sitting above the Radio Resource Control (RRC) layer, the backhaul has bandwidth needs similar to those of the midhaul. It handles both horizontal traffic, which coordinates services between base stations, and vertical traffic, which funnels services such as Vehicle-to-Everything (V2X), enhanced Mobile Broadband (eMBB), and Internet of Things (IoT) from base stations to the 5G core.

4. NGC Interconnection: This segment interconnects NGC nodes once they are deployed at the cloud edge, and demands bandwidth of 100 Gbit/s or more. The architecture favors single-hop connections to minimize the bandwidth wastage that multi-hop connections often cause.
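
The fronthaul figures above (25 Gbit/s per UNI, 75 or 150 Gbit/s per NNI) can be read as simple aggregation arithmetic. The Python sketch below is only an illustration under two assumptions that the text does not state: that an NNI carries a whole number of full-rate UNI links, and that there is no oversubscription. The function names are hypothetical.

```python
UNI_GBPS = 25.0  # single fronthaul UNI rate quoted in the text (Gbit/s)


def nni_capacity_gbps(uni_links: int, uni_gbps: float = UNI_GBPS) -> float:
    """Capacity an NNI needs to carry `uni_links` UNI links at full rate.

    Assumption: no statistical multiplexing (oversubscription ratio of 1).
    """
    if uni_links < 0:
        raise ValueError("uni_links must be non-negative")
    return uni_links * uni_gbps


def uni_links_supported(nni_gbps: float, uni_gbps: float = UNI_GBPS) -> int:
    """Number of full-rate UNI links that fit into an NNI of the given capacity."""
    if uni_gbps <= 0:
        raise ValueError("uni_gbps must be positive")
    return int(nni_gbps // uni_gbps)


if __name__ == "__main__":
    # Under the assumptions above, 3 and 6 aggregated links give the
    # 75 and 150 Gbit/s NNI figures mentioned for pure 5G networks.
    for links in (3, 6):
        print(f"{links} x {UNI_GBPS:g} Gbit/s UNI -> {nni_capacity_gbps(links):g} Gbit/s NNI")
```

Under these assumptions, the 75 and 150 Gbit/s NNI figures correspond to three and six aggregated 25 Gbit/s links. A real deployment may dimension the NNI differently, for example when 4G traffic shares the same links.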

The Impact of Deployment Locations

The transport network’s four deployment locations (fronthaul, midhaul, backhaul, and NGC interconnection) each serve a distinct function tailored to the demands of 5G services. Fronthaul ensures ultra-low latency, backhaul manages service diversity, and NGC interconnection provides high-capacity connectivity. Together they form the backbone that supports the high-speed, high-reliability promise of 5G.
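
To see why the fronthaul latency requirement quoted earlier (<100 microseconds) constrains deployment so tightly, it helps to convert the budget into fiber distance. The sketch below is a rough estimate, not a planning tool. It assumes a propagation delay of about 5 microseconds per kilometer in optical fiber, treats the 100 microseconds as a one-way budget, and uses a hypothetical `equipment_us` parameter for any delay added by transport equipment.

```python
FIBER_DELAY_US_PER_KM = 5.0  # assumption: approximate propagation delay in optical fiber


def max_fiber_km(budget_us: float, equipment_us: float = 0.0) -> float:
    """Longest fiber run that fits in a one-way latency budget.

    budget_us    -- total one-way latency budget in microseconds
    equipment_us -- hypothetical delay consumed by equipment on the path
    """
    remaining_us = budget_us - equipment_us
    if remaining_us < 0:
        raise ValueError("equipment delay exceeds the latency budget")
    return remaining_us / FIBER_DELAY_US_PER_KM


if __name__ == "__main__":
    print(max_fiber_km(100.0))        # whole budget spent on propagation
    print(max_fiber_km(100.0, 25.0))  # 25 us reserved for equipment (illustrative value)
```

Even with the whole budget spent on propagation, the reach is only about 20 km, and every intermediate hop shortens it further, which is consistent with the preference for point-to-point fronthaul links.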

As we move forward into the 5G era, understanding and optimizing these transport network segments will be crucial for service providers to deliver on the potential of this transformative technology.