Posts tagged “DWDM”

DWDM


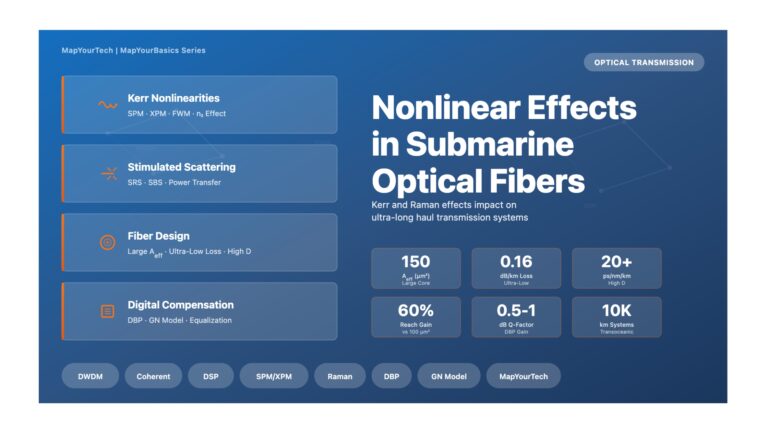

Nonlinear Effects in Submarine Optical Fibers

Optical Transmission. Understanding Kerr and Raman nonlinearities and...

Free - December 7, 2025

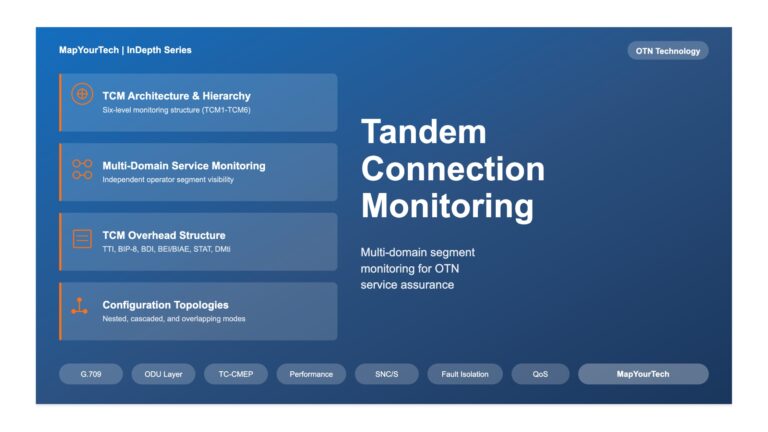

Tandem Connection Monitoring in OTN Networks

Tandem Connection Monitoring in Optical Transport Networks. Comprehensive guide to multi-domain service...

Free - December 3, 2025

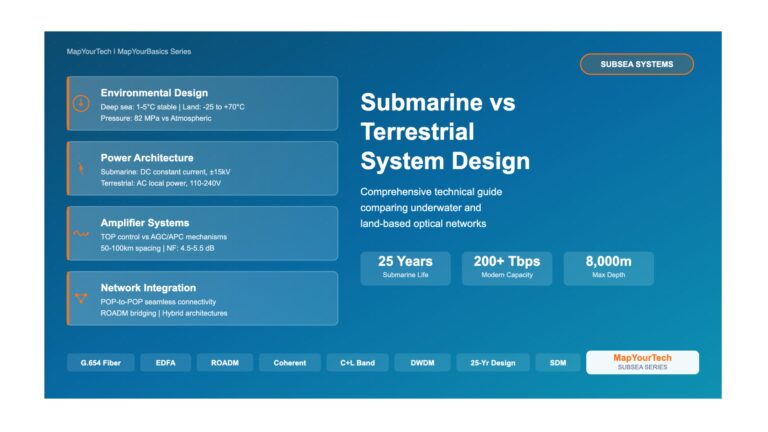

Submarine vs Terrestrial System Design Differences – Comprehensive Visual Guide

Practical Information Based on...

Free - November 30, 2025

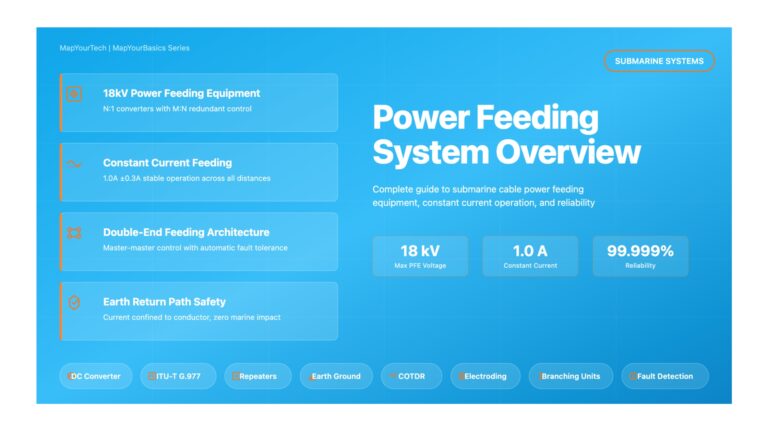

Power Feeding System Overview – Comprehensive Visual Guide

Power Feeding Equipment (PFE). Practical Information Based on...

Free - November 30, 2025

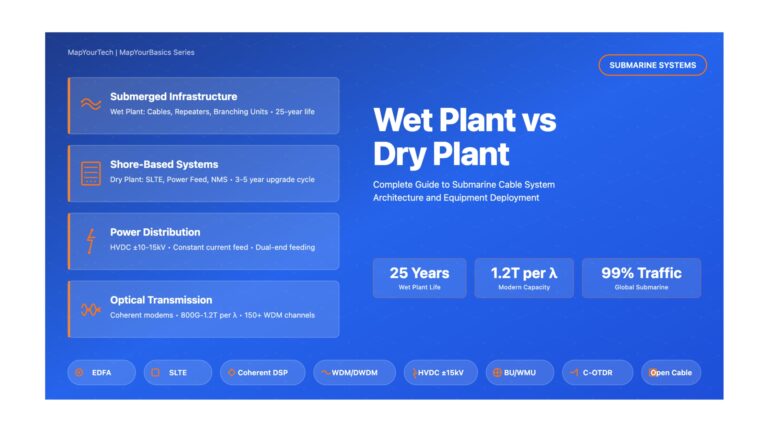

Wet Plant vs Dry Plant Equipment in Submarine Networks – Comprehensive Visual Guide

Free - November 30, 2025

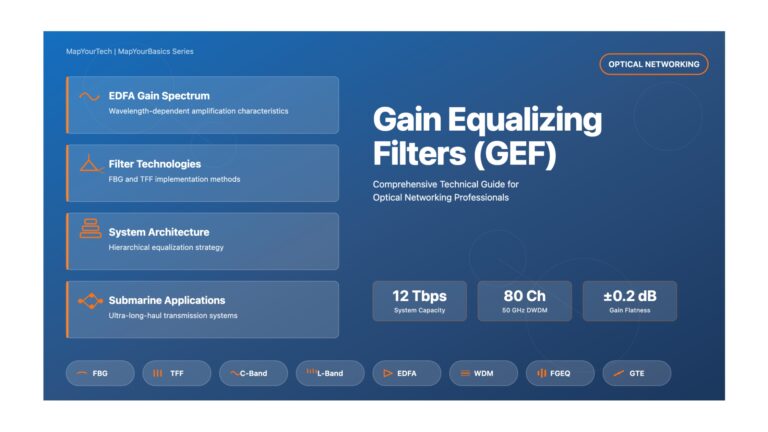

Gain Equalizing Filters (GEF) – Comprehensive Visual Guide

Overview of Gain Equalizing Filters (GEF). Comprehensive Technical Guide for...

Free - November 30, 2025

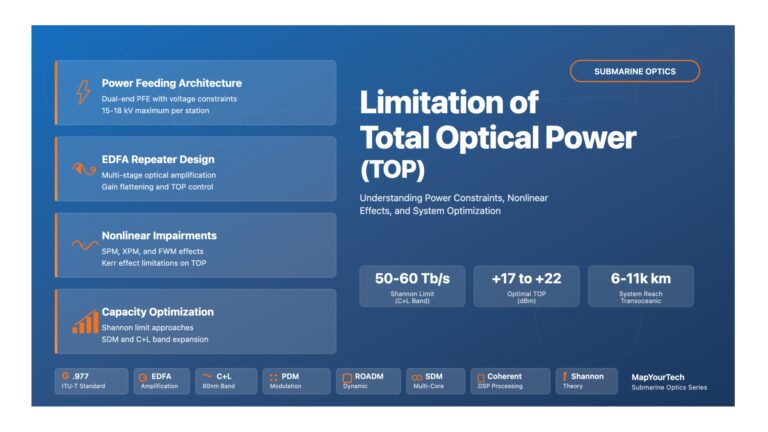

Limitation of Total Optical Power (TOP) in Submarine Optical Networks – Comprehensive Visual Guide

Free - November 29, 2025

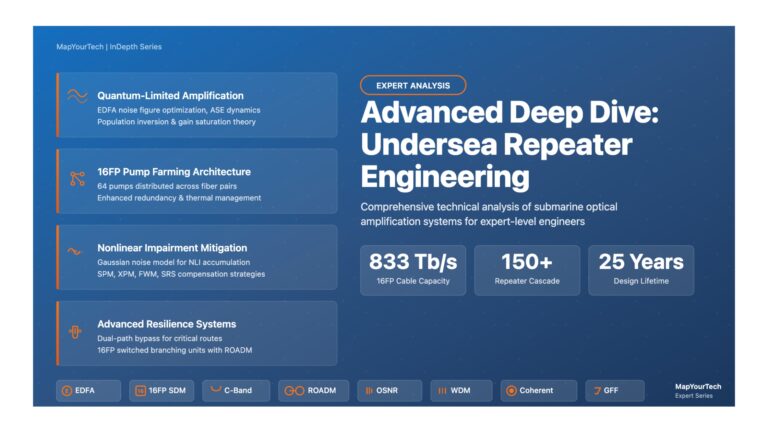

Advanced Deep Dive: Undersea Repeater Engineering

Comprehensive Technical Analysis of Submarine Optical Amplification Systems...

Free - November 29, 2025

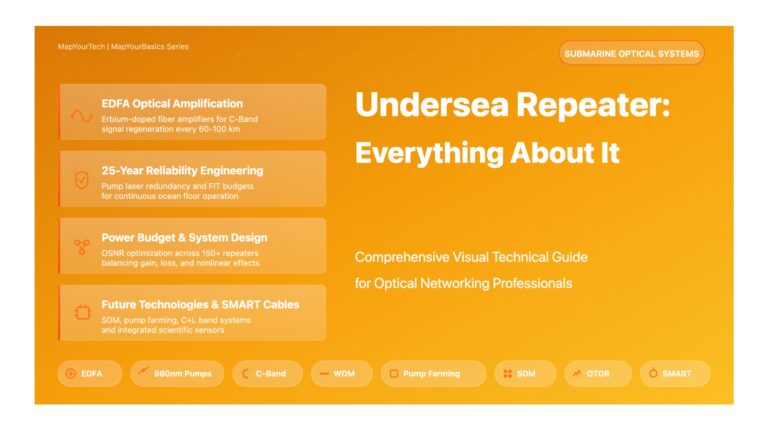

Undersea Repeater: Everything About It – Comprehensive Visual Guide

Comprehensive Visual Technical Guide for Optical...

Free - November 29, 2025
