The maximum number of erbium-doped fiber amplifiers (EDFAs) in a fiber chain is about four to six.

Explanation

The rule is based on the following rationales:

1. In-line EDFAs are spaced about 80 km apart, because this is the approximate distance at which the signal needs to be amplified.

2. One booster is used after the transmitter.

3. One preamplifier is used before the receiver.

4. After approximately 400 km, accumulated amplified spontaneous emission (ASE) noise approaches the signal level (degrading the optical signal-to-noise ratio [OSNR]), and regeneration is needed.
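The four rationales above can be turned into a quick count. The following is a minimal sketch, assuming a link made of equal-length spans with one in-line EDFA between adjacent spans; the function name `amplifier_count` and its return layout are illustrative, not from the source.

```python
import math


def amplifier_count(link_km: float, span_km: float = 80.0) -> dict:
    """Count amplifiers on a link: booster + in-line EDFAs + preamplifier.

    Assumes equal spans and one in-line EDFA at every span boundary
    (a simplification made for this illustration).
    """
    spans = math.ceil(link_km / span_km)
    inline = max(spans - 1, 0)  # amplifiers sit between spans, not at the ends
    return {
        "spans": spans,
        "inline": inline,
        "booster": 1,
        "preamp": 1,
        "total": inline + 2,
    }


# 400 km before regeneration, 80 km per span
print(amplifier_count(400))
```

For the 400 km and 80 km figures quoted above, this gives five spans, four in-line EDFAs, and six amplifiers in total once the booster and preamplifier are counted, which is consistent with the "four to six" range.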

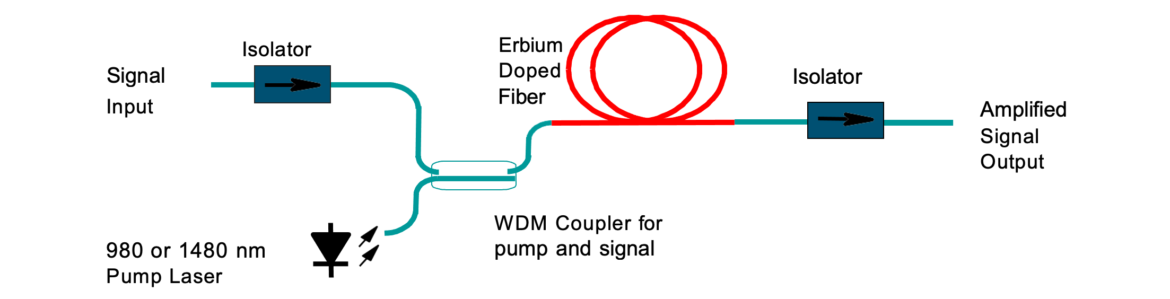

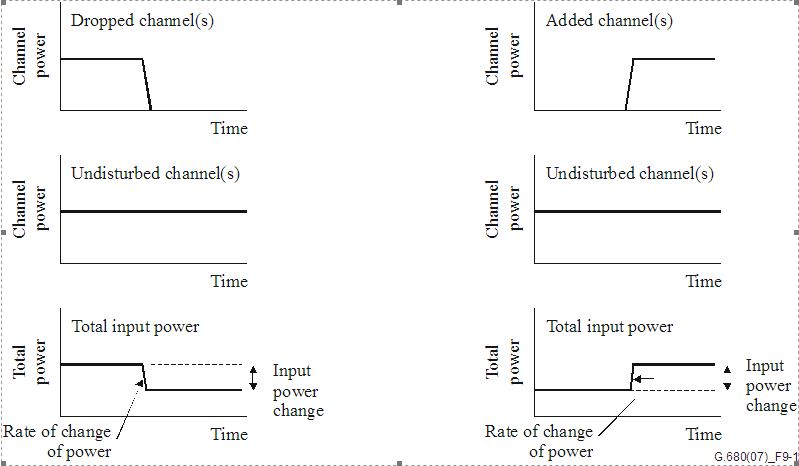

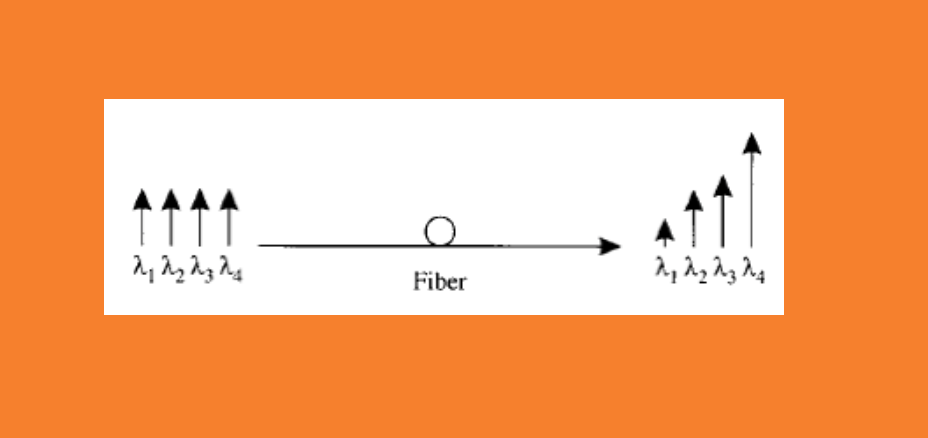

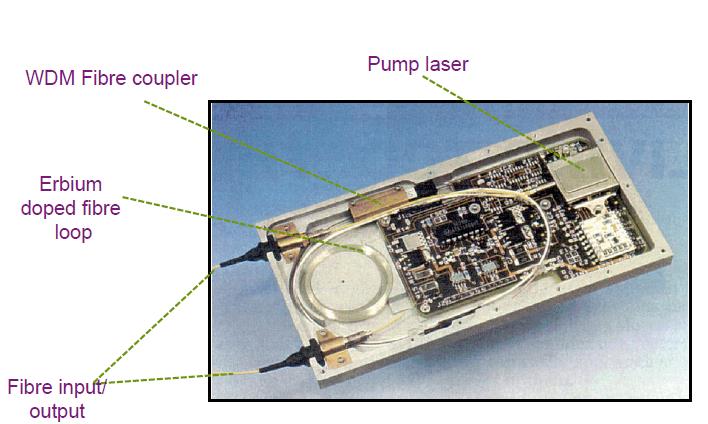

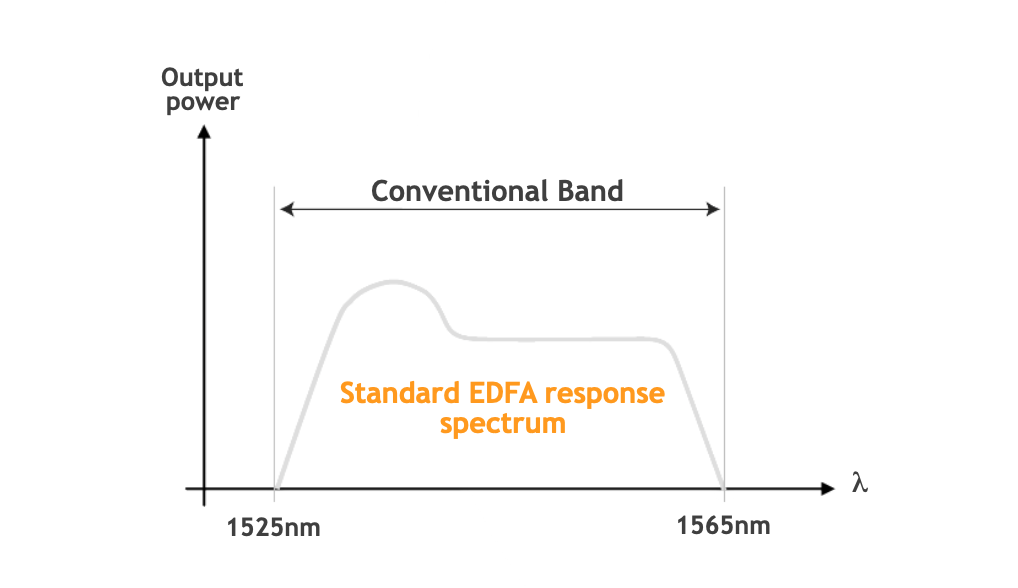

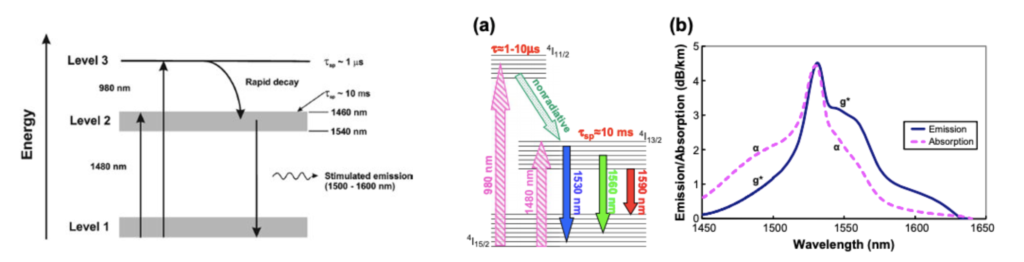

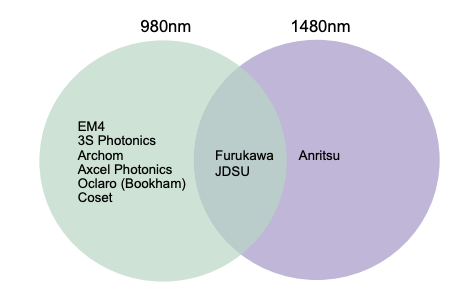

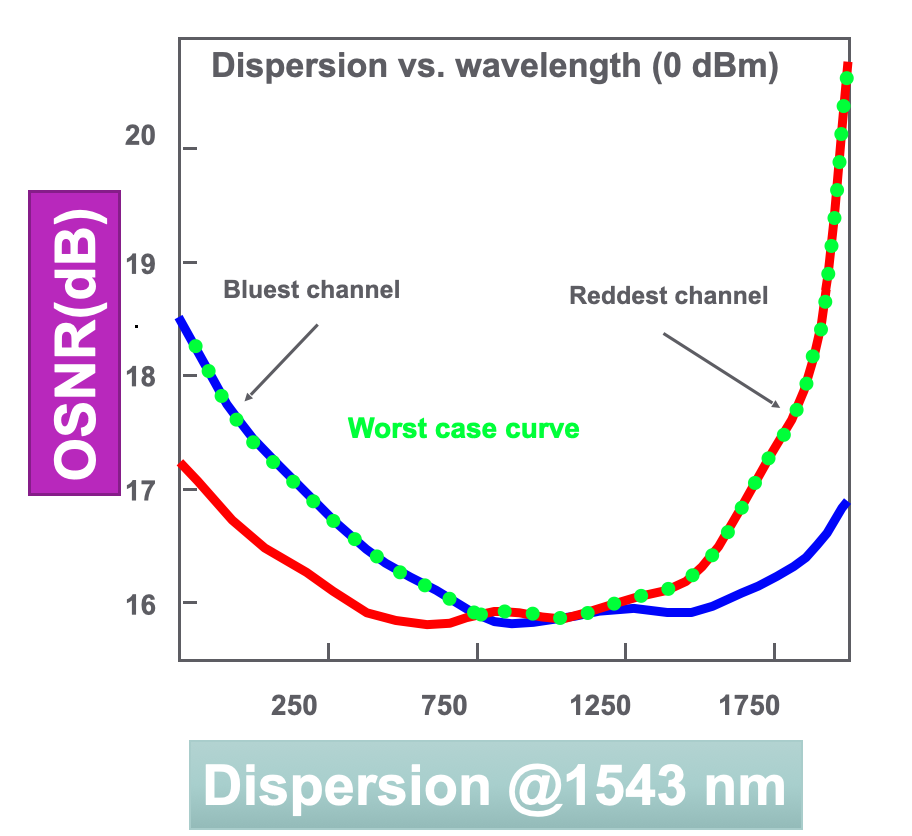

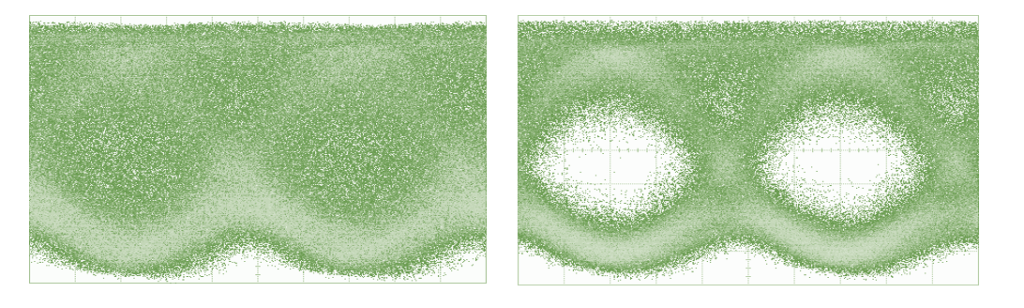

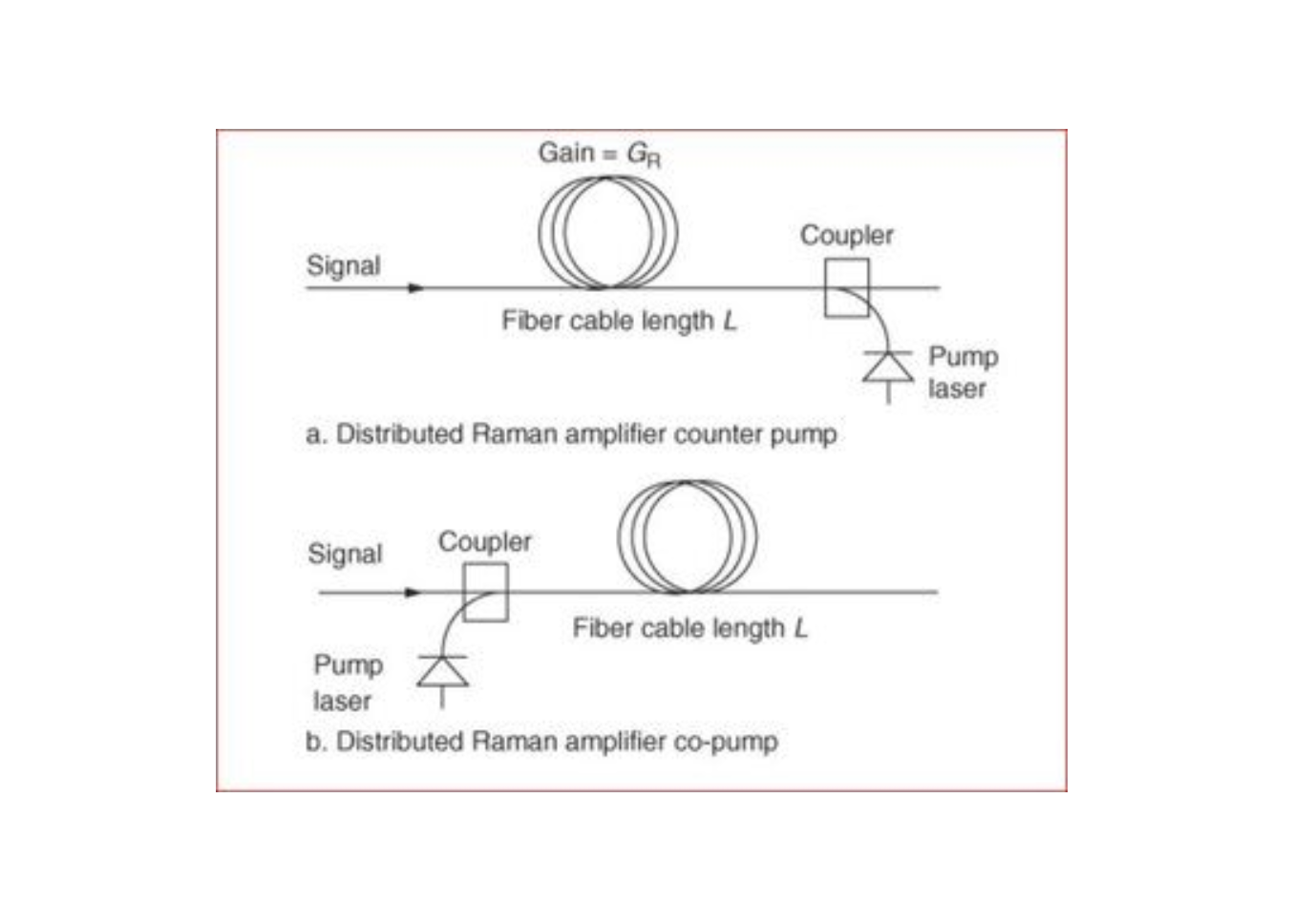

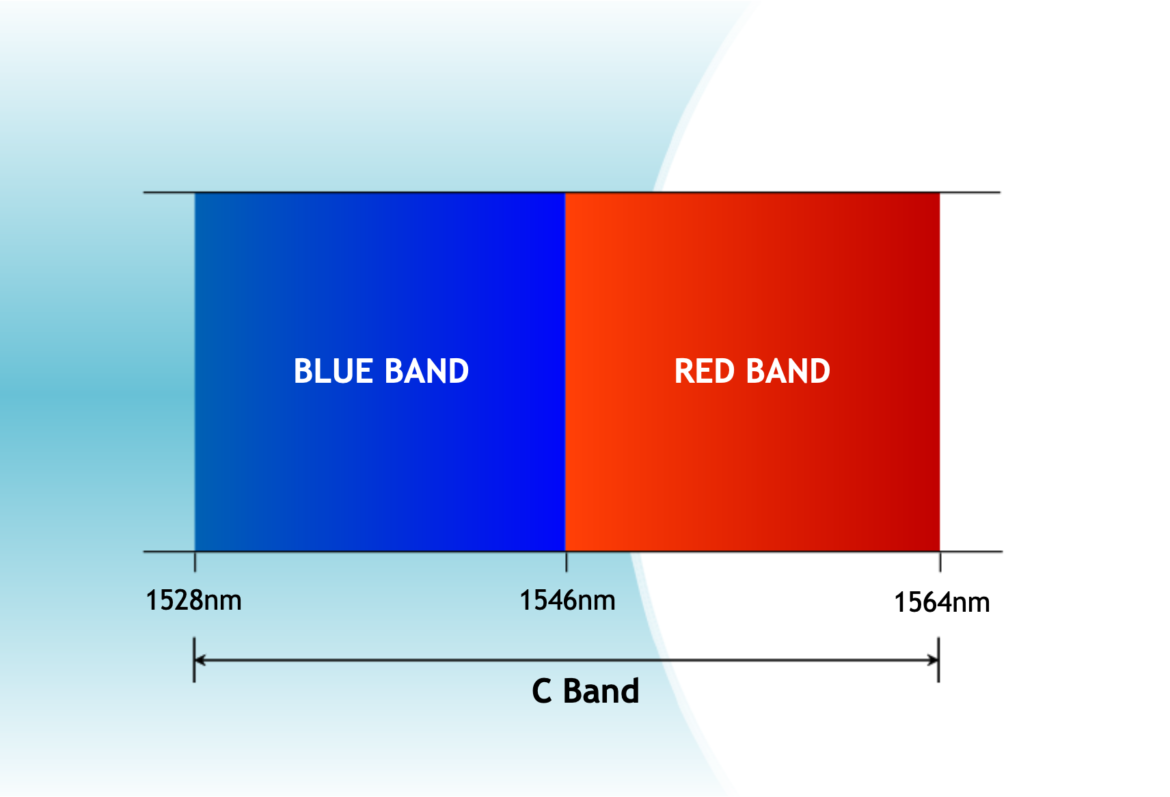

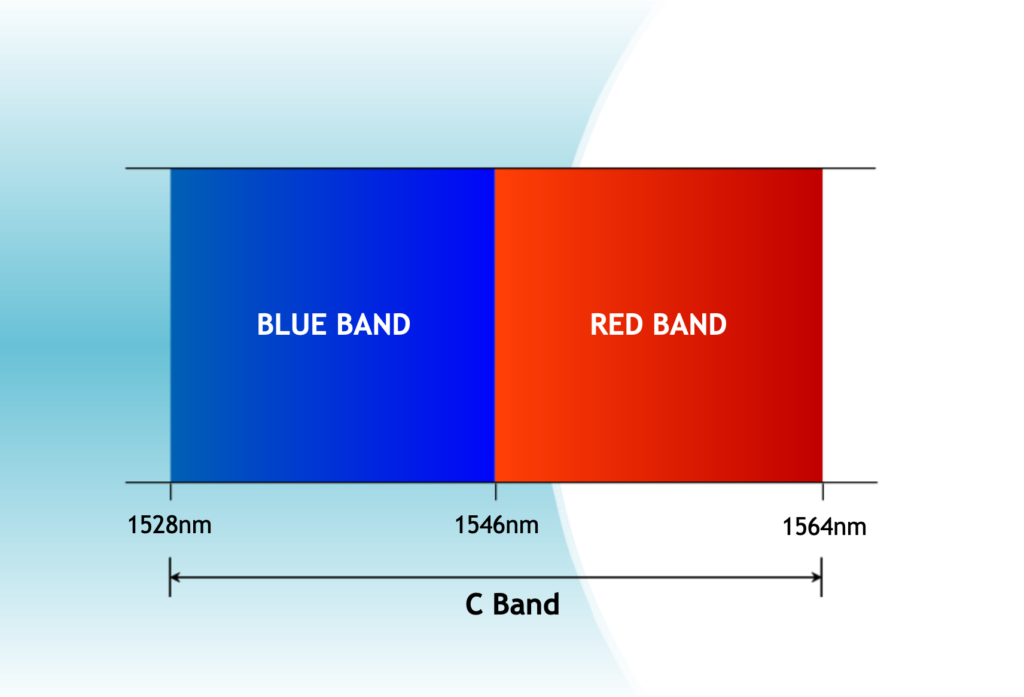

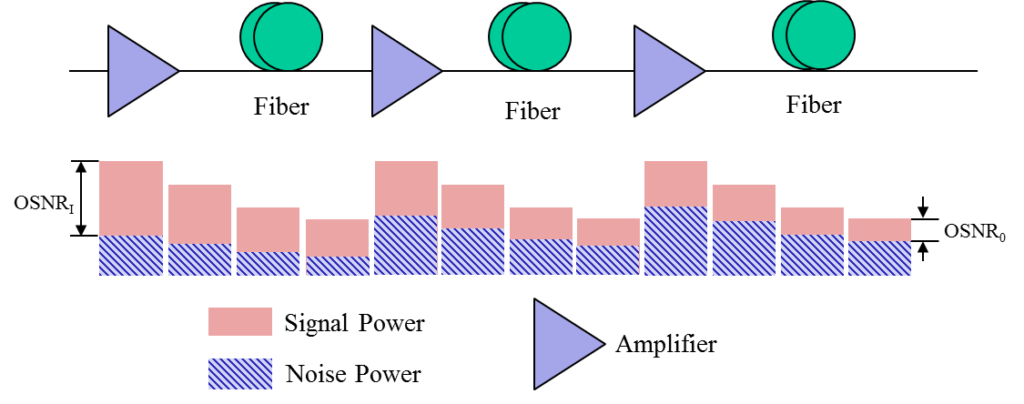

An EDFA amplifies every wavelength in its band, modulated and unmodulated light alike. Thus, every time it is used, the noise floor from amplified spontaneous emission (ASE) rises. Since the amplification actually adds power to each band (rather than multiplying it), the signal-to-noise ratio decreases at each amplification. EDFAs also work only in the C and L bands and are typically pumped with a 980- or 1480-nm laser to excite the erbium ions. About 100 m of fiber is needed for a 30-dB gain, but the gain curve is not flat, so a filter is usually included to equalize the gain across the C and L bands.

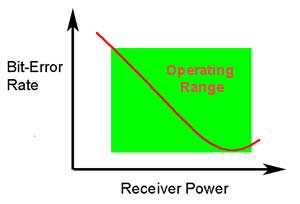

For example, assume that the modulated power is 0.5 mW and the noise from spontaneous emission is 0.01 mW. The signal-to-noise ratio is 0.5/0.01, or 50. If an EDFA adds 0.5 mW to both the modulated signal and the noise, then the modulated signal becomes 1 mW, the noise becomes 0.51 mW, and the SNR is reduced to about 2. After many amplifications, even if the total power is high, the optical signal-to-noise ratio becomes too low. This typically occurs after four to six amplifications.
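The arithmetic in this example can be checked with a few lines of Python. This is only a sketch of the simplified additive picture used in the text (the same power added to signal and noise at each stage), not a physical EDFA model; the function name `additive_snr` is made up for the illustration.

```python
import math


def additive_snr(signal_mw, noise_mw, added_mw, stages):
    """Track (signal, noise, SNR) through the text's additive toy model.

    Each stage adds `added_mw` to both the signal and the noise.
    This mirrors the worked example only; it is not a physical model.
    """
    history = [(signal_mw, noise_mw, signal_mw / noise_mw)]
    for _ in range(stages):
        signal_mw += added_mw
        noise_mw += added_mw
        history.append((signal_mw, noise_mw, signal_mw / noise_mw))
    return history


# Values from the example: 0.5 mW signal, 0.01 mW noise, 0.5 mW added once
for stage, (s, n, snr) in enumerate(additive_snr(0.5, 0.01, 0.5, 1)):
    snr_db = 10 * math.log10(snr)
    print(f"stage {stage}: signal={s:.2f} mW, noise={n:.2f} mW, "
          f"SNR={snr:.2f} ({snr_db:.1f} dB)")
```

With these inputs the noise after one stage is 0.01 + 0.5 = 0.51 mW, so the ratio falls from 50 to roughly 1.96.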

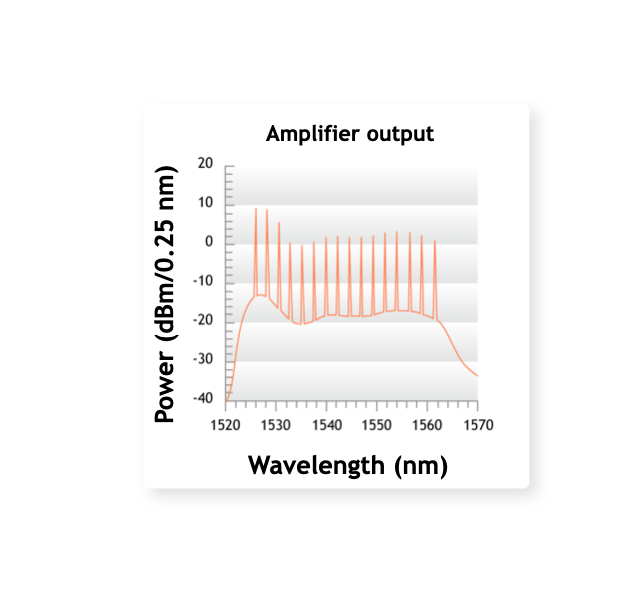

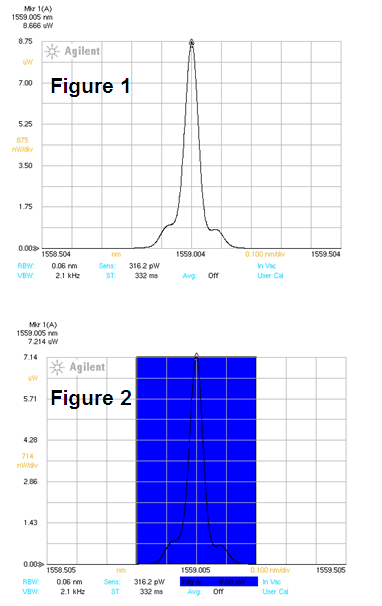

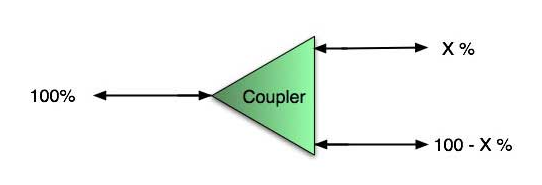

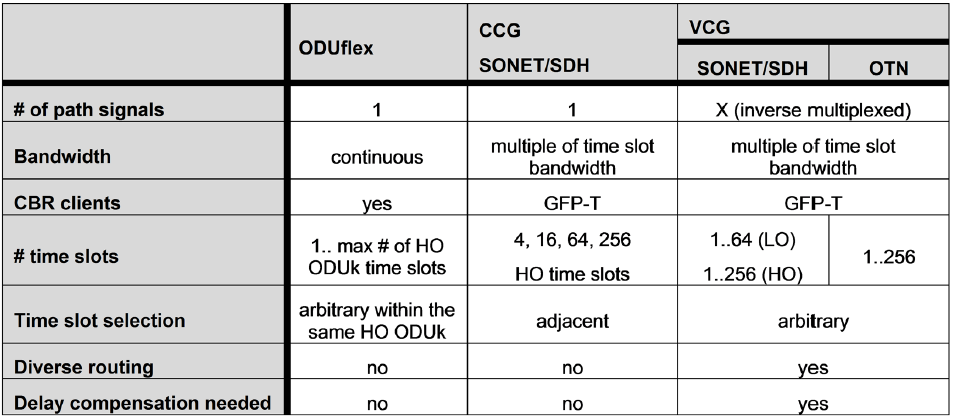

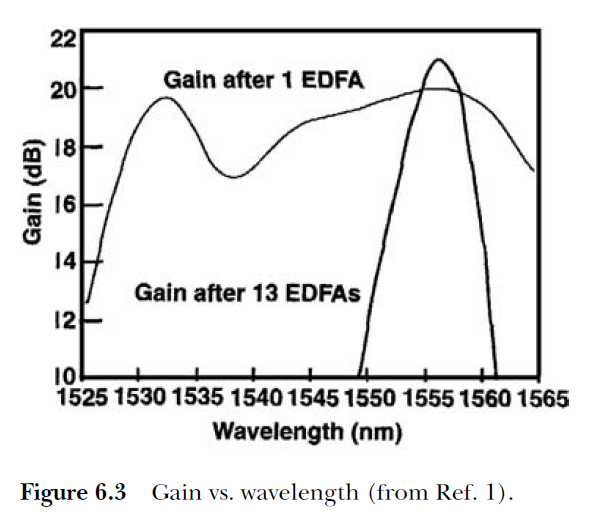

Another reason to limit the number of chained EDFAs is the nonuniform nature of the gain. Generally, the gain peaks at 1555 nm and falls off on each side, and it is a function of the inversion of Er³⁺. When a large number of EDFAs are cascaded, the slope of the gain is multiplied and becomes sharp, as indicated in Fig. 6.3. This results in too little gain bandwidth for a system. To help alleviate this effect, a gain-flattening device is often used, such as a Mach–Zehnder or a long-period grating filter.
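To see why the slope "multiplies", note that gains expressed in dB add along a cascade, so any per-channel gain difference grows in proportion to the number of amplifiers. The sketch below assumes identical amplifiers and no gain-flattening filter; the per-amplifier gain values are hypothetical numbers chosen for illustration, not measured data.

```python
def cascaded_gain_db(per_amp_gain_db, n_amps):
    """Total gain per wavelength after n identical amplifiers.

    Gains in dB add across a cascade, so per-channel differences
    scale linearly with the number of amplifiers.
    """
    return {wl: g * n_amps for wl, g in per_amp_gain_db.items()}


# Hypothetical per-amplifier gains in dB at three wavelengths in nm
# (peak placed at 1555 nm as in the text; values are assumptions).
per_amp = {1535: 19.0, 1555: 20.0, 1565: 19.5}

for n in (1, 6):
    total = cascaded_gain_db(per_amp, n)
    spread = max(total.values()) - min(total.values())
    print(f"{n} amplifier(s): gain spread across channels = {spread:.1f} dB")
```

In a real link the span loss offsets most of the total gain, but that loss is nearly the same for every channel, so the channel-to-channel spread still accumulates in this way unless a flattening filter removes it.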

References

1. A. Willner and Y. Xie, “Wavelength Domain Multiplexed (WDM) Fiber-optic Communications Networks,” in Handbook of Optics, Vol. 4, M. Bass, Ed., McGraw-Hill, New York, pp. 13–19, 2001.

2. http://www.pandacomdirekt.com/en/technologies/wdm/optical-amplifiers.html

3. http://blog.cubeoptics.com/index.php/2015/03/what-edfa-a-noise-source

Source: Optical Communications Rules of Thumb

Note: I have often heard optical engineers discussing the maximum number of amplifiers in a link, so I thought I would post this.