1. Introduction and Background

Optical networks form the backbone of modern communications infrastructure, enabling the high-speed transmission of vast amounts of data across global networks. While bandwidth has traditionally been the primary focus of network performance metrics, latency has emerged as an equally critical parameter, particularly for time-sensitive applications. Latency, defined as the delay from the time of packet transmission at the sender to the end of packet reception at the receiver, can significantly impact the overall performance of communication systems even when bandwidth remains constant.

The evolution of optical networking technologies has continually pushed the boundaries of data transmission capabilities. However, as applications become increasingly time-sensitive, the focus has shifted toward minimizing delay in addition to maximizing throughput. This shift represents a fundamental change in how we evaluate network performance and design optical communication systems.

Historically, optical networks were primarily designed to maximize bandwidth and transmission distance. The first-generation systems focused on basic point-to-point connections, while subsequent generations introduced wavelength division multiplexing (WDM) to increase capacity. Modern systems have evolved to include advanced techniques for dispersion compensation, signal amplification, and digital signal processing, all of which affect latency in various ways.

2. Fundamentals of Latency in Optical Systems

2.1 Physical Principles of Latency

At its most basic level, latency in optical fiber networks arises from the time it takes light to travel through the transmission medium. While light travels at approximately 299,792.458 km/s in vacuum, it propagates more slowly in optical fiber due to the refractive index of the material. This fundamental physical constraint establishes a lower bound on achievable latency.

The effective group index of refraction (neff) is a critical parameter that determines the actual speed of light in an optical fiber. It represents a weighted average of all the indices of refraction encountered by light as it travels within the fiber. For standard single-mode fiber (SMF) defined by ITU-T G.652 recommendation, the neff is approximately 1.4676 for transmission at 1310 nm and 1.4682 for transmission at 1550 nm wavelength.

Using these values, we can calculate the speed of light in optical fiber:

At 1310 nm wavelength: v₁₃₁₀ = c/neff = 299,792.458 km/s / 1.4676 ≈ 204,274.0 km/s

At 1550 nm wavelength: v₁₅₅₀ = c/neff = 299,792.458 km/s / 1.4682 ≈ 204,190.5 km/s

This translates to a propagation delay of approximately:

- 4.895 μs/km at 1310 nm

- 4.897 μs/km at 1550 nm

These values represent the theoretical minimum latency for signal transmission over optical fiber, assuming no additional delays from other network components or processing overhead.
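The arithmetic above can be reproduced with a few lines of Python. This is a minimal sketch: the function names are illustrative, and the group-index values are the ones quoted in the text.

```python
C_VACUUM_KM_S = 299_792.458  # speed of light in vacuum, km/s


def fiber_velocity_km_s(n_eff: float) -> float:
    """Group velocity in the fiber, v = c / n_eff."""
    return C_VACUUM_KM_S / n_eff


def delay_us_per_km(n_eff: float) -> float:
    """One-way propagation delay per kilometre, in microseconds."""
    return 1e6 / fiber_velocity_km_s(n_eff)


# Group indices for G.652 fiber as quoted in the text
for wavelength_nm, n_eff in ((1310, 1.4676), (1550, 1.4682)):
    print(f"{wavelength_nm} nm: {fiber_velocity_km_s(n_eff):,.1f} km/s, "
          f"{delay_us_per_km(n_eff):.3f} us/km")
```

The per-kilometre figures round to the 4.895 and 4.897 μs/km values listed above.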

2.2 Major Sources of Latency in Optical Networks

Beyond the inherent delay caused by light propagation in fiber, multiple components and processes contribute to the overall latency in optical networks. These can be broadly categorized into:

- Optical Fiber Delays:

  - Propagation delay (approximately 4.9 μs/km)

  - Additional delay due to fiber type and refractive index profile

- Optical Component Delays:

  - Amplifiers (e.g., EDFAs add approximately 0.15 μs due to 30 m of erbium-doped fiber)

  - Dispersion compensation modules (DCMs)

  - Fiber Bragg gratings (FBGs)

  - Optical switches and ROADMs (Reconfigurable Optical Add-Drop Multiplexers)

- Opto-Electrical Component Delays:

  - Transponders and muxponders (typically 5-10 μs per unit)

  - O-E-O conversion (approximately 100 μs)

  - Digital signal processing (up to 1 μs)

  - Forward Error Correction (15-150 μs depending on algorithm)

- Protocol and Processing Delays:

  - Higher OSI layer processing

  - Data packing and unpacking

  - Switching and routing decisions

The total end-to-end latency in an optical network is the sum of multiple delay components. While fiber propagation delay often constitutes the largest portion (60-80%), other components can add significant overhead. Understanding the relative contribution of each component is crucial for effective latency optimization.

3. Applications Requiring Low Latency

3.1 Financial Services

In the financial sector, particularly in high-frequency trading, latency can have a direct impact on profitability. Even a 10 ms delay can potentially result in a 10% drop in revenue. Modern trading systems have moved from executing transactions within seconds to requiring millisecond, microsecond, and now even nanosecond response times. Some institutions can complete transactions within 0.35 μs during high-frequency trading.

Key requirements:

- Ultra-low latency (sub-millisecond)

- High stability (minimal jitter)

- Predictable performance

3.2 Interactive Entertainment Services

Time-critical, bandwidth-hungry services such as 4K/8K video, virtual reality (VR), and augmented reality (AR) require low-latency networks to provide a seamless user experience. For VR services specifically, industry consensus suggests that latency should not exceed 20 ms to avoid vertigo and ensure a positive user experience.

Key requirements:

- Latency < 20 ms

- Consistent performance

- High bandwidth

3.3 IoT and Real-Time Cloud Services

Applications such as data hot backup, cloud desktop, and intra-city disaster recovery also benefit from low-latency networks. For optimal cloud desktop service experience and high-reliability intra-city data center disaster recovery, latency requirements are typically less than 20 ms.

Key requirements:

- Latency < 20 ms

- Reliability

- Scalability

3.4 5G and Autonomous Systems

Emerging technologies like autonomous driving require extremely low latency to function safely. For autonomous driving, the end-to-end latency requirement is approximately 5 ms, with a round-trip time (RTT) latency of 2 ms reserved for the transport network.

Key requirements:

- Latency < 1 ms for 5G URLLC services (about 5 ms end to end for autonomous driving)

- Ultra-high reliability

- Widespread coverage

| Application | Maximum Acceptable Latency | One-Way/Round-Trip | Critical Factors | Recommended Technologies |
|---|---|---|---|---|
| High-Frequency Trading | 0.35-1 ms | One-way | • Consistency • Deterministic performance | • Direct fiber routes • Hollow-core fiber • Minimal processing |
| Virtual/Augmented Reality | 20 ms | Round-trip | • Low jitter • Consistent performance | • Edge computing • Optimized backbone • Content caching |
| Cloud Services | 20 ms | Round-trip | • Reliability • Scalability | • Distributed data centers • Full-mesh topology • Protocol optimization |
| Autonomous Driving | 5 ms | Round-trip | • Ultrahigh reliability • Widespread coverage | • Mobile edge computing • 5G integration • URLLC protocols |
| 5G URLLC Services | 1 ms | Round-trip | • Coverage • Reliability | • Fiber fronthaul/backhaul • Distributed architecture |
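To relate these latency targets to physical reach, the following sketch converts a latency budget into the longest fiber span that fits it. It assumes the approximate 4.9 μs/km figure for standard SMF; the function name and the `equipment_us` parameter are hypothetical and stand for whatever non-fiber delay a real path would add.

```python
FIBER_DELAY_US_PER_KM = 4.9  # approximate one-way delay in standard SMF


def max_fiber_km(budget_ms: float, round_trip: bool,
                 equipment_us: float = 0.0) -> float:
    """Longest fiber span that fits the budget once equipment delay is removed.

    budget_ms    -- latency budget in milliseconds
    round_trip   -- True if the budget covers both directions
    equipment_us -- assumed one-way delay of non-fiber components (hypothetical)
    """
    one_way_us = budget_ms * 1000.0 / (2 if round_trip else 1)
    available_us = one_way_us - equipment_us
    return max(available_us, 0.0) / FIBER_DELAY_US_PER_KM


for name, budget_ms in (("5G URLLC", 1.0), ("Autonomous driving", 5.0),
                        ("VR/AR and cloud", 20.0)):
    print(f"{name}: up to {max_fiber_km(budget_ms, round_trip=True):.0f} km of fiber")
```

Under these assumptions a 1 ms round-trip budget allows roughly 102 km of fiber before any equipment delay is counted, which is why edge placement matters for the tightest targets.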

4. Mathematical Models and Analysis

4.1 Propagation Delay Modeling

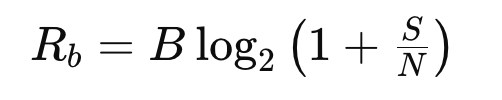

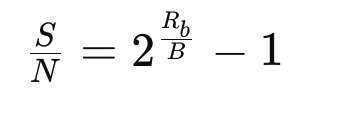

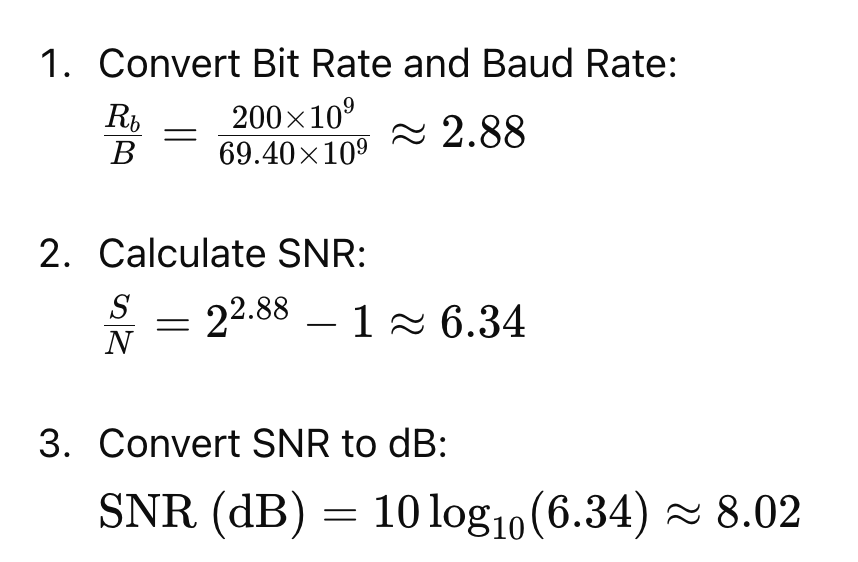

The propagation delay in optical fiber can be modeled mathematically as:

Delay (in seconds) = Length (in meters) / Velocity (in meters/second)

Where velocity is determined by:

Velocity = c / neff

With c being the speed of light in vacuum (299,792,458 m/s) and neff being the effective group index of refraction.

For a fiber of length L with an effective group index of refraction neff, the propagation delay T can be calculated as:

T = L × neff / c

This equation forms the foundation for understanding latency in optical networks and serves as the starting point for more complex analysis involving additional network components.

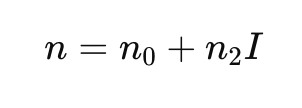

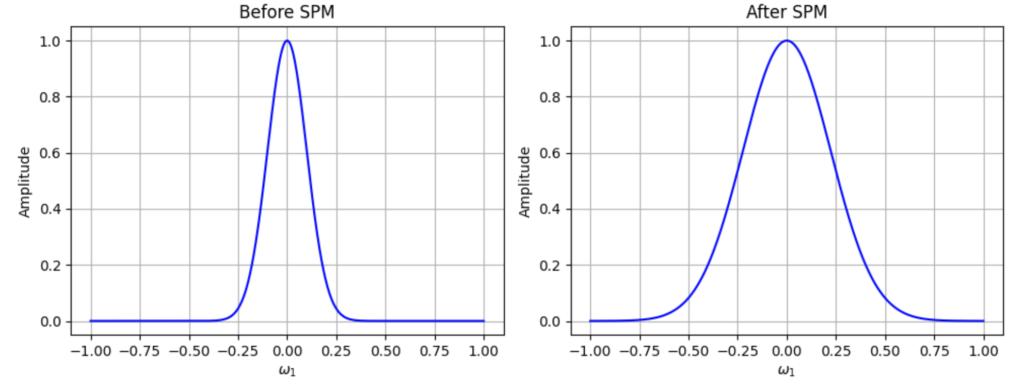

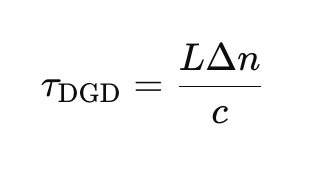

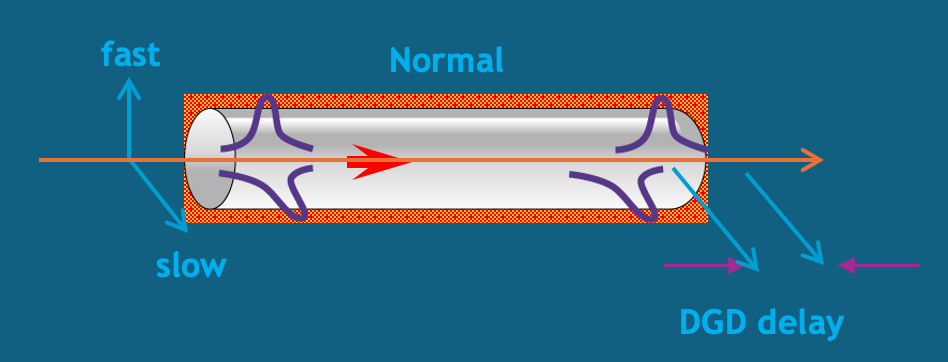

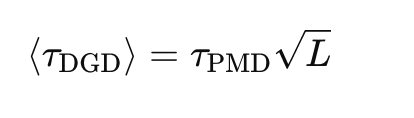

4.2 Chromatic Dispersion Effects on Latency

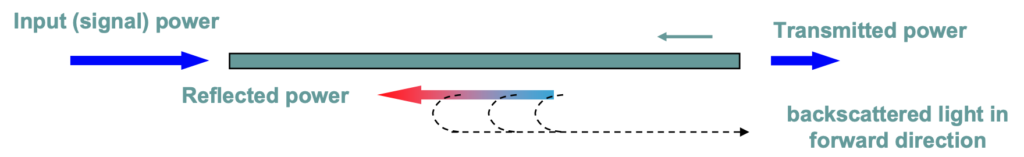

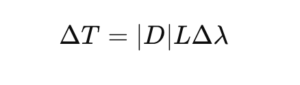

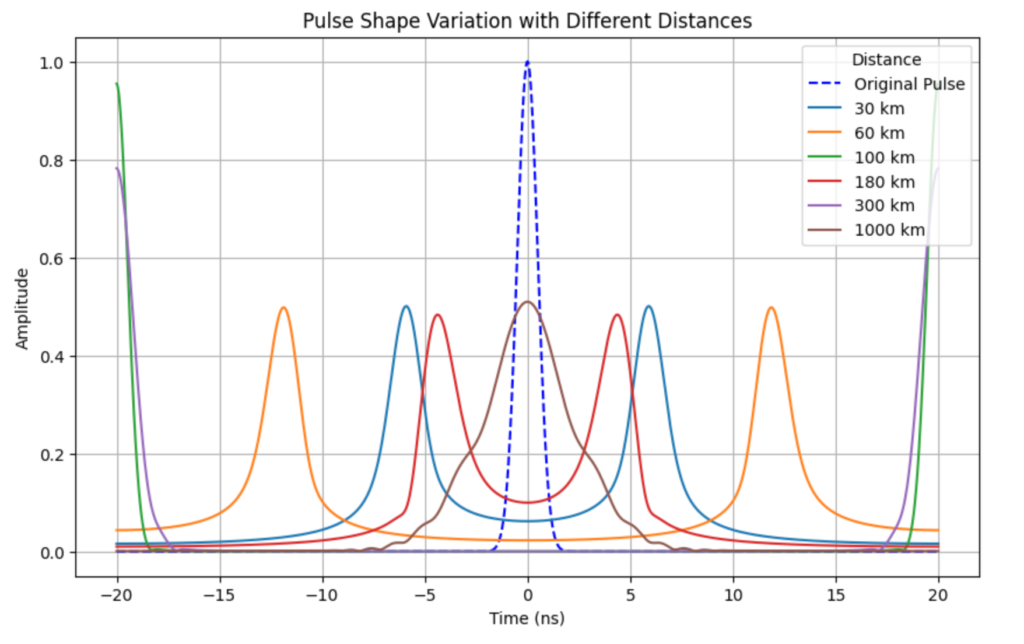

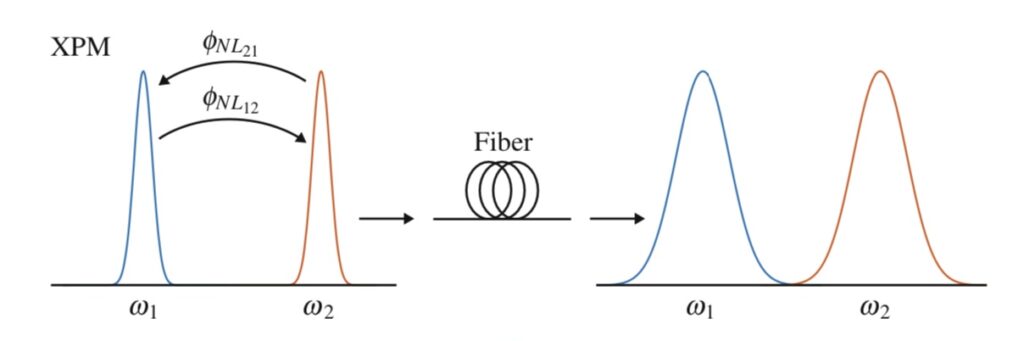

Chromatic dispersion (CD) occurs because different wavelengths of light travel at different speeds in optical fiber. This not only causes signal distortion but also contributes to latency. The accumulated chromatic dispersion D in a fiber of length L can be calculated as:

D = Dfiber × L

Where Dfiber is the dispersion coefficient of the fiber (typically measured in ps/nm/km).

For standard single-mode fiber (SMF) with a dispersion coefficient of approximately 17 ps/nm/km at 1550 nm, a 100 km fiber link would accumulate about 1700 ps/nm of dispersion, requiring compensation to maintain signal quality.
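The 100 km example can be checked numerically. The sketch below also applies the standard relation that pulse spreading equals accumulated dispersion times the source spectral width; the 0.1 nm spectral width is an assumed illustrative value, not a figure from the text.

```python
def accumulated_dispersion_ps_nm(d_fiber_ps_nm_km: float, length_km: float) -> float:
    """Accumulated chromatic dispersion D = D_fiber * L, in ps/nm."""
    return d_fiber_ps_nm_km * length_km


def pulse_spread_ps(d_fiber_ps_nm_km: float, length_km: float,
                    spectral_width_nm: float) -> float:
    """Pulse broadening = accumulated dispersion * source spectral width."""
    return accumulated_dispersion_ps_nm(d_fiber_ps_nm_km, length_km) * spectral_width_nm


# Standard SMF at 1550 nm over 100 km, as in the text
print(accumulated_dispersion_ps_nm(17.0, 100.0), "ps/nm accumulated")
# Assumed 0.1 nm spectral width (illustrative only)
print(pulse_spread_ps(17.0, 100.0, 0.1), "ps of spreading")
```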

4.3 Latency Budget Analysis

When designing low-latency optical networks, engineers often perform latency budget analysis to account for all sources of delay:

Total Latency = Tfiber + Tcomponents + Tprocessing + TFEC + TDSP

Where:

- Tfiber is the propagation delay in the fiber

- Tcomponents is the delay introduced by optical components

- Tprocessing is the delay from protocol and data processing (for example data packing and unpacking, switching, and higher-layer handling)

- TFEC is the delay from forward error correction

- TDSP is the delay from digital signal processing

This comprehensive approach allows for precise latency calculations and helps identify opportunities for optimization.
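A latency budget of this form is easy to keep in a small data structure. The sketch below is one possible layout, not a prescribed tool: the class and field names are hypothetical, and the sample values combine figures quoted earlier (100 km of fiber at 4.9 μs/km, two EDFAs, 1 μs of DSP, FEC at the upper end of the 15-150 μs range) with an assumed 5 μs of processing delay.

```python
from dataclasses import dataclass, fields


@dataclass
class LatencyBudget:
    """One-way delay contributions in microseconds, one field per budget term."""
    fiber_us: float
    components_us: float
    processing_us: float
    fec_us: float
    dsp_us: float

    def total_us(self) -> float:
        """Sum of all contributions."""
        return sum(getattr(self, f.name) for f in fields(self))

    def shares(self) -> dict:
        """Fraction of the total contributed by each term."""
        total = self.total_us()
        return {f.name: getattr(self, f.name) / total for f in fields(self)}


budget = LatencyBudget(
    fiber_us=100 * 4.9,   # 100 km of standard SMF
    components_us=0.3,    # two EDFAs at 0.15 us each
    processing_us=5.0,    # assumed value for illustration
    fec_us=150.0,         # upper end of the 15-150 us range
    dsp_us=1.0,           # coherent DSP, up to 1 us
)

print(f"total: {budget.total_us():.1f} us")
for name, share in budget.shares().items():
    print(f"{name}: {share:.1%}")
```

With these inputs the fiber term accounts for roughly three quarters of the total, which falls inside the 60-80% range cited for fiber propagation.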

5. Latency Optimization Strategies

| Component | Typical Latency | Percentage of Total Latency | Optimization Techniques | Latency Reduction Potential |
|---|---|---|---|---|
| Fiber Propagation | 4.9 µs/km | 60-80% | • Hollow-core fiber • Route optimization • Straight-line deployment | Up to 31% |
| Amplification | 0.15 µs per EDFA | 1-3% | • Raman amplification • Minimizing amplifier count • Optimized EDFA design | Up to 90% |
| Dispersion Compensation | 15-25% of fiber delay | 10-20% | • FBG instead of DCF • Coherent detection with DSP • Dispersion-shifted fiber | 95-99% |
| OEO Conversion | ~100 µs | 5-15% | • All-optical switching (ROADM/OXC) • Optimized transponders • Reducing conversion points | Up to 100% (elimination) |
| Forward Error Correction | 15-150 µs | 5-20% | • Optimized FEC algorithms • Flexible FEC levels • Low-latency coding schemes | 50-90% |
| Protocol Processing | Variable (ns to ms) | 1-10% | • Lower OSI layer protocols • Protocol stack simplification • Hardware acceleration | 50-70% |

5.1 Route Optimization

One of the most direct approaches to reducing latency is optimizing the physical route of the optical fiber. This can involve:

- Deploying fiber along the shortest possible path between endpoints

- Simplifying network architecture to reduce forwarding nodes

- Constructing one-hop transmission networks to reduce system latency

- Optimizing conventional ring or chain topologies to full-mesh topologies during backbone network planning

Such optimizations reduce the physical distance light must travel, thereby minimizing propagation delay.
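The effect of topology on path latency can be illustrated with a shortest-path search over link delays. The sketch below uses Dijkstra's algorithm with fiber delay as the link weight plus a fixed delay at each intermediate node; the four-node topology, the distances, and the 10 μs per-node delay are all hypothetical values chosen for illustration.

```python
import heapq

FIBER_DELAY_US_PER_KM = 4.9  # approximate one-way delay in standard SMF


def lowest_latency_path(links, node_delay_us, src, dst):
    """Dijkstra over fiber delay plus a fixed delay per intermediate node.

    links         -- dict: node -> list of (neighbor, fiber_km)
    node_delay_us -- assumed forwarding delay at each intermediate node
    Returns (latency_us, path); (inf, []) if dst is unreachable.
    """
    best = {src: 0.0}
    queue = [(0.0, src, [src])]
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry
        for neighbor, km in links.get(node, []):
            new_cost = cost + km * FIBER_DELAY_US_PER_KM
            if neighbor != dst:
                new_cost += node_delay_us  # traffic is forwarded at this node
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                heapq.heappush(queue, (new_cost, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical chain topology A-B-C-D with 40 km spans
chain = {
    "A": [("B", 40.0)],
    "B": [("A", 40.0), ("C", 40.0)],
    "C": [("B", 40.0), ("D", 40.0)],
    "D": [("C", 40.0)],
}
# Same network with a direct 100 km A-D link added (one-hop path)
mesh = {node: list(neighbors) for node, neighbors in chain.items()}
mesh["A"].append(("D", 100.0))
mesh["D"].append(("A", 100.0))

print(lowest_latency_path(chain, 10.0, "A", "D"))
print(lowest_latency_path(mesh, 10.0, "A", "D"))
```

In this example the direct link wins because it is both shorter in fiber distance and free of intermediate forwarding nodes.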

5.2 Fiber Type Selection

Different types of optical fibers offer varying latency characteristics:

- Standard Single-Mode Fiber (SMF, G.652): The most commonly deployed fiber type with neff of approximately 1.467-1.468.

- Non-Zero Dispersion-Shifted Fiber (NZ-DSF, G.655): Optimized for regional and metropolitan high-speed optical networks operating in the C- and L-optical bands. These fibers have lower chromatic dispersion (typically 2.6-6.0 ps/nm/km in C-band) than standard SMF, requiring simpler dispersion compensation that adds only up to 5% to the transmission time.

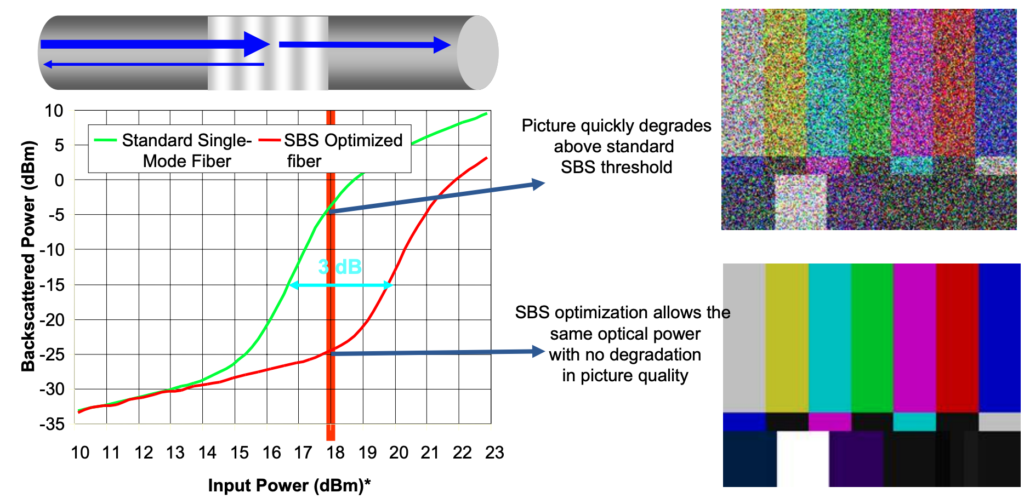

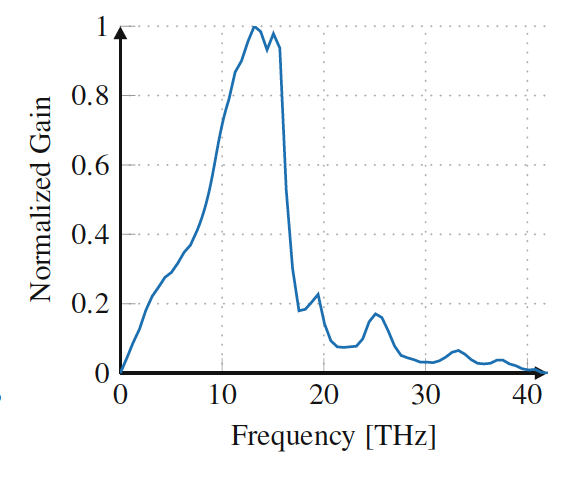

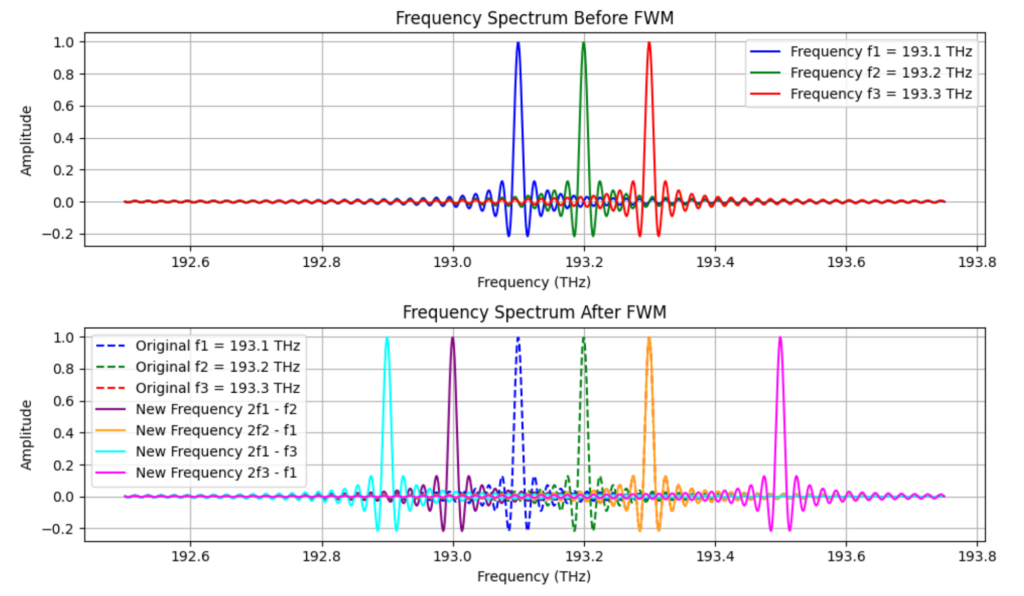

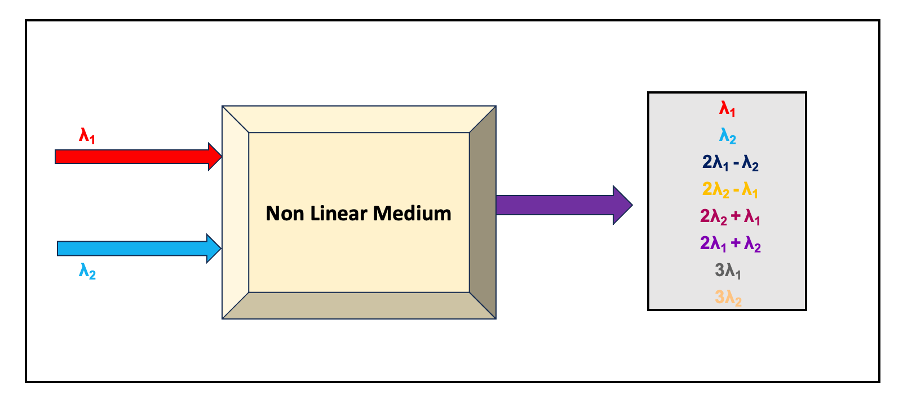

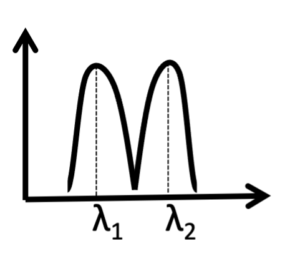

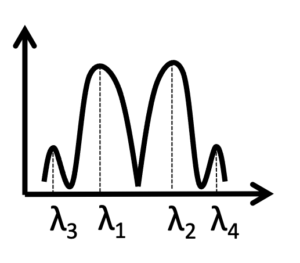

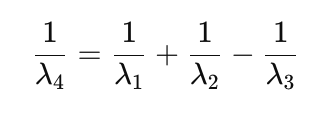

- Dispersion-Shifted Fiber (DSF, G.653): Optimized for use in the 1550 nm region with zero chromatic dispersion at 1550 nm wavelength, potentially eliminating the need for dispersion compensation. However, it’s limited to single-wavelength operation due to nonlinear effects like four-wave mixing (FWM).

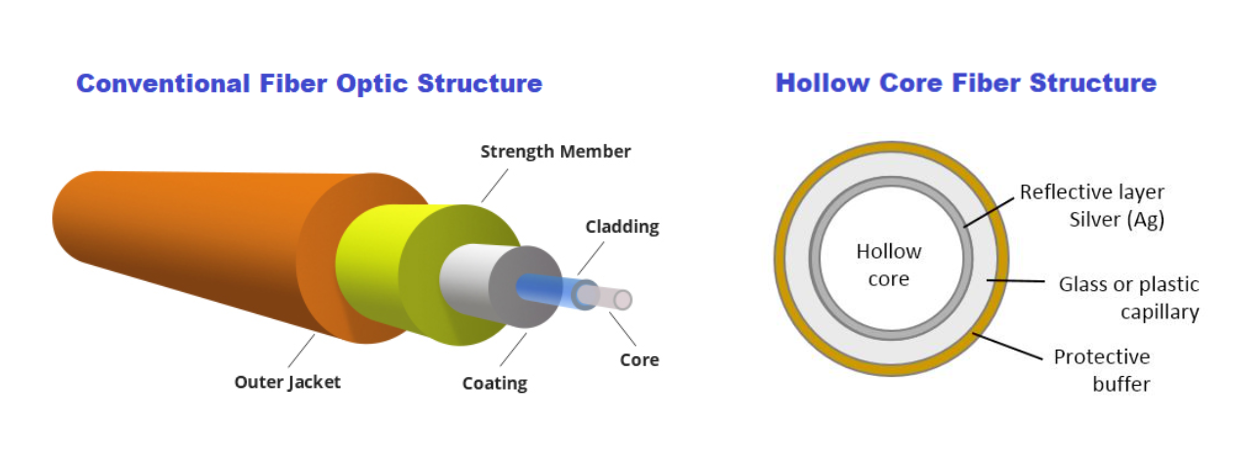

- Photonic Crystal Fibers (PCFs): These specialty fibers can have very low effective refractive indices. Hollow-core fibers (HCFs), a type of PCF, may provide up to 31% reduced latency compared to traditional fibers. However, they typically have higher attenuation (3.3 dB/km compared to 0.2 dB/km for SMF at 1550 nm), though recent advances have achieved attenuations as low as 1.2 dB/km.

Selecting the appropriate fiber type based on specific application requirements can significantly reduce latency.

| Fiber Type | Effective Group Index | Propagation Delay | Attenuation (at 1550 nm) | Special Considerations | Best Use Cases |
|---|---|---|---|---|---|
| Standard SMF (G.652) | ~1.4682 | 4.9 µs/km | 0.2 dB/km | • Widely deployed • Cost-effective | • General purpose • Long-haul with DCM |
| NZ-DSF (G.655) | ~1.47 | 4.9 µs/km | 0.2 dB/km | • Lower dispersion • Simpler compensation | • Regional networks • Metropolitan areas |
| DSF (G.653) | ~1.47 | 4.9 µs/km | 0.2 dB/km | • Zero dispersion at 1550 nm • FWM limitations | • Single-wavelength • Point-to-point |
| Hollow-Core Fiber | 1.01-1.2 | 3.4-4.0 µs/km | 1.2-3.3 dB/km | • Higher attenuation • Specialized connectors | • Ultra-low latency • Short critical links |
| Multi-Core Fiber | ~1.46-1.47 | 4.9 µs/km | 0.2 dB/km | • Higher capacity • Complex termination | • High capacity • Space-constrained routes |
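The latency saving of hollow-core fiber follows directly from the group indices in the table. The sketch below compares link delay for standard SMF and for the two ends of the hollow-core index range; the dictionary keys and function names are illustrative.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s

# Group indices taken from the table above
FIBER_GROUP_INDEX = {
    "Standard SMF (G.652)": 1.4682,
    "Hollow-core (n = 1.01)": 1.01,
    "Hollow-core (n = 1.2)": 1.2,
}


def link_delay_us(n_eff: float, length_km: float) -> float:
    """One-way propagation delay T = L * n_eff / c, in microseconds."""
    return length_km * n_eff / C_KM_PER_S * 1e6


def saving_vs_smf_percent(n_eff: float) -> float:
    """Relative delay reduction compared with standard SMF."""
    n_smf = FIBER_GROUP_INDEX["Standard SMF (G.652)"]
    return (n_smf - n_eff) / n_smf * 100.0


for name, n_eff in FIBER_GROUP_INDEX.items():
    print(f"{name}: {link_delay_us(n_eff, 100.0):.1f} us over 100 km, "
          f"{saving_vs_smf_percent(n_eff):.1f}% saving")
```

An index of 1.01 gives a saving of about 31%, matching the "up to 31%" figure quoted for hollow-core fiber.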

5.3 Optical-Layer Optimization

Several techniques can be employed at the optical layer to minimize latency:

- Coherent Communication Technology: Leveraging coherent detection eliminates the need for dispersion compensation fibers (DCFs), reducing latency. However, it introduces additional digital signal processing that can add up to 1 μs of delay.

- Fiber Bragg Grating (FBG) for Dispersion Compensation: Replacing DCF-based dispersion compensation modules with FBG-based ones can significantly reduce latency. While DCF adds 15-25% to the fiber propagation time, FBG typically introduces only 5-50 ns of delay.

- ROADM/OXC Implementation: Using Reconfigurable Optical Add-Drop Multiplexers (ROADM) and Optical Cross-Connects (OXC) enables optical-layer pass-through and switching, reducing the number of optical-electrical-optical (OEO) conversions.

- Raman Amplification: Replacing erbium-doped fiber amplifiers (EDFAs) with Raman amplifiers eliminates the need for erbium-doped fibers, avoiding the extra latency they introduce (typically 0.15 μs for 30m of erbium-doped fiber). Raman amplifiers also effectively extend all-optical transmission distances, reducing the need for electrical regeneration.
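To put the DCF and FBG figures side by side, the following sketch computes the delay that DCF-based compensation adds on a link of a given length and compares it with the FBG range. It assumes 4.9 μs/km for the transmission fiber; the function and constant names are illustrative.

```python
FIBER_DELAY_US_PER_KM = 4.9          # approximate one-way delay in standard SMF
FBG_DELAY_RANGE_US = (0.005, 0.05)   # 5-50 ns, as quoted in the text


def dcf_delay_range_us(length_km: float,
                       low_fraction: float = 0.15,
                       high_fraction: float = 0.25):
    """Extra delay from DCF, taken as 15-25% of the fiber propagation delay."""
    fiber_delay_us = length_km * FIBER_DELAY_US_PER_KM
    return fiber_delay_us * low_fraction, fiber_delay_us * high_fraction


low_us, high_us = dcf_delay_range_us(100.0)
print(f"DCF on a 100 km link: {low_us:.1f}-{high_us:.1f} us")
print(f"FBG: {FBG_DELAY_RANGE_US[0] * 1000:.0f}-{FBG_DELAY_RANGE_US[1] * 1000:.0f} ns")
```

On a 100 km link the DCF penalty is tens of microseconds, more than three orders of magnitude above the worst-case FBG delay.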

5.4 Electrical-Layer Optimization

At the electrical layer, several strategies can be employed to reduce latency:

- FEC Algorithm Optimization: Optimizing Forward Error Correction (FEC) algorithms can increase transmission distance while reducing latency penalties. Some systems allow flexible setting of FEC levels to balance error correction capability against latency.

- Transponder Selection: Simpler transponders without FEC or in-band management channels can operate at much lower latencies (4-30 ns compared to 5-10 μs for more complex units). Some vendors claim transponders operating with as little as 2 ns latency.

- Minimizing OEO Conversion: Avoiding optical-electrical-optical (OEO) conversion, which typically adds about 100 μs of latency, is crucial for low-latency networks.

- Flexible Service Encapsulation: Optimizing the service encapsulation mode can reduce processing overhead and associated latency.

5.5 Protocol Optimization

Network protocols significantly impact latency. WDM/OTN technologies operate at the optical (L0) and physical/transport (L1) layers, below the OSI data link layer, and therefore offer the lowest possible latency:

- Lower OSI Layers: Protocols operating at lower OSI layers (L0/L1) introduce less latency (nanosecond level) compared to higher layers like L3/L4 (millisecond to hundreds of milliseconds).

- Protocol Simplification: Simplifying protocol stacks from 5 layers to 2 layers can reduce single-site latency by up to 70%.

- WDM/OTN Adoption: WDM/OTN technology provides latency close to the physical limit, with most latency coming from fiber transmission rather than protocol processing.

The most effective latency reduction strategies address multiple components in the optical network chain. For ultra-low latency applications like high-frequency trading, every microsecond matters, and optimizations must consider the entire path from end to end, including physical routes, component selection, and protocol implementation.

6. Practical Implementation and Recommendations

| Component | Typical Implementation | Latency | Optimized Implementation | Latency | Reduction |
|---|---|---|---|---|---|
| Fiber (100 km) | Standard SMF | 490 µs | Hollow-core fiber | 338 µs | 31% |
| Amplifiers | 2 EDFAs | 0.3 µs | 1 Raman amplifier | 0 µs | 100% |
| Dispersion Compensation | DCF modules | 98 µs | FBG-based or coherent | 0.05 µs | 99.9% |
| Transponders | Standard with FEC | 10 µs | Low-latency specialized | 0.03 µs | 99.7% |
| OEO Conversion | 1 regeneration point | 100 µs | All-optical path | 0 µs | 100% |
| Protocol Processing | Multiple OSI layers | 5 µs | L0/L1 only | 0.5 µs | 90% |
| Total End-to-End | | 703.3 µs | | 338.58 µs | 51.9% |
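The totals in this table can be verified, and the components ranked by absolute saving, with a short script. The row values are copied from the table; the variable and function names are illustrative.

```python
# (component, typical_us, optimized_us), copied from the table above
ROWS = [
    ("Fiber (100 km)", 490.0, 338.0),
    ("Amplifiers", 0.3, 0.0),
    ("Dispersion compensation", 98.0, 0.05),
    ("Transponders", 10.0, 0.03),
    ("OEO conversion", 100.0, 0.0),
    ("Protocol processing", 5.0, 0.5),
]


def totals(rows):
    """Return (typical total, optimized total, percentage reduction)."""
    typical = sum(row[1] for row in rows)
    optimized = sum(row[2] for row in rows)
    return typical, optimized, (typical - optimized) / typical * 100.0


def savings_ranked(rows):
    """Components sorted by absolute saving in microseconds, largest first."""
    return sorted(((name, typ - opt) for name, typ, opt in rows),
                  key=lambda item: item[1], reverse=True)


typical_us, optimized_us, reduction_pct = totals(ROWS)
print(f"{typical_us:.1f} us -> {optimized_us:.2f} us ({reduction_pct:.1f}% lower)")
for name, saved_us in savings_ranked(ROWS):
    print(f"{name}: {saved_us:.2f} us saved")
```

Ranking by absolute saving shows that the fiber, OEO, and dispersion-compensation rows dominate, even though several smaller rows show percentage reductions near 100%.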

6.1 Network Architecture Design

When designing low-latency optical networks, consider the following architectural principles:

- Direct Connectivity: Implement direct fiber connections between critical nodes rather than routing through intermediate points.

- Mesh Topology: Adopt a mesh topology rather than ring or chain topologies to minimize hop count between endpoints.

- Physical Route Planning: Carefully plan fiber routes to minimize physical distance, even if it means higher initial deployment costs.

- Redundancy with Latency Awareness: Design redundant paths with similar latency characteristics to maintain consistent performance during failover events.

6.2 Component Selection Guidelines

Select network components based on their latency characteristics:

- Fiber Selection:

  - Use hollow-core or photonic crystal fibers for ultra-low-latency requirements where budget permits

  - Consider NZ-DSF (G.655) for metropolitan networks to reduce dispersion compensation needs

- Amplification:

  - Prefer Raman amplification over EDFA where possible

  - If using EDFA, select designs with minimal erbium-doped fiber length

- Dispersion Compensation:

  - Choose FBG-based compensation over DCF-based solutions

  - For ultra-low-latency applications, consider coherent detection with electrical dispersion compensation, weighing the DSP latency against DCF latency

- Transponders and Muxponders:

  - Select simple transponders without unnecessary functionality for critical low-latency paths

  - Consider latency-optimized transponders (some vendors offer units with 2-30 ns latency)

  - Evaluate the trade-off between FEC capability and latency impact

6.3 Management and Monitoring

Implementing effective latency management and monitoring is crucial:

- Latency SLA Definition: Clearly define latency Service Level Agreements (SLAs) for different application requirements.

- Threshold Monitoring: Implement latency threshold crossing and jitter alarm functions to detect when service latency exceeds predefined thresholds.

- Dynamic Routing: Utilize latency optimization functions to reroute services whose latency exceeds thresholds, ensuring committed SLAs are maintained.

- Regular Testing: Perform regular latency tests and measurements to identify degradation or opportunities for improvement.

- End-to-End Visibility: Implement monitoring tools that provide visibility into all components contributing to latency.
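Threshold-crossing and jitter alarms of the kind listed above can be sketched as a check over a window of latency measurements. This is a simplified illustration, not a vendor feature: the function name and thresholds are hypothetical, the window is assumed to be non-empty, and jitter is taken here as the mean absolute difference between consecutive samples, which is only one of several common definitions.

```python
from statistics import mean


def check_latency_window(samples_us, latency_threshold_us, jitter_threshold_us):
    """Return alarm messages for one window of one-way latency samples.

    samples_us -- non-empty list of measurements in microseconds
    Jitter is the mean absolute difference between consecutive samples
    (an assumed definition for this sketch).
    """
    alarms = []
    average_us = mean(samples_us)
    deltas = [abs(b - a) for a, b in zip(samples_us, samples_us[1:])]
    jitter_us = mean(deltas) if deltas else 0.0

    if average_us > latency_threshold_us:
        alarms.append(f"latency threshold crossed: "
                      f"{average_us:.1f} us > {latency_threshold_us:.1f} us")
    if jitter_us > jitter_threshold_us:
        alarms.append(f"jitter alarm: "
                      f"{jitter_us:.1f} us > {jitter_threshold_us:.1f} us")
    return alarms


# Hypothetical SLA: 505 us average latency, 2 us jitter
print(check_latency_window([500.0, 502.0, 498.0, 501.0], 505.0, 2.0))
```

A real monitoring system would feed such a check from continuous measurements and pass the alarms to the rerouting logic described under Dynamic Routing.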

6.4 Industry-Specific Implementations

Different industries have unique latency requirements and implementation considerations:

- Financial Services:

  - Deploy dedicated, physical point-to-point dark fiber connections between trading facilities

  - Minimize or eliminate intermediate equipment

  - Consider hollow-core fiber for critical routes despite higher cost

  - Implement precision timing and synchronization

- Interactive Entertainment:

  - Focus on consistent latency rather than absolute minimum values

  - Implement edge computing to bring resources closer to users

  - Design for peak load conditions to avoid latency spikes

- IoT and Cloud Services:

  - Distribute data centers strategically to minimize distance to end users

  - Implement intelligent caching and content delivery networks

  - Optimize for both latency and jitter

- 5G and Autonomous Systems:

  - Design for ultra-reliable low latency communication (URLLC)

  - Implement mobile edge computing (MEC) to minimize backhaul latency

  - Ensure comprehensive coverage to maintain consistent performance

7. Future Trends and Research Directions

7.1 Advanced Fiber Technologies

Research into novel fiber designs continues to push the boundaries of latency reduction:

- Next-Generation Hollow-Core Fibers: Improvements in hollow-core fiber design are reducing attenuation while maintaining the latency advantage. Research aims to achieve attenuation values closer to standard SMF while providing 30-40% latency reduction.

- Multi-Core and Few-Mode Fibers: Although primarily developed for capacity enhancement, these fibers might offer latency advantages through spatial mode management.

- Engineered Refractive Index Profiles: Custom-designed refractive index profiles could optimize for both latency and other transmission characteristics.

7.2 Integrated Photonics

The miniaturization and integration of optical components promise significant latency reductions:

- Silicon Photonics: Integration of multiple optical functions onto silicon chips can reduce physical distances between components and minimize latency.

- Photonic Integrated Circuits (PICs): These offer the potential to replace multiple discrete components with a single integrated device, reducing both physical size and signal propagation time.

- Co-packaged Optics: Bringing optical interfaces closer to electronic switches and routers reduces the need for electrical traces and interconnects, potentially lowering latency.

7.3 Machine Learning for Latency Optimization

Artificial intelligence and machine learning techniques are being applied to latency optimization:

- Predictive Routing: ML algorithms can predict network conditions and optimize routes based on expected latency performance.

- Dynamic Resource Allocation: Intelligent systems can allocate network resources based on application latency requirements and current network conditions.

- Anomaly Detection: ML can identify latency anomalies and potential issues before they impact service quality.

7.4 Quantum Communications

Quantum technologies may eventually offer novel approaches to latency reduction:

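- Quantum Entanglement: Entanglement cannot transmit information faster than light, because a classical channel is still required, so it does not reduce propagation delay. Its potential lies in new communication protocols whose latency characteristics differ from those of classical systems.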

- Quantum Repeaters: These could extend the reach of quantum networks without the latency penalties associated with classical regeneration.

8. Conclusion

Latency in optical networks represents a complex interplay of physical constraints, component characteristics, and processing overhead. As applications become increasingly sensitive to delay, understanding and optimizing these factors becomes crucial for network designers and operators.

The fundamental limits imposed by the speed of light in optical fiber establish a baseline for latency that cannot be overcome without changing the transmission medium itself. However, significant improvements can be achieved through careful route planning, fiber selection, component optimization, and protocol design.

Different applications have varying latency requirements, from the sub-microsecond demands of high-frequency trading to the more moderate needs of cloud services. Meeting these diverse requirements requires a tailored approach that considers the specific characteristics and priorities of each use case.

Looking forward, advances in fiber technology, integrated photonics, machine learning, and potentially quantum communications promise to push the boundaries of what’s possible in low-latency optical networking. As research continues and technology evolves, we can expect further reductions in latency and improvements in network performance.

For network operators and service providers, the ability to deliver low and predictable latency will increasingly become a competitive differentiator. Those who can provide networks with lower and more stable latency will gain advantages in business competition across multiple industries, from finance to entertainment, cloud computing, and beyond.

9. References

- ITU-T G.652 – Characteristics of a single-mode optical fibre and cable

- ITU-T G.653 – Characteristics of a dispersion-shifted single-mode optical fibre and cable

- ITU-T G.655 – Characteristics of a non-zero dispersion-shifted single-mode optical fibre and cable

- Poletti, F., et al. “Towards high-capacity fibre-optic communications at the speed of light in vacuum.” Nature Photonics 7, 279–284 (2013).

- Feuer, M.D., et al. “Joint Digital Signal Processing Receivers for Spatial Superchannels.” IEEE Photonics Technology Letters 24, 1957-1960 (2012).

- Layec, P., et al. “Low Latency FEC for Optical Communications.” Journal of Lightwave Technology 37, 3643-3654 (2019).

- MapYourTech, “Latency in Fiber Optic Networks,” 2025.

- Optical Internetworking Forum (OIF), “Implementation Agreement for CFP2-Analog Coherent Optics Module” (2018).

- “LATENCY IN OPTICAL TRANSMISSION NETWORKS 101,” 2025.

- Savory, S.J. “Digital Coherent Optical Receivers: Algorithms and Subsystems.” IEEE Journal of Selected Topics in Quantum Electronics 16, 1164-1179 (2010).

- Winzer, P.J. “High-Spectral-Efficiency Optical Modulation Formats.” Journal of Lightwave Technology 30, 3824-3835 (2012).

- Ip, E., et al. “Coherent detection in optical fiber systems.” Optics Express 16, 753-791 (2008).

- Agrawal, G.P. “Fiber-Optic Communication Systems,” 4th Edition, Wiley (2010).

- Richardson, D.J., et al. “Space-division multiplexing in optical fibres.” Nature Photonics 7, 354–362 (2013).

- Kikuchi, K. “Fundamentals of Coherent Optical Fiber Communications.” Journal of Lightwave Technology 34, 157-179 (2016).

Network management is crucial for maintaining the performance, reliability, and security of modern communication networks. With the rapid growth of network scales, from small networks with a handful of Network Elements (NEs) to complex infrastructures comprising millions of NEs, selecting the appropriate management systems and protocols becomes essential. This article delves into the multifaceted aspects of network management, emphasizing optical networks and networking device management systems. It explores the best practices and tools suitable for varying network scales, integrates context from all layers of network management, and provides practical examples to guide network administrators in the era of automation.

1. Introduction to Network Management

Network Management encompasses a wide range of activities and processes aimed at ensuring that network infrastructure operates efficiently, reliably, and securely. It involves the administration, operation, maintenance, and provisioning of network resources. Effective network management is pivotal for minimizing downtime, optimizing performance, and ensuring compliance with service-level agreements (SLAs).

Key functions of network management include:

- Configuration Management: Setting up and maintaining network device configurations.

- Fault Management: Detecting, isolating, and resolving network issues.

- Performance Management: Monitoring and optimizing network performance.

- Security Management: Protecting the network from unauthorized access and threats.

- Accounting Management: Tracking network resource usage for billing and auditing.

In modern networks, especially optical networks, the complexity and scale demand advanced management systems and protocols to handle diverse and high-volume data efficiently.

2. Importance of Network Management in Optical Networks

Optical networks, such as Dense Wavelength Division Multiplexing (DWDM) and Optical Transport Networks (OTN), form the backbone of global communication infrastructures, providing high-capacity, long-distance data transmission. Effective network management in optical networks is critical for several reasons:

- High Throughput and Low Latency: Optical networks handle vast amounts of data with minimal delay, necessitating precise management to maintain performance.

- Fault Tolerance: Ensuring quick detection and resolution of faults to minimize downtime is vital for maintaining service reliability.

- Scalability: As demand grows, optical networks must scale efficiently, requiring robust management systems to handle increased complexity.

- Resource Optimization: Efficiently managing wavelengths, channels, and transponders to maximize network capacity and performance.

- Quality of Service (QoS): Maintaining optimal signal integrity and minimizing bit error rates (BER) through careful monitoring and adjustments.

Managing optical networks involves specialized protocols and tools tailored to handle the unique characteristics of optical transmission, such as signal power levels, wavelength allocations, and fiber optic health metrics.

3. Network Management Layers

Network management can be conceptualized through various layers, each addressing different aspects of managing and operating a network. This layered approach helps in organizing management functions systematically.

3.1. Lifecycle Management (LCM)

Lifecycle Management oversees the entire lifecycle of network devices—from procurement and installation to maintenance and decommissioning. It ensures that devices are appropriately managed throughout their operational lifespan.

- Procurement: Selecting and acquiring network devices.

- Installation: Deploying devices and integrating them into the network.

- Maintenance: Regular updates, patches, and hardware replacements.

- Decommissioning: Safely retiring old devices from the network.

Example: In an optical network, LCM ensures that new DWDM transponders are integrated seamlessly, firmware is kept up-to-date, and outdated transponders are safely removed.

3.2. Network Service Management (NSM)

Network Service Management focuses on managing the services provided by the network. It includes the provisioning, configuration, and monitoring of network services to meet user requirements.

- Service Provisioning: Allocating resources and configuring services like VLANs, MPLS, or optical channels.

- Service Assurance: Monitoring service performance and ensuring SLAs are met.

- Service Optimization: Adjusting configurations to optimize service quality and resource usage.

Example: Managing optical channels in a DWDM system to ensure that each channel operates within its designated wavelength and power parameters to maintain high data throughput.

3.3. Element Management Systems (EMS)

Element Management Systems are responsible for managing individual network elements (NEs) such as routers, switches, and optical transponders. EMS handles device-specific configurations, monitoring, and fault management.

- Device Configuration: Setting up device parameters and features.

- Monitoring: Collecting device metrics and health information.

- Fault Management: Detecting and addressing device-specific issues.

Example: An EMS for a DWDM system manages each optical transponder’s settings, monitors signal strength, and alerts operators to any deviations from normal parameters.

3.4. Business Support Systems (BSS)

Business Support Systems interface the network with business processes. They handle aspects like billing, customer relationship management (CRM), and service provisioning from a business perspective.

- Billing and Accounting: Tracking resource usage for billing purposes.

- CRM Integration: Managing customer information and service requests.

- Service Order Management: Handling service orders and provisioning.

Example: BSS integrates with network management systems to automate billing based on the optical channel usage in an OTN setup, ensuring accurate and timely invoicing.

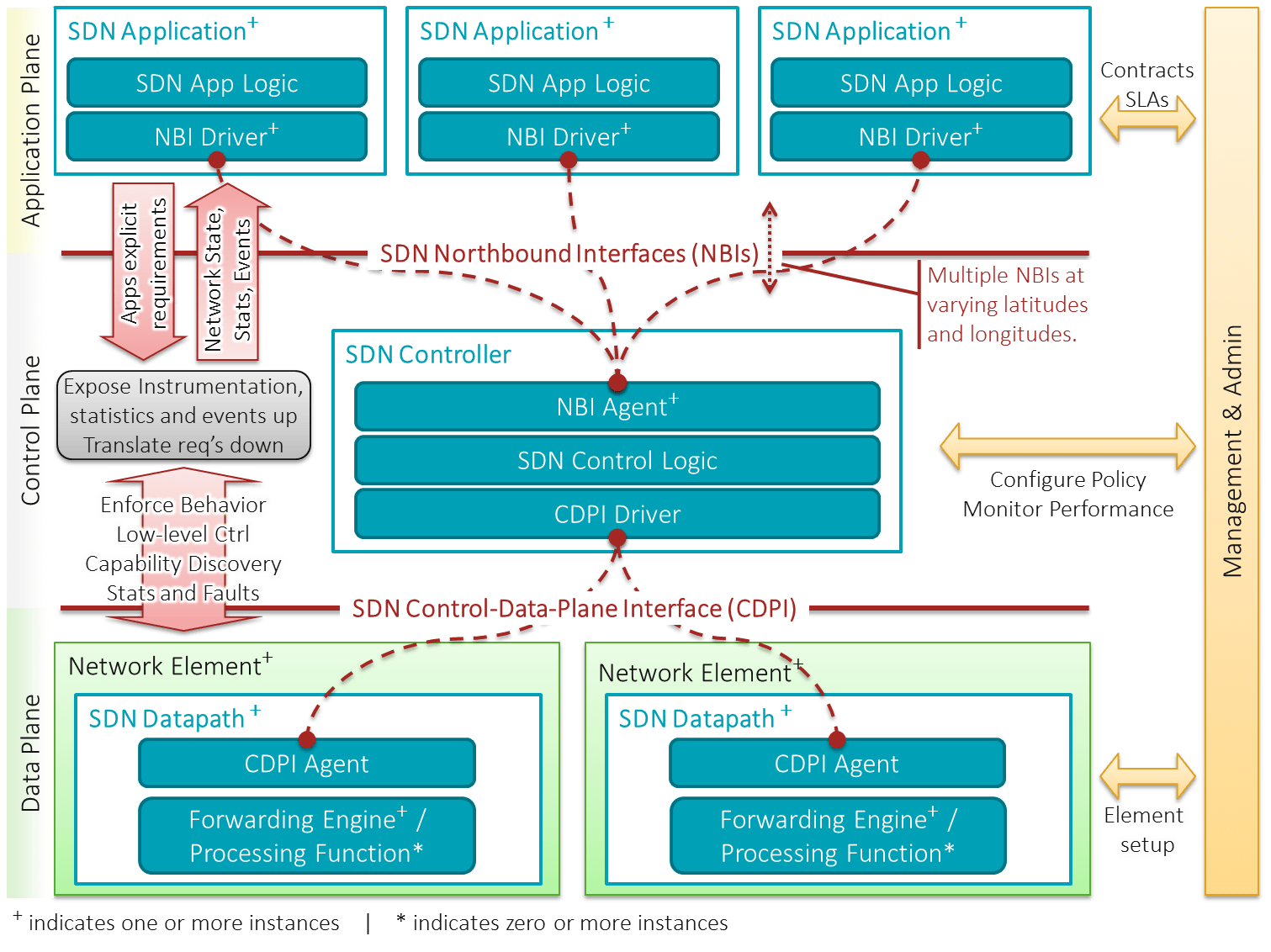

3.5. Software-Defined Networking (SDN) Orchestrators and Controllers

SDN Orchestrators and Controllers provide centralized management and automation capabilities, decoupling the control plane from the data plane. They enable dynamic network configuration and real-time adjustments based on network conditions.

- SDN Controller: Manages the network’s control plane, making decisions about data flow and configurations.

- SDN Orchestrator: Coordinates multiple controllers and automates complex workflows across the network.

Image Credit: Wiki

Example: In an optical network, an SDN orchestrator can dynamically adjust wavelength allocations in response to real-time traffic demands, optimizing network performance and resource utilization.
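
One simple way to picture wavelength allocation is the first-fit heuristic: pick the lowest-numbered channel that is free on every link of the path. The sketch below uses first-fit purely for illustration; it is not the algorithm of any particular orchestrator, and the topology and channel count are made up.

```python
def first_fit(path_links, in_use, num_channels):
    """Return the lowest channel index free on every link of the path, or None.

    in_use maps a link name to the set of channel indices already occupied.
    """
    for channel in range(num_channels):
        if all(channel not in in_use.get(link, set()) for link in path_links):
            return channel
    return None


def allocate(path_links, in_use, num_channels):
    """Reserve a channel along the whole path and return it (None if blocked)."""
    channel = first_fit(path_links, in_use, num_channels)
    if channel is not None:
        for link in path_links:
            in_use.setdefault(link, set()).add(channel)
    return channel


# Hypothetical topology A-B-C with 4 channels per link.
in_use = {"A-B": {0, 1}, "B-C": {1, 2}}
chosen = allocate(["A-B", "B-C"], in_use, num_channels=4)
print("allocated channel:", chosen)
```

Because the same channel must be free end to end, a path can be blocked even when each individual link still has spare channels.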

4. Network Management Protocols and Standards

Effective network management relies on various protocols and standards designed to facilitate communication between management systems and network devices. This section explores key protocols, their functionalities, and relevant standards.

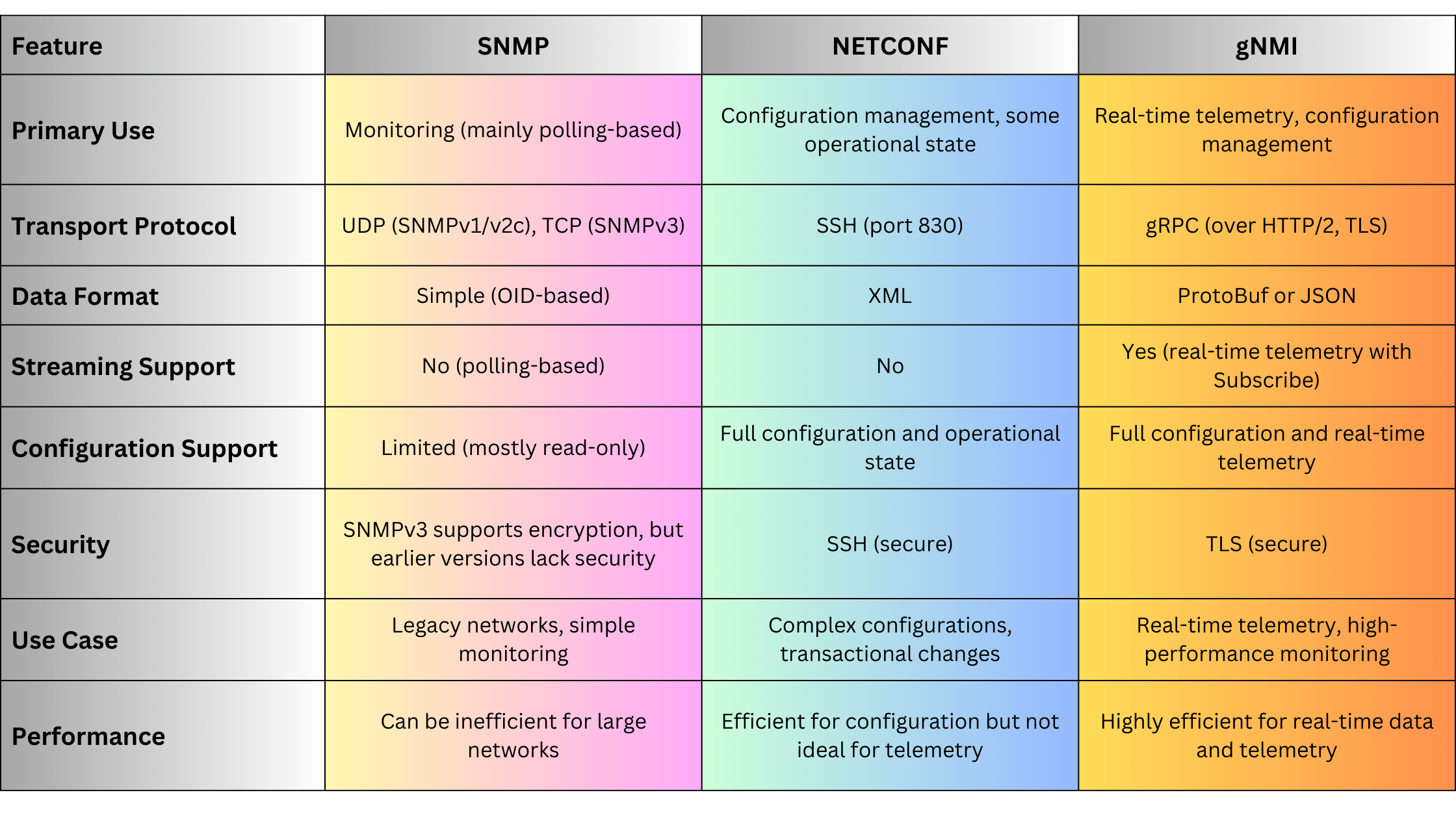

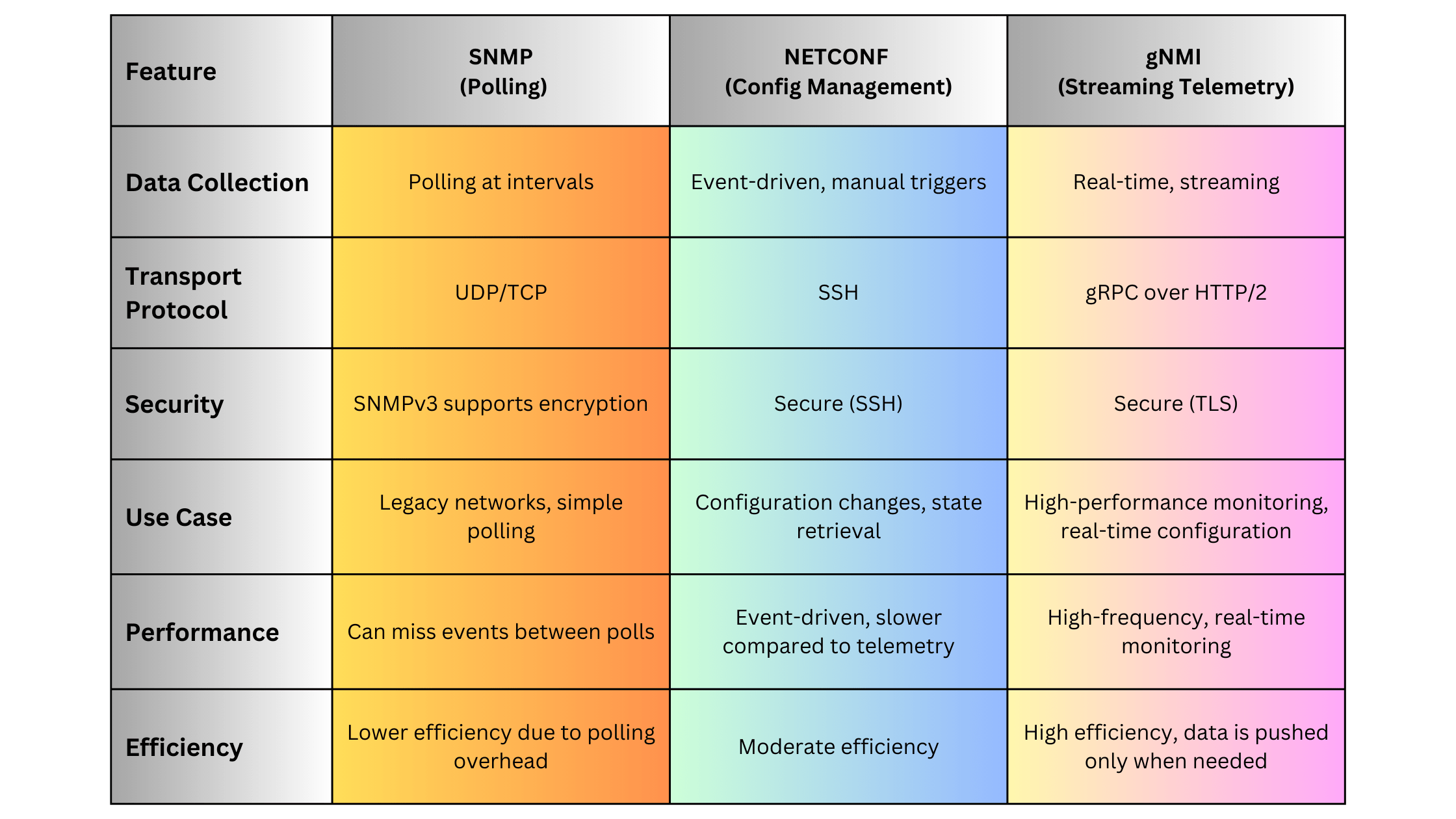

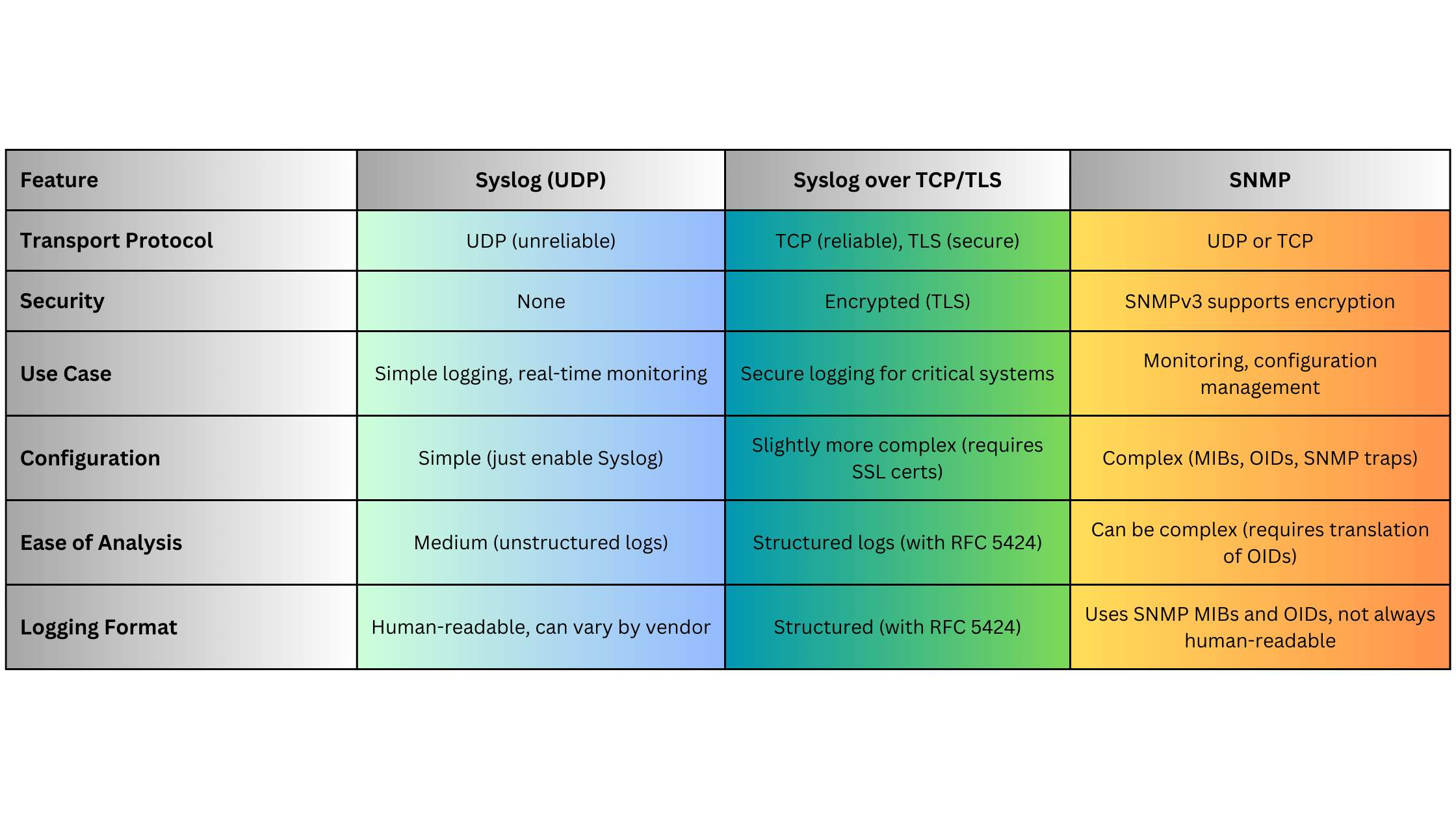

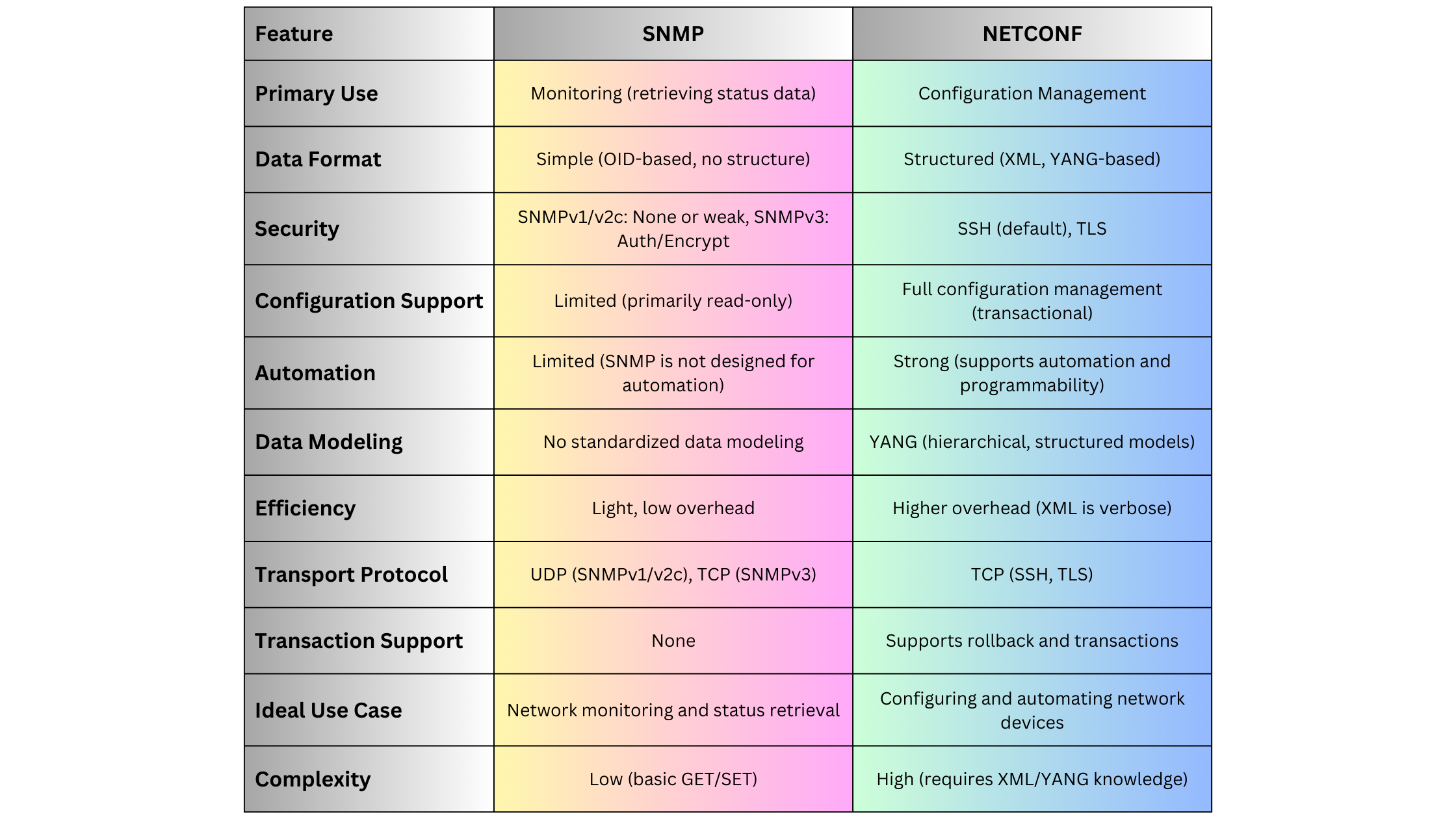

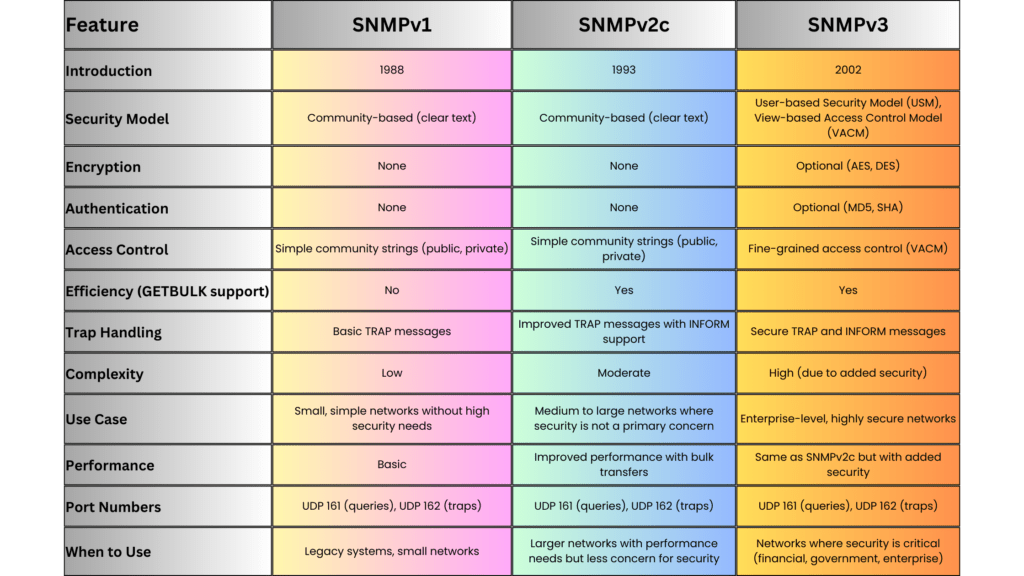

4.1. SNMP (Simple Network Management Protocol)

SNMP is one of the oldest and most widely used network management protocols, primarily for monitoring and managing network devices.

- Versions: SNMPv1, SNMPv2c, SNMPv3

- Standards:

- RFC 1157: SNMPv1

- RFC 1901-1908: SNMPv2c (RFC 1905 covers the protocol operations)

- RFC 3411-3418: SNMPv3

Key Features:

- Monitoring: Collection of device metrics (e.g., CPU usage, interface status).

- Configuration: Basic configuration through SNMP SET operations.

- Trap Messages: Devices can send unsolicited alerts (traps) to managers.

Advantages:

- Simplicity: Easy to implement and use for basic monitoring.

- Wide Adoption: Supported by virtually all network devices.

- Low Overhead: Lightweight protocol suitable for simple tasks.

Disadvantages:

- Security: SNMPv1 and SNMPv2c lack robust security features. SNMPv3 addresses this but is more complex.

- Limited Functionality: Primarily designed for monitoring, with limited configuration capabilities.

- Scalability Issues: Polling large numbers of devices can generate significant network traffic.

Use Cases:

- Small to medium-sized networks for basic monitoring and alerting.

- Legacy systems where advanced management protocols are not supported.
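
The polling-traffic concern listed under Disadvantages can be made concrete with a rough estimate. The figures below (50 OIDs per device, 200 bytes per request/response pair, a 60-second interval) are assumptions chosen for illustration, not measurements.

```python
def polling_load_bps(devices, oids_per_device, bytes_per_exchange, interval_s):
    """Average polling traffic in bits per second.

    bytes_per_exchange is the combined request + response size for one OID.
    """
    total_bytes = devices * oids_per_device * bytes_per_exchange
    return total_bytes * 8 / interval_s


# Assumed figures: 200 bytes per exchange, one poll cycle every 60 seconds.
small = polling_load_bps(devices=10, oids_per_device=50,
                         bytes_per_exchange=200, interval_s=60)
large = polling_load_bps(devices=10_000, oids_per_device=50,
                         bytes_per_exchange=200, interval_s=60)
print(f"10 devices:     {small / 1e3:.1f} kbit/s")
print(f"10,000 devices: {large / 1e6:.1f} Mbit/s")
```

The load grows linearly with the device count, which is why large networks often shorten OID lists, lengthen intervals, or move to streaming telemetry.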

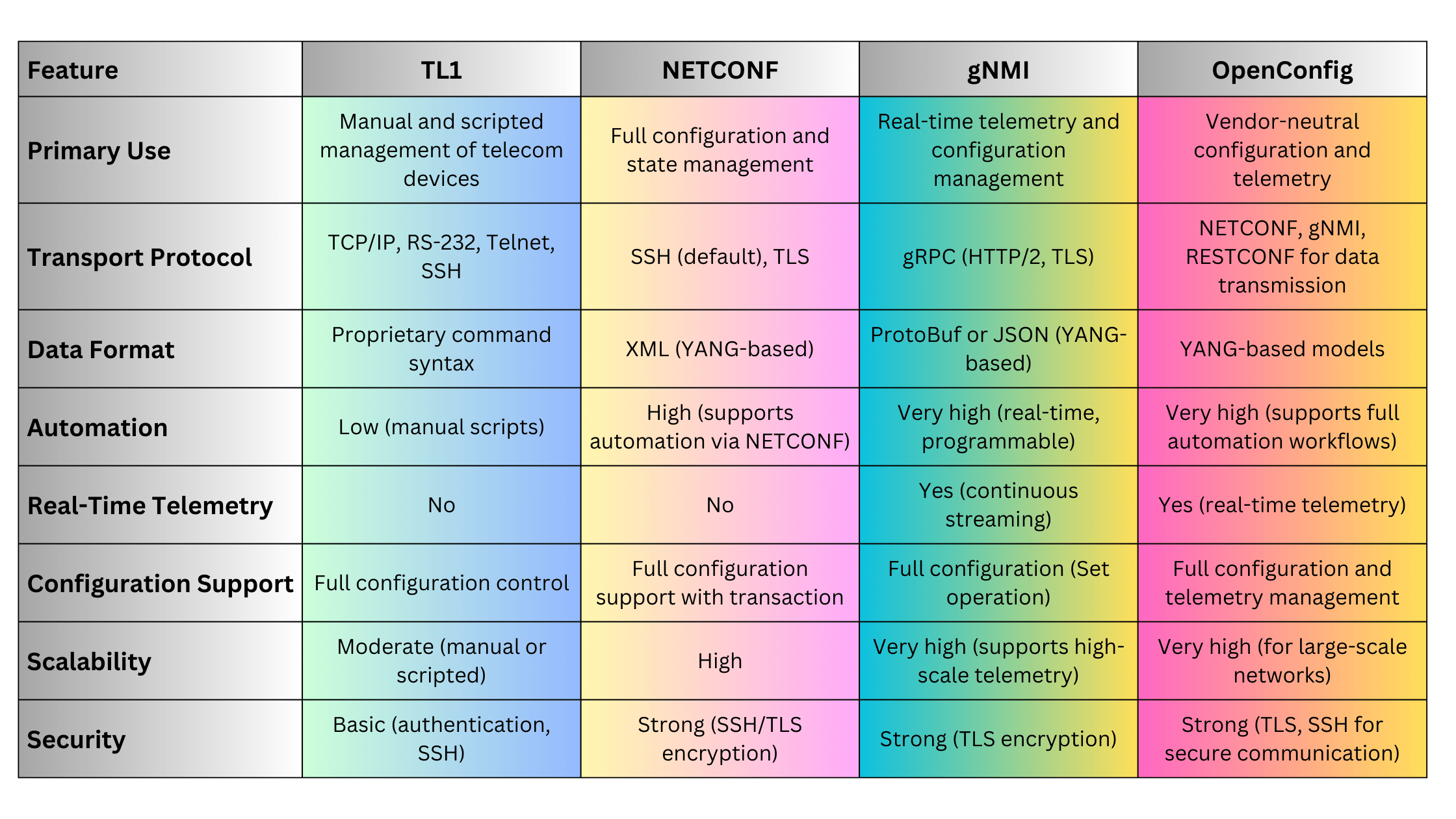

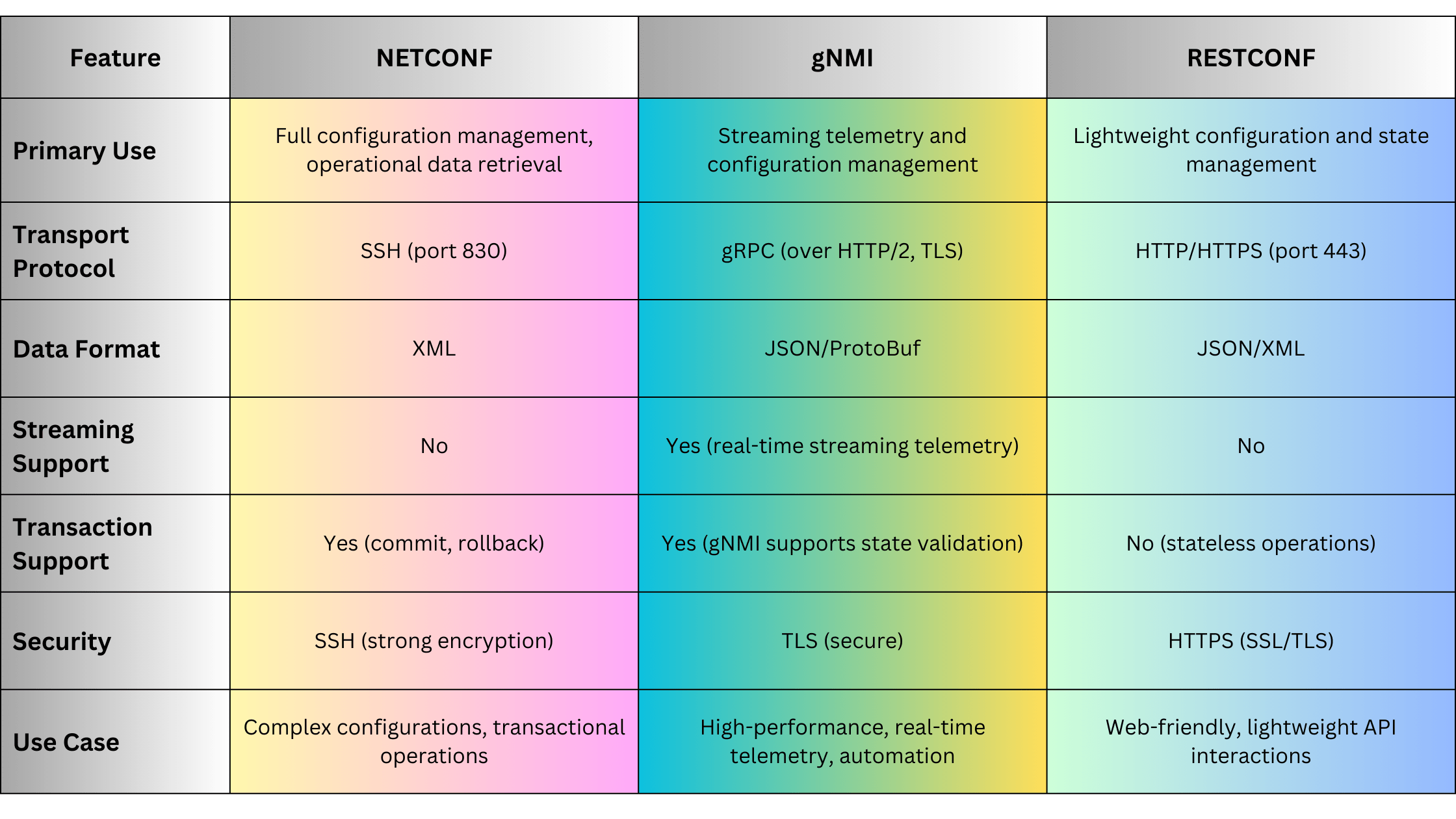

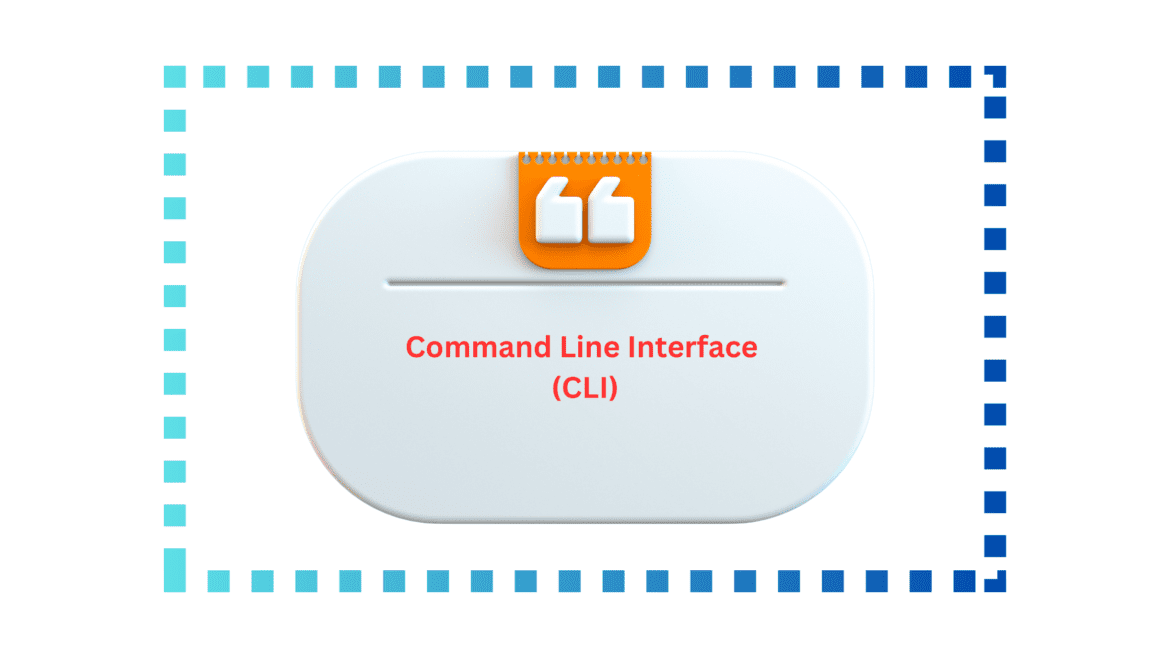

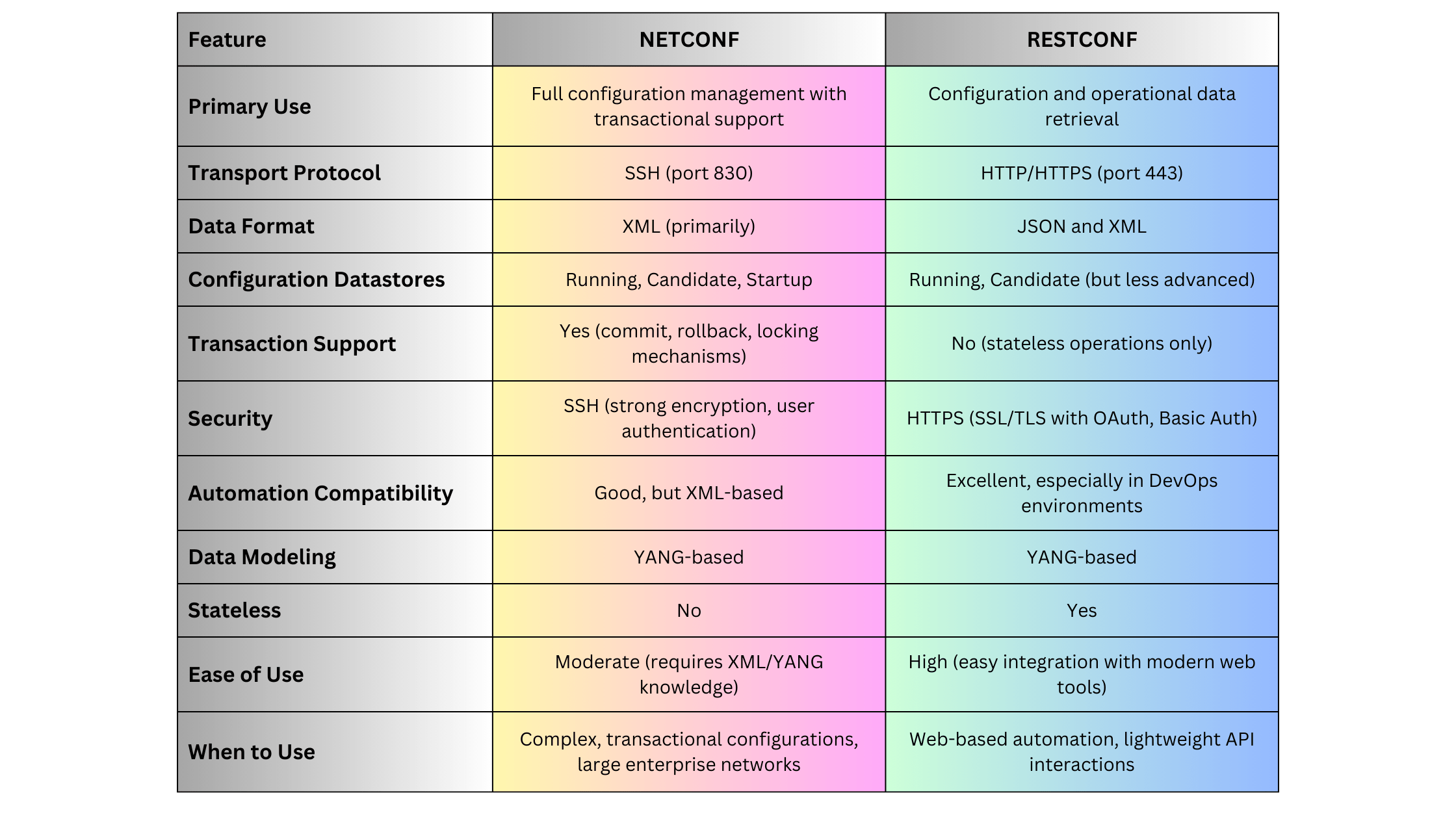

4.2. NETCONF (Network Configuration Protocol)

NETCONF is a modern network management protocol designed to provide a standardized way to configure and manage network devices.

- Version: NETCONF v1.1

- Standards:

- RFC 6241: NETCONF Protocol

- RFC 6242: NETCONF over SSH

- RFC 7589: NETCONF over TLS

Key Features:

- Structured Configuration: Uses XML-encoded data described by YANG data models for precise configuration.

- Transactional Operations: Supports atomic commits and rollbacks to ensure configuration integrity.

- Extensibility: Modular and extensible, allowing for customization and new feature integration.

Advantages:

- Granular Control: Detailed configuration capabilities through YANG models.

- Transaction Support: Ensures consistent configuration changes with commit and rollback features.

- Secure: Typically operates over SSH or TLS, providing strong security.

Disadvantages:

- Complexity: Requires understanding of YANG data models and XML.

- Resource Intensive: Can be more demanding in terms of processing and bandwidth compared to SNMP.

Use Cases:

- Medium to large-sized networks requiring precise configuration and management.

- Environments where transactional integrity and security are paramount.
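
The transactional flow can be illustrated by building the two RPCs involved: an `<edit-config>` against the candidate datastore, followed by a `<commit>`. The sketch below only constructs the XML with Python's standard library and does not open a session. The `urn:example:optical-channel` namespace and its `channel` elements are hypothetical stand-ins for a real YANG model, and the device is assumed to advertise the candidate capability.

```python
import xml.etree.ElementTree as ET

NC_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"
# Hypothetical YANG module namespace, used only for illustration.
EXAMPLE_NS = "urn:example:optical-channel"


def build_edit_config(message_id, channel_name, target_power_dbm):
    """Build an <edit-config> RPC that writes to the candidate datastore."""
    rpc = ET.Element(f"{{{NC_NS}}}rpc", {"message-id": str(message_id)})
    edit = ET.SubElement(rpc, f"{{{NC_NS}}}edit-config")
    target = ET.SubElement(edit, f"{{{NC_NS}}}target")
    ET.SubElement(target, f"{{{NC_NS}}}candidate")
    config = ET.SubElement(edit, f"{{{NC_NS}}}config")
    channel = ET.SubElement(config, f"{{{EXAMPLE_NS}}}channel")
    ET.SubElement(channel, f"{{{EXAMPLE_NS}}}name").text = channel_name
    ET.SubElement(channel, f"{{{EXAMPLE_NS}}}target-power").text = str(target_power_dbm)
    return rpc


def build_commit(message_id):
    """Build the <commit> RPC that activates the candidate configuration."""
    rpc = ET.Element(f"{{{NC_NS}}}rpc", {"message-id": str(message_id)})
    ET.SubElement(rpc, f"{{{NC_NS}}}commit")
    return rpc


ET.register_namespace("nc", NC_NS)
edit_rpc = build_edit_config(101, "ch-1", -2.5)
commit_rpc = build_commit(102)
print(ET.tostring(edit_rpc, encoding="unicode"))
print(ET.tostring(commit_rpc, encoding="unicode"))
```

Nothing takes effect on the running configuration until the commit is accepted, which is what makes an all-or-nothing change possible. In practice a client library such as ncclient would handle the session and framing.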

4.3. RESTCONF

RESTCONF is a RESTful API-based protocol that builds upon NETCONF principles, providing a simpler and more accessible interface for network management.

- Version: RESTCONF v1.0

- Standards:

- RFC 8040: RESTCONF Protocol

Key Features:

- RESTful Architecture: Utilizes standard HTTP methods (GET, POST, PUT, PATCH, DELETE) for network management.

- Data Formats: Supports JSON and XML, making it compatible with modern web applications.

- YANG Integration: Uses YANG data models for defining network configurations and states.

Advantages:

- Ease of Use: Familiar RESTful API design makes it easier for developers to integrate with web-based tools.

- Flexibility: Can be easily integrated with various automation and orchestration platforms.

- Lightweight: Less overhead compared to NETCONF’s XML-based communication.

Disadvantages:

- Limited Transaction Support: Does not inherently support transactional operations like NETCONF.

- Security Complexity: While secure over HTTPS, integrating with OAuth or other authentication mechanisms can add complexity.

Use Cases:

- Environments where integration with web-based applications and automation tools is required.

- Networks that benefit from RESTful interfaces for easier programmability and accessibility.
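
To show what a RESTCONF call looks like, the sketch below prepares (but does not send) a PATCH request for one interface from the standard `ietf-interfaces` YANG module. The device address is hypothetical, and `/restconf` is assumed as the API root; a real client would discover the root from the device.

```python
import json
from urllib.parse import quote
from urllib.request import Request


def restconf_data_url(base, module, container, list_name, key):
    """Build an RFC 8040 data-resource URL for a single list entry."""
    # Key values are percent-encoded so characters like "/" survive in the path.
    return f"{base}/restconf/data/{module}:{container}/{list_name}={quote(key, safe='')}"


def build_patch(url, payload):
    """Prepare (but do not send) a PATCH request with a YANG-data JSON body."""
    return Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="PATCH",
        headers={
            "Content-Type": "application/yang-data+json",
            "Accept": "application/yang-data+json",
        },
    )


# Hypothetical device address and interface name.
if_name = "GigabitEthernet0/0/1"
url = restconf_data_url("https://device.example.net", "ietf-interfaces",
                        "interfaces", "interface", if_name)
body = {"ietf-interfaces:interface": [{"name": if_name, "enabled": True}]}
request = build_patch(url, body)
print(request.get_method(), request.full_url)
```

The YANG module name appears directly in the URL and in the JSON body, which is how RESTCONF ties plain HTTP resources back to the data model.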

4.4. gNMI (gRPC Network Management Interface)

gNMI is a high-performance network management protocol designed for real-time telemetry and configuration management, particularly suitable for large-scale and dynamic networks.

- Version: gNMI v0.7.x

- Standards: gNMI specification published by the OpenConfig project

Key Features:

- Streaming Telemetry: Supports real-time, continuous data streaming from devices to management systems.

- gRPC-Based: Utilizes the efficient gRPC framework over HTTP/2 for low-latency communication.

- YANG Integration: Leverages YANG data models for consistent configuration and telemetry data.

Advantages:

- Real-Time Monitoring: Enables high-frequency, real-time data collection for performance monitoring and fault detection.

- Efficiency: Optimized for high throughput and low latency, making it ideal for large-scale networks.

- Automation-Friendly: Easily integrates with modern automation frameworks and tools.

Disadvantages:

- Complexity: Requires familiarity with gRPC, YANG, and modern networking concepts.

- Infrastructure Requirements: Requires scalable telemetry collectors and robust backend systems to handle high-volume data streams.

Use Cases:

- Large-scale networks requiring real-time performance monitoring and dynamic configuration.

- Environments that leverage software-defined networking (SDN) and network automation.
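
gNMI addresses data with YANG-style paths whose list keys sit in square brackets. The sketch below splits such a path string into elements and keys, then describes a sampled streaming subscription as a plain dictionary. It is a simplified stand-in for the protobuf messages a real gNMI client would send: the dictionary field names are illustrative, the component name `och-1` is hypothetical, and escaped characters inside key values are not handled.

```python
import re

_ELEM = re.compile(r"([^/\[\]]+)((?:\[[^\]]+\])*)")
_KEY = re.compile(r"\[([^=\]]+)=([^\]]*)\]")


def parse_path(path):
    """Split a gNMI-style path string into (element name, key dict) pairs."""
    return [(name, dict(_KEY.findall(keys))) for name, keys in _ELEM.findall(path)]


# Plain-dict description of a streaming subscription (not the wire format).
subscription = {
    "mode": "STREAM",
    "subscriptions": [{
        "path": parse_path(
            "/components/component[name=och-1]/optical-channel/state/output-power"
        ),
        "mode": "SAMPLE",
        "sample_interval_ns": 10 * 1_000_000_000,  # one sample every 10 seconds
    }],
}

for elem in parse_path("/interfaces/interface[name=Ethernet1/1]/state/counters"):
    print(elem)
```

With a subscription like this, the device pushes a new value at each interval instead of waiting to be polled.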

4.5. TL1 (Transaction Language 1)

TL1 is a legacy network management protocol widely used in telecom networks, particularly for managing optical network elements.

- Standards:

- Telcordia GR-833-CORE

- ITU-T G.773

- Versions: Varies by vendor/implementation

Key Features:

- Command-Based Interface: Uses structured text commands for managing network devices.

- Manual and Scripted Management: Supports both interactive command input and automated scripting.

- Vendor-Specific Extensions: Often includes proprietary commands tailored to specific device functionalities.

Advantages:

- Simplicity: Easy to learn and use for operators familiar with CLI-based management.

- Wide Adoption in Telecom: Supported by many legacy optical and telecom devices.

- Granular Control: Allows detailed configuration and monitoring of individual network elements.

Disadvantages:

- Limited Automation: Lacks the advanced automation capabilities of modern protocols.

- Proprietary Nature: Vendor-specific commands can lead to compatibility issues across different devices.

- No Real-Time Telemetry: Designed primarily for manual or scripted command entry without native support for continuous data streaming.

Use Cases:

- Legacy telecom and optical networks where TL1 is the standard management protocol.

- Environments requiring detailed, device-specific configurations that are not available through modern protocols.
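
TL1 input messages follow a colon-separated layout: command code, target identifier (TID), access identifier (AID), correlation tag (CTAG), a general block, and parameters, terminated by a semicolon. The helper below assembles such strings. The node name, user, and password are hypothetical, and individual vendors may expect additional or different fields.

```python
def build_tl1(command, tid="", aid="", ctag="1", general="", params=()):
    """Assemble a TL1 input message: CMD:TID:AID:CTAG:GENERAL:PARAMS;"""
    blocks = [command, tid, aid, ctag]
    if general or params:
        blocks.append(general)          # general block (often empty)
    if params:
        blocks.append(",".join(params))  # comma-separated payload
    return ":".join(blocks) + ";"


# Retrieve all alarms from a node, then log in (hypothetical credentials).
retrieve_alarms = build_tl1("RTRV-ALM-ALL", tid="NODE1", ctag="100")
login = build_tl1("ACT-USER", tid="NODE1", aid="admin", ctag="101", params=["secret"])
print(retrieve_alarms)
print(login)
```

The CTAG is echoed back in the response, which lets a script match replies to the commands it sent.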

4.6. CLI (Command Line Interface)

CLI is a fundamental method for managing network devices, providing direct access to device configurations and status through text-based commands.

- Standards: Vendor-specific, no universal standard.

- Versions: Varies by vendor (e.g., Cisco IOS, Juniper Junos, Huawei VRP)

Key Features:

- Text-Based Commands: Allows direct manipulation of device configurations through structured commands.

- Interactive and Scripted Use: Can be used interactively or automated using scripts.

- Universal Availability: Present on virtually all network devices, including routers, switches, and optical equipment.

Advantages:

- Flexibility: Offers detailed and granular control over device configurations.

- Speed: Allows quick execution of commands, especially for power users familiar with the syntax.

- Universality: Supported across all major networking vendors, ensuring broad applicability.

Disadvantages:

- Steep Learning Curve: Requires familiarity with specific command syntax and vendor-specific nuances.

- Error-Prone: Manual command entry increases the risk of human errors, which can lead to misconfigurations.

- Limited Scalability: Managing large numbers of devices through CLI can be time-consuming and inefficient compared to automated protocols.

Use Cases:

- Manual configuration and troubleshooting of network devices.

- Environments where precise, low-level device management is required.

- Small to medium-sized networks where automation is limited or not essential.

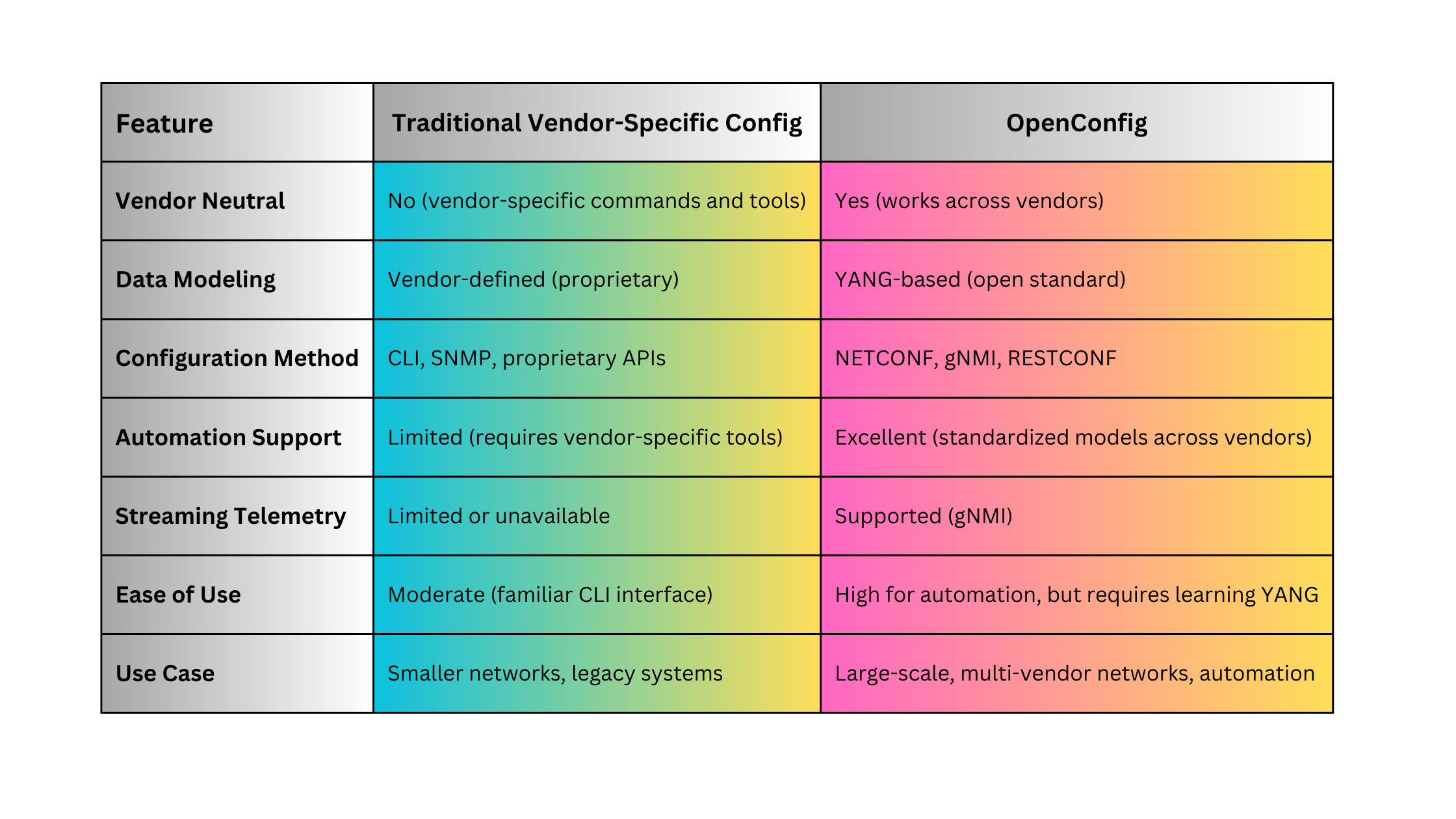

4.7. OpenConfig

OpenConfig is an open-source, vendor-neutral initiative designed to standardize network device configurations and telemetry data across different vendors.

- Standards: OpenConfig models are community-driven and continuously evolving.

- Versions: Continuously updated YANG-based models.

Key Features:

- Vendor Neutrality: Standardizes configurations and telemetry across multi-vendor environments.

- YANG-Based Models: Uses standardized YANG models for consistent data structures.

- Supports Modern Protocols: Integrates seamlessly with NETCONF, RESTCONF, and gNMI for configuration and telemetry.

Advantages:

- Interoperability: Facilitates unified management across diverse network devices from different vendors.

- Scalability: Designed to handle large-scale networks with automated management capabilities.

- Extensibility: Modular and adaptable to evolving network technologies and requirements.

Disadvantages:

- Adoption Rate: Not all vendors fully support OpenConfig models, limiting its applicability in mixed environments.

- Complexity: Requires understanding of YANG and modern network management protocols.

- Continuous Evolution: As an open-source initiative, models are frequently updated, necessitating ongoing adaptation.

Use Cases:

- Multi-vendor network environments seeking standardized management practices.

- Large-scale, automated networks leveraging modern protocols like gNMI and NETCONF.

- Organizations aiming to future-proof their network management strategies with adaptable and extensible models.

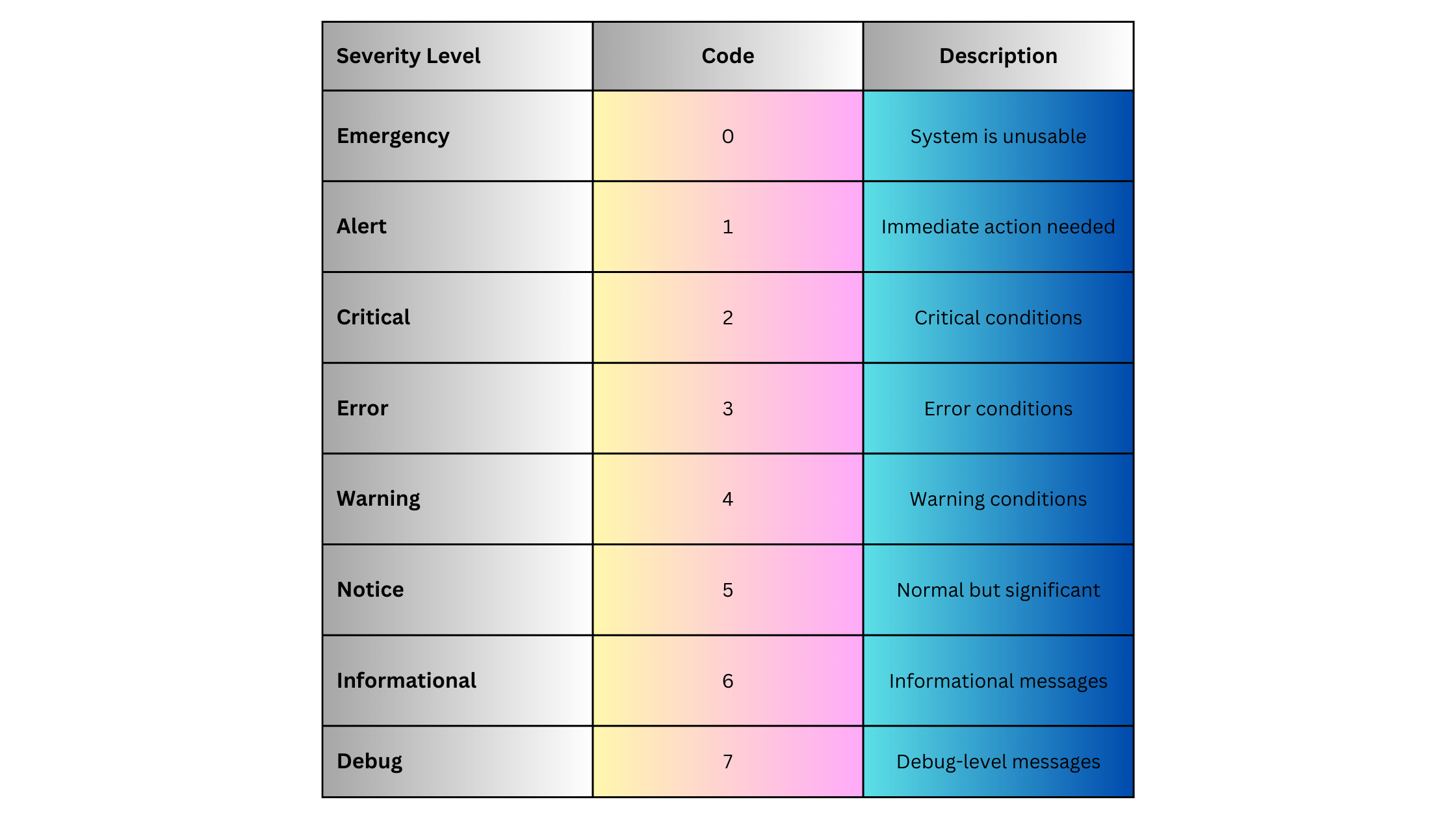

4.8. Syslog

Syslog is a standard for message logging, widely used for monitoring and troubleshooting network devices by capturing event messages.

- Version: Defined by RFC 5424

- Standards:

- RFC 3164: Original (BSD) Syslog Protocol, obsoleted by RFC 5424

- RFC 5424: Syslog Protocol (Enhanced)

Key Features:

- Event Logging: Captures and sends log messages from network devices to a centralized Syslog server.

- Severity Levels: Categorizes logs based on severity, from informational messages to critical alerts.

- Facility Codes: Identifies the source or type of the log message (e.g., kernel, user-level, security).

Advantages:

- Simplicity: Easy to implement and supported by virtually all network devices.

- Centralized Logging: Facilitates the aggregation and analysis of logs from multiple devices in one location.

- Real-Time Alerts: Enables immediate notification of critical events and issues.

Disadvantages:

- Unstructured Data: Traditional Syslog messages can be unstructured and vary by vendor, complicating log analysis.

- Reliability: UDP-based Syslog can result in message loss; however, TCP-based or Syslog over TLS solutions mitigate this issue.

- Scalability: Handling large volumes of log data requires robust Syslog servers and storage solutions.

Use Cases:

- Centralized monitoring and logging of network and optical devices.

- Real-time alerting and notification systems for network faults and security incidents.

- Compliance auditing and forensic analysis through aggregated log data.
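
Severity levels and facility codes are combined into a single priority value at the start of every message: PRI = facility × 8 + severity. The sketch below computes it and renders a minimal RFC 5424 line. The hostname, application name, and message text are made up, only a few facilities are listed, and the optional PROCID, MSGID, and structured-data fields are left as the nil value "-".

```python
from datetime import datetime, timezone

SEVERITIES = {"emerg": 0, "alert": 1, "crit": 2, "err": 3,
              "warning": 4, "notice": 5, "info": 6, "debug": 7}
FACILITIES = {"kern": 0, "user": 1, "daemon": 3, "auth": 4,
              "local0": 16, "local7": 23}


def priority(facility, severity):
    """PRI value as defined by RFC 5424: facility * 8 + severity."""
    return FACILITIES[facility] * 8 + SEVERITIES[severity]


def format_rfc5424(facility, severity, hostname, app, msg, when=None):
    """Render a minimal RFC 5424 line with a UTC timestamp."""
    when = (when or datetime.now(timezone.utc)).astimezone(timezone.utc)
    timestamp = when.strftime("%Y-%m-%dT%H:%M:%SZ")
    return f"<{priority(facility, severity)}>1 {timestamp} {hostname} {app} - - - {msg}"


stamp = datetime(2024, 1, 2, 3, 4, 5, tzinfo=timezone.utc)
line = format_rfc5424("local7", "err", "xpdr-1", "ems", "Rx power low", when=stamp)
print(line)
```

A collector reverses the calculation (facility = PRI // 8, severity = PRI % 8) to route and filter messages.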

5. Network Management Systems (NMS) and Tools

Network Management Systems (NMS) are comprehensive platforms that integrate various network management protocols and tools to provide centralized control, monitoring, and configuration capabilities. The choice of NMS depends on the scale of the network, specific requirements, and the level of automation desired.

5.1. For Small Networks (10 NEs)

Best Tools:

- PRTG Network Monitor: User-friendly, supports SNMP, Syslog, and other protocols. Ideal for small networks with basic monitoring needs.

- Nagios Core: Open-source, highly customizable, supports SNMP and Syslog. Suitable for administrators comfortable with configuring open-source tools.

- SolarWinds Network Performance Monitor (NPM): Provides a simple setup with powerful monitoring capabilities. Ideal for small to medium networks.

- Element Management System from any optical/networking vendor.

Features:

- Basic monitoring of device status, interface metrics, and uptime.

- Simple alerting mechanisms for critical events.

- Easy configuration with minimal setup complexity.

Example:

A small office network with a few routers, switches, and an optical transponder can use PRTG to monitor interface statuses, CPU usage, and power levels of optical devices via SNMP and Syslog.

5.2. For Medium Networks (100 NEs)

Best Tools:

- SolarWinds NPM: Scales well with medium-sized networks, offering advanced monitoring, alerting, and reporting features.

- Zabbix: Open-source, highly scalable, supports SNMP, NETCONF, RESTCONF, and gNMI. Suitable for environments requiring robust customization.

- Cisco Prime Infrastructure: Integrates seamlessly with Cisco devices, providing comprehensive management for medium-sized networks.

- Element Management System from any optical/networking vendor.

Features:

- Advanced monitoring with support for multiple protocols (SNMP, NETCONF).

- Enhanced alerting and notification systems.

- Configuration management and change tracking capabilities.

Example:

A medium-sized enterprise with multiple DWDM systems, routers, and switches can use Zabbix to monitor real-time performance metrics, configure devices via NETCONF, and receive alerts through Syslog messages.

5.3. For Large Networks (1,000 NEs)

Best Tools:

- Cisco DNA Center: Comprehensive management platform for large Cisco-based networks, offering automation, assurance, and advanced analytics.

- Juniper Junos Space: Scalable EMS for managing large Juniper networks, supporting automation and real-time monitoring.

- OpenNMS: Open-source, highly scalable, supports SNMP, RESTCONF, and gNMI. Suitable for diverse network environments.

- Network Management System from any optical/networking vendor.

Features:

- Centralized management with support for multiple protocols.

- High scalability and performance monitoring.

- Advanced automation and orchestration capabilities.

- Integration with SDN controllers and orchestration tools.

Example:

A large telecom provider managing thousands of optical transponders, DWDM channels, and networking devices can use Cisco DNA Center to automate configuration deployments, monitor network health in real-time, and optimize resource utilization through integrated SDN features.

5.4. For Enterprise and Massive Networks (500,000 to 1 Million NEs)

Best Tools:

- Ribbon LightSoft: Comprehensive network management solution for large-scale optical and IP networks.

- Nokia Network Services Platform (NSP): Highly scalable platform designed for massive network deployments, supporting multi-vendor environments.

- Huawei iManager U2000: Comprehensive network management solution for large-scale optical and IP networks.

- Splunk Enterprise: Advanced log management and analytics platform, suitable for handling vast amounts of Syslog data.

- Elastic Stack (ELK): Open-source solution for log aggregation, visualization, and analysis, ideal for massive log data volumes.

Features:

- Extreme scalability to handle millions of NEs.

- Advanced data analytics and machine learning for predictive maintenance and anomaly detection.

- Comprehensive automation and orchestration to manage complex network configurations.

- High-availability and disaster recovery capabilities.

Example:

A global internet service provider with a network spanning multiple continents, comprising millions of NEs including optical transponders, routers, switches, and data centers, can use Nokia NSP integrated with Splunk for real-time monitoring, automated configuration management through OpenConfig and gNMI, and advanced analytics to predict and prevent network failures.

6. Automation in Network Management

Automation in network management refers to the use of software tools and scripts to perform repetitive tasks, configure devices, monitor network performance, and respond to network events without manual intervention. Automation enhances efficiency, reduces errors, and allows network administrators to focus on more strategic activities.

6.1. Benefits of Automation

- Efficiency: Automates routine tasks, saving time and reducing manual workload.

- Consistency: Ensures uniform configuration and management across all network devices, minimizing discrepancies.

- Speed: Accelerates deployment of configurations and updates, enabling rapid scaling.

- Error Reduction: Minimizes human errors associated with manual configurations and monitoring.

- Scalability: Facilitates management of large-scale networks by handling complex tasks programmatically.

- Real-Time Responsiveness: Enables real-time monitoring and automated responses to network events and anomalies.

6.2. Automation Tools and Frameworks

- Ansible: Open-source automation tool that uses playbooks (YAML scripts) for automating device configurations and management tasks.

- Terraform: Infrastructure as Code (IaC) tool that automates the provisioning and management of network infrastructure.

- Python Scripts: Custom scripts leveraging libraries like Netmiko, Paramiko, and ncclient for automating CLI and NETCONF-based tasks.

- Cisco DNA Center Automation: Provides built-in automation capabilities for Cisco networks, including zero-touch provisioning and policy-based management.

- Juniper Automation: Junos Space Automation provides tools for automating complex network tasks in Juniper environments.

- Vendor SDN Orchestrators: Ribbon Muse, Cisco MDSO, Ciena MCP/Blue Planet, or the equivalent platform from any optical/networking vendor.

Example:

Using Ansible to automate the configuration of multiple DWDM transponders across different vendors by leveraging OpenConfig YANG models and NETCONF protocols ensures consistent and error-free deployments.
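
The "consistent and error-free" property comes largely from idempotence: the tool compares the desired state with what each device already has and pushes only the difference, so repeated runs change nothing. The sketch below illustrates that idea in plain Python. It is not Ansible; the setting names and devices are hypothetical, and `push` stands in for whatever transport (for example a NETCONF client) actually delivers the change.

```python
def plan_changes(desired, running):
    """Return only the settings that must change to reach the desired state."""
    return {key: value for key, value in desired.items() if running.get(key) != value}


def apply_to_fleet(desired, fleet, push):
    """Push the minimal change set to every device; return the devices changed."""
    changed = []
    for name, running in fleet.items():
        changes = plan_changes(desired, running)
        if changes:
            push(name, changes)      # supplied by the caller
            running.update(changes)  # track the new running state
            changed.append(name)
    return changed


# Hypothetical desired state and current device settings.
desired = {"tx_power_dbm": 0.0, "fec": "enabled"}
fleet = {
    "xpdr-a": {"tx_power_dbm": 0.0, "fec": "disabled"},
    "xpdr-b": {"tx_power_dbm": 0.0, "fec": "enabled"},
}
pushed = []
record = lambda name, changes: pushed.append((name, changes))
first_run = apply_to_fleet(desired, fleet, record)
second_run = apply_to_fleet(desired, fleet, record)
print(first_run, second_run)
```

Only the device that drifted receives a change on the first run, and the second run is a no-op.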

7. Best Practices for Network Management

Implementing effective network management requires adherence to best practices that ensure the network operates smoothly, efficiently, and securely.

7.1. Standardize Management Protocols

- Use Unified Protocols: Standardize on protocols like NETCONF, RESTCONF, and OpenConfig for configuration and management to ensure interoperability across multi-vendor environments.

- Adopt Secure Protocols: Always use secure transport protocols (SSH, TLS) to protect management communications.

7.2. Implement Centralized Management Systems

- Centralized Control: Use centralized NMS platforms to manage and monitor all network elements from a single interface.

- Data Aggregation: Aggregate logs and telemetry data in centralized repositories for comprehensive analysis and reporting.

7.3. Automate Routine Tasks

- Configuration Automation: Automate device configurations using scripts or automation tools to ensure consistency and reduce manual errors.

- Automated Monitoring and Alerts: Set up automated monitoring and alerting systems to detect and respond to network issues in real-time.

7.4. Maintain Accurate Documentation

- Configuration Records: Keep detailed records of all device configurations and changes for troubleshooting and auditing purposes.

- Network Diagrams: Maintain up-to-date network topology diagrams to visualize device relationships and connectivity.

7.5. Regularly Update and Patch Devices

- Firmware Updates: Regularly update device firmware to patch vulnerabilities and improve performance.

- Configuration Backups: Schedule regular backups of device configurations to ensure quick recovery in case of failures.

7.6. Implement Role-Based Access Control (RBAC)

- Access Management: Define roles and permissions to restrict access to network management systems based on job responsibilities.

- Audit Trails: Maintain logs of all management actions for security auditing and compliance.
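
A minimal sketch of how roles, permissions, and an audit trail fit together is shown below. The role names, actions, and users are assumptions for illustration; a production system would back this with a directory service and tamper-resistant log storage.

```python
from datetime import datetime, timezone

# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "operator": {"read", "acknowledge_alarm"},
    "admin": {"read", "acknowledge_alarm", "configure"},
}

audit_log = []


def authorize(user, role, action):
    """Check the action against the role and record the decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed


print(authorize("alice", "operator", "configure"))
print(authorize("bob", "admin", "configure"))
```

Denied attempts are logged as well as successful ones, since both matter for security auditing.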

7.7. Leverage Advanced Analytics and Machine Learning

- Predictive Maintenance: Use analytics to predict and prevent network failures before they occur.

- Anomaly Detection: Implement machine learning algorithms to detect unusual patterns and potential security threats.
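
As a simple statistical stand-in for the machine learning mentioned above, the sketch below flags readings that sit more than three standard deviations from a baseline of known-good samples. The optical power values are invented, and the threshold of 3.0 is a common rule of thumb rather than a recommendation from the source.

```python
from statistics import mean, stdev


def find_anomalies(baseline, new_samples, threshold=3.0):
    """Return new samples whose z-score against the baseline exceeds the threshold."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return []  # a flat baseline gives no scale to compare against
    return [x for x in new_samples if abs(x - mu) / sigma > threshold]


# Hypothetical receive-power history (dBm) and three fresh readings.
baseline = [-10.1, -10.0, -9.9, -10.2, -10.0, -9.8, -10.1, -10.0]
anomalies = find_anomalies(baseline, [-10.05, -9.95, -11.2])
print(anomalies)
```

The baseline is kept separate from the samples under test so that a single outlier cannot inflate the standard deviation and hide itself.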

8. Case Studies and Examples

8.1. Small Network Example (10 NEs)

Scenario: A small office network with 5 routers, 3 switches, and 2 optical transponders.

Solution: Use PRTG Network Monitor to monitor device statuses via SNMP and receive alerts through Syslog.

Steps:

- Set Up PRTG: Install PRTG on a central server.

- Configure Devices: Enable SNMP and Syslog on all network devices.

- Add Devices to PRTG: Use SNMP credentials to add routers, switches, and optical transponders to PRTG.

- Create Alerts: Configure alerting thresholds for critical metrics like interface status and optical power levels.

- Monitor Dashboard: Use PRTG’s dashboard to visualize network health and receive real-time notifications of issues.

Outcome: The small network gains visibility into device performance and receives timely alerts for any disruptions, ensuring minimal downtime.

8.2. Optical Network Example

Scenario: A regional optical network with 100 optical transponders and multiple DWDM systems.

Solution: Implement OpenNMS with gNMI support for real-time telemetry and NETCONF for device configuration.

Steps:

- Deploy OpenNMS: Set up OpenNMS as the centralized network management platform.

- Enable gNMI and NETCONF: Configure all optical transponders to support gNMI and NETCONF protocols.

- Integrate OpenConfig Models: Use OpenConfig YANG models to standardize configurations across different vendors’ optical devices.

- Set Up Telemetry Streams: Configure gNMI subscriptions to stream real-time data on optical power levels and channel performance.

- Automate Configurations: Use OpenNMS’s automation capabilities to deploy and manage configurations across the optical network.

Outcome: The optical network benefits from real-time monitoring, automated configuration management, and standardized management practices, enhancing performance and reliability.

8.3. Enterprise Network Example

Scenario: A large enterprise with 10,000 network devices, including routers, switches, optical transponders, and data center equipment.

Solution: Utilize Cisco DNA Center integrated with Splunk for comprehensive management and analytics.

Steps:

- Deploy Cisco DNA Center: Set up Cisco DNA Center to manage all Cisco network devices.

- Integrate Non-Cisco Devices: Use OpenNMS to manage non-Cisco devices via NETCONF and gNMI.

- Set Up Splunk: Configure Splunk to aggregate Syslog messages and telemetry data from all network devices.

- Automate Configuration Deployments: Use DNA Center’s automation features to deploy configurations and updates across thousands of devices.

- Implement Advanced Analytics: Use Splunk’s analytics capabilities to monitor network performance, detect anomalies, and generate actionable insights.

Outcome: The enterprise network achieves high levels of automation, real-time monitoring, and comprehensive analytics, ensuring optimal performance and quick resolution of issues.

9. Summary

Network management is the cornerstone of reliable and high-performing communication networks, particularly in the realm of optical networks where precision and scalability are paramount. As networks continue to expand in size and complexity, the integration of advanced management protocols and automation tools becomes increasingly critical. By understanding and leveraging the appropriate network management protocols (SNMP, NETCONF, RESTCONF, gNMI, TL1, CLI, OpenConfig, and Syslog), network administrators can ensure efficient operation, rapid issue resolution, and seamless scalability.

Embracing automation and standardization through tools like Ansible, Terraform, and modern network management systems (NMS) enables organizations to manage large-scale networks with minimal manual intervention, enhancing both efficiency and reliability. Additionally, adopting best practices, such as centralized management, standardized protocols, and advanced analytics, ensures that network infrastructures can meet the demands of the digital age, providing robust, secure, and high-performance connectivity.

Reference

- https://en.wikipedia.org/wiki/Software-defined_networking

- https://www.itu.int/rec/T-REC-M.3400-200002-I/en

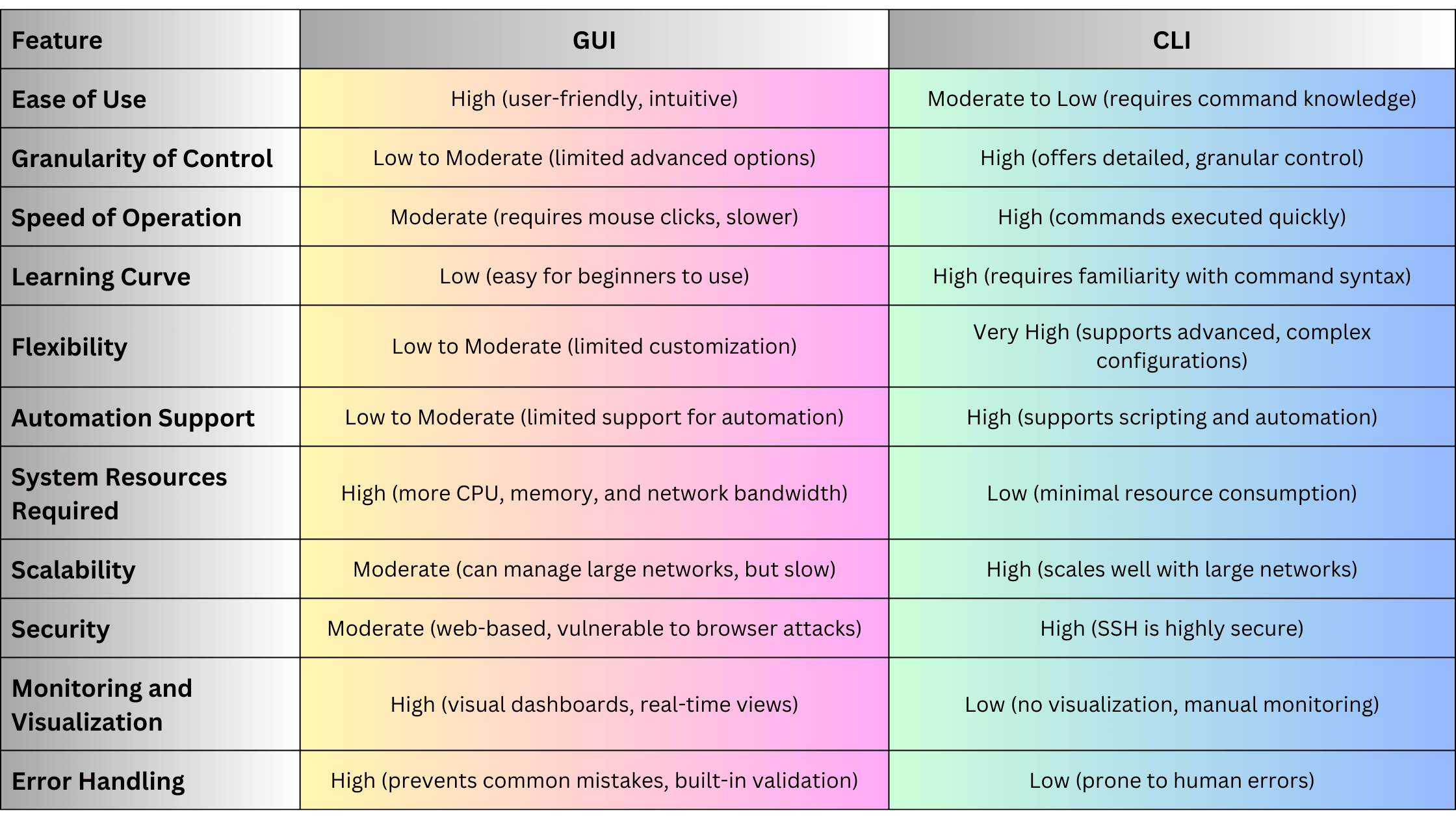

Graphical user interfaces (GUIs) have become a crucial part of network management systems, giving users an intuitive way to manage, monitor, and configure network devices. Many networking vendors offer GUI-based management platforms, often referred to as Network Management Systems (NMS) or Element Management Systems (EMS), to simplify and streamline network operations, especially for less technical users or environments where ease of use is a priority.

Let's explore the advantages and disadvantages of using GUIs in network operations, configuration, deployment, and monitoring, with a focus on their role in managing networking devices such as routers, switches, and optical devices like DWDM and OTN systems.

Overview of GUI Interfaces in Networking

A GUI interface for network management typically provides users with a visual dashboard where they can manage network elements (NEs) through buttons, menus, and graphical representations of network topologies. Common tasks such as configuring interfaces, monitoring traffic, and deploying updates are presented in a structured, accessible way that minimizes the need for deep command-line knowledge.

Examples of GUI-based platforms include:

- Ribbon Muse and LightSoft.

- Ciena OneControl.

- Cisco DNA Center for Cisco devices.

- Juniper’s Junos Space.

- Huawei iManager U2000 for optical and IP devices.

- Nokia Network Services Platform (NSP).

- SolarWinds Network Performance Monitor (NPM).

Advantages of GUI Interfaces

Ease of Use

The most significant advantage of GUI interfaces is their ease of use. GUIs provide a user-friendly and intuitive interface that simplifies complex network management tasks. With features such as drag-and-drop configurations, drop-down menus, and tooltips, GUIs make it easier for users to manage the network without needing in-depth knowledge of CLI commands.

- Simplified Configuration: GUI interfaces guide users through network configuration with visual prompts and wizards, reducing the chance of misconfigurations and errors.

- Point-and-Click Operations: Instead of remembering and typing detailed commands, users can perform most tasks using simple mouse clicks and menu selections.

This makes GUI-based management systems especially valuable for:

- Less experienced administrators who may not be familiar with CLI syntax.

- Small businesses or environments where IT resources are limited, and administrators need an easy way to manage devices without deep technical expertise.

Visualization of Network Topology

GUI interfaces often include network topology maps that provide a visual representation of the network. This feature helps administrators understand how devices are connected, monitor the health of the network, and troubleshoot issues quickly.

- Real-Time Monitoring: Many GUI systems allow real-time tracking of network status. Colors or symbols (e.g., green for healthy, red for failure) indicate the status of devices and links.

- Interactive Dashboards: Users can click on devices within the topology map to retrieve detailed statistics or configure those devices, simplifying network monitoring and management.

For optical networks, this visualization can be especially useful for managing complex DWDM or OTN systems where channels, wavelengths, and nodes can be hard to track through CLI.

Reduced Learning Curve

For network administrators who are new to networking or have limited exposure to CLI, a GUI interface reduces the learning curve. Instead of memorizing command syntax, users interact with a more intuitive interface that walks them through network operations step-by-step.

- Guided Workflows: GUI interfaces often provide wizards or guided workflows that simplify complex processes like device onboarding, VLAN configuration, or traffic shaping.

This can also speed up training for new IT staff, making it easier for them to get productive faster.

Error Reduction

In a GUI, configurations are typically validated on the fly, reducing the risk of syntax errors or misconfigurations that are common in a CLI environment. Many GUIs incorporate error-checking mechanisms, preventing users from making incorrect configurations by providing immediate feedback if a configuration is invalid.

- Validation Alerts: If a configuration is incorrect or incomplete, the GUI can generate alerts, prompting the user to fix the error before applying changes.

This feature is particularly useful when managing optical networks where incorrect channel configurations or power levels can cause serious issues like signal degradation or link failure.
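
The kind of on-the-fly validation a GUI might run before applying an optical channel can be sketched as follows. The check assumes the fixed DWDM grid anchored at 193.1 THz (as in ITU-T G.694.1) with 50 GHz spacing; the allowed power range of -5 to +3 dBm is an illustrative assumption, and band-edge limits are not checked.

```python
def validate_channel(freq_thz, power_dbm, spacing_thz=0.05, power_range=(-5.0, 3.0)):
    """Return a list of readable errors; an empty list means the settings pass."""
    errors = []
    # Number of grid steps away from the 193.1 THz anchor frequency.
    steps = (freq_thz - 193.1) / spacing_thz
    if abs(steps - round(steps)) > 1e-6:
        errors.append(f"{freq_thz} THz is not on the {spacing_thz * 1000:.0f} GHz grid")
    low, high = power_range
    if not low <= power_dbm <= high:
        errors.append(f"{power_dbm} dBm is outside {low}..{high} dBm")
    return errors


print(validate_channel(193.15, 0.0))   # on-grid, power in range
for problem in validate_channel(193.17, 5.0):
    print("REJECTED:", problem)
```

Returning every problem at once, rather than stopping at the first, mirrors how a form highlights all invalid fields before the user can apply the change.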

Faster Deployment for Routine Tasks

For routine network operations such as firmware upgrades, device reboots, or creating backups, a GUI simplifies and speeds up the process. Many network management GUIs include batch processing capabilities, allowing users to:

- Upgrade the firmware on multiple devices simultaneously.

- Schedule backups of device configurations.

- Automate routine maintenance tasks with a few clicks.

For network administrators managing large deployments, this batch processing reduces the time and effort required to keep the network updated and functioning optimally.

Integrated Monitoring and Alerting

GUI-based network management platforms often come with built-in monitoring and alerting systems. Administrators can receive real-time notifications about network status, alarms, bandwidth usage, and device performance, all from a centralized dashboard. Some GUIs also integrate logging systems to help with diagnostics.

- Threshold-Based Alerts: GUI systems allow users to set thresholds (e.g., CPU utilization, link capacity) that, when exceeded, trigger alerts via email, SMS, or in-dashboard notifications.

- Pre-Integrated Monitoring Tools: Many GUI systems come with built-in monitoring capabilities, such as NetFlow analysis, allowing users to track traffic patterns and troubleshoot bandwidth issues.

Disadvantages of GUI Interfaces

Limited Flexibility and Granularity

While GUIs are great for simplifying network management, they often lack the flexibility and granularity of CLI. GUI interfaces tend to offer a subset of the full configuration options available through CLI. Advanced configurations or fine-tuning specific parameters may not be possible through the GUI, forcing administrators to revert to the CLI for complex tasks.

- Limited Features: Some advanced network features or vendor-specific configurations are not exposed in the GUI, requiring manual CLI intervention.

- Simplification Leads to Less Control: In highly complex network environments, some administrators may find that the simplification of GUIs limits their ability to make precise adjustments.

For example, in an optical network, fine-tuning wavelength allocation or optical channel power levels may be better handled through CLI or other specialized interfaces, rather than through a GUI, which may not support detailed settings.

Slower Operations for Power Users

Experienced network engineers often find GUIs slower to operate than CLI when managing large networks. CLI commands can be scripted or entered quickly in rapid succession, whereas GUI interfaces require more time-consuming interactions (clicking, navigating menus, waiting for page loads, etc.).

- Lag and Delays: GUI systems can experience latency, especially when managing a large number of devices, whereas CLI operations typically run with minimal lag.

- Reduced Efficiency for Experts: For network administrators comfortable with CLI, GUIs may slow down their workflow. Tasks that take a few seconds in CLI can take longer due to the extra navigation required in GUIs.

Resource Intensive

GUI interfaces are typically more resource-intensive than CLI. They require more computing power, memory, and network bandwidth to function effectively. This can be problematic in large-scale networks or when managing devices over low-bandwidth connections.

- System Requirements: GUIs often require more robust management servers to handle the graphical load and data processing, which increases the operational cost.

- Higher Bandwidth Use: Some GUI management systems generate more network traffic due to the frequent updates required to refresh the graphical display.

Dependence on External Management Platforms

GUI systems often require an external management platform (such as Cisco’s DNA Center or Juniper’s Junos Space), meaning they can’t be used directly on the devices themselves. This adds a layer of complexity and dependency, as the management platform must be properly configured and maintained.

- Single Point of Failure: If the management platform goes down, the GUI may become unavailable, forcing administrators to revert to CLI or other tools for device management.

- Compatibility Issues: Not all network devices, especially older legacy systems, are compatible with GUI-based management platforms, making it difficult to manage mixed-vendor or mixed-generation environments.

Security Vulnerabilities

GUI systems often come with more potential security risks compared to CLI. GUIs may expose more services (e.g., web servers, APIs) that could be exploited if not properly secured.

- Browser Vulnerabilities: Since many GUI systems are web-based, they can be susceptible to web-based attacks such as cross-site scripting (XSS) or, where TLS is missing or misconfigured, man-in-the-middle (MITM) attacks.

- Authentication Risks: Improperly configured access controls on GUI platforms can expose network management to unauthorized users. Web-based GUIs are usually reached over HTTPS and rely on web sessions and application-level logins, which tend to present a broader attack surface than SSH-based CLI access.

Comparison of GUI vs. CLI for Network Operations

When to Use GUI Interfaces

GUI interfaces are ideal in the following scenarios:

- Small to Medium-Sized Networks: Where ease of use and simplicity are more important than advanced configuration capabilities.

- Less Technical Environments: Where network administrators may not have deep knowledge of CLI and need a simple, visual way to manage devices.

- Monitoring and Visualization: For environments where real-time network status and visual topology maps are needed for decision-making.

- Routine Maintenance and Monitoring: GUIs are ideal for routine tasks such as firmware upgrades, device status checks, or performance monitoring without requiring CLI expertise.

When Not to Use GUI Interfaces

GUI interfaces may not be the best choice in the following situations:

- Large-Scale or Complex Networks: Where scalability, automation, and fine-grained control are critical, CLI or programmable interfaces like NETCONF and gNMI are better suited.

- Time-Sensitive Operations: For power users who need to configure or troubleshoot devices quickly, CLI provides faster, more direct access.

- Advanced Configuration: For advanced configurations or environments where vendor-specific commands are required, CLI offers greater flexibility and access to all features of the device.

Summary

GUI interfaces are a valuable tool in network management, especially for less-experienced users or environments where ease of use, visualization, and real-time monitoring are priorities. They simplify network management tasks by offering an intuitive, graphical approach, reducing human errors, and providing real-time feedback. However, GUI interfaces come with limitations, such as reduced flexibility, slower operation, and higher resource requirements. As networks grow in complexity and scale, administrators may need to rely more on CLI, NETCONF, or gNMI for advanced configurations, scalability, and automation.

CLI (Command Line Interface) remains one of the most widely used methods for managing and configuring network and optical devices. Network engineers and administrators often rely on CLI to interact directly with devices such as routers, switches, DWDM systems, and optical transponders. Despite the rise of modern programmable interfaces like NETCONF, gNMI, and RESTCONF, CLI continues to be the go-to method for many due to its simplicity, direct access, and universal availability across a wide variety of network hardware.

Let's explore the fundamentals of CLI, its role in managing networking and optical devices, its advantages and disadvantages, and how it compares to other protocols like TL1, NETCONF, and gNMI. We will also provide practical examples of how CLI can be used to manage optical networks and traditional network devices.

What Is CLI?

CLI (Command Line Interface) is a text-based interface used to interact with network devices. It allows administrators to send commands directly to network devices, view status information, modify configurations, and troubleshoot issues. CLI is widely used in networking devices like routers and switches, as well as optical devices such as DWDM systems and Optical Transport Network (OTN) equipment.

Key Features:

- Text-Based Interface: CLI provides a human-readable way to manage devices by typing commands.

- Direct Access: Users connect to network devices through terminal applications like PuTTY or SSH clients and enter commands directly.

- Wide Support: Almost every networking and optical device from vendors like Ribbon, Ciena, Cisco, Juniper, Nokia, and others has a CLI.

- Manual or Scripted Interaction: CLI can be used both for manual configurations and scripted automation using tools like Python or Expect.

CLI is often the primary interface available for:

- Initial device configuration.

- Network troubleshooting.

- Monitoring device health and performance.

- Modifying network topologies.

CLI Command Structure

CLI commands vary between vendors but follow a general structure where a command invokes a specific action, and parameters or arguments are passed to refine the action. CLI commands can range from basic tasks, like viewing the status of an interface, to complex configurations of optical channels or advanced routing features.

Example of a Basic CLI Command (Cisco):

show ip interface brief

This command displays a summary of the status of all interfaces on a Cisco device.

Example of a CLI Command for Optical Devices:

show interfaces optical-1/1/1 transceiver

This command retrieves detailed information about the optical transceiver installed on interface optical-1/1/1, including power levels, wavelength, and temperature.

CLI Commands for Network and Optical Devices

Basic Network Device Commands

Show Commands

These commands provide information about the current state of the device. For example:

- show running-config: Displays the current configuration of the device.

- show ip route: Shows the routing table, which defines how packets are routed.

- show interfaces: Displays information about each network interface, including IP address, status (up/down), and traffic statistics.

Configuration Commands

Configuration mode commands allow you to make changes to the device’s settings.

- interface GigabitEthernet 0/1: Enter the configuration mode for a specific interface.

- ip address 192.168.1.1 255.255.255.0: Assign an IP address to an interface.

- no shutdown: Bring an interface up (enable it).

Optical Device Commands

Optical devices, such as DWDM systems and OTNs, often use CLI to monitor and manage optical parameters, channels, and alarms.

Show Optical Transceiver Status

Retrieves detailed information about an optical transceiver, including power levels and signal health.

show interfaces optical-1/1/1 transceiver

Set Optical Power Levels

Configures the power output of an optical port to ensure the signal is within the required limits for transmission.

interface optical-1/1/1 transceiver power 0.0

Monitor DWDM Channels

Shows the status and health of DWDM channels.

show dwdm channel-status

Monitor Alarms

Displays alarms related to optical devices, which can help identify issues such as low signal levels or hardware failures.

show alarms

CLI in Optical Networks

CLI plays a crucial role in optical network management, especially in legacy systems where modern APIs like NETCONF or gNMI may not be available. CLI is still widely used on DWDM systems, SONET/SDH devices, and OTN equipment for tasks such as:

Provisioning Optical Channels

Provisioning optical channels on a DWDM system requires configuring the channel wavelength (or frequency), power levels, and other key parameters using CLI commands. For example:

configure terminal

interface optical-1/1/1

wavelength 1550.12

transceiver power -3.5

no shutdown

This command sequence configures optical interface 1/1/1 with a wavelength of 1550.12 nm and a power output of -3.5 dBm, then brings the interface online.
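
When the same sequence has to be applied to many ports, it can be generated rather than typed. The Python sketch below renders the command sequence shown above from three parameters and also converts the wavelength to the equivalent optical frequency. The function names are assumptions made for this example, and the command syntax simply mirrors the generic example in the text rather than any particular vendor's CLI.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def wavelength_nm_to_thz(wavelength_nm: float) -> float:
    """Convert a vacuum wavelength in nm to an optical frequency in THz."""
    return SPEED_OF_LIGHT_M_PER_S / (wavelength_nm * 1e-9) / 1e12


def build_provisioning_commands(interface: str, wavelength_nm: float, power_dbm: float) -> list:
    """Render the generic provisioning sequence for the given parameters."""
    return [
        "configure terminal",
        f"interface {interface}",
        f"wavelength {wavelength_nm:.2f}",
        f"transceiver power {power_dbm:.1f}",
        "no shutdown",
    ]


commands = build_provisioning_commands("optical-1/1/1", 1550.12, -3.5)
frequency_thz = wavelength_nm_to_thz(1550.12)  # close to 193.4 THz
```

The conversion is useful because some systems identify a channel by frequency rather than wavelength; 1550.12 nm corresponds to roughly 193.4 THz.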

Monitoring Optical Performance

Using CLI, network administrators can retrieve performance data for optical channels and transceivers, including signal levels, bit error rates (BER), and latency.

show interfaces optical-1/1/1 transceiver

This retrieves key metrics for the specified optical interface, such as receive and transmit power levels, SNR (Signal-to-Noise Ratio), and wavelength.
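
Because CLI output is plain text, a script has to parse it before the metrics can be compared or stored. The Python sketch below extracts "Name: value unit" pairs from a block of text. The sample output format is invented for illustration; actual output differs from vendor to vendor, so the pattern would need adjusting for a real device.

```python
import re

# Hypothetical sample output; real devices format this differently per vendor.
SAMPLE_OUTPUT = """\
Tx Power: -3.50 dBm
Rx Power: -7.20 dBm
Wavelength: 1550.12 nm
"""

_LINE = re.compile(
    r"^\s*(?P<name>[A-Za-z ]+?)\s*:\s*(?P<value>-?\d+(?:\.\d+)?)\s*(?P<unit>\S+)?\s*$"
)


def parse_transceiver_output(text: str) -> dict:
    """Map each 'Name: value unit' line to {name: (value, unit)}; other lines are skipped."""
    metrics = {}
    for line in text.splitlines():
        match = _LINE.match(line)
        if match:
            metrics[match["name"]] = (float(match["value"]), match["unit"])
    return metrics


metrics = parse_transceiver_output(SAMPLE_OUTPUT)
```

The fragility of this kind of screen scraping is one reason structured interfaces such as NETCONF and gNMI are attractive for monitoring.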

Troubleshooting Optical Alarms

Optical networks generate alarms when there are issues such as power degradation, link failures, or hardware malfunctions. CLI allows operators to view and clear alarms:

show alarms

clear alarms

CLI Advantages

Simplicity and Familiarity

CLI has been around for decades and is deeply ingrained in the daily workflow of network engineers. Its commands are human-readable and simple to learn, making it a widely adopted interface for managing devices.

Direct Device Access

CLI provides direct access to network and optical devices, allowing engineers to issue commands in real-time without the need for additional layers of abstraction.

Universally Supported

CLI is supported across almost all networking devices, from routers and switches to DWDM systems and optical transponders. Vendors like Cisco, Juniper, Ciena, Ribbon, and Nokia all provide CLI access, making it a universal tool for network and optical management.

Flexibility

CLI can be used interactively or scripted using automation tools like Python, Ansible, or Expect. This makes it suitable for both manual troubleshooting and basic automation tasks.
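
A minimal sketch of scripted CLI access in Python is shown below. It assumes the device accepts a single command passed to the OpenSSH client and that key-based authentication is already set up; many devices work this way, but some only offer an interactive shell. The host and user names in the usage note are placeholders.

```python
import subprocess


def build_ssh_argv(host: str, user: str, command: str) -> list:
    """Build an argument list for the OpenSSH client that runs one remote command."""
    # BatchMode=yes makes ssh fail instead of prompting, which suits unattended scripts.
    return ["ssh", "-o", "BatchMode=yes", f"{user}@{host}", command]


def run_cli(host: str, user: str, command: str, runner=subprocess.run) -> str:
    """Run one CLI command over SSH and return its output as text.

    `runner` defaults to subprocess.run and can be replaced in tests.
    """
    result = runner(
        build_ssh_argv(host, user, command),
        capture_output=True,
        text=True,
        timeout=30,
        check=True,  # raise CalledProcessError on a non-zero exit status
    )
    return result.stdout
```

A call such as `run_cli("192.0.2.10", "admin", "show alarms")` would return the command output as a string, which a script can then log or parse.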

Granular Control

CLI allows for highly granular control over network devices. Operators can configure specific parameters down to the port or channel level, monitor detailed statistics, and fine-tune settings.

CLI Disadvantages

Lack of Automation and Scalability

While CLI can be scripted for automation, it lacks the inherent scalability and automation features provided by modern protocols like NETCONF and gNMI. Many CLIs lack transactional operations (applying a set of changes atomically, with rollback on failure), which makes large-scale configuration changes harder to carry out safely.
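
One way a script can approximate transactional behavior over a CLI is to pair each command with an undo command and reverse the applied steps when a later one fails. The Python sketch below illustrates the idea. The `send` callable and the undo commands in the usage note are assumptions for this example, and the approach is weaker than a true commit model because the undo commands can themselves fail.

```python
def apply_with_rollback(send, steps):
    """Apply (command, undo_command) pairs in order; on failure, undo in reverse.

    `send` is a caller-supplied function that sends one command and raises on error.
    Returns True if every step was applied, False if a rollback was performed.
    An exception raised while undoing propagates to the caller.
    """
    applied_undos = []
    for command, undo in steps:
        try:
            send(command)
        except Exception:
            # Undo only the steps that succeeded, most recent first.
            for undo_command in reversed(applied_undos):
                send(undo_command)
            return False
        applied_undos.append(undo)
    return True
```

For instance, with steps such as `("wavelength 1550.12", "no wavelength")` followed by a power command that the device rejects, the function sends the wavelength command, attempts the power command, then sends `no wavelength` and returns `False`.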

Error-Prone

Because CLI is manually driven, there is a higher likelihood of human error when issuing commands. A misconfigured parameter or incorrect command can lead to service disruptions or device failures.

Vendor-Specific Commands

Each vendor often has its own set of CLI commands, which means that operators working with multiple vendors must learn and manage different command structures. For example, Cisco CLI differs from Juniper or Huawei CLI.
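
Automation scripts commonly hide these differences behind a lookup keyed by platform. The Python sketch below maps one intent, listing interfaces with their addresses and status, to commonly documented commands on three platforms. The platform keys are names chosen for this example, and the commands should be verified against the software release in use.

```python
# Commonly documented equivalents for "list interfaces with their IP addresses".
# Verify against the software release in use before relying on them.
INTERFACE_SUMMARY = {
    "cisco_ios": "show ip interface brief",
    "juniper_junos": "show interfaces terse",
    "huawei_vrp": "display ip interface brief",
}


def interface_summary_command(platform: str) -> str:
    """Return the interface-summary command for a platform key, or raise for unknown ones."""
    try:
        return INTERFACE_SUMMARY[platform]
    except KeyError:
        raise ValueError(f"no command mapping for platform {platform!r}") from None
```

Every additional intent and platform needs its own entry, which illustrates why multi-vendor CLI automation becomes costly to maintain.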

Limited Real-Time Data