Data transmission

The world of optical communication is undergoing a transformation with the introduction of Hollow Core Fiber (HCF) technology. This revolutionary...

Free

March 26, 2025

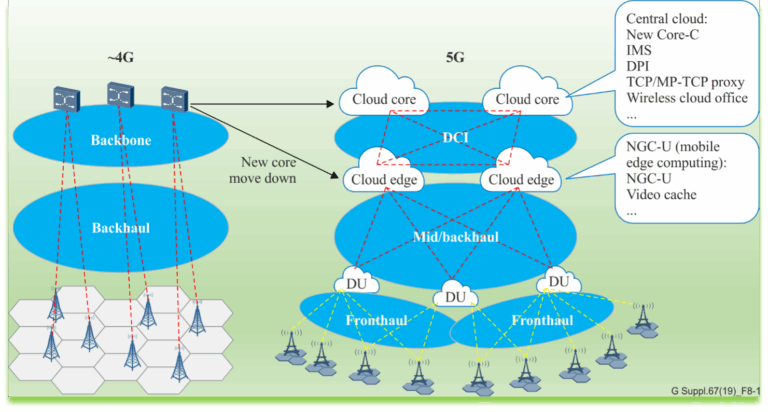

The advent of 5G technology is set to revolutionise the way we connect, and at its core lies a sophisticated...

Free

March 26, 2025

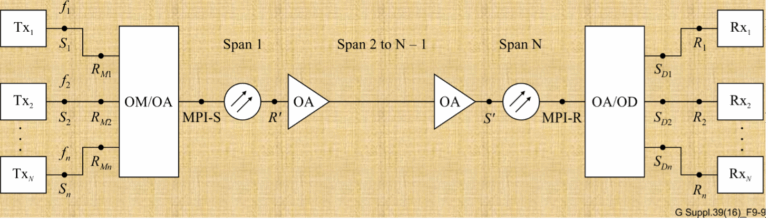

Optical networks are the backbone of the internet, carrying vast amounts of data over great distances at the speed of...

Free

March 26, 2025

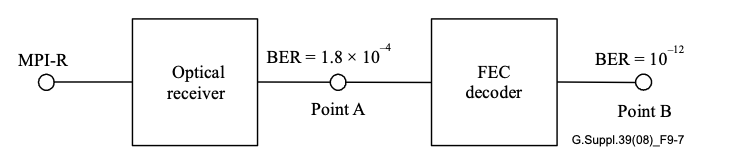

Forward Error Correction (FEC) has become an indispensable tool in modern optical communication, enhancing signal integrity and extending transmission distances....

Free

March 26, 2025

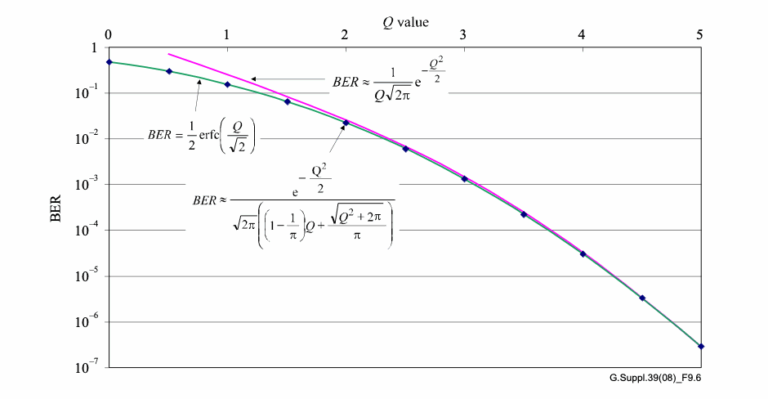

Signal integrity is the cornerstone of effective fiber optic communication. In this sphere, two metrics stand paramount: Bit Error Ratio...

Free

March 26, 2025

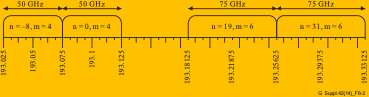

The telecommunications industry constantly strives to maximize the use of fiber optic capacity. Despite the broad spectral width of...

Free

March 26, 2025

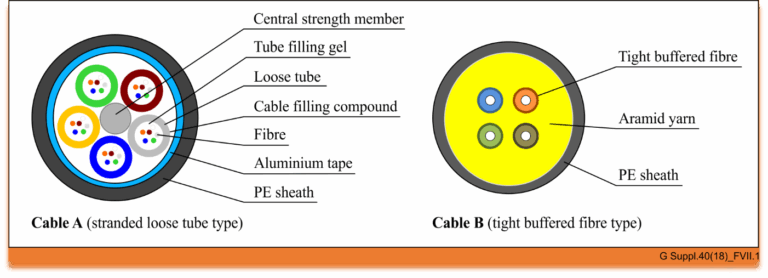

The world of optical communication is intricate, with different cable types designed for specific environments and applications. Today, we’re diving...

Free

March 26, 2025

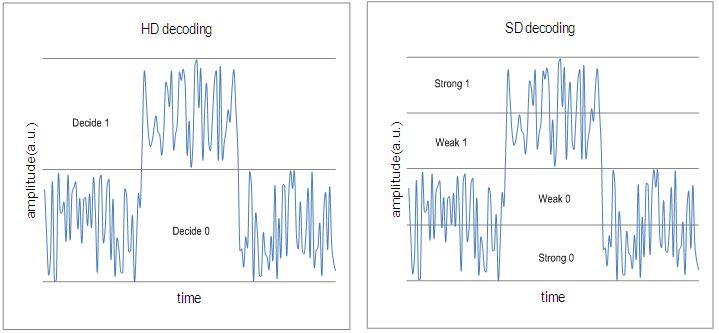

HD-FEC vs. SD-FEC. Definition: decoding based on hard bits (the output is quantized to only two levels) is called “HD (hard-decision) decoding”,...

Free

March 26, 2025
